Init

BIN 03-UnrealEngine/Rendering/Shader/Effect/Ocean/水下逻辑判断.jpg (normal file, binary file not shown, size: 56 KiB)

BIN 03-UnrealEngine/Rendering/Shader/Effect/Ocean/水下逻辑判断2.jpg (normal file, binary file not shown, size: 2.6 KiB)

03-UnrealEngine/Rendering/Shader/Effect/Ocean/海洋SSS效果论文笔记.md (normal file, 76 lines)

@@ -0,0 +1,76 @@

## Papers and related material

### Frostbite's fast SSS

Search GDC Vault for the corresponding talk and it can then be downloaded. Some of the slide decks are large; in that case the download address can be looked up directly in the page's embedded control.

https://www.slideshare.net/colinbb/colin-barrebrisebois-gdc-2011-approximating-translucency-for-a-fast-cheap-and-convincing-subsurfacescattering-look-7170855

#### Video

https://www.gdcvault.com/play/1014536/Approximating-Translucency-for-a-Fast

The video is served as a blob stream, so it can be downloaded through the site below:

http://downloadblob.com/

#### Slides (PPT)

https://twvideo01.ubm-us.net/o1/vault/gdc2011/slides/Colin_BarreBrisebois_Programming_ApproximatingTranslucency.pptx

### SIGGRAPH 2019 slides

http://advances.realtimerendering.com/s2019/index.htm

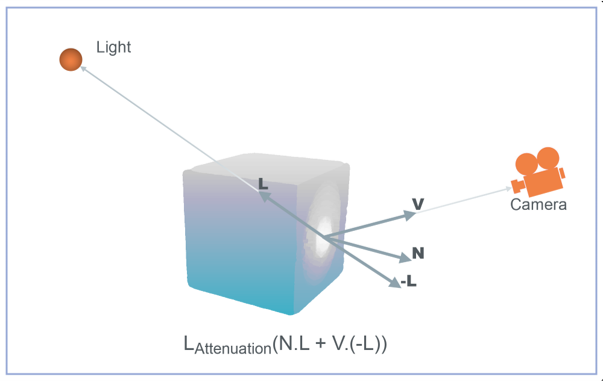

## Approximating Translucency for a Fast, Cheap and Convincing Subsurface Scattering Look

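### Data management

The data splits into per-material parameters and per-light-type parameters. In Frostbite the per-material data is passed through the GBuffer (in UE4, CustomData could probably be used for this), while the per-light-type data is passed in the light pass.

### Computing the thickness value

Thickness is computed in an AO-like way:

- Invert the surface normals
- Render AO
- Invert the colors and render the result into a texture

### Technical details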

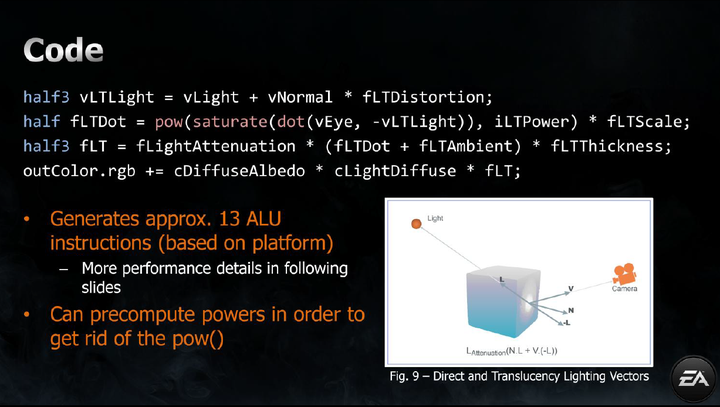

LT presumably stands for Light Translucency. The prefix v denotes a vector, f a float, and i an int.

```
// Distort the light vector by the surface normal.
half3 vLTLight = vLight + vNormal * fLTDistortion;
// View-dependent direct translucency.
half fLTDot = pow(saturate(dot(vEye, -vLTLight)), iLTPower) * fLTScale;
// Add the ambient term, then scale by light attenuation and local thickness.
half3 fLT = fLightAttenuation * (fLTDot + fLTAmbient) * fLTThickness;
outColor.rgb += cDiffuseAlbedo * cLightDiffuse * fLT;
```

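#### fLTAmbient

Ambient term: the translucency that is always present, from every direction. Per-material variable.

#### iLTPower

Power (falloff) term applied to the direct translucency. View-dependent. Can be optimized through precomputation. Per-material variable.

#### fLTDistortion

Distortion term for the translucency direction. It simulates irregular light transport, similar to frosted glass. Its main job is to control how strongly the normal influences the direction of the transmitted light. View-dependent. Per-material variable.

#### fLTThickness

Thickness term: the precomputed surface thickness in local space. Per-material variable.

#### fLTScale

Scale term that scales the direct translucency. View-dependent. Per-light variable.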
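To make the role of each parameter concrete, here is a minimal CPU-side sketch of the same four-line formula in Python. It is only an illustration: the default parameter values and the test vectors are assumptions chosen for the example, not values from the talk, and a scalar thickness is used instead of a color.

```python
def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def translucency(v_light, v_normal, v_eye, thickness,
                 distortion=0.2, power=4.0, scale=1.0,
                 ambient=0.1, attenuation=1.0):
    """Scalar version of the fLT term; all defaults are illustrative assumptions."""
    # vLTLight = vLight + vNormal * fLTDistortion
    lt_light = tuple(l + n * distortion for l, n in zip(v_light, v_normal))
    # fLTDot = pow(saturate(dot(vEye, -vLTLight)), iLTPower) * fLTScale
    facing = _dot(v_eye, tuple(-c for c in lt_light))
    lt_dot = min(max(facing, 0.0), 1.0) ** power * scale
    # fLT = fLightAttenuation * (fLTDot + fLTAmbient) * fLTThickness
    return attenuation * (lt_dot + ambient) * thickness


# Surface facing the viewer; vectors point from the surface to the light / eye.
normal = (0.0, 0.0, -1.0)
eye = (0.0, 0.0, -1.0)
back_lit = translucency((0.0, 0.0, 1.0), normal, eye, thickness=1.0)
front_lit = translucency((0.0, 0.0, -1.0), normal, eye, thickness=1.0)
```

With the light behind the surface the view-dependent term dominates, while with the light on the viewer's side only the ambient term remains, which matches the descriptions of `fLTAmbient` and `fLTScale` above.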

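### Final result

### GBuffer design

The minimum requirement is to store translucency in the GBuffer as an 8-bit grayscale value. Storing it as a 24-bit color instead lets the material scatter different parts of the light spectrum differently.

### Drawbacks of the technique

Because it is an approximation, it only works well on convex objects. The technique does not work for deforming objects, because the thickness map has to be baked. Alternatively, thickness can be computed with a real-time AO algorithm combined with inverted normals.

PS. A detailed description of the technique can be found in GPU Pro 2.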

03-UnrealEngine/Rendering/Shader/Effect/Ocean/海洋论文笔记.md (normal file, 202 lines)

@@ -0,0 +1,202 @@

## Exploring UE4 rendering features

New: Planar Reflections

New: High Quality Reflections

## UE4.26 SingleLayerWater notes

Official forum discussion:

https://forums.unrealengine.com/development-discussion/rendering/1746626-actually-realistic-water-shader#post1789028

### SingleLayerCommon.ush

Computes the lighting luminance and the transmittance.

struct WaterVolumeLightingOutput
{
float3 Luminance;
float3 WaterToSceneTransmittance;
float3 WaterToSceneToLightTransmittance;
};

Output.Luminance = WaterVisibility * (ScatteredLuminance + Transmittance * BehindWaterSceneLuminance);
Output.WaterToSceneTransmittance = Transmittance;
Output.WaterToSceneToLightTransmittance;

Ray marching is currently not enabled, so the core code is:

```
const float3 OpticalDepth = ExtinctionCoeff * BehindWaterDeltaDepth;
float3 Transmittance = exp(-OpticalDepth);
float3 ScatteredLuminance = ScatteringCoeff * (AmbScattLuminance + SunScattLuminance * DirectionalLightShadow);
ScatteredLuminance = (ScatteredLuminance - ScatteredLuminance * Transmittance) / ExtinctionCoeffSafe;

// Apply Fresnel effect to out-scattering towards the view
ScatteredLuminance *= CameraIsUnderWater ? 1.0 : (1.0 - EnvBrdf); // Under water is less visible due to Fresnel effect
Transmittance *= CameraIsUnderWater ? (1.0 - EnvBrdf) : 1.0; // Above " " " " "

// Add single in-scattering apply colored transmittance to scene color
Output.Luminance = WaterVisibility * (ScatteredLuminance + Transmittance * (BehindWaterSceneLuminance* ColorScaleBehindWater));
Output.WaterToSceneTransmittance = Transmittance;
Output.WaterToSceneToLightTransmittance = Transmittance * MeanTransmittanceToLightSources;
```
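The line `(ScatteredLuminance - ScatteredLuminance * Transmittance) / ExtinctionCoeffSafe` is the closed-form integral of a constant in-scattering source attenuated by `exp(-ExtinctionCoeff * t)` along the view ray. The Python sketch below is a single-channel illustration of that idea, not engine code: it compares the closed form with a stepped accumulation that uses the same per-step update as the engine's ray-marching branch. The function names and the sample numbers are assumptions made for the example.

```python
import math


def analytic_scattering(source, extinction, depth):
    """Closed form: integral of source * exp(-extinction * t) for t in [0, depth]."""
    extinction_safe = max(extinction, 1e-5)  # same guard as ExtinctionCoeffSafe
    transmittance = math.exp(-extinction * depth)
    scattered = (source - source * transmittance) / extinction_safe
    return scattered, transmittance


def stepped_scattering(source, extinction, depth, steps=10):
    """Accumulate the same integral slab by slab, as a ray-marching loop would."""
    extinction_safe = max(extinction, 1e-5)
    step_size = depth / steps
    sample_transmittance = math.exp(-extinction * step_size)  # constant per step
    scattered = 0.0
    transmittance = 1.0
    for _ in range(steps):
        scattered += transmittance * (source - source * sample_transmittance) / extinction_safe
        transmittance *= sample_transmittance
    return scattered, transmittance


# source stands for ScatteringCoeff * (AmbScattLuminance + SunScattLuminance * Shadow)
closed = analytic_scattering(source=0.12, extinction=0.3, depth=5.0)
stepped = stepped_scattering(source=0.12, extinction=0.3, depth=5.0)
```

With a constant source and no self-shadowing the two results agree up to floating-point error, which is why the analytic path can replace the loop when volumetric shadows are disabled.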

```c++
const float BehindWaterDeltaDepth = CameraIsUnderWater ? WaterDepth : max(0.0f, SceneDepth - WaterDepth);

const float3 ScatteringCoeff = max(0.0f, GetSingleLayerWaterMaterialOutput0(MaterialParameters));
const float3 AbsorptionCoeff = max(0.0f, GetSingleLayerWaterMaterialOutput1(MaterialParameters));
const float PhaseG = clamp(GetSingleLayerWaterMaterialOutput2(MaterialParameters), -1.0f, 1.0f);
//Sample the optional Material Input ColorScaleBehindWater and fade it out at shorelines to avoid hard edge intersections
float3 ColorScaleBehindWater = lerp(1.0f, max(0.0f, GetSingleLayerWaterMaterialOutput3(MaterialParameters)), saturate(BehindWaterDeltaDepth * 0.02f));

const float3 ExtinctionCoeff = ScatteringCoeff + AbsorptionCoeff;
// Max to avoid division by 0 with the analytical integral below.
// 1e-5 is high enough to avoid denorms on mobile
const float3 ExtinctionCoeffSafe = max(ScatteringCoeff + AbsorptionCoeff, 1e-5);

float DirLightPhaseValue = 0.0f; // Default when Total Internal Reflection happens.
{
#if SIMPLE_SINGLE_LAYER_WATER
    DirLightPhaseValue = IsotropicPhase();
#else
    float IorFrom = 1.0f; // assumes we come from air
    float IorTo = DielectricF0ToIor(DielectricSpecularToF0(Specular)); // Wrong if metal is set to >1. But we still keep refraction on the water surface nonetheless.
    const float relativeIOR = IorFrom / IorTo;
    float3 UnderWaterRayDir = 0.0f;
    if (WaterRefract(MaterialParameters.CameraVector, MaterialParameters.WorldNormal, relativeIOR, UnderWaterRayDir))
    {
        DirLightPhaseValue = SchlickPhase(PhaseG, dot(-ResolvedView.DirectionalLightDirection.xyz, UnderWaterRayDir));
    }
#endif
}

// We also apply transmittance from light to under water surface. However, the scene has been lit by many sources already.
// So the transmittance to above surface is simply approximated using the travel distance from the scene pixel to the water top, assuming a flat water surface.
// We cannot combine this transmittance with the transmittance from view because this would change the behavior of the analytical integration of light scattering integration.
const float3 BehindWaterSceneWorldPos = SvPositionToWorld(float4(MaterialParameters.SvPosition.xy, SceneDeviceZ, 1.0));
const float DistanceFromScenePixelToWaterTop = max(0.0, MaterialParameters.AbsoluteWorldPosition.z - BehindWaterSceneWorldPos.z);
const float3 MeanTransmittanceToLightSources = exp(-DistanceFromScenePixelToWaterTop * ExtinctionCoeff);

#if SIMPLE_SINGLE_LAYER_WATER
const float3 BehindWaterSceneLuminance = 0.0f; // Cannot read back the scene color in this case
#else
// We use the pixel SvPosition instead of the scene one pre refraction/distortion to avoid those extra ALUs.
float3 BehindWaterSceneLuminance = SceneColorWithoutSingleLayerWaterTexture.SampleLevel(SceneColorWithoutSingleLayerWaterSampler, ViewportUV, 0).rgb;
BehindWaterSceneLuminance = MeanTransmittanceToLightSources * (USE_PREEXPOSURE ? ResolvedView.OneOverPreExposure : 1.0f) * BehindWaterSceneLuminance;
#endif

float3 SunScattLuminance = DirLightPhaseValue * SunIlluminance;
float3 AmbScattLuminance = IsotropicPhase() * AmbiantIlluminance;

#define VOLUMETRICSHADOW 0
#if !VOLUMETRICSHADOW || SIMPLE_SINGLE_LAYER_WATER

const float3 OpticalDepth = ExtinctionCoeff * BehindWaterDeltaDepth;
float3 Transmittance = exp(-OpticalDepth);
float3 ScatteredLuminance = ScatteringCoeff * (AmbScattLuminance + SunScattLuminance * DirectionalLightShadow);
ScatteredLuminance = (ScatteredLuminance - ScatteredLuminance * Transmittance) / ExtinctionCoeffSafe;

#else
// TODO Make the volumetric shadow part work again
float3 Transmittance = 1.0f;
float3 ScatteredLuminance = 0.0f;
const float RayMarchMaxDistance = min(BehindWaterDeltaDepth, 200.0f); // 20 meters
const float RayMarchStepSize = RayMarchMaxDistance / 10.0f; // Less samples will result in a bit brighter look due to TransmittanceToLightThroughWater being 1 on a longer first sample. Would need it part of analytical integration
const float ShadowDither = RayMarchStepSize * GBufferDither;
for (float s = 0.0f; s < RayMarchMaxDistance; s += RayMarchStepSize)
{
    // Only jitter shadow map sampling to not lose energy on first sample
    float Shadow = ComputeDirectionalLightDynamicShadowing(MaterialParameters.AbsoluteWorldPosition - (s + ShadowDither)*MaterialParameters.CameraVector, GBuffer.Depth);

    float3 WP = MaterialParameters.AbsoluteWorldPosition - s * MaterialParameters.CameraVector;
    float WaterHeightAboveSample = max(0.0, MaterialParameters.AbsoluteWorldPosition.z - WP.z);
    float3 TransmittanceToLightThroughWater = 1.0; // no self shadow, same energy as above analytical solution
    //float3 TransmittanceToLightThroughWater = exp(-ExtinctionCoeff * WaterHeightAboveSample); // self shadow as transmittance to water level, close to reference, depends a bit on sample count due to first sample being critical for dense medium

    float3 SampleTransmittance = exp(-ExtinctionCoeff * RayMarchStepSize); // Constant
    float3 SS = (ScatteringCoeff * TransmittanceToLightThroughWater * (SunScattLuminance * Shadow + AmbScattLuminance));
    ScatteredLuminance += Transmittance * (SS - SS * SampleTransmittance) / ExtinctionCoeffSafe;
    Transmittance *= SampleTransmittance;
}

// The rest of the medium
const float3 OpticalDepth2 = ExtinctionCoeff * max(0.0, BehindWaterDeltaDepth - RayMarchMaxDistance);
if (any(OpticalDepth2 > 0.0f))
{
    float3 Transmittance2 = exp(-OpticalDepth2);
    float3 ScatteredLuminance2 = ScatteringCoeff * (SunScattLuminance + AmbScattLuminance);
    ScatteredLuminance += Transmittance * (ScatteredLuminance2 - ScatteredLuminance2 * Transmittance2) / ExtinctionCoeffSafe;
    Transmittance *= Transmittance2;
}
#endif

// Apply Fresnel effect to out-scattering towards the view
ScatteredLuminance *= CameraIsUnderWater ? 1.0 : (1.0 - EnvBrdf); // Under water is less visible due to Fresnel effect
Transmittance *= CameraIsUnderWater ? (1.0 - EnvBrdf) : 1.0; // Above " " " " "

// Add single in-scattering apply colored transmittance to scene color
Output.Luminance = WaterVisibility * (ScatteredLuminance + Transmittance * (BehindWaterSceneLuminance* ColorScaleBehindWater));
Output.WaterToSceneTransmittance = Transmittance;
Output.WaterToSceneToLightTransmittance = Transmittance * MeanTransmittanceToLightSources;
}

```

The ocean itself is opaque; the transparent look is composited using the SceneColor buffer.
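`SchlickPhase` and `IsotropicPhase` are called in the listing above, but their bodies are not shown in these notes. The Python sketch below assumes the commonly used Schlick approximation to the Henyey-Greenstein phase function; the fit `k = 1.55 g - 0.55 g^3` and the sign convention of the cosine are assumptions and may differ from the engine source. It checks the one property any phase function must have, namely that it integrates to 1 over the sphere.

```python
import math


def isotropic_phase():
    """Uniform scattering in all directions."""
    return 1.0 / (4.0 * math.pi)


def schlick_phase(g, cos_theta):
    """Schlick approximation of Henyey-Greenstein (assumed form, see lead-in)."""
    k = 1.55 * g - 0.55 * g ** 3
    factor = 1.0 + k * cos_theta
    return (1.0 - k * k) / (4.0 * math.pi * factor * factor)


def integrate_over_sphere(g, samples=20000):
    """Midpoint rule over mu = cos(theta) in [-1, 1]; azimuth contributes 2*pi."""
    d_mu = 2.0 / samples
    total = 0.0
    for i in range(samples):
        mu = -1.0 + (i + 0.5) * d_mu
        total += schlick_phase(g, mu) * d_mu
    return 2.0 * math.pi * total


energy_isotropic = integrate_over_sphere(0.0)
energy_forward = integrate_over_sphere(0.6)
```

With `g = 0` the function reduces to the isotropic phase, which is the value the simple single-layer-water path uses for the directional light.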

## GDC 2012 Uncharted 3 talk

### Rendering approach

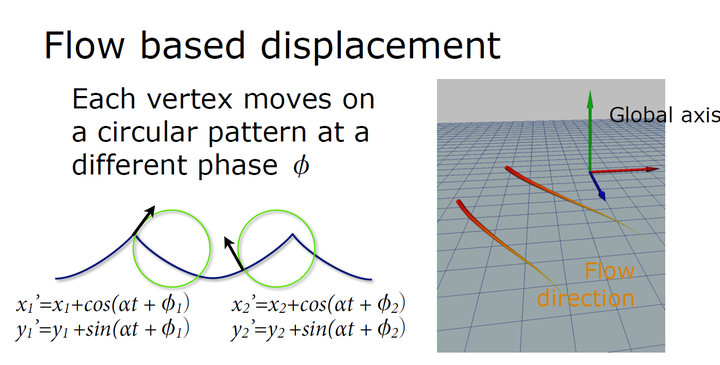

### FlowShader

I did not understand this part: why do two textures need to be blended together? Is it for the transition?

4.5.1.1 Flow Map variant: Uncharted 3, Flow Map + Displacement

Flow maps can also be combined with other rendering techniques, for example Flow Map + Displacement in Uncharted 3:

4.5.1.2 Flow Map variant: Fortnite, Flow Map + Distance Fields + Normal Maps

Likewise Flow Map + Distance Fields + Normal Maps in Fortnite [GDC 2019, Technical Artist Bootcamp Distance Fields and Shader Simulation Tricks]

4.5.1.3 Flow Map variant: Uncharted 4, Flow Map + Wave Particles

Or Flow Map + Wave Particles in Uncharted 4 [SIGGRAPH 2016, Rendering Rapids in Uncharted 4]. All of these are good options for more advanced simulation of flow and undulation on a water surface.

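### Wave System

Procedural geometry and animation are a good option, provided a good model can be found.

Simulation is too expensive to compute (even on the SPUs) and is hard for designers to control.

Perlin noise does not look great visually and tends to look artificial.

FFT techniques are good, but the parameters are hard for artists to control and tune, and the result is hard to get right.

**Gerstner waves**

Simple and easy to control, but lacking in high-frequency detail. Only a few sets of large waves can be summed, otherwise the cost becomes too high.

**FFT Waves**

Realistic and rich in detail, but hard for artists to control.

Uncharted 3 implements its wave vector displacement with 4 sets of Gerstner waves plus 4 sets of wave particles.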
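As a rough illustration of why Gerstner waves are easy to control, the following Python sketch sums a handful of them using the standard textbook formulation (horizontal displacement along the wave direction plus a sine height term, with the deep-water dispersion relation). The four wave parameter tuples are made-up example values, not data from the talk, and wave directions are assumed to be unit length.

```python
import math

GRAVITY = 9.81  # m/s^2, used by the deep-water dispersion relation


def gerstner_displace(x, y, t, waves):
    """Displace the rest position (x, y, 0) by a sum of Gerstner waves.

    Each wave is (amplitude, wavelength, steepness, (dir_x, dir_y)).
    """
    px, py, pz = x, y, 0.0
    for amplitude, wavelength, steepness, (dx, dy) in waves:
        k = 2.0 * math.pi / wavelength      # wave number
        omega = math.sqrt(GRAVITY * k)      # deep-water angular frequency
        phase = k * (dx * x + dy * y) - omega * t
        px += steepness * amplitude * dx * math.cos(phase)
        py += steepness * amplitude * dy * math.cos(phase)
        pz += amplitude * math.sin(phase)
    return px, py, pz


# Four example waves (illustrative values only).
WAVES = [
    (1.00, 60.0, 0.6, (1.0, 0.0)),
    (0.50, 31.0, 0.5, (0.8, 0.6)),
    (0.25, 18.0, 0.4, (0.0, 1.0)),
    (0.10, 7.0, 0.3, (-0.6, 0.8)),
]

displaced = gerstner_displace(12.0, -5.0, 1.5, WAVES)
```

Each wave exposes only amplitude, wavelength, steepness and direction, which is the controllability the notes refer to; the cost grows linearly with the number of waves, which is why only a few can be summed.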

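#### Large waves

Large waves are modeled with Bézier curves.

**The large wave is then added on top.**

This is the local formula for the whole wave system.

The bspline is a uniform, non-rational B-spline. A Bézier curve could have been used instead, but it would have required more code.

The grid(u,v) function returns a scalar value for a given coordinate u,v. In this case it provides a multiplier for the wave scale.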

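## Sea of Thieves approach [Ang, 2018]

## Crest Siggraph2019

### Light Scattering

Crest uses a light-scattering algorithm similar to the one in Sea of Thieves, where the scattering term is based on the horizontal length of the ocean surface displacement. One addition, to make it work better in their framework: the displacement is divided by the wavelength as a special kind of normalization, and this normalized version is used for the light-scattering term.

Scattering based on the sea-surface height tends to break when the ocean parameters change.

Dividing the displacement by the wavelength makes the term scale properly for both large and small waves.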

### Shading

- Cascade scale used to scale shading inputs
- Normals
- Foam
- Underwater bubbles
- Works for range of viewpoints
- Breaks up patterns
- Combats mipmapping
- Increase visible range of detail
- Doubles texture samples

03-UnrealEngine/Rendering/Shader/Effect/PostProcess 后处理材质笔记.md (normal file, 252 lines)

@@ -0,0 +1,252 @@

```c++
// Manually clamp scene texture UV as if using a clamp sampler.
MaterialFloat2 ClampSceneTextureUV(MaterialFloat2 BufferUV, const uint SceneTextureId)
{
    float4 MinMax = GetSceneTextureUVMinMax(SceneTextureId);

    return clamp(BufferUV, MinMax.xy, MinMax.zw);
}
```

## HLSL code of the related functions

```c++
float GetPixelDepth(FMaterialVertexParameters Parameters)
{
    FLATTEN
    if (View.ViewToClip[3][3] < 1.0f)
    {
        // Perspective
        return GetScreenPosition(Parameters).w;
    }
    else
    {
        // Ortho
        return ConvertFromDeviceZ(GetScreenPosition(Parameters).z);
    }
}

float GetPixelDepth(FMaterialPixelParameters Parameters)
{
    FLATTEN
    if (View.ViewToClip[3][3] < 1.0f)
    {
        // Perspective
        return GetScreenPosition(Parameters).w;
    }
    else
    {
        // Ortho
        return ConvertFromDeviceZ(GetScreenPosition(Parameters).z);
    }
}

float2 GetSceneTextureUV(FMaterialVertexParameters Parameters)
{
    return ScreenAlignedPosition(GetScreenPosition(Parameters));
}

float2 GetSceneTextureUV(FMaterialPixelParameters Parameters)
{
    return SvPositionToBufferUV(Parameters.SvPosition);
}

float2 GetViewportUV(FMaterialVertexParameters Parameters)
{
#if POST_PROCESS_MATERIAL
    return Parameters.WorldPosition.xy;
#else
    return BufferUVToViewportUV(GetSceneTextureUV(Parameters));
#endif
}

float2 GetPixelPosition(FMaterialVertexParameters Parameters)
{
    return GetViewportUV(Parameters) * View.ViewSizeAndInvSize.xy;
}


#if POST_PROCESS_MATERIAL

float2 GetPixelPosition(FMaterialPixelParameters Parameters)
{
    return Parameters.SvPosition.xy - float2(PostProcessOutput_ViewportMin);
}

float2 GetViewportUV(FMaterialPixelParameters Parameters)
{
    return GetPixelPosition(Parameters) * PostProcessOutput_ViewportSizeInverse;
}

#else

float2 GetPixelPosition(FMaterialPixelParameters Parameters)
{
    return Parameters.SvPosition.xy - float2(View.ViewRectMin.xy);
}

float2 GetViewportUV(FMaterialPixelParameters Parameters)
{
    return SvPositionToViewportUV(Parameters.SvPosition);
}

#endif // POST_PROCESS_MATERIAL (closing directive missing from the original excerpt)
```

## Under the hood this calls SceneTextureLookup

float4 SceneTextureLookup(float2 UV, int SceneTextureIndex, bool bFiltered)
{
FScreenSpaceData ScreenSpaceData = GetScreenSpaceData(UV, false);
switch(SceneTextureIndex)
{
// order needs to match to ESceneTextureId
case PPI_SceneColor:
return float4(CalcSceneColor(UV), 0);
case PPI_SceneDepth:
return ScreenSpaceData.GBuffer.Depth;
case PPI_DiffuseColor:
return float4(ScreenSpaceData.GBuffer.DiffuseColor, 0);
case PPI_SpecularColor:
return float4(ScreenSpaceData.GBuffer.SpecularColor, 0);
case PPI_SubsurfaceColor:
return IsSubsurfaceModel(ScreenSpaceData.GBuffer.ShadingModelID) ? float4( ExtractSubsurfaceColor(ScreenSpaceData.GBuffer), ScreenSpaceData.GBuffer.CustomData.a ) : ScreenSpaceData.GBuffer.CustomData;
case PPI_BaseColor:
return float4(ScreenSpaceData.GBuffer.BaseColor, 0);
case PPI_Specular:
return ScreenSpaceData.GBuffer.Specular;
case PPI_Metallic:
return ScreenSpaceData.GBuffer.Metallic;
case PPI_WorldNormal:
return float4(ScreenSpaceData.GBuffer.WorldNormal, 0);
case PPI_SeparateTranslucency:
return float4(1, 1, 1, 1); // todo
case PPI_Opacity:
return ScreenSpaceData.GBuffer.CustomData.a;
case PPI_Roughness:
return ScreenSpaceData.GBuffer.Roughness;
case PPI_MaterialAO:
return ScreenSpaceData.GBuffer.GBufferAO;
case PPI_CustomDepth:
return ScreenSpaceData.GBuffer.CustomDepth;
case PPI_PostProcessInput0:
return Texture2DSample(PostProcessInput_0_Texture, bFiltered ? PostProcessInput_BilinearSampler : PostProcessInput_0_SharedSampler, UV);
case PPI_PostProcessInput1:
return Texture2DSample(PostProcessInput_1_Texture, bFiltered ? PostProcessInput_BilinearSampler : PostProcessInput_1_SharedSampler, UV);
case PPI_PostProcessInput2:
return Texture2DSample(PostProcessInput_2_Texture, bFiltered ? PostProcessInput_BilinearSampler : PostProcessInput_2_SharedSampler, UV);
case PPI_PostProcessInput3:
return Texture2DSample(PostProcessInput_3_Texture, bFiltered ? PostProcessInput_BilinearSampler : PostProcessInput_3_SharedSampler, UV);
case PPI_PostProcessInput4:
return Texture2DSample(PostProcessInput_4_Texture, bFiltered ? PostProcessInput_BilinearSampler : PostProcessInput_4_SharedSampler, UV);
case PPI_DecalMask:
return 0; // material compiler will return an error
case PPI_ShadingModelColor:
return float4(GetShadingModelColor(ScreenSpaceData.GBuffer.ShadingModelID), 1);
case PPI_ShadingModelID:
return float4(ScreenSpaceData.GBuffer.ShadingModelID, 0, 0, 0);
case PPI_AmbientOcclusion:
return ScreenSpaceData.AmbientOcclusion;
case PPI_CustomStencil:
return ScreenSpaceData.GBuffer.CustomStencil;
case PPI_StoredBaseColor:
return float4(ScreenSpaceData.GBuffer.StoredBaseColor, 0);
case PPI_StoredSpecular:
return float4(ScreenSpaceData.GBuffer.StoredSpecular.rrr, 0);
case PPI_WorldTangent:
return float4(ScreenSpaceData.GBuffer.WorldTangent, 0);
case PPI_Anisotropy:
return ScreenSpaceData.GBuffer.Anisotropy;
default:
return float4(0, 0, 0, 0);
}
}

FScreenSpaceData GetScreenSpaceData(float2 UV, bool bGetNormalizedNormal = true)
{
FScreenSpaceData Out;
Out.GBuffer = GetGBufferData(UV, bGetNormalizedNormal);
float4 ScreenSpaceAO = Texture2DSampleLevel(SceneTexturesStruct.ScreenSpaceAOTexture, SceneTexturesStruct_ScreenSpaceAOTextureSampler, UV, 0);
Out.AmbientOcclusion = ScreenSpaceAO.r;
return Out;
}

## Where the GBufferXTexture used by GetGBufferData is passed in

For example, AOUpsamplePS (FDistanceFieldAOUpsamplePS in C++) carries FSceneTextureUniformParameters, SceneTextures. In every case they are passed in through the TRDGUniformBufferRef<FSceneTextureUniformParameters> SceneTexturesUniformBuffer parameter of the corresponding render function.

```c#
FGBufferData GetGBufferData(float2 UV, bool bGetNormalizedNormal = true)
{
    float4 GBufferA = Texture2DSampleLevel(SceneTexturesStruct.GBufferATexture, SceneTexturesStruct_GBufferATextureSampler, UV, 0);
    float4 GBufferB = Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0);
    float4 GBufferC = Texture2DSampleLevel(SceneTexturesStruct.GBufferCTexture, SceneTexturesStruct_GBufferCTextureSampler, UV, 0);
    float4 GBufferD = Texture2DSampleLevel(SceneTexturesStruct.GBufferDTexture, SceneTexturesStruct_GBufferDTextureSampler, UV, 0);
    float CustomNativeDepth = Texture2DSampleLevel(SceneTexturesStruct.CustomDepthTexture, SceneTexturesStruct_CustomDepthTextureSampler, UV, 0).r;

    int2 IntUV = (int2)trunc(UV * View.BufferSizeAndInvSize.xy);
    uint CustomStencil = SceneTexturesStruct.CustomStencilTexture.Load(int3(IntUV, 0)) STENCIL_COMPONENT_SWIZZLE;

#if ALLOW_STATIC_LIGHTING
    float4 GBufferE = Texture2DSampleLevel(SceneTexturesStruct.GBufferETexture, SceneTexturesStruct_GBufferETextureSampler, UV, 0);
#else
    float4 GBufferE = 1;
#endif

    float4 GBufferF = Texture2DSampleLevel(SceneTexturesStruct.GBufferFTexture, SceneTexturesStruct_GBufferFTextureSampler, UV, 0);

#if WRITES_VELOCITY_TO_GBUFFER
    float4 GBufferVelocity = Texture2DSampleLevel(SceneTexturesStruct.GBufferVelocityTexture, SceneTexturesStruct_GBufferVelocityTextureSampler, UV, 0);
#else
    float4 GBufferVelocity = 0;
#endif

    float SceneDepth = CalcSceneDepth(UV);

    // DecodeGBufferData() decodes the GBuffer and returns an FGBufferData struct.
    return DecodeGBufferData(GBufferA, GBufferB, GBufferC, GBufferD, GBufferE, GBufferF, GBufferVelocity, CustomNativeDepth, CustomStencil, SceneDepth, bGetNormalizedNormal, CheckerFromSceneColorUV(UV));
}
```

## Where the GBufferXTexture used by GetGBufferDataFromSceneTextures is passed in

SingleLayerWaterCompositePS uses GetGBufferDataFromSceneTextures() to fetch the GBuffer. This data is passed in from SingleLayerWaterRendering.cpp. The variable that is passed in, FSingleLayerWaterCommonShaderParameters->FSceneTextureParameters SceneTextures, carries GBuffer A through F along with the Depth, Stencil and Velocity textures.

```c++
Texture2D SceneDepthTexture;
Texture2D<uint2> SceneStencilTexture;
Texture2D GBufferATexture;
Texture2D GBufferBTexture;
Texture2D GBufferCTexture;
Texture2D GBufferDTexture;
Texture2D GBufferETexture;
Texture2D GBufferVelocityTexture;
Texture2D GBufferFTexture;
Texture2D<uint> SceneLightingChannels;

FGBufferData GetGBufferDataFromSceneTextures(float2 UV, bool bGetNormalizedNormal = true)
{
    float4 GBufferA = GBufferATexture.SampleLevel(GBufferATextureSampler, UV, 0);
    float4 GBufferB = GBufferBTexture.SampleLevel(GBufferBTextureSampler, UV, 0);
    float4 GBufferC = GBufferCTexture.SampleLevel(GBufferCTextureSampler, UV, 0);
    float4 GBufferD = GBufferDTexture.SampleLevel(GBufferDTextureSampler, UV, 0);
    float4 GBufferE = GBufferETexture.SampleLevel(GBufferETextureSampler, UV, 0);
    float4 GBufferF = GBufferFTexture.SampleLevel(GBufferFTextureSampler, UV, 0);
    float4 GBufferVelocity = GBufferVelocityTexture.SampleLevel(GBufferVelocityTextureSampler, UV, 0);

    uint CustomStencil = 0;
    float CustomNativeDepth = 0;

    float DeviceZ = SampleDeviceZFromSceneTextures(UV);

    float SceneDepth = ConvertFromDeviceZ(DeviceZ);

    // DecodeGBufferData() decodes the GBuffer and returns an FGBufferData struct.
    return DecodeGBufferData(GBufferA, GBufferB, GBufferC, GBufferD, GBufferE, GBufferF, GBufferVelocity, CustomNativeDepth, CustomStencil, SceneDepth, bGetNormalizedNormal, CheckerFromSceneColorUV(UV));
}
```

03-UnrealEngine/Rendering/Shader/Effect/UE4剖切效果实现.md (normal file, 53 lines)

@@ -0,0 +1,53 @@

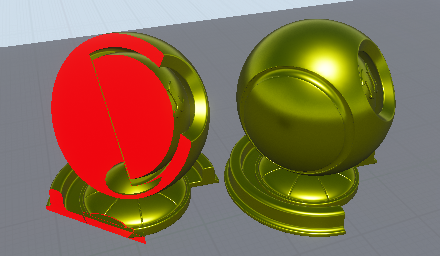

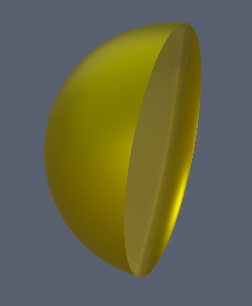

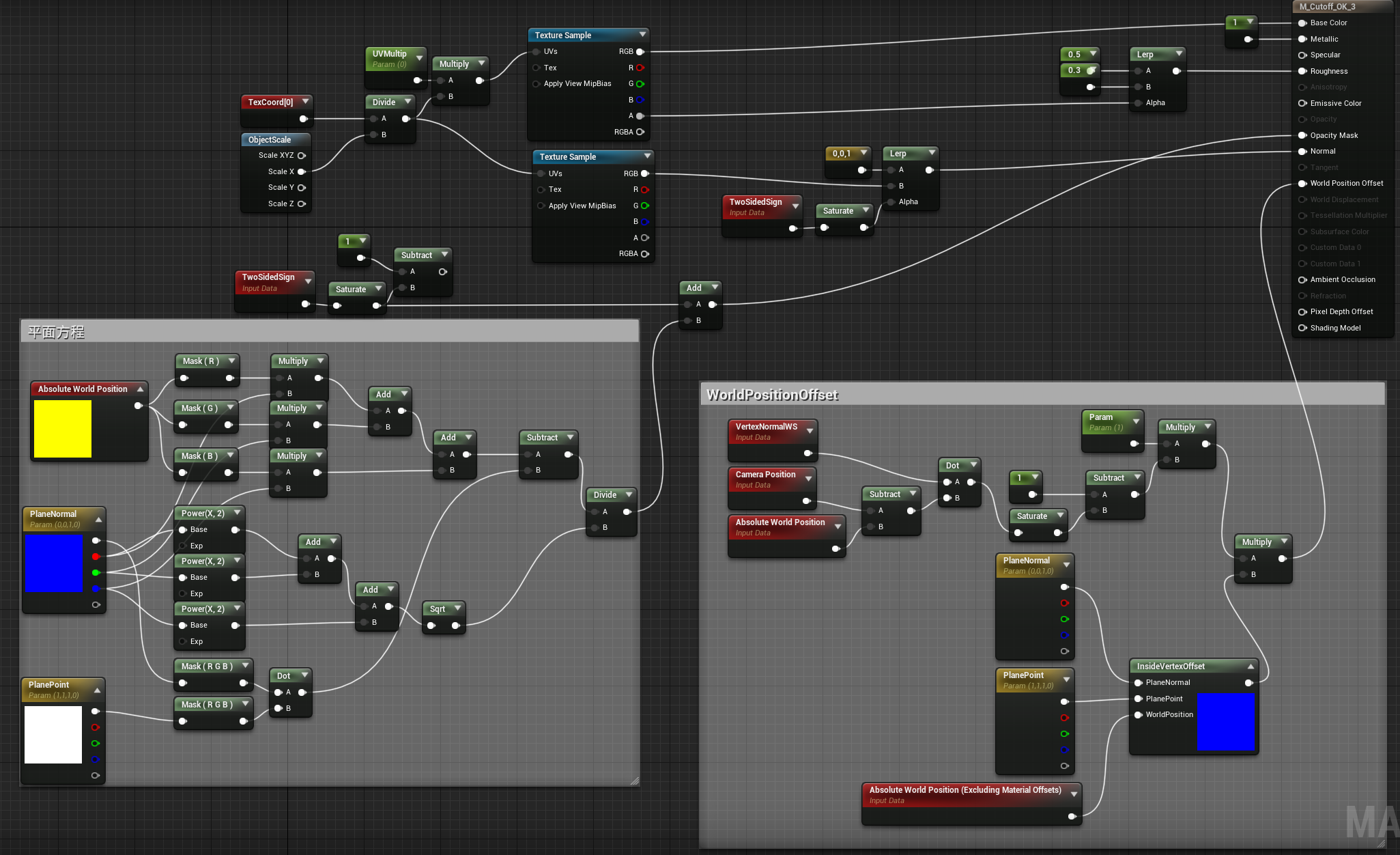

## 前言

|

||||

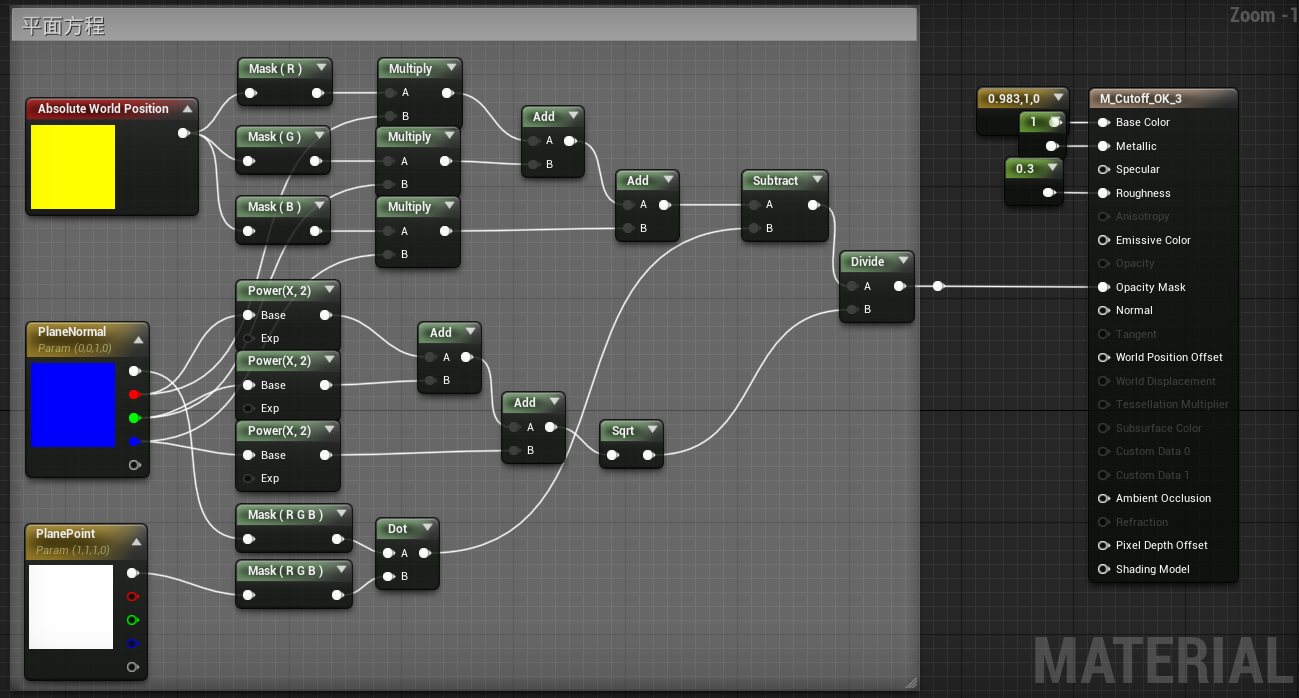

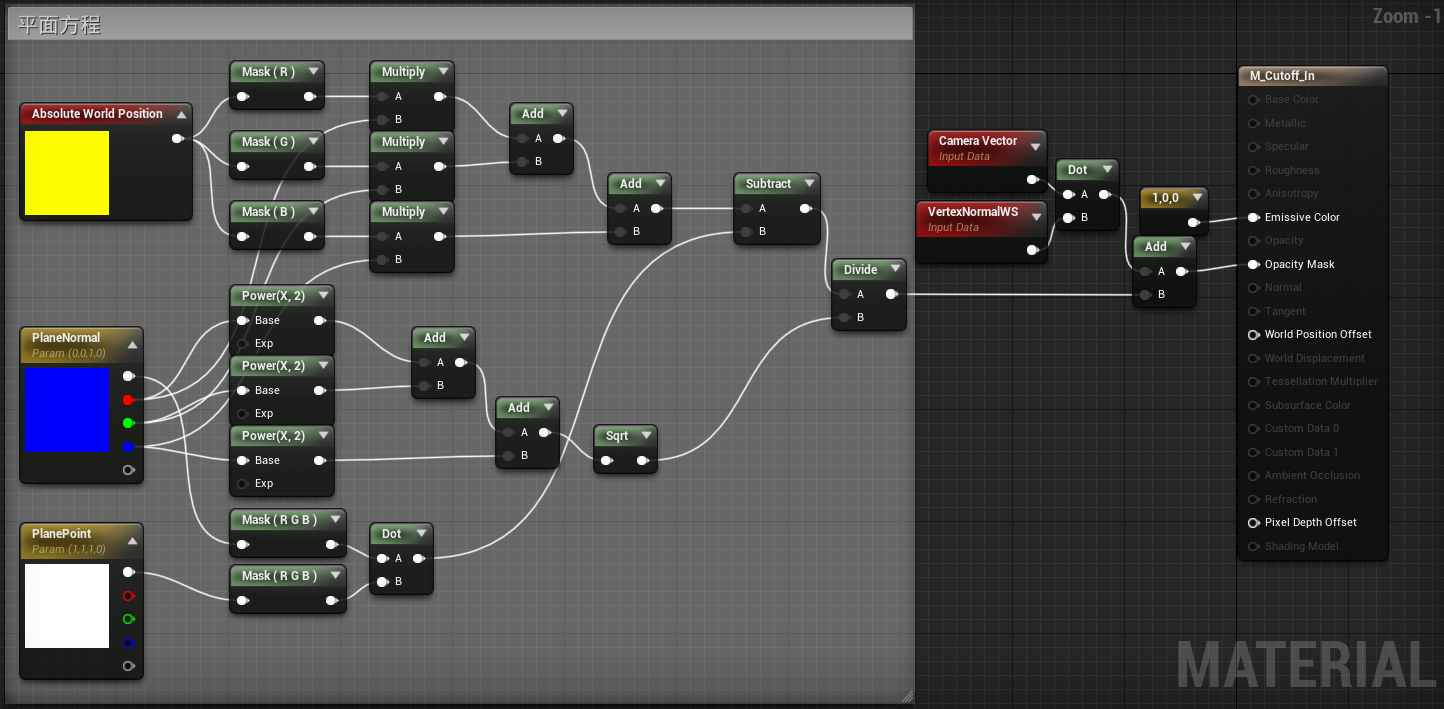

思路是使用平面方程来判断模型裁切,之后在另一面使用UnLit的自发光材质显示剖面。但Ue4的BlendingMaterialAttributes不能指定UnLit作为ShaderModel。所以可以使用我之前开发的多Pass插件搞定。

|

||||

|

||||

## 外部普通表面材质

|

||||

使用平面方程来做一个Mask即可。

|

||||

|

||||

|

||||
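As a minimal sketch of what "a mask from the plane equation" means, the Python below evaluates the signed distance of a world position to the plane and turns it into a 0/1 mask. The function names are hypothetical, and which side of the plane is kept is an assumption made for the example; in the material this value would typically drive the opacity mask.

```python
def plane_signed_distance(world_pos, plane_point, plane_normal):
    """dot(N, P - P0): positive on the side the (normalized) normal points to."""
    return sum(n * (p - q) for n, p, q in zip(plane_normal, world_pos, plane_point))


def clip_mask(world_pos, plane_point, plane_normal):
    """1.0 keeps the pixel, 0.0 clips it (here: clip everything in front of the plane)."""
    return 1.0 if plane_signed_distance(world_pos, plane_point, plane_normal) <= 0.0 else 0.0


plane_point = (0.0, 0.0, 100.0)   # world-space point on the plane
plane_normal = (0.0, 0.0, 1.0)    # must be normalized
above = clip_mask((10.0, 5.0, 150.0), plane_point, plane_normal)
below = clip_mask((10.0, 5.0, 50.0), plane_point, plane_normal)
```

Flipping the comparison (or negating the normal) selects the other half of the model.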

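## Inner cross-section material

The cross-section material needs to use the Unlit shading model so that shadows and normals do not interfere. Unlit cannot flip the normals, however, so the **Two Sided** option must be checked as well.

## How to use

https://zhuanlan.zhihu.com/p/69139579

1. Using the code described in the link above, create a new Blueprint class and attach a UStrokeStaticMeshComponent to it.
2. Assign to the UStrokeStaticMeshComponent the mesh to be cut, together with the outer surface material made above.
3. Assign the inner cross-section material to SecondPassMaterial and check the NeedSecondPass variable of the UStrokeStaticMeshComponent.
4. Drag the Blueprint class into the level and set PlaneNormal and PlanePoint on both materials. PlanePoint is in world space, and PlaneNormal must be normalized.

## Final result

The aliasing is there because my poor laptop has bad cooling, so the quality settings were turned down.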

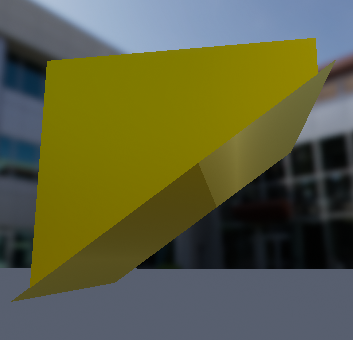

## A failed attempt

Earlier I also tried an approach based on WorldPositionOffset, but it turned out not to be practical. The size and shape of the cross-section are not known in advance, so moving the visible interior vertices by projecting them onto the plane causes extra vertices to end up outside the cut outline. It is therefore only suitable for cutting regularly shaped models.

Incorrect result:

The node setup is as follows:

CustomNode code:

```
// Input PlaneNormal
// Input PlanePoint
// Input WorldPosition
float NormalPower2= pow(PlaneNormal.x,2) + pow(PlaneNormal.y,2) + pow(PlaneNormal.z,2);
float D=dot(PlaneNormal.xyz,PlanePoint.xyz)*-1;

float posX= ((pow(PlaneNormal.y,2) + pow(PlaneNormal.z,2))*WorldPosition.x-PlaneNormal.x*(PlaneNormal.y*WorldPosition.y+PlaneNormal.z*WorldPosition.z+D))/NormalPower2;

float posY=((pow(PlaneNormal.x,2) + pow(PlaneNormal.z,2))*WorldPosition.y-PlaneNormal.y*(PlaneNormal.x*WorldPosition.x+PlaneNormal.z*WorldPosition.z+D))/NormalPower2;

float posZ=((pow(PlaneNormal.x,2) + pow(PlaneNormal.y,2))*WorldPosition.z-PlaneNormal.z*(PlaneNormal.x*WorldPosition.x+PlaneNormal.y*WorldPosition.y+D))/NormalPower2;

// Offset that moves the vertex onto its orthogonal projection on the plane.
return float3(posX,posY,posZ)-WorldPosition.xyz;
```

@@ -0,0 +1,57 @@

|

||||

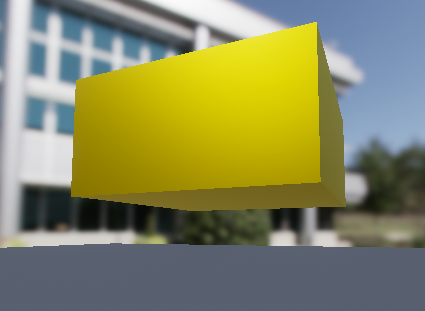

早几年学习后处理Shader的时候,遇到编辑器视口中的渲染结果与视口大小不匹配的问题:

|

||||

|

||||

|

||||

PS.该问题不会在使用SceneTexture节点制作的后处理效果材质中发生。但它不易于维护与修改。所以我花了些时间来解决这个问题。

|

||||

|

||||

## 问题成因与解决方法

|

||||

可以看得出这个问题是因为ViewSize与BufferSize不一造成的。经过一系列测试使用ViewportUVToBufferUV()对UV坐标进行转换就可以解决问题,该函数位于Common.h。

|

||||

```c#
float2 ViewportUVToBufferUV(float2 ViewportUV)
{
    float2 PixelPos = ViewportUV * View.ViewSizeAndInvSize.xy;
    return (PixelPos + View.ViewRectMin.xy) * View.BufferSizeAndInvSize.zw;
}
```
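To see why this conversion fixes the mismatch, here is the same arithmetic as a small Python sketch with made-up sizes (an 800x600 view rendered into a 1920x1080 buffer). The function name mirrors the shader function; the numbers are assumptions for illustration only.

```python
def viewport_uv_to_buffer_uv(viewport_uv, view_size, view_rect_min, buffer_size):
    """Map a [0, 1] UV over the visible view to a UV over the whole buffer."""
    pixel_x = viewport_uv[0] * view_size[0]
    pixel_y = viewport_uv[1] * view_size[1]
    return ((pixel_x + view_rect_min[0]) / buffer_size[0],
            (pixel_y + view_rect_min[1]) / buffer_size[1])


# The view only covers the top-left 800x600 pixels of a 1920x1080 buffer.
corner = viewport_uv_to_buffer_uv((1.0, 1.0), (800, 600), (0, 0), (1920, 1080))
# When view and buffer match, the mapping is the identity.
same = viewport_uv_to_buffer_uv((0.25, 0.75), (1920, 1080), (0, 0), (1920, 1080))
```

Sampling the buffer with the unconverted viewport UV would read far outside the rendered region whenever the buffer is larger than the view, which is the stretched result described above.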

ScreenUV is obtained with GetViewportUV(Parameters), and the step is ResolvedView.ViewSizeAndInvSize.zw. Usage is roughly as follows:

```c#
float GetDepthTestWeight(float2 ScreenUV,float Stepx, float Stepy, float3x3 KernelX, float3x3 KernelY,float DepthTestThreshold)
{
    float3x3 image;
    float CurrentPixelDepth=GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV)).GBuffer.Depth;
    // Absolute depth difference between each neighbour and the centre pixel.
    image = float3x3(
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(-Stepx,Stepy))).GBuffer.Depth-CurrentPixelDepth),
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(0,Stepy))).GBuffer.Depth-CurrentPixelDepth),
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(Stepx,Stepy))).GBuffer.Depth-CurrentPixelDepth),
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(-Stepx,0))).GBuffer.Depth-CurrentPixelDepth),
        0,
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(Stepx,0))).GBuffer.Depth-CurrentPixelDepth),
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(-Stepx,-Stepy))).GBuffer.Depth-CurrentPixelDepth),
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(0,-Stepy))).GBuffer.Depth-CurrentPixelDepth),
        length(GetScreenSpaceData(ViewportUVToBufferUV(ScreenUV + float2(Stepx,-Stepy))).GBuffer.Depth-CurrentPixelDepth)
    );

    UNROLL
    for (int i = 0; i < 3; i++) {
        for (int j = 0; j < 3; j++) {
            // image[i][j] = image[i][j] > CurrentPixelDepth * DepthTestThreshold ? 1 : 0;
            image[i][j] = saturate(image[i][j] / (CurrentPixelDepth * DepthTestThreshold));
        }
    }

    float2 StrokeWeight;
    StrokeWeight.x = convolve(KernelX, image);
    StrokeWeight.y = convolve(KernelY, image);

    return length(StrokeWeight);
}
```
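The `convolve` helper and the two kernels are not shown in these notes. The Python sketch below assumes `convolve` is an element-wise multiply-and-sum of two 3x3 matrices and that the kernels are the usual Sobel pair; both are assumptions. It reproduces the normalization step and the final gradient length on plain 3x3 depth samples.

```python
import math

# Assumed kernels: the standard Sobel pair.
SOBEL_X = ((-1.0, 0.0, 1.0), (-2.0, 0.0, 2.0), (-1.0, 0.0, 1.0))
SOBEL_Y = ((-1.0, -2.0, -1.0), (0.0, 0.0, 0.0), (1.0, 2.0, 1.0))


def convolve(kernel, image):
    """Assumed behaviour: sum of element-wise products of two 3x3 matrices."""
    return sum(kernel[i][j] * image[i][j] for i in range(3) for j in range(3))


def depth_test_weight(depths, threshold):
    """depths is a 3x3 grid of scene depths; depths[1][1] is the current pixel (> 0)."""
    center = depths[1][1]
    # abs(neighbour - center), normalized by depth * threshold and clamped to [0, 1]
    image = [[min(max(abs(d - center) / (center * threshold), 0.0), 1.0) for d in row]
             for row in depths]
    return math.hypot(convolve(SOBEL_X, image), convolve(SOBEL_Y, image))


flat = [[100.0] * 3 for _ in range(3)]
step_edge = [[100.0, 100.0, 500.0] for _ in range(3)]
flat_weight = depth_test_weight(flat, threshold=0.1)
edge_weight = depth_test_weight(step_edge, threshold=0.1)
```

Dividing by `CurrentPixelDepth * DepthTestThreshold` makes the threshold relative to distance, so far-away surfaces need a proportionally larger depth jump to register as an edge.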

Of course, if the post-process effect is implemented by adding a render pass directly to the rendering pipeline, the problem does not occur either, because a new buffer matching the View size is created every frame.

## Size-related nodes in the material editor

- ScreenPosition: the code is GetViewportUV(FMaterialVertexParameters Parameters).
- ViewSize: the size of the visible viewport. The code is View.ViewSizeAndInvSize.xy; zw holds the reciprocals.
- MF_ScreenResolution: provides VisibleResolution (the output of the ViewSize node) and BufferResolution (RenderTargetSize from ViewProperty).
- SceneTexelSize: a float2 that corresponds to UV, (u,v), where u and v are both positive.
- MF_ScreenAlignedPixelToPixelUVs: RenderTargetSize / TextureSize, where TextureSize can be ViewSize. It can be used to keep the post-process effect at a constant size after the View size changes.
- Size of the post-process node: ClampSceneTextureUV(ViewportUVToSceneTextureUV,SceneTextureId)

@@ -0,0 +1,8 @@

# Implementing a LowPoly effect in UE4

## Code

## Result

8

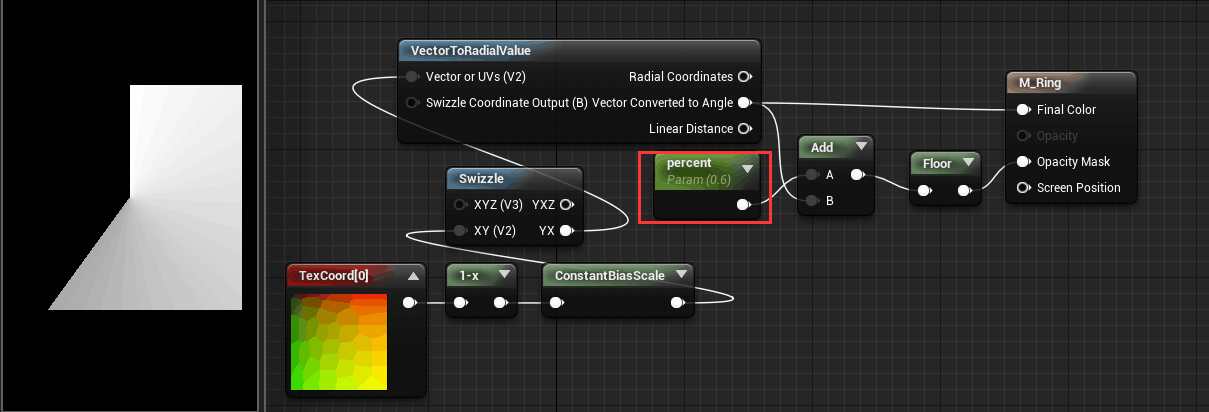

03-UnrealEngine/Rendering/Shader/Effect/技能CD转圈效果实现.md

Normal file

8

03-UnrealEngine/Rendering/Shader/Effect/技能CD转圈效果实现.md

Normal file

@@ -0,0 +1,8 @@

|

||||

---

|

||||

title: 技能CD转圈效果实现

|

||||

date: 2022-11-04 09:38:11

|

||||

excerpt:

|

||||

tags:

|

||||

rating: ⭐

|

||||

---

|

||||

|

||||

209

03-UnrealEngine/Rendering/Shader/Effect/海洋、云Demo制作方案.md

Normal file

@@ -0,0 +1,209 @@
---
title: Ocean and cloud demo production plan
date: 2022-11-04 09:38:11
excerpt:
tags:
rating: ⭐
---
# Ocean approach
浅墨's summary: https://zhuanlan.zhihu.com/p/95917609

Waveform: Gerstner waves, fast Fourier transform, hybrid spatial-spectral methods, offline FFT texture baking (Offline FFT Texture)

Shading: Depth Based LUT Approach, Sub-Surface Scattering Approximation Approach

Foam: wave crests => [Tessendorf 2001] Jacobian-based method; shoreline => [GDC 2018] Far Cry 5 signed-distance-field method; interactive foam: ASher's Niagara approach https://zhuanlan.zhihu.com/p/100700549

## Waveform approaches
### Linear wave superposition
Sinusoidal waves (Sinusoids Wave) [Max 1981]

Gerstner waves (Gerstner Wave) [Fournier 1986]
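
As a reminder of what a single Gerstner wave does to a grid point, here is a minimal sketch. It assumes the common formulation with horizontal displacement `Q * A * D * cos(phase)` and height `A * sin(phase)`, together with the deep-water dispersion relation. The struct and function names are illustrative only.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// One Gerstner wave, z up. (dirX, dirY) is assumed to be a unit vector.
struct GerstnerWave {
    float dirX, dirY;   // travel direction D
    float amplitude;    // A
    float wavelength;   // L
    float steepness;    // Q in [0, 1]; 0 degenerates to a plain sine wave
};

// Displaces the rest position (x0, y0) at time t.
Vec3 GerstnerDisplace(const GerstnerWave& w, float x0, float y0, float t) {
    const float kPi = 3.14159265358979f;
    const float kGravity = 9.81f;
    const float k = 2.f * kPi / w.wavelength;     // wave number
    const float omega = std::sqrt(kGravity * k);  // deep-water dispersion (assumption)
    const float phase = k * (w.dirX * x0 + w.dirY * y0) - omega * t;
    const float c = std::cos(phase);
    const float s = std::sin(phase);
    return {x0 + w.steepness * w.amplitude * w.dirX * c,
            y0 + w.steepness * w.amplitude * w.dirY * c,
            w.amplitude * s};
}
```

Summing several such waves with different directions and wavelengths gives the linear superposition this subsection refers to.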

### Statistical models
Fast Fourier transform (Fast Fourier Transform) [Mastin 1987]

Spatial-spectral hybrid methods (Spatial-Spectral Approaches) [Thon 2000]

### Wave particle methods (not considered)
Wave particles (Wave Particles) [Yuksel 2007]

Water wave packets (Water Wave Packets) [Jeschke 2017]

Water surface wavelets (Water Surface Wavelets) [Jeschke 2018]

### Pre-rendered
Flow maps (Flow Map) [Vlachos 2010]

Offline FFT texture baking (Offline FFT Texture) [Torres 2012]

Offline fluid flipbook baking (bake to flipbook) [Bowles 2017]

## Shading approaches in water rendering

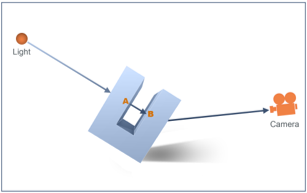

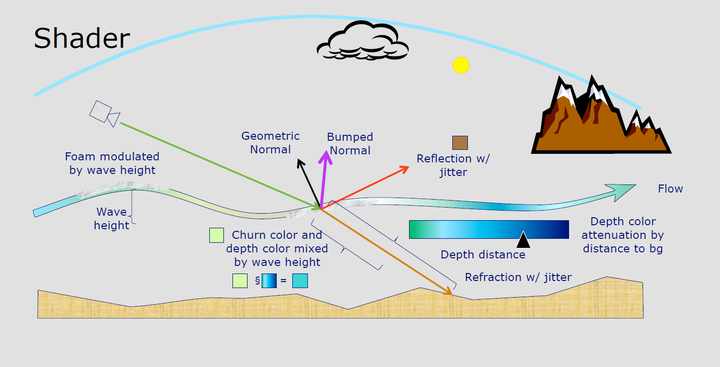

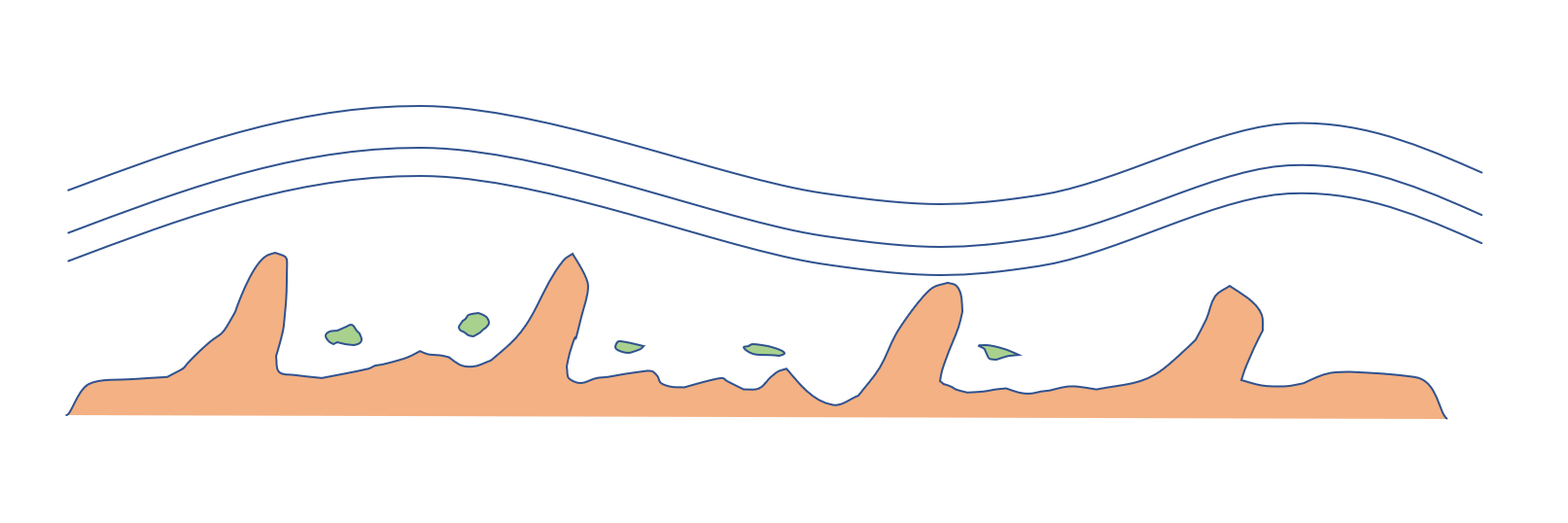

The Uncharted 3 technical talk at SIGGRAPH 2012 includes a classic diagram that breaks water rendering down into its component techniques:

- Diffuse
- Specular
- Normal Map
- Refraction
- Translucency
- Depth Based LUT Approach
- Subsurface Scattering
- Foam/WhiteCap
- Flow
- Underwater Haze

### Translucency
#### Water scattering

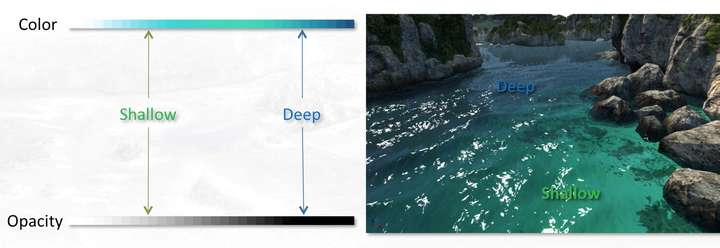

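##### Depth Based LUT Approach
The idea of the Depth Based LUT approach is to compute the water depth of each pixel along the view direction, then use that depth to sample an absorb/scatter LUT (Absorb/Scatter LUT) texture. This controls the tint of the water at different depths and gives it a translucent look.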
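
The lookup itself is just a clamped, linearly interpolated read from a 1-D table. The sketch below assumes the water depth has already been computed (for example as scene depth minus water surface depth along the view ray) and that the LUT is a non-empty array of colors. `SampleDepthLUT` and `maxDepth` are hypothetical names.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Color { float r, g, b; };

// Linearly interpolated lookup into a 1-D absorb/scatter table indexed by water depth.
// The table must contain at least one entry.
Color SampleDepthLUT(const std::vector<Color>& lut, float waterDepth, float maxDepth) {
    const float u = std::min(std::max(waterDepth / maxDepth, 0.f), 1.f);
    const float pos = u * static_cast<float>(lut.size() - 1);
    const std::size_t i0 = static_cast<std::size_t>(pos);
    const std::size_t i1 = std::min(i0 + 1, lut.size() - 1);
    const float f = pos - static_cast<float>(i0);
    return {lut[i0].r + (lut[i1].r - lut[i0].r) * f,
            lut[i0].g + (lut[i1].g - lut[i0].g) * f,
            lut[i0].b + (lut[i1].b - lut[i0].b) * f};
}
```

On the GPU the same thing is a single texture sample with clamp addressing and bilinear filtering, so the LUT can be painted by an artist rather than derived from a formula.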

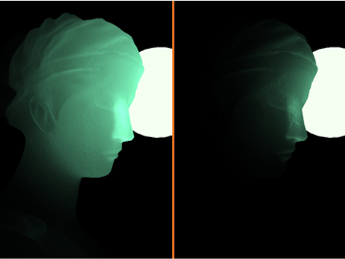

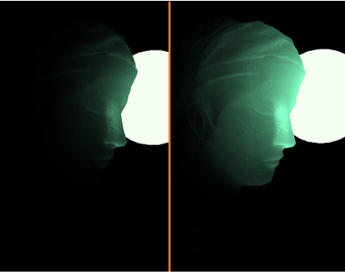

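##### Sub-Surface Scattering Approximation Approach
- [SIGGRAPH 2019] Crest Ocean System's improved version of the Sea of Thieves SSS approach.
- [GDC 2011] The Frostbite engine's Fast SSS approach.

The Sea of Thieves SSS approach, as improved by Crest Ocean System, can be summarized as follows:

- Assume that light is more likely to be scattered and transmitted by the water on the side of a wave.
- Generate a wave-crest mask for the sides of the waves from the vertex offsets produced by the FFT simulation.
- Blend between the deep-water color and the subsurface-scattering water color according to the combination of view direction, light direction, and crest mask to obtain the subsurface scattering color.
- Divide the displacement value (Displacement) by the wavelength, and use this rescaled displacement to compute the strength of the subsurface scattering term.

The corresponding core implementation is:
```hlsl
float v = abs(i_view.y);
half towardsSun = pow(max(0., dot(i_lightDir, -i_view)), _SubSurfaceSunFallOff);
half3 subsurface = (_SubSurfaceBase + _SubSurfaceSun * towardsSun) * _SubSurfaceColour.rgb * _LightColor0 * shadow;
subsurface *= (1.0 - v * v) * sssIndensity;
col += subsurface;
```
Here sssIndensity, the scattering intensity, is computed from the sampled displacement value.

Figure: water rendered with the subsurface scattering approximation in Crest Ocean System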
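
To see how the terms in the snippet above interact, the same arithmetic can be run on the CPU. This is only a sketch: the light color is assumed to be white and is left out, and every parameter name is an illustrative stand-in for the corresponding shader uniform.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

static float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// CPU port of the subsurface term; y is up, vectors are assumed to be normalized.
Vec3 SubsurfaceTerm(const Vec3& view, const Vec3& lightDir, const Vec3& sssColor,
                    float base, float sun, float sunFallOff, float shadow, float intensity) {
    const float v = std::fabs(view.y);
    const Vec3 negView = {-view.x, -view.y, -view.z};
    // Stronger when the light direction lines up with the reversed view vector.
    const float towardsSun = std::pow(std::max(0.f, Dot(lightDir, negView)), sunFallOff);
    // (1 - v^2) removes the term when the view vector is vertical.
    const float scale = (base + sun * towardsSun) * shadow * (1.f - v * v) * intensity;
    return {sssColor.x * scale, sssColor.y * scale, sssColor.z * scale};
}
```

The `(1 - v * v)` factor is what confines the glow to grazing views of the wave sides: a vertical view vector yields zero regardless of the other inputs.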

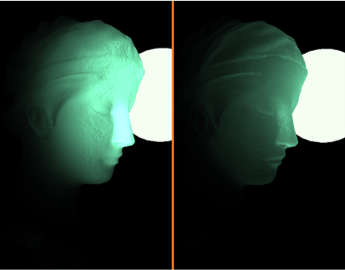

[GDC 2011] The Frostbite engine's Fast SSS approach

The Fast Approximating Subsurface Scattering approach that the Frostbite engine presented at GDC 2011 can also be used for water rendering.

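### Foam (Foam/WhiteCap)
Foam, also called whitecap or white water in some literature, is a complex phenomenon. Foam usually looks white even when the material underneath it has another color. This is because foam consists of thin fluid films that enclose air. As the number of films per unit volume increases, all incident light is reflected and none penetrates below. This optical effect makes foam look brighter than the material itself, to the point of appearing almost white.

For rendering purposes, foam can be treated as a texture on the water surface. It can be produced directly by object interaction, by splashing spray, or by bubbles colliding with the water surface.

By overall rendering strategy, foam rendering approaches fall into two groups:
- Dynamic texture based
- Particle system based

By type, foam falls into three kinds:
- Wave-crest foam
- Shoreline foam
- Interactive foam

By rendering method, the mainstream foam rendering approaches are:
- Particle-system-based method [Tessendorf 2001]
- Saturate-height-based method [GPU Gems 2]
- Jacobian-based method [Tessendorf 2001]
- Screen-space method [Akinci 2013]
- Scene-depth-based method [Kozin 2018][Sea of Thieves]
- Signed-distance-field-based method [GDC 2018][Far Cry 5]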

#### Wave-crest foam

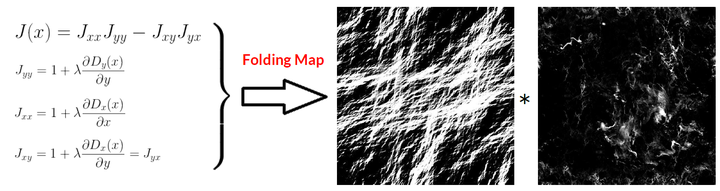

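##### Wave-crest foam: [Tessendorf 2001] Jacobian-based method
In his well-known water rendering paper "Simulating Ocean Water" [Tessendorf 2001], Tessendorf proposed using the regions where the Jacobian is negative to determine where foam appears. From this, one or more Folding Map textures marking the crest foam regions can be derived.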
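
The Jacobian test can be sketched as follows, assuming the horizontal displacement D = (Dx, Dy) is scaled by a choppiness factor lambda and that its partial derivatives are already available (for example from finite differences of the displacement map). The struct layout and function names are illustrative. A `threshold` parameter is included so the cutoff can be raised above zero.

```cpp
// Partial derivatives of the horizontal displacement field at one grid point.
struct DisplacementGradient {
    float dDx_dx, dDx_dy;
    float dDy_dx, dDy_dy;
};

// Determinant of the Jacobian of x -> x + lambda * D(x).
// Values near 1 mean an undistorted surface; values below 0 mean the surface folds over itself.
float FoldingJacobian(const DisplacementGradient& g, float lambda) {
    const float jxx = 1.f + lambda * g.dDx_dx;
    const float jyy = 1.f + lambda * g.dDy_dy;
    const float jxy = lambda * g.dDx_dy;
    const float jyx = lambda * g.dDy_dx;
    return jxx * jyy - jxy * jyx;
}

// Foam is placed wherever the determinant drops below the chosen threshold.
bool HasFoam(const DisplacementGradient& g, float lambda, float threshold) {
    return FoldingJacobian(g, lambda) < threshold;
}
```

Writing `HasFoam` per texel into a texture gives the kind of folding map described above.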

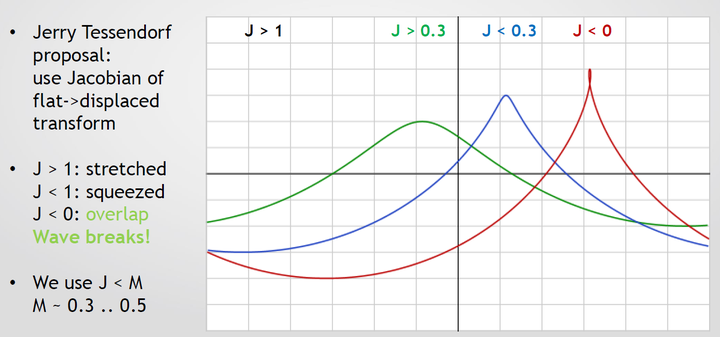

The War Thunder team's refinement, presented at CGDC 2015, takes the regions where the Jacobian is less than M as the foam regions, where M ≈ 0.3...0.5.

Figure: War Thunder takes the regions where the Jacobian is less than M as the foam regions [CGDC 2015]

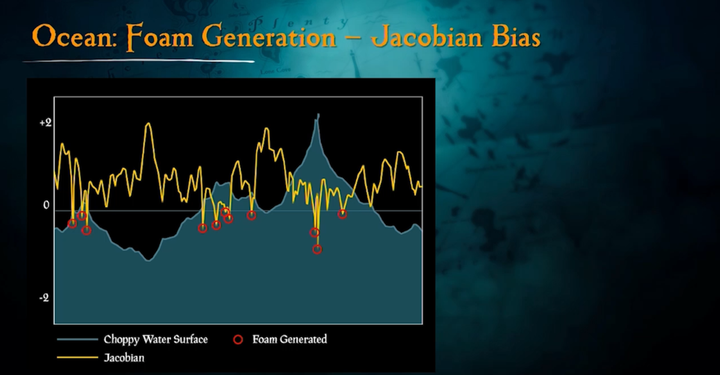

In addition, the Sea of Thieves team proposed at SIGGRAPH 2018 that the Jacobian can be biased to obtain more foam, and that a progressive blur (Progressive Blur) can be used to resolve noise and to provide a stylized foam look.

!(https://vdn1.vzuu.com/SD/cfb76434-ec75-11ea-acfd-5ab503a75443.mp4?disable_local_cache=1&bu=pico&expiration=1601438226&auth_key=1601438226-0-0-1b75c02f8faa2d5a94b74ad8ebb6e595&f=mp4&v=hw)

Figure: stylized foam in Sea of Thieves based on a biased Jacobian + progressive blur (Progressive Blur)

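##### Wave-crest foam: [GPU Gems 2] saturate-height-based method
The foam rendering approach from GPU Gems 2 blends a pre-made foam texture onto vertices that lie above a certain height H0. The opacity of the foam texture is computed as:

`$ Foam.a=saturate(\frac {H-H_0} {H_{max}-H_0}) $`

- Here `$H_{max}$` is the height at which foam is at its maximum, `$H_0$` is the base height, and `$H$` is the current height.
- The foam texture can be authored as an animated frame sequence that shows foam forming and dissipating. The sequence can be produced by an artist or generated procedurally.
- Combining the result above with a noise texture in a sensible way gives a more realistic foam look.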
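
The opacity formula translates directly into code. A minimal sketch, with `FoamAlpha` as a hypothetical helper name and the assumption that `hMax` is greater than `h0`:

```cpp
#include <algorithm>

// Clamps x to [0, 1], like the HLSL saturate intrinsic.
float Saturate(float x) { return std::min(std::max(x, 0.f), 1.f); }

// Foam opacity ramps from 0 at the base height h0 to 1 at hMax (requires hMax > h0).
float FoamAlpha(float h, float h0, float hMax) {
    return Saturate((h - h0) / (hMax - h0));
}
```

Heights below `h0` produce no foam at all, and anything above `hMax` is fully covered, so the two heights act as the artist-facing controls.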

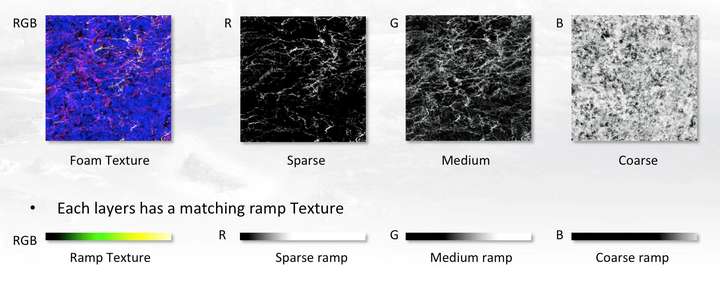

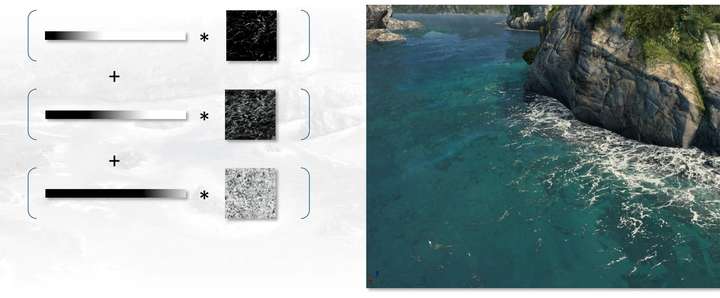

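#### Shoreline foam: [2012] Assassin's Creed III Multi Ramp Map method
The shoreline foam approach in Assassin's Creed III can be summarized as:

- Sample the terrain offline at regular intervals and mark the regions where foam appears.
- Use Gaussian blur and Perlin noise to enrich the foam and to simulate foam fading on the shore.
- Because foam is white, three grayscale maps are stored, one in each of the R, G, and B channels. A color ramp map then defines the blend ratio among the three, producing dense, medium, and sparse foam levels. To change the foam look, an artist only needs to color-correct the ramp map, as shown in the figure below: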

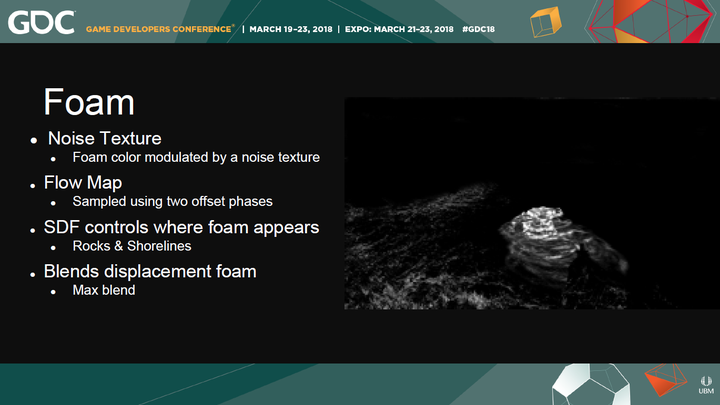

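#### Wave-crest foam & shoreline foam: [GDC 2018] Far Cry 5 signed-distance-field method
The foam rendering technique shared by the Far Cry 5 team at GDC 2018 is another strong option. The main idea is to control foam color with a single noise texture, sample a flow map at two offsets to control foam movement, use a signed distance field (Signed Distance Field, SDF) to control where foam appears around rocks and along the coastline, and then blend the foam according to displacement.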

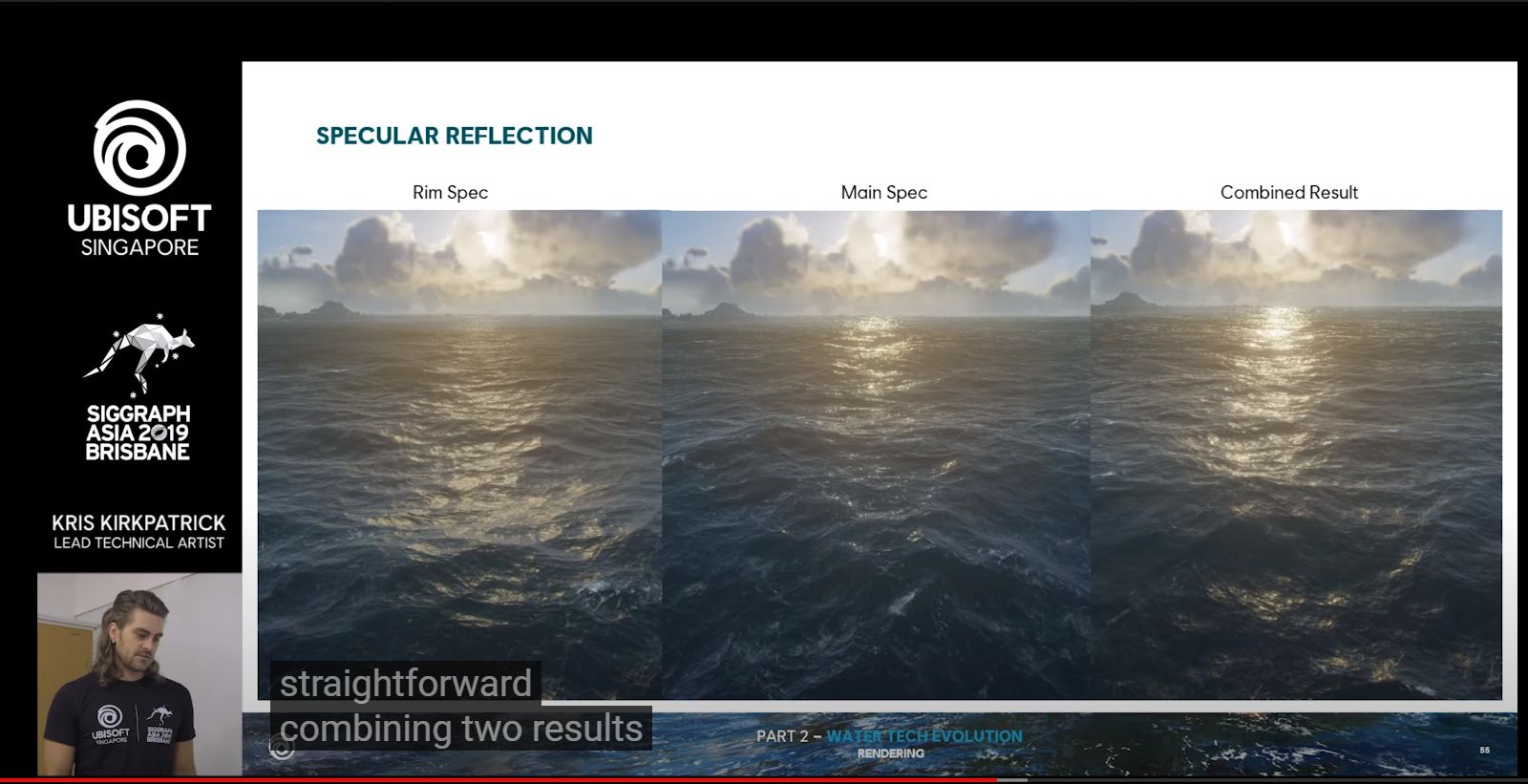

### Water surface specular

### Water caustics
Rendering Water Caustics

GPU Gems 1

ShaderBits.com

### Other demos
NVIDIA UE4 WaveWorks

At GDC 2017, NVIDIA and Unreal Engine jointly released WaveWorks, shipped as an integration into Unreal Engine 4.15.

Source code: https://github.com/NvPhysX/UnrealEngine/tree/WaveWorks

Demo video: https://www.youtube.com/watch?v=DhrNvZLPBGE&list=PLN8o8XBheMDxCCfKijfZ3IlTP3cUCH6Jq&index=11&t=0s

## Cloud approach

A paper from EG 2020 with a very novel idea.
I will write an article to share the idea when I have time.
Below is a slightly adapted shader.
The number of raymarch steps is reduced many times over (at the cost of considerably more SDF samples).
I will post optimization ideas once I think of them.

https://www.shadertoy.com/view/WdKczW

### ASher's approach
https://zhuanlan.zhihu.com/p/107016039

https://www.bilibili.com/video/BV1FE411n7rU

https://www.bilibili.com/video/BV1L54y1271W

### Red Dead Redemption 2
Rendering amortized across frames

https://zhuanlan.zhihu.com/p/127435500

安泊霖's analysis:

https://zhuanlan.zhihu.com/p/91359071

### Horizon Zero Dawn
A PDF is stored in Youdao Cloud Notes.

https://zhuanlan.zhihu.com/p/97257247

https://zhuanlan.zhihu.com/p/57463381

### Cloud shadow implementation in UE4
https://zhuanlan.zhihu.com/p/130785313

## Other related material
### GPU Gems 1
https://developer.nvidia.com/gpugems/gpugems/foreword

http://http.download.nvidia.com/developer/GPU_Gems/CD_Image/Index.html

浅墨's summaries:

https://zhuanlan.zhihu.com/p/35974789

https://zhuanlan.zhihu.com/p/36499291

- Realistic Water Rendering
- Rendering Countless Blades of Waving Grass
- Rendering Water Caustics

57

03-UnrealEngine/Rendering/Shader/Effect/皮皮虾材质方案.md

Normal file

@@ -0,0 +1,57 @@
---
title: Mantis shrimp material plan
date: 2022-12-06 16:06:06
excerpt:
tags:
rating: ⭐
---

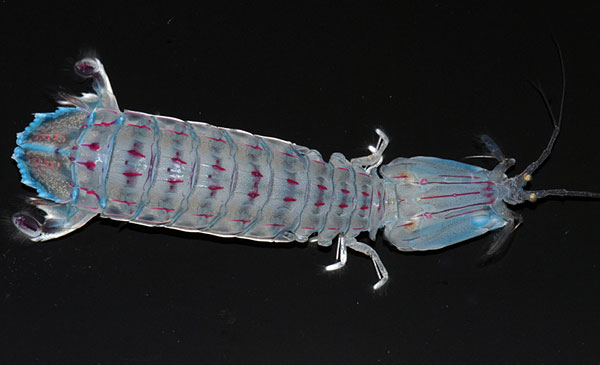

## Effect analysis

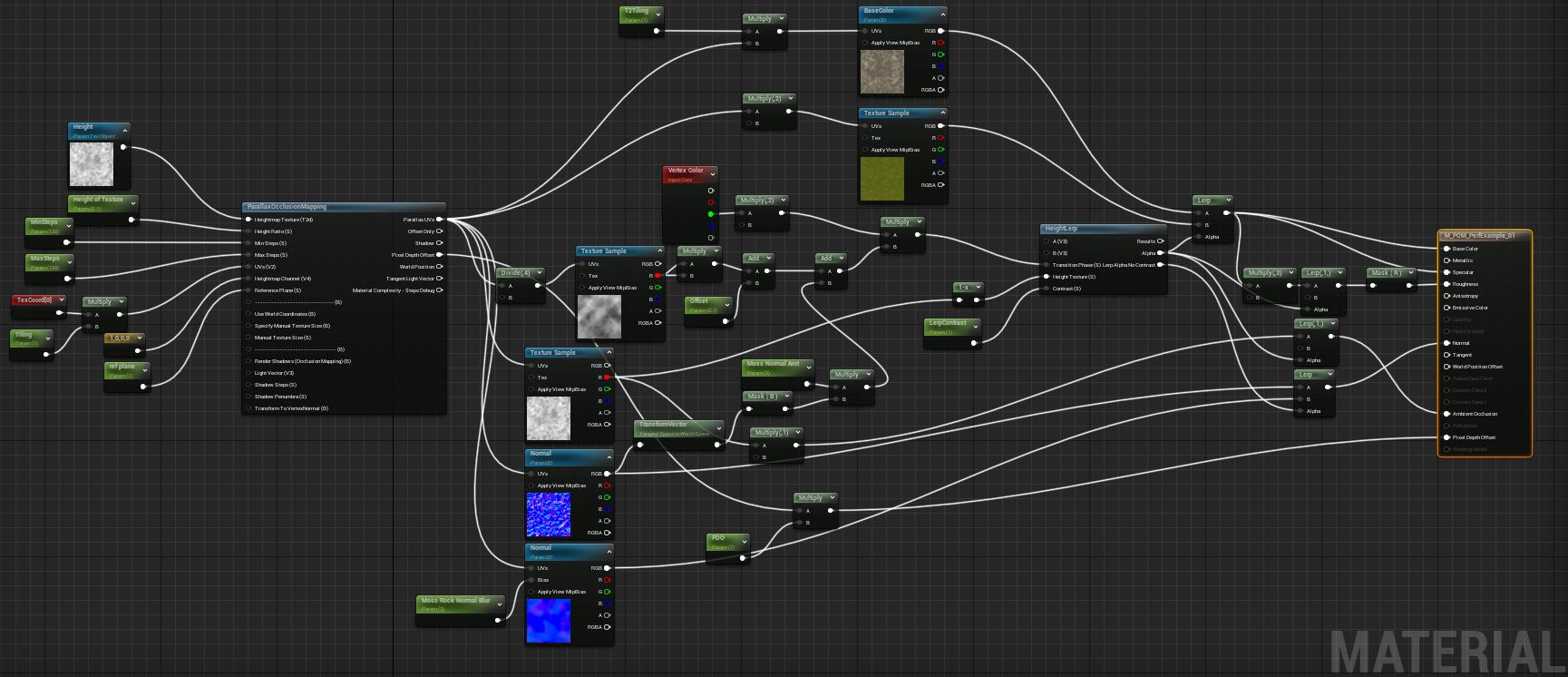

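Observation shows that the mantis shrimp consists of a transparent shell material on the outer surface and a flesh material inside. The structure is shown in the figure.

Solution:
- Outer shell material:
    - Opacity: use the thinnest area as the base opacity, then add the opacity obtained by multiplying the BumpOffset/POM HeightTexture sample (depth) by a parameter.
    - Roughness: very smooth, 0~0.2
    - Metallic: non-metal (we do not need reflections)
- Inner flesh material:
    - Offset the texture UVs with BumpOffset/POM.
    - Roughness: rough, 1.
    - Metallic: non-metal
- Middle layer
    - Glowing flakes: done through modeling. Place some small flakes inside and control them with a separate emissive material.
    - Fog effect: done through modeling. Place a few strips inside and give them a material resembling fog particles.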

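## Sense of depth between material layers
Two options are available; both create a parallax effect mainly by offsetting UVs:
1. BumpOffset
2. POM

POM has a considerable performance cost, but compared with BumpOffset it still looks good at shallow viewing angles. Areas where the effect is poor can be masked out to avoid visible artifacts. BumpOffset can therefore serve as the lower-cost fallback.

If neither method works, the only remaining option is to use two models, that is, one for the shell and one for the inner flesh (not recommended).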
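
The UV offset behind the cheaper of the two options can be sketched in a few lines. This is a generic single-step parallax offset in the spirit of BumpOffset, not the engine's exact node code. The parameter names mirror the node's inputs but are used here as assumptions.

```cpp
struct Float2 { float x, y; };

// Single-step parallax: shift the UV along the tangent-space view vector,
// scaled by how far the sampled height is from the reference plane.
Float2 BumpOffsetUV(Float2 uv, Float2 viewTangentXY, float height,
                    float heightRatio, float referencePlane) {
    const float offset = (height - referencePlane) * heightRatio;
    return {uv.x + viewTangentXY.x * offset, uv.y + viewTangentXY.y * offset};
}
```

Because only one height sample is taken, the offset is exact only for nearly flat height fields. POM replaces this single step with a ray march through the height field, which is where its extra cost comes from.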

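## Concrete plans
### Two-layer model
- The shell uses DefaultLit, Translucent
- The interior uses Subsurface

Drawback: the inner model needs skinning.

### MultiDraw
As of 5.1, MultiDraw supports rendering transparent effects with opaque materials. A single model + HeightTexture can therefore be combined with MultiDraw to draw the second material in a second pass.

Drawback: risk of visible artifacts.

### BumpOffset/POM
Try DefaultLit or the car-paint material for shell / inner flesh (parallax), using the lobster-man's eyes as a reference.

1. Implement the DefaultLit version
2. Study the car-paint material and implement it

Drawback: before version 5.1 the material cannot be made physically correct.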

118

03-UnrealEngine/Rendering/Shader/SNN_Kuwahara Filter.md

Normal file

118

03-UnrealEngine/Rendering/Shader/SNN_Kuwahara Filter.md

Normal file

@@ -0,0 +1,118 @@

|

||||

---

|

||||

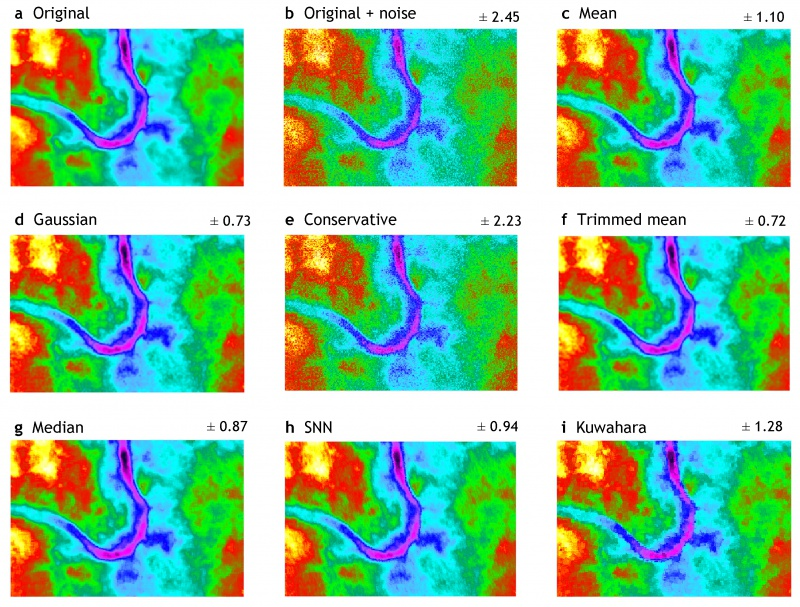

title: SNN/Kuwahara Filter

|

||||

date: 2022-10-19 13:44:01

|

||||

excerpt:

|

||||

tags: PostProcess

|

||||

rating: ⭐⭐

|

||||

---

|

||||

## Reference articles

## Kuwahara (the algorithm's inventor is Japanese)
Wiki: https://en.wikipedia.org/wiki/Kuwahara_filter

Overview of Kuwahara variants: https://zhuanlan.zhihu.com/p/354523349

- Kuwahara:
- Generalized Kuwahara filter:
- Anisotropic Kuwahara filter: https://www.shadertoy.com/view/ltyyDd
- Adaptive Kuwahara filter: https://link.springer.com/article/10.1007/s11760-015-0791-3
- Generalized Kuwahara: https://www.shadertoy.com/view/ltGcWD

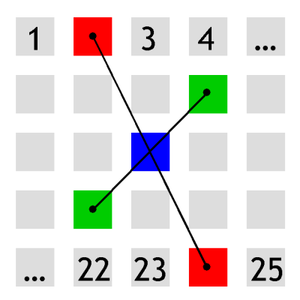

## SNN (Symmetric nearest neighbour filter)
Wiki: https://subsurfwiki.org/wiki/Symmetric_nearest_neighbour_filter

https://www.shadertoy.com/view/MlyfWd

The filter compares pixel pairs that lie in opposite directions from the center and, from each pair, selects the pixel whose value is closest to the center input pixel (see the figure). It then returns the average of the selected pixels. This lets it preserve more edge detail.

```glsl
// half_width and CalcDistance are not defined in this excerpt.
vec3 CalcSNN(in vec2 fragCoord) {
    vec2 src_size = iResolution.xy;
    vec2 inv_src_size = 1.0f / src_size;
    vec2 uv = fragCoord * inv_src_size;

    // Center pixel that every candidate is compared against.
    vec3 c0 = texture(iChannel0, uv).rgb;

    vec4 sum = vec4(0.0f, 0.0f, 0.0f, 0.0f);

    // Pairs on the center row: {(1,0)~(5,0)}
    for (int i = 1; i <= half_width; ++i) {
        vec3 c1 = texture(iChannel0, uv + vec2(+i, 0) * inv_src_size).rgb;
        vec3 c2 = texture(iChannel0, uv + vec2(-i, 0) * inv_src_size).rgb;

        float d1 = CalcDistance(c1, c0);
        float d2 = CalcDistance(c2, c0);
        // Keep whichever pixel of the symmetric pair is closer to the center color.
        if (d1 < d2) {
            sum.rgb += c1;
        } else {
            sum.rgb += c2;
        }
        sum.a += 1.0f;
    }

    // Pairs for the remaining rows: {(-5,1)~(5,5)}
    for (int j = 1; j <= half_width; ++j) {
        for (int i = -half_width; i <= half_width; ++i) {
            vec3 c1 = texture(iChannel0, uv + vec2(+i, +j) * inv_src_size).rgb;
            vec3 c2 = texture(iChannel0, uv + vec2(-i, -j) * inv_src_size).rgb;

            float d1 = CalcDistance(c1, c0);
            float d2 = CalcDistance(c2, c0);
            if (d1 < d2) {
                sum.rgb += c1;
            } else {
                sum.rgb += c2;
            }
            sum.a += 1.0f;
        }
    }

    // Average of the selected pixels.
    return sum.rgb / sum.a;
}
```
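
The same pair-selection logic can be checked on the CPU with a tiny grayscale image. The sketch below is a simplified port under a few assumptions: a single channel instead of RGB, absolute difference as the distance, and clamped borders. `GrayImage` and `SnnPixel` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct GrayImage {
    int width;
    int height;
    std::vector<float> pixels;  // row-major, width * height entries

    // Reads a pixel with coordinates clamped to the image borders.
    float At(int x, int y) const {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return pixels[static_cast<std::size_t>(y * width + x)];
    }
};

// Symmetric nearest neighbour filter for one pixel.
float SnnPixel(const GrayImage& img, int x, int y, int halfWidth) {
    const float c0 = img.At(x, y);
    float sum = 0.f;
    int count = 0;
    // From each symmetric pair, keep the sample closer in value to the center.
    auto pick = [&](int dx, int dy) {
        const float c1 = img.At(x + dx, y + dy);
        const float c2 = img.At(x - dx, y - dy);
        sum += (std::fabs(c1 - c0) < std::fabs(c2 - c0)) ? c1 : c2;
        ++count;
    };
    for (int i = 1; i <= halfWidth; ++i) pick(i, 0);
    for (int j = 1; j <= halfWidth; ++j)
        for (int i = -halfWidth; i <= halfWidth; ++i) pick(i, j);
    return sum / static_cast<float>(count);
}
```

On a hard vertical edge, pixels on either side keep their original value, because every pair contributes the sample from the pixel's own side. A box blur of the same size would smear the edge instead.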

### Bilateral filter
https://www.shadertoy.com/view/4dfGDH

https://zhuanlan.zhihu.com/p/180497579

A Gaussian filter weights samples by distance; a bilateral filter adds a color-difference weight on top of that.
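
The relationship between the two weights can be written down directly. This sketch assumes both the spatial and the color term use a Gaussian falloff, which is the usual textbook form. The sigma parameter names are illustrative.

```cpp
#include <cmath>

// Unnormalized Gaussian falloff for a distance d and standard deviation sigma.
float GaussianWeight(float d, float sigma) {
    return std::exp(-(d * d) / (2.f * sigma * sigma));
}

// Bilateral weight: the spatial Gaussian multiplied by a Gaussian on the color difference.
float BilateralWeight(float pixelDistance, float colorDifference,
                      float sigmaSpatial, float sigmaColor) {
    return GaussianWeight(pixelDistance, sigmaSpatial) *
           GaussianWeight(colorDifference, sigmaColor);
}
```

When the color difference is zero the bilateral weight equals the plain Gaussian weight, and it shrinks as the neighbor's color departs from the center, which is what keeps edges sharp.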

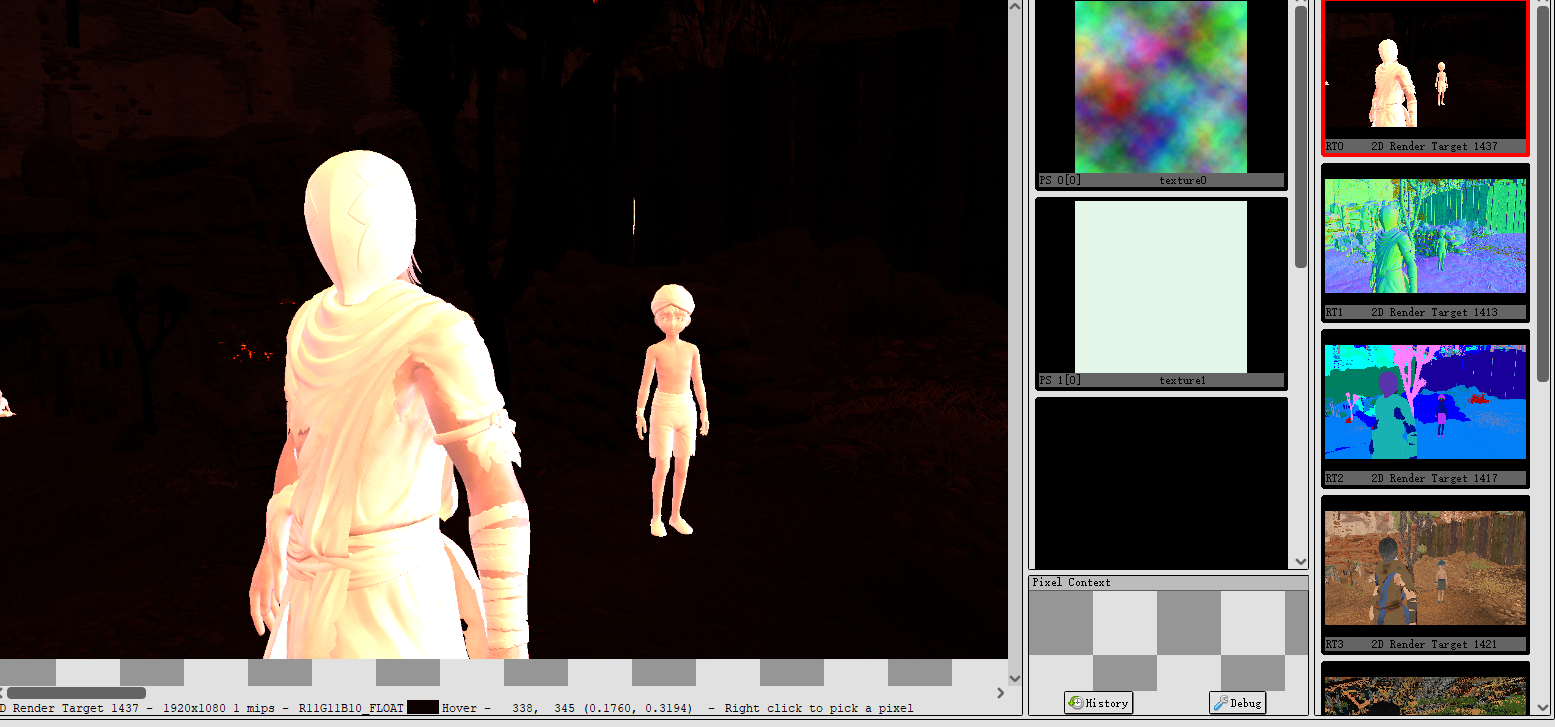

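### Tales of Arise post-processing

In the BasePass, characters are rendered in an odd yellow color.

- The R channel is 1
- The G channel is presumably the direct lighting result, because the scene lighting is recognizable in it.
- The B channel is presumably sky light + AO (very uncertain)
- The A channel is the character mask

G and B most likely store two kinds of data. Even so, the lighting for the whole scene and the characters is still rendered in the Lighting stage.

- 9650: everything before this is the BasePass
- 14046~14139: SSS effect on the face
- 14146: eyes
- 14258~14296: character hair
- 14318~14420:
- 14438~14589:
- 14620~14848: render the scene other than the characters
- 14868: start rendering the characters

The lighting pass uses many ramps.

- 15117~15159: sharpening. Smart Sharpen with a radius of 1 and 130% strength gives a similar result, but the sharpening is visibly stronger in the dark areas of the characters.
- 15182: SNN applied to the scene (characters are not processed)
- 15202: outlines. Normal + depth outlines.
- 15219: outline compositing.
- 15240: extract shadow detail.

Particles are rendered in between.

- 17738: LUT color adjustment
- 17758: looks somewhat like the result of `vec3 col = vec3(dot(u, u), dot(v, v), dot(u, v));` after computing Sobel-filtered u and v.
- 17776: seems to be an important step, but I cannot tell what it does
- 17790: edge blur

Draw the UI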

@@ -0,0 +1,71 @@
---
title: ScreenPercentage
date: 2022-11-09 10:32:14
excerpt:
tags:
rating: ⭐
---

## ScreenPercentage
Almost all occurrences relate to the ScreenPercentage setting of the post-process volume.
- ScreenPercentage: Scene.h
- ScreenPercentageInterface: SceneView.h
- ScreenPercentage: ShowFlag.h
- ScreenPercentage: TemporalAA.cpp, FTAAStandaloneCS::FParameters
- ScreenPercentage: PlanarReflectionComponent.h

The value is ultimately used in the TAA upsampling calculation.

## DPI
Use `UserInterfaceSettings->GetDPIScaleBasedOnSize(ViewportSize);` as a factor for the sampling step size.

```c++
float SGameLayerManager::GetGameViewportDPIScale() const
{
	const FSceneViewport* Viewport = SceneViewport.Get();

	if (Viewport == nullptr)
	{
		return 1;
	}

	const auto UserInterfaceSettings = GetDefault<UUserInterfaceSettings>(UUserInterfaceSettings::StaticClass());

	if (UserInterfaceSettings == nullptr)
	{
		return 1;
	}

	FIntPoint ViewportSize = Viewport->GetSize();
	float GameUIScale;

	if (bUseScaledDPI)
	{
		float DPIValue = UserInterfaceSettings->GetDPIScaleBasedOnSize(ScaledDPIViewportReference);
		float ViewportScale = FMath::Min((float)ViewportSize.X / (float)ScaledDPIViewportReference.X, (float)ViewportSize.Y / (float)ScaledDPIViewportReference.Y);

		GameUIScale = DPIValue * ViewportScale;
	}
	else
	{
		GameUIScale = UserInterfaceSettings->GetDPIScaleBasedOnSize(ViewportSize);
	}

	// Remove the platform DPI scale from the incoming size. Since the platform DPI is already
	// attempt to normalize the UI for a high DPI, and the DPI scale curve is based on raw resolution
	// for what a assumed platform scale of 1, extract that scale the calculated scale, since that will
	// already be applied by slate.
	const float FinalUIScale = GameUIScale / Viewport->GetCachedGeometry().Scale;

	return FinalUIScale;
}
```


```c++
// this function requires the UserInterfaceSettings header to be included
#include "Runtime/Engine/Classes/Engine/UserInterfaceSettings.h"

// this function can be marked as Blueprint Pure in its declaration, as it simply returns a float
float MyBPFL::GetUMG_DPI_Scale() {
	// need a variable here to pass to the GetViewportSize function
	FVector2D viewportSize;
	// as this function returns through the parameter, we just need to call it by passing in our FVector2D variable
	GEngine->GameViewport->GetViewportSize(viewportSize);

	// we need to floor the float values of the viewport size so we can pass those into the GetDPIScaleBasedOnSize function
	int32 X = FGenericPlatformMath::FloorToInt(viewportSize.X);
	int32 Y = FGenericPlatformMath::FloorToInt(viewportSize.Y);

	// the GetDPIScaleBasedOnSize function takes an FIntPoint, so we construct one out of the floored floats of the viewport
	// the function returns a float, so we can return the value out of our function here
	return GetDefault<UUserInterfaceSettings>(UUserInterfaceSettings::StaticClass())->GetDPIScaleBasedOnSize(FIntPoint(X,Y));
}
```

113

03-UnrealEngine/Rendering/Shader/UE GBuffer存储数据.md

Normal file

@@ -0,0 +1,113 @@
---
title: UE GBuffer stored data
date: 2022-10-11 13:07:27
excerpt:
tags: Rendering
rating: ⭐
---

https://zhuanlan.zhihu.com/p/400677108

| MRT | R | G | B | A |
| --- | --- | --- | --- | --- |
| MRT0 | SceneColor.r | SceneColor.g | SceneColor.b | Alpha (Opacity?) |
| MRT1 GBufferA | WorldNormal.r | WorldNormal.g | WorldNormal.b | PerObjectGBufferData |
| MRT2 GBufferB | Metallic | Specular | Roughness | ShadingModelId && SelectiveOutputMask |
| MRT3 GBufferC | BaseColor.r | BaseColor.g | BaseColor.b | IndirectIrradiance |
| MRT4 GBufferD (inserted on demand) | Velocity.r | Velocity.g | Velocity.b | Velocity.a |
| MRT5 GBufferE (becomes D when Velocity is not rendered) | CustomData.r | CustomData.g | CustomData.b | CustomData.a |
| MRT6 GBufferF (becomes E when Velocity is not rendered) | PrecomputedShadowFactors.r | PrecomputedShadowFactors.g | PrecomputedShadowFactors.b | PrecomputedShadowFactors.a |
| MRT7 | | | | |

## Depth Stencil
[0] sandbox bit (bit to be use by any rendering passes, but must be properly reset to 0 after using)

Used by STENCIL LOD dither transitions, post-processing, decals, and local lights to draw temporary masks.

**[1] unallocated**

**[2] unallocated**

[3] Temporal AA mask for translucent object.

Once marked, TAA no longer reads History data; this is used to handle TAA issues on translucent objects.

**Bits 1-3 are borrowed by mobile deferred shading to store the ShadingMode, yet three bits cannot cover the total number of ShadingModes, and it is unclear how this is handled. Because the bits are reused, it is not advisable to claim the two unallocated bits outright.**

[4] Lighting channels

[5] Lighting channels

[6] Lighting channels

[7] Primitive receive decal bit
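
The bit layout listed above can be expressed as masks for quick experiments. The constant and function names below are illustrative, not engine macros; only the bit positions come from the list.

```cpp
#include <cstdint>

// Bit positions taken from the stencil layout above; names are hypothetical.
constexpr std::uint8_t kSandboxBit          = 1u << 0;
constexpr std::uint8_t kTemporalAAMaskBit   = 1u << 3;
constexpr std::uint8_t kLightingChannelMask = 0x7u << 4;  // bits 4-6
constexpr std::uint8_t kReceiveDecalBit     = 1u << 7;

// Extracts the 3-bit lighting channel mask stored in bits 4-6.
std::uint8_t LightingChannels(std::uint8_t stencil) {
    return static_cast<std::uint8_t>((stencil & kLightingChannelMask) >> 4);
}

// True when the primitive is flagged to receive decals (bit 7).
bool ReceivesDecals(std::uint8_t stencil) {
    return (stencil & kReceiveDecalBit) != 0;
}
```

Seen this way, bits 1 and 2 are the only gap in the byte, which is why reusing them for custom data collides with the mobile deferred path mentioned in the note above.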

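## GBuffer
**SceneColorDeferred (scene color)**

RGBAHalf (mobile defaults to R11G11B10)

Emissive is written directly here; non-emissive color is written to BaseColor. The A channel stores the Alpha value as usual and is normally unused.

>**Genshin Impact merges it with BaseColor and uses Alpha to mark the emissive intensity. This forces the emissive color to equal BaseColor, which limits expressiveness. When a different color is needed, Genshin Impact overlays a translucent material instead.**

**With this method, the IndirectIrradiance * AO value originally stored in BaseColor.a needs a new location.**

**GBufferA (normal)**

R10G10B10A2

Mobile deferred encodes the normal into RG with octahedral encoding; B holds PrecomputedShadowFactors.x.

PC stores the normal directly in RGB. (A disabled branch can encode the normal into RG; doing so would free the 10-bit B channel, but mobile cannot use it. It would be better to move PrecomputedShadowFactors.x here, as on mobile. PrecomputedShadowFactors.x does not actually need 10 bits either, so 2 bits could be split off for special purposes.)

A is PerObjectGBufferData (bit1: CastContactShadow, bit2: HasDynamicIndirectShadowCasterRepresentation)

**GBufferB**

RGBA8888

Metallic, specular, roughness, ShadingModelID + SelectiveOutputMask (4 bits each; at most 16 Shading Mode values)

SelectiveOutputMask records whether the following macros were enabled at draw time:
- MATERIAL_USES_ANISOTROPY: disables the anisotropy calculation
- !GBUFFER_HAS_PRECSHADOWFACTOR: disables reading GBufferE data as precomputed shadows
- GBUFFER_HAS_PRECSHADOWFACTOR && WRITES_PRECSHADOWFACTOR_ZERO: when GBufferE is not read, the precomputed shadow is set to 0 if this value is 1, otherwise to 1.
- WRITES_VELOCITY_TO_GBUFFER: disables reading the velocity value from the GBuffer.

**When velocity is not written, the buffer contains 0, which is not the zero-velocity value, so this mask is required. If the velocity buffer were cleared to zero velocity instead, the mask should in principle be unnecessary and the bit could be used elsewhere.**

**I do not think specular deserves 8 bits; other data could be packed into it.**

> Another common practice is to store F0 in RGB so that SSR does not need to sample BaseColor, but then roughness needs another home (it can be stored in the B channel of the normal).

**GBufferC (BaseColor)**

RGBA8888

RGB stores the BaseColor of Lit objects. With static lighting, A stores the randomly dithered IndirectIrradiance * Material AO; otherwise it stores Material AO directly.

**GBufferD (custom data)**

**GBufferE (static shadows)**

**GBufferVelocity (velocity)**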

## Utility functions
Call in the LightingPass:
```c++
uint PixelShadingModelID = GetScreenSpaceData(ScreenUV).GBuffer.ShadingModelID;
```
Which ultimately resolves to:
```c++
FGBufferData GetGBufferData(float2 UV, bool bGetNormalizedNormal = true)
{
	float4 GBufferA = Texture2DSampleLevel(SceneTexturesStruct.GBufferATexture, SceneTexturesStruct_GBufferATextureSampler, UV, 0);
	float4 GBufferB = Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0);
	float4 GBufferC = Texture2DSampleLevel(SceneTexturesStruct.GBufferCTexture, SceneTexturesStruct_GBufferCTextureSampler, UV, 0);
	float4 GBufferD = Texture2DSampleLevel(SceneTexturesStruct.GBufferDTexture, SceneTexturesStruct_GBufferDTextureSampler, UV, 0);
	float4 ToonDataA = Texture2DSampleLevel(SceneTexturesStruct.ToonBufferATexture, SceneTexturesStruct_ToonBufferATextureSampler, UV, 0);
	float CustomNativeDepth = Texture2DSampleLevel(SceneTexturesStruct.CustomDepthTexture, SceneTexturesStruct_CustomDepthTextureSampler, UV, 0).r;

	int2 IntUV = (int2)trunc(UV * View.BufferSizeAndInvSize.xy);
	uint CustomStencil = SceneTexturesStruct.CustomStencilTexture.Load(int3(IntUV, 0)) STENCIL_COMPONENT_SWIZZLE;

	#if ALLOW_STATIC_LIGHTING
		float4 GBufferE = Texture2DSampleLevel(SceneTexturesStruct.GBufferETexture, SceneTexturesStruct_GBufferETextureSampler, UV, 0);
	#else
		float4 GBufferE = 1;
	#endif

	float4 GBufferF = Texture2DSampleLevel(SceneTexturesStruct.GBufferFTexture, SceneTexturesStruct_GBufferFTextureSampler, UV, 0);

	#if WRITES_VELOCITY_TO_GBUFFER
		float4 GBufferVelocity = Texture2DSampleLevel(SceneTexturesStruct.GBufferVelocityTexture, SceneTexturesStruct_GBufferVelocityTextureSampler, UV, 0);
	#else
		float4 GBufferVelocity = 0;
	#endif

	float SceneDepth = CalcSceneDepth(UV);
	return DecodeGBufferData(GBufferA, GBufferB, GBufferC, GBufferD, GBufferE, GBufferF, GBufferVelocity, ToonDataA, CustomNativeDepth, CustomStencil, SceneDepth, bGetNormalizedNormal, CheckerFromSceneColorUV(UV));
}
```

>GBuffer.ShadingModelID = DecodeShadingModelId(InGBufferB.a);
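
The quoted line decodes the shading model ID from the alpha channel of GBufferB, which these notes describe as 4 bits of ShadingModelID plus 4 bits of SelectiveOutputMask. A CPU sketch of that packing follows, assuming the ID occupies the low nibble of an 8-bit UNORM value. The function names echo the shader helper but this is not the engine source.

```cpp
#include <cmath>
#include <cstdint>

constexpr std::uint32_t kShadingModelIdMask = 0x0Fu;  // low 4 bits (assumed layout)

// Packs a 4-bit shading model ID and a 4-bit selective output mask into one UNORM8 channel.
float EncodeIdAndSelectiveMask(std::uint32_t shadingModelId, std::uint32_t selectiveMask) {
    const std::uint32_t value = (shadingModelId & kShadingModelIdMask) | (selectiveMask & 0xF0u);
    return static_cast<float>(value) / 255.f;
}

// Recovers the integer byte from the normalized channel value.
static std::uint32_t ToByte(float channel) {
    return static_cast<std::uint32_t>(std::lround(channel * 255.f));
}

std::uint32_t DecodeShadingModelIdSketch(float channel) {
    return ToByte(channel) & kShadingModelIdMask;
}

std::uint32_t DecodeSelectiveOutputMaskSketch(float channel) {
    return ToByte(channel) & 0xF0u;
}
```

Under this layout the upper nibble is fully occupied by the mask, so freeing one of its flags (for example by clearing velocity to the zero-velocity value, as suggested earlier in these notes) is the only way to gain a spare bit in this channel.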

83

03-UnrealEngine/Rendering/Shader/UE4.25传统渲染方式的代码迁移指南.md

Normal file

@@ -0,0 +1,83 @@
---
title: UE4.25 migration guide for code using the legacy rendering approach
date: 2020-11-10 13:55:15
tags: Shader
rating: ⭐️
---
## Preface
The GlobalShader calling code changed somewhat in 4.25. After finishing the RDG example, I also wrote a usage example for GlobalShader. For details, see my plugin:

[https://github.com/blueroseslol/BRPlugins](https://github.com/blueroseslol/BRPlugins)

The code is in SimpleGlobalShader.cpp.

## Wrap variables with the LAYOUT_FIELD macro
A newly defined GlobalShader base class used for setting variables must be declared with the **DECLARE_INLINE_TYPE_LAYOUT**(FYourShaderName,NonVirtual) macro. There is also the **DECLARE_TYPE_LAYOUT**(FYourShaderName,NonVirtual) macro; I am not sure how it differs, but it should serve the same purpose.

A complete example:

```
class FSimpleGlobalShader : public FGlobalShader
{
	DECLARE_INLINE_TYPE_LAYOUT(FSimpleGlobalShader, NonVirtual);
public:
	static bool ShouldCompilePermutation(const FGlobalShaderPermutationParameters& Parameters)
	{
		return IsFeatureLevelSupported(Parameters.Platform, ERHIFeatureLevel::SM5);
	}

	static void ModifyCompilationEnvironment(const FGlobalShaderPermutationParameters& Parameters, FShaderCompilerEnvironment& OutEnvironment)
	{
		FGlobalShader::ModifyCompilationEnvironment(Parameters, OutEnvironment);
		OutEnvironment.SetDefine(TEXT("TEST_MICRO"), 1);
	}

	FSimpleGlobalShader(){}

	FSimpleGlobalShader(const ShaderMetaType::CompiledShaderInitializerType& Initializer):FGlobalShader(Initializer)
	{
		SimpleColorVal.Bind(Initializer.ParameterMap, TEXT("SimpleColor"));
		TextureVal.Bind(Initializer.ParameterMap, TEXT("TextureVal"));
		TextureSampler.Bind(Initializer.ParameterMap, TEXT("TextureSampler"));
	}

	template<typename TRHIShader>
	void SetParameters(FRHICommandList& RHICmdList,TRHIShader* ShaderRHI,const FLinearColor &MyColor, FTexture2DRHIRef InInputTexture)
	{
		SetShaderValue(RHICmdList, ShaderRHI, SimpleColorVal, MyColor);
		SetTextureParameter(RHICmdList, ShaderRHI, TextureVal, TextureSampler,TStaticSamplerState<SF_Trilinear,AM_Clamp,AM_Clamp,AM_Clamp>::GetRHI(), InInputTexture);
	}

private:
	LAYOUT_FIELD(FShaderResourceParameter, TextureVal);
	LAYOUT_FIELD(FShaderResourceParameter, TextureSampler);
	LAYOUT_FIELD(FShaderParameter, SimpleColorVal);
};
```


The second argument is Abstract or NonVirtual, depending on whether the class has virtual functions. The engine source almost always uses NonVirtual.

Another, less common macro is **DECLARE_EXPORTED_TYPE_LAYOUT**(FYourShaderName, YOUR_API, Virtual), which declares a base class meant to be inherited by other classes. See the UE4 source for usage details.

Shader variables must be wrapped with the LAYOUT_FIELD macro. The first argument is the type: ordinary types are declared as FShaderParameter, while Texture2d and Sampler are declared as FShaderResourceParameter. For example:
```
LAYOUT_FIELD(FShaderParameter, SimpleColorVal);
LAYOUT_FIELD(FShaderResourceParameter, TextureVal);
LAYOUT_FIELD(FShaderResourceParameter, TextureSampler);
```
## Shader declaration change
The declaration macro changes from DECLARE_SHADER_TYPE(FSimplePixelShader, Global) to DECLARE_GLOBAL_SHADER(FSimplePixelShader).

## Remove serialization functions
Remove the virtual bool Serialize(FArchive& Ar) override function.

The LAYOUT_FIELD macro implements this functionality.

## FImageWriteTask
FImageWriteTask now accepts TArray64 data instead of TArray. If you want to pass TArray data, you can write:
```
TArray<FColor> OutBMP;
// Fill OutBMP somehow
TUniquePtr<TImagePixelData<FColor>> PixelData = MakeUnique<TImagePixelData<FColor>>(DestSize);
PixelData->Pixels = OutBMP;
ImageTask->PixelData = MoveTemp(PixelData);
```

175

03-UnrealEngine/Rendering/Shader/UE的透明渲染功能笔记.md

Normal file

@@ -0,0 +1,175 @@
---
title: Notes on UE translucent rendering
date: 2022-10-30 21:36:08
excerpt:
tags: Rendering
rating: ⭐
---