
03-UnrealEngine/卡通渲染相关资料/渲染功能/PostProcess/ToonPostProcess.md


---
title: ToonPostProcess
date: 2024-05-15 16:50:13
excerpt:
tags:
rating: ⭐
---

# FFT

# Bloom

Bloom is mainly divided into:

- Bloom
- FFTBloom
- LensFlares

`BloomThreshold`: ClampMin = "-1.0", UIMax = "8.0".

The relevant logic:

```c++
if (bBloomSetupRequiredEnabled)
{
    const float BloomThreshold = View.FinalPostProcessSettings.BloomThreshold;

    FBloomSetupInputs SetupPassInputs;
    SetupPassInputs.SceneColor = DownsampleInput;
    SetupPassInputs.EyeAdaptationBuffer = EyeAdaptationBuffer;
    SetupPassInputs.EyeAdaptationParameters = &EyeAdaptationParameters;
    SetupPassInputs.LocalExposureParameters = &LocalExposureParameters;
    SetupPassInputs.LocalExposureTexture = CVarBloomApplyLocalExposure.GetValueOnRenderThread() ? LocalExposureTexture : nullptr;
    SetupPassInputs.BlurredLogLuminanceTexture = LocalExposureBlurredLogLumTexture;
    SetupPassInputs.Threshold = BloomThreshold;
    // Toon modification: extra threshold taken from the custom ToonBloomThreshold post-process setting
    SetupPassInputs.ToonThreshold = View.FinalPostProcessSettings.ToonBloomThreshold;

    DownsampleInput = AddBloomSetupPass(GraphBuilder, View, SetupPassInputs);
}
```
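
For intuition about what `Threshold` does in the setup pass, here is a minimal CPU sketch of a luminance-based bloom threshold. It is modeled on the UE4-era bloom setup shader as I recall it (exposed luminance minus threshold, remapped with `saturate(x / 2)`) and on the weights of UE's `Luminance()` helper (0.3, 0.59, 0.11). The current UE5 shader may differ, the names `Rgb` and `BloomSetupThreshold` are hypothetical, and how the custom `ToonThreshold` is applied is not shown in these notes, so it is left out.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical stand-in for a float3 scene color.
struct Rgb { float R, G, B; };

// Luminance with the weights of UE's Luminance() helper (assumed).
inline float Luminance(const Rgb& C)
{
    return C.R * 0.3f + C.G * 0.59f + C.B * 0.11f;
}

// Sketch of a bloom-setup threshold: pixels whose exposed luminance is below
// Threshold contribute nothing; above it the contribution ramps up linearly
// over two luminance units, after which the full color passes through.
inline Rgb BloomSetupThreshold(const Rgb& SceneColor, float Threshold, float ExposureScale)
{
    assert(ExposureScale >= 0.0f);
    const float TotalLuminance = Luminance(SceneColor) * ExposureScale;
    const float BloomLuminance = TotalLuminance - Threshold;
    const float BloomAmount = std::clamp(BloomLuminance / 2.0f, 0.0f, 1.0f); // saturate()
    return { SceneColor.R * BloomAmount, SceneColor.G * BloomAmount, SceneColor.B * BloomAmount };
}
```

Raising the threshold pushes more mid-tones into the zero region; with this remap, only pixels whose luminance exceeds the threshold by two units pass through at full strength.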

## FFTBloom

***The standard Bloom algorithm can only produce round glare spots; custom-shaped glare requires FFTBloom.***

- FFT Bloom: https://zhuanlan.zhihu.com/p/611582936
- Unity FFT Bloom: https://github.com/AKGWSB/FFTConvolutionBloom

|

||||

|

||||

### 频域与卷积定理

|

||||

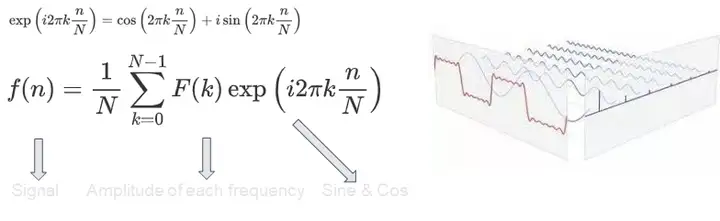

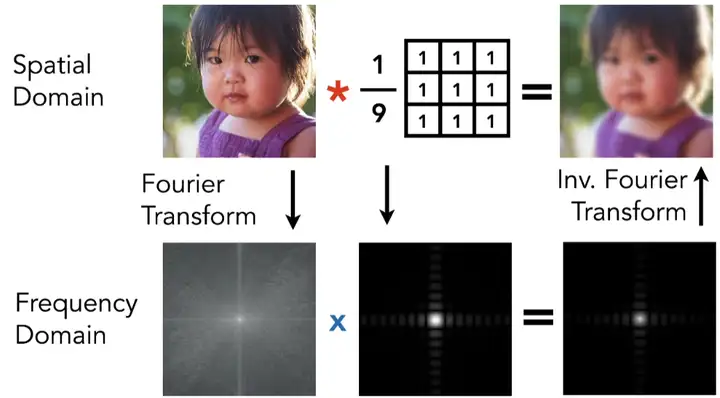

图像可以视为二维的信号,而一个信号可以通过 **不同频率** 的 Sine & Cosine 函数的线性叠加来近似得到。对于每个频率的函数,我们乘以一个常数振幅并叠加到最终的结果上,这些振幅叫做 **频谱**。值得注意的是所有的 F_k 都是 **复数**:
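
The figures are not reproduced here. In standard notation (1-D, N samples; the symbols are my own choice and may not match the original figure), the expansion described above is the discrete Fourier transform pair. Euler's formula shows why each F_k is complex: it packs the cosine and sine amplitudes of frequency k into a single number.

$$
F_k = \sum_{n=0}^{N-1} f_n \, e^{-i 2\pi k n / N}, \qquad
f_n = \frac{1}{N} \sum_{k=0}^{N-1} F_k \, e^{\,i 2\pi k n / N}, \qquad
e^{i\theta} = \cos\theta + i\sin\theta
$$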

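The amplitude at each frequency then no longer represents a single time-domain (for images, spatial-domain) sample; it represents the **overall** contribution of that frequency's sine and cosine functions to the whole signal. Because the frequency-domain signal contains all of the information in the input image, ***the convolution theorem tells us that convolving in the time domain is equivalent to a simple element-wise complex multiplication, in the frequency domain, of the spectra of the source image and the filter-kernel image (the coefficients V_k in the figure above).***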
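
Written out for 1-D signals of length N with circular (wrap-around) indexing, which is what the DFT implies. Here G_k is my notation for the spectrum of the kernel g, standing in for the V_k of the figure:

$$
(f * g)_n = \sum_{m=0}^{N-1} f_m \, g_{(n-m) \bmod N}
\quad\Longrightarrow\quad
\mathcal{F}\{f * g\}_k = F_k \, G_k,
\qquad
f * g = \mathcal{F}^{-1}\{F_k \, G_k\}
$$

In 2-D the same relation holds per frequency pair (u, v).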

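Element-wise multiplication is much cheaper than naive convolution, which loops and accumulates over the whole kernel for every output pixel. For an N-pixel image and a K-pixel kernel, direct convolution costs O(N·K), while the FFT route costs O(N log N) for the forward and inverse transforms plus O(N) for the multiplication, so it wins for large kernels. The next goal is therefore to find a way to connect an image signal with its frequency domain. In communications, the Fourier transform is the usual tool for converting signals between the time and frequency domains.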
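
The convolution theorem can be checked numerically. This small self-contained C++ sketch works in 1-D: it convolves two signals directly (circular convolution), then again by multiplying their spectra, and the two results match. For brevity it uses a naive O(N²) DFT instead of a real FFT. UE's FFT bloom runs GPU FFT passes over 2-D images, which this does not attempt to model, and all names here are my own.

```cpp
#include <cassert>
#include <complex>
#include <cstddef>
#include <vector>

using Complex = std::complex<double>;
using Signal = std::vector<Complex>;

// Naive O(N^2) DFT. With Inverse = true it computes the inverse DFT
// (positive exponent, result scaled by 1/N).
Signal Dft(const Signal& In, bool Inverse = false)
{
    const std::size_t N = In.size();
    const double Pi = 3.14159265358979323846;
    const double Sign = Inverse ? 1.0 : -1.0;
    Signal Out(N);
    for (std::size_t k = 0; k < N; ++k)
    {
        Complex Sum = 0.0;
        for (std::size_t n = 0; n < N; ++n)
        {
            const double Angle = Sign * 2.0 * Pi * double(k * n) / double(N);
            Sum += In[n] * std::polar(1.0, Angle);
        }
        Out[k] = Inverse ? Sum / double(N) : Sum;
    }
    return Out;
}

// Direct circular convolution: (f * g)[n] = sum_m f[m] * g[(n - m) mod N].
Signal CircularConvolve(const Signal& F, const Signal& G)
{
    const std::size_t N = F.size();
    assert(G.size() == N);
    Signal Out(N, 0.0);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t m = 0; m < N; ++m)
            Out[n] += F[m] * G[(n + N - m) % N];
    return Out;
}

// Convolution through the convolution theorem: IDFT(DFT(f) * DFT(g)).
Signal ConvolveViaSpectrum(const Signal& F, const Signal& G)
{
    assert(F.size() == G.size());
    Signal SpecF = Dft(F);
    const Signal SpecG = Dft(G);
    for (std::size_t k = 0; k < SpecF.size(); ++k)
        SpecF[k] *= SpecG[k]; // element-wise complex multiplication
    return Dft(SpecF, /*Inverse=*/true);
}
```

Swapping `Dft` for an FFT changes only the cost of the two transforms, not the result.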

### Related Code

- C++
  - AddFFTBloomPass()
  - FBloomFinalizeApplyConstantsCS (the Bloom computation is finalized here)
  - AddTonemapPass(): `PassInputs.Bloom = Bloom` and `PassInputs.SceneColorApplyParamaters`
- Shader

**FBloomFindKernelCenterCS**: finds the center of the Bloom kernel, i.e. the brightest pixel in the texture, and records its position. The center region is found mainly by computing luminance. There can be several center regions here, which also means the final output SceneColor can contain several bright-spot halo (Bloom) effects.
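
The brightest-pixel search described above reduces to an argmax over luminance. Below is a minimal single-threaded C++ sketch. It assumes a row-major RGB float image and the weights of UE's `Luminance()` helper (0.3, 0.59, 0.11). The real compute shader runs this as a parallel reduction on the GPU, which is not modeled here. Finding several separate centers (several local maxima) is also not covered, and the names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Rgb { float R, G, B; };
struct PixelCoord { std::size_t X, Y; };

// Luminance with the weights of UE's Luminance() helper (assumed).
inline float Luminance(const Rgb& C) { return C.R * 0.3f + C.G * 0.59f + C.B * 0.11f; }

// Returns (x, y) of the brightest pixel in a row-major image of the given width.
// Ties keep the first pixel encountered.
PixelCoord FindBrightestPixel(const std::vector<Rgb>& Pixels, std::size_t Width)
{
    assert(Width > 0 && !Pixels.empty() && Pixels.size() % Width == 0);
    std::size_t BestIndex = 0;
    float BestLuminance = Luminance(Pixels[0]);
    for (std::size_t i = 1; i < Pixels.size(); ++i)
    {
        const float L = Luminance(Pixels[i]);
        if (L > BestLuminance)
        {
            BestLuminance = L;
            BestIndex = i;
        }
    }
    return { BestIndex % Width, BestIndex / Width };
}
```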

# Utility Code

The following code is in DeferredShadingCommon.ush:

```c++
// @param UV - UV space in the GBuffer textures (BufferSize resolution)
FGBufferData GetGBufferData(float2 UV, bool bGetNormalizedNormal = true)
{
#if GBUFFER_REFACTOR
    return DecodeGBufferDataUV(UV,bGetNormalizedNormal);
#else
    float4 GBufferA = Texture2DSampleLevel(SceneTexturesStruct.GBufferATexture, SceneTexturesStruct_GBufferATextureSampler, UV, 0);
    float4 GBufferB = Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0);
    float4 GBufferC = Texture2DSampleLevel(SceneTexturesStruct.GBufferCTexture, SceneTexturesStruct_GBufferCTextureSampler, UV, 0);
    float4 GBufferD = Texture2DSampleLevel(SceneTexturesStruct.GBufferDTexture, SceneTexturesStruct_GBufferDTextureSampler, UV, 0);
    float CustomNativeDepth = Texture2DSampleLevel(SceneTexturesStruct.CustomDepthTexture, SceneTexturesStruct_CustomDepthTextureSampler, UV, 0).r;

    // BufferToSceneTextureScale is necessary when translucent materials are rendered in a render target
    // that has a different resolution than the scene color textures, e.g. r.SeparateTranslucencyScreenPercentage < 100.
    int2 IntUV = (int2)trunc(UV * View.BufferSizeAndInvSize.xy * View.BufferToSceneTextureScale.xy);
    uint CustomStencil = SceneTexturesStruct.CustomStencilTexture.Load(int3(IntUV, 0)) STENCIL_COMPONENT_SWIZZLE;

#if ALLOW_STATIC_LIGHTING
    float4 GBufferE = Texture2DSampleLevel(SceneTexturesStruct.GBufferETexture, SceneTexturesStruct_GBufferETextureSampler, UV, 0);
#else
    float4 GBufferE = 1;
#endif

    float4 GBufferF = Texture2DSampleLevel(SceneTexturesStruct.GBufferFTexture, SceneTexturesStruct_GBufferFTextureSampler, UV, 0);

#if WRITES_VELOCITY_TO_GBUFFER
    float4 GBufferVelocity = Texture2DSampleLevel(SceneTexturesStruct.GBufferVelocityTexture, SceneTexturesStruct_GBufferVelocityTextureSampler, UV, 0);
#else
    float4 GBufferVelocity = 0;
#endif

    float SceneDepth = CalcSceneDepth(UV);

    return DecodeGBufferData(GBufferA, GBufferB, GBufferC, GBufferD, GBufferE, GBufferF, GBufferVelocity, CustomNativeDepth, CustomStencil, SceneDepth, bGetNormalizedNormal, CheckerFromSceneColorUV(UV));
#endif
}

// Minimal path for just the lighting model, used to branch around unlit pixels (skybox)
uint GetShadingModelId(float2 UV)
{
    return DecodeShadingModelId(Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0).a);
}
```

## ShadingModel Check

```c++
// Returns true if the GBuffer shading model at UV is one of the models treated as toon here.
bool IsToonShadingModel(float2 UV)
{
    uint ShadingModel = DecodeShadingModelId(Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0).a);
    return ShadingModel == SHADINGMODELID_TOONSTANDARD
        || ShadingModel == SHADINGMODELID_PREINTEGRATED_SKIN;
}
```

PS. The shader's parameters must include FSceneTextureShaderParameters / FSceneTextureUniformParameters. The uniform buffer is exposed to HLSL as `SceneTexturesStruct`, which is what the functions above sample from. The relevant engine declarations:

```c++
IMPLEMENT_STATIC_UNIFORM_BUFFER_STRUCT(FSceneTextureUniformParameters, "SceneTexturesStruct", SceneTextures);

BEGIN_SHADER_PARAMETER_STRUCT(FSceneTextureShaderParameters, ENGINE_API)
    SHADER_PARAMETER_RDG_UNIFORM_BUFFER(FSceneTextureUniformParameters, SceneTextures)
    SHADER_PARAMETER_RDG_UNIFORM_BUFFER(FMobileSceneTextureUniformParameters, MobileSceneTextures)
END_SHADER_PARAMETER_STRUCT()
```

# ToneMapping

- UE5 official documentation, High Dynamic Range Display Output: https://dev.epicgames.com/documentation/en-us/unreal-engine/high-dynamic-range-display-output-in-unreal-engine?application_version=5.3
- ACES official documentation: https://docs.acescentral.com/#aces-white-point-derivation
- https://modelviewer.dev/examples/tone-mapping
- [sRGB 18% Gray in Modern Game Graphics: Defining Neutral Gray](https://zhuanlan.zhihu.com/p/654557489)
- Post-Processing in Games (3): Rendering Pipeline, ACES, Tonemapping and HDR: https://zhuanlan.zhihu.com/p/118272193

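## ACES Color Spaces

The ACES standard defines the following gamuts and color spaces.

Gamuts:

- AP0: a gamut that contains all (visible) colors
- AP1: the working gamut

Color spaces:

- ACES2065-1 (the ACES color space): uses the AP0 gamut; used to store colors and to handle conversions between color spaces
- ACEScg: uses the AP1 gamut; a linear working space for rendering computations
- ACEScc: uses the AP1 gamut with a logarithmic encoding; used for color grading
- ACEScct: uses the AP1 gamut; similar to ACEScc, but its curve differs slightly (a linear toe near black), which suits different grading workflows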
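
To make the ACEScc/ACEScct difference concrete: both encode linear AP1 values with the same log2 curve through the mid-tones, and ACEScct replaces the bottom of the curve with a straight-line toe. The C++ sketch below uses the constants from the published ACEScc and ACEScct specifications. They are quoted from memory, so verify them against the ACES documentation linked above. The function names are my own.

```cpp
#include <cassert>
#include <cmath>

// ACEScc: pure log2 encoding of a linear AP1 value, with special handling near and below zero.
double LinearToACEScc(double Lin)
{
    const double TwoPowMinus16 = std::pow(2.0, -16.0);
    const double TwoPowMinus15 = std::pow(2.0, -15.0);
    if (Lin <= 0.0)
        return (std::log2(TwoPowMinus16) + 9.72) / 17.52;
    if (Lin < TwoPowMinus15)
        return (std::log2(TwoPowMinus16 + Lin * 0.5) + 9.72) / 17.52;
    return (std::log2(Lin) + 9.72) / 17.52;
}

// ACEScct: identical to ACEScc above the break point, but linear ("toe") below it.
double LinearToACEScct(double Lin)
{
    const double XBreak = 0.0078125; // 2^-7
    const double A = 10.5402377416545;
    const double B = 0.0729055341958355;
    if (Lin <= XBreak)
        return A * Lin + B;
    return (std::log2(Lin) + 9.72) / 17.52;
}
```

The two curves meet at the break point (2^-7); below it ACEScct lifts the shadows, which is why colorists find it gentler to grade near black.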

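## The ACES Pipeline in UE5

The ACES Viewing Transform is applied in the viewing pipeline in the following order:

- **Look Modification Transform (LMT)**: takes an ACES-encoded image to which a creative "look" (color grading and correction) has been applied, and outputs an image that is then rendered through ACES, the Reference Rendering Transform (RRT), and the Output Device Transform (ODT).
- **Reference Rendering Transform (RRT)**: next, takes the scene-referred color values and converts them to display-referred values. In this pipeline the rendered image is no longer tied to a particular display; instead it keeps the correct, wide gamut and dynamic range available for output to a specific display, including displays that may not exist yet.
- **Output Device Transform (ODT)**: finally, takes the HDR output of the RRT and matches it to the different devices and color spaces they can display. Each target therefore needs its own ODT (Rec709, Rec2020, DCI-P3, etc.).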

## Types of Tonemapping

- ShaderToy demo:
- https://www.shadertoy.com/view/McG3WW
- ACES
- Narkowicz 2015, "ACES Filmic Tone Mapping Curve"
- https://knarkowicz.wordpress.com/2016/01/06/aces-filmic-tone-mapping-curve/
- PBR Neutral: https://modelviewer.dev/examples/tone-mapping
- Uncharted tonemapping
- http://filmicworlds.com/blog/filmic-tonemapping-operators/
- https://www.gdcvault.com/play/1012351/Uncharted-2-HDR
- AgX
- https://github.com/sobotka/AgX
- https://www.shadertoy.com/view/cd3XWr
- https://www.shadertoy.com/view/lslGzl
- https://www.shadertoy.com/view/Xstyzn
- GT-ToneMapping: https://github.com/yaoling1997/GT-ToneMapping
- Curve: https://www.desmos.com/calculator/gslcdxvipg?lang=zh-CN
- CCA-ToneMapping: ?
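
Two of the curves listed above are short enough to compare directly. The C++ sketch below writes them in scalar form: Narkowicz's rational fit of the ACES filmic curve, and Hable's Uncharted 2 curve with the constants and white point (11.2) from his blog post, both as I remember them from the linked articles. Per-channel application, any exposure pre-scale, and the function names are my own assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Narkowicz 2015 rational fit of the ACES filmic curve (input: exposed linear value).
float ACESFilmNarkowicz(float X)
{
    const float A = 2.51f, B = 0.03f, C = 2.43f, D = 0.59f, E = 0.14f;
    return std::clamp((X * (A * X + B)) / (X * (C * X + D) + E), 0.0f, 1.0f);
}

// Hable's Uncharted 2 filmic curve (shoulder, linear, toe parameters A..F).
float HableCurve(float X)
{
    const float A = 0.15f, B = 0.50f, C = 0.10f, D = 0.20f, E = 0.02f, F = 0.30f;
    return ((X * (A * X + C * B) + D * E) / (X * (A * X + B) + D * F)) - E / F;
}

// Normalizes the Hable curve so that the linear white point maps to 1.
float Uncharted2Filmic(float X, float WhitePoint = 11.2f)
{
    return HableCurve(X) / HableCurve(WhitePoint);
}
```

The ACES fit saturates (clamps to 1) on its own, while the Hable curve never reaches 1 by itself and needs the white-point normalization.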

## Implementation in UE

Notes for the UE4 version: [[UE4 ToneMapping]]

FilmToneMap() in TonemapCommon.ush is called from CombineLUTsCommon(). The order is:

1. AddCombineLUTPass() => PostProcessCombineLUTs.usf
2. AddTonemapPass() => PostProcessTonemap.usf

```c++
void AddPostProcessingPasses()
{
    ...
    {
        FRDGTextureRef ColorGradingTexture = nullptr;

        if (bPrimaryView)
        {
            ColorGradingTexture = AddCombineLUTPass(GraphBuilder, View);
        }
        // We can re-use the color grading texture from the primary view.
        else if (View.GetTonemappingLUT())
        {
            ColorGradingTexture = TryRegisterExternalTexture(GraphBuilder, View.GetTonemappingLUT());
        }
        else
        {
            const FViewInfo* PrimaryView = static_cast<const FViewInfo*>(View.Family->Views[0]);
            ColorGradingTexture = TryRegisterExternalTexture(GraphBuilder, PrimaryView->GetTonemappingLUT());
        }

        FTonemapInputs PassInputs;
        PassSequence.AcceptOverrideIfLastPass(EPass::Tonemap, PassInputs.OverrideOutput);
        PassInputs.SceneColor = SceneColorSlice;
        PassInputs.Bloom = Bloom;
        PassInputs.SceneColorApplyParamaters = SceneColorApplyParameters;
        PassInputs.LocalExposureTexture = LocalExposureTexture;
        PassInputs.BlurredLogLuminanceTexture = LocalExposureBlurredLogLumTexture;
        PassInputs.LocalExposureParameters = &LocalExposureParameters;
        PassInputs.EyeAdaptationParameters = &EyeAdaptationParameters;
        PassInputs.EyeAdaptationBuffer = EyeAdaptationBuffer;
        PassInputs.ColorGradingTexture = ColorGradingTexture;
        PassInputs.bWriteAlphaChannel = AntiAliasingMethod == AAM_FXAA || bProcessSceneColorAlpha;
        PassInputs.bOutputInHDR = bTonemapOutputInHDR;

        SceneColor = AddTonemapPass(GraphBuilder, View, PassInputs);
    }
    ...
}
```

Passing the scene textures to a pass:

- Declaration in the pass's parameter struct: `SHADER_PARAMETER_STRUCT_INCLUDE(FSceneTextureShaderParameters, SceneTextures)`
- Assignment: `CommonPassParameters.SceneTextures = SceneTextures.GetSceneTextureShaderParameters(View.FeatureLevel);`

## PostProcessCombineLUTs.usf

The related variables are updated in FCachedLUTSettings::GetCombineLUTParameters().

## PostProcessTonemap.usf

## Implementation Approach

```c++
//BlueRose Modify
FGBufferData SamplerBuffer = GetGBufferData(UV * View.ResolutionFractionAndInv.x, false);
// Pixels with CustomStencil > 1 whose custom depth is within 1 unit of the scene depth,
// i.e. the stenciled object is the visible surface at this pixel.
if (SamplerBuffer.CustomStencil > 1.0f && abs(SamplerBuffer.CustomDepth - SamplerBuffer.Depth) < 1)
{
    // Both branches currently run the standard tonemapper; this branch is the hook for toon-specific handling.
    // OutColor = SampleSceneColor(UV);
    OutColor = TonemapCommonPS(UV, InVignette, GrainUV, ScreenPos, FullViewUV, SvPosition, Luminance);
}
else
{
    OutColor = TonemapCommonPS(UV, InVignette, GrainUV, ScreenPos, FullViewUV, SvPosition, Luminance);
}
//BlueRose Modify End
```

### TextureArray Reference

FIESAtlasAddTextureCS::FParameters:

- SHADER_PARAMETER_TEXTURE_ARRAY
- /Engine/Private/IESAtlas.usf

```c++
static void AddSlotsPassCS(
    FRDGBuilder& GraphBuilder,
    FGlobalShaderMap* ShaderMap,
    const TArray<FAtlasSlot>& Slots,
    FRDGTextureRef& OutAtlas)
{
    FRDGTextureUAVRef AtlasTextureUAV = GraphBuilder.CreateUAV(OutAtlas);
    TShaderMapRef<FIESAtlasAddTextureCS> ComputeShader(ShaderMap);

    // Batch new slots into several passes
    const uint32 SlotCountPerPass = 8u;
    const uint32 PassCount = FMath::DivideAndRoundUp(uint32(Slots.Num()), SlotCountPerPass);
    for (uint32 PassIt = 0; PassIt < PassCount; ++PassIt)
    {
        const uint32 SlotOffset = PassIt * SlotCountPerPass;
        const uint32 SlotCount = SlotCountPerPass * (PassIt+1) <= uint32(Slots.Num()) ? SlotCountPerPass : uint32(Slots.Num()) - (SlotCountPerPass * PassIt);

        FIESAtlasAddTextureCS::FParameters* Parameters = GraphBuilder.AllocParameters<FIESAtlasAddTextureCS::FParameters>();
        Parameters->OutAtlasTexture = AtlasTextureUAV;
        Parameters->AtlasResolution = OutAtlas->Desc.Extent;
        Parameters->AtlasSliceCount = OutAtlas->Desc.ArraySize;
        Parameters->ValidCount = SlotCount;
        for (uint32 SlotIt = 0; SlotIt < SlotCountPerPass; ++SlotIt)
        {
            Parameters->InTexture[SlotIt] = GSystemTextures.BlackDummy->GetRHI();
            Parameters->InSliceIndex[SlotIt].X = InvalidSlotIndex;
            Parameters->InSampler[SlotIt] = TStaticSamplerState<SF_Bilinear, AM_Clamp, AM_Clamp, AM_Clamp>::GetRHI();
        }
        for (uint32 SlotIt = 0; SlotIt < SlotCount; ++SlotIt)
        {
            const FAtlasSlot& Slot = Slots[SlotOffset + SlotIt];
            check(Slot.SourceTexture);
            Parameters->InTexture[SlotIt] = Slot.GetTextureRHI();
            Parameters->InSliceIndex[SlotIt].X = Slot.SliceIndex;
        }

        const FIntVector DispatchCount = FComputeShaderUtils::GetGroupCount(FIntVector(Parameters->AtlasResolution.X, Parameters->AtlasResolution.Y, SlotCount), FIntVector(8, 8, 1));
        FComputeShaderUtils::AddPass(GraphBuilder, RDG_EVENT_NAME("IESAtlas::AddTexture"), ComputeShader, Parameters, DispatchCount);
    }

    GraphBuilder.UseExternalAccessMode(OutAtlas, ERHIAccess::SRVMask);
}
```
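
The `SlotCount` expression in the loop above is easy to misread. Below is a standalone C++ sketch of the same batching arithmetic: slots are split into passes of at most `SlotCountPerPass`, and the last pass takes the remainder. `SlotBatch` and `BatchSlots` are hypothetical names, and `FMath::DivideAndRoundUp` is replaced by the equivalent integer expression.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// One compute dispatch worth of atlas slots.
struct SlotBatch
{
    uint32_t Offset; // index of the first slot handled by this pass
    uint32_t Count;  // number of valid slots in this pass (<= SlotsPerPass)
};

std::vector<SlotBatch> BatchSlots(uint32_t NumSlots, uint32_t SlotsPerPass)
{
    assert(SlotsPerPass > 0);
    // Same result as FMath::DivideAndRoundUp(NumSlots, SlotsPerPass).
    const uint32_t PassCount = (NumSlots + SlotsPerPass - 1) / SlotsPerPass;

    std::vector<SlotBatch> Batches;
    for (uint32_t PassIt = 0; PassIt < PassCount; ++PassIt)
    {
        const uint32_t Offset = PassIt * SlotsPerPass;
        // A full pass, unless this is the final, partially filled one.
        const uint32_t Count = SlotsPerPass * (PassIt + 1) <= NumSlots ? SlotsPerPass : NumSlots - Offset;
        Batches.push_back({Offset, Count});
    }
    return Batches;
}
```

In the engine code, the unused entries of a partially filled pass are still bound (black dummy texture, `InvalidSlotIndex`), and the shader skips them through `ValidCount`.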

```c++
Texture2D<float4> InTexture_0;
Texture2D<float4> InTexture_1;
Texture2D<float4> InTexture_2;
Texture2D<float4> InTexture_3;
Texture2D<float4> InTexture_4;
Texture2D<float4> InTexture_5;
Texture2D<float4> InTexture_6;
Texture2D<float4> InTexture_7;

uint4 InSliceIndex[8];

SamplerState InSampler_0;
SamplerState InSampler_1;
SamplerState InSampler_2;
SamplerState InSampler_3;
SamplerState InSampler_4;
SamplerState InSampler_5;
SamplerState InSampler_6;
SamplerState InSampler_7;

int2 AtlasResolution;
uint AtlasSliceCount;
uint ValidCount;

RWTexture2DArray<float> OutAtlasTexture;

[numthreads(8, 8, 1)]
void MainCS(uint3 DispatchThreadId : SV_DispatchThreadID)
{
    if (all(DispatchThreadId.xy < uint2(AtlasResolution)))
    {
        const uint2 DstPixelPos = DispatchThreadId.xy;
        uint DstSlice = 0;

        const float2 SrcUV = (DstPixelPos + 0.5) / float2(AtlasResolution);
        const uint SrcSlice = DispatchThreadId.z;

        if (SrcSlice < ValidCount)
        {
            float Color = 0;
            switch (SrcSlice)
            {
                case 0: Color = InTexture_0.SampleLevel(InSampler_0, SrcUV, 0).x; DstSlice = InSliceIndex[0].x; break;
                case 1: Color = InTexture_1.SampleLevel(InSampler_1, SrcUV, 0).x; DstSlice = InSliceIndex[1].x; break;
                case 2: Color = InTexture_2.SampleLevel(InSampler_2, SrcUV, 0).x; DstSlice = InSliceIndex[2].x; break;
                case 3: Color = InTexture_3.SampleLevel(InSampler_3, SrcUV, 0).x; DstSlice = InSliceIndex[3].x; break;
                case 4: Color = InTexture_4.SampleLevel(InSampler_4, SrcUV, 0).x; DstSlice = InSliceIndex[4].x; break;
                case 5: Color = InTexture_5.SampleLevel(InSampler_5, SrcUV, 0).x; DstSlice = InSliceIndex[5].x; break;
                case 6: Color = InTexture_6.SampleLevel(InSampler_6, SrcUV, 0).x; DstSlice = InSliceIndex[6].x; break;
                case 7: Color = InTexture_7.SampleLevel(InSampler_7, SrcUV, 0).x; DstSlice = InSliceIndex[7].x; break;
            }

            // Ensure there is no NaN value
            Color = -min(-Color, 0);

            DstSlice = min(DstSlice, AtlasSliceCount-1);
            OutAtlasTexture[uint3(DstPixelPos, DstSlice)] = Color;
        }
    }
}
```

### Switching the Tonemapping Method

```c++
// Tonemapped color in the AP1 gamut
float3 ToneMappedColorAP1 = FilmToneMap( ColorAP1 );
// Alternatives: no tonemapping, or one of the custom curves (AgX, GT, PBR Neutral); uncomment one to swap.
// float3 ToneMappedColorAP1 = ColorAP1;
// float3 ToneMappedColorAP1 = AGXToneMap(ColorAP1);
// float3 ToneMappedColorAP1 = GTToneMap(ColorAP1);
// float3 ToneMappedColorAP1 = PBRNeutralToneMap(ColorAP1);
```
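
`AGXToneMap`, `GTToneMap` and `PBRNeutralToneMap` are not stock engine functions, and their bodies are not shown in these notes. As a reference for what a `GTToneMap` could compute, below is a scalar C++ port of the commonly circulated form of Hiroshi Uchimura's Gran Turismo curve (the one the GT-ToneMapping repository linked above is based on), with its usual default parameters. Treat it as an assumption, not the author's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// HLSL-style helpers.
inline float Smoothstep(float E0, float E1, float X)
{
    const float T = std::clamp((X - E0) / (E1 - E0), 0.0f, 1.0f);
    return T * T * (3.0f - 2.0f * T);
}
inline float Step(float Edge, float X) { return X >= Edge ? 1.0f : 0.0f; }

// Uchimura "GT" curve: power toe, linear mid section, exponential shoulder.
// P: max brightness, A: contrast, M: linear section start,
// L: linear section length, C: black tightness, B: pedestal.
float GTToneMap(float X, float P = 1.0f, float A = 1.0f, float M = 0.22f,
                float L = 0.4f, float C = 1.33f, float B = 0.0f)
{
    const float L0 = ((P - M) * L) / A;
    const float S0 = M + L0;
    const float S1 = M + A * L0;
    const float C2 = (A * P) / (P - S1);
    const float CP = -C2 / P;

    // Blend weights for the three segments.
    const float W0 = 1.0f - Smoothstep(0.0f, M, X);
    const float W2 = Step(M + L0, X);
    const float W1 = 1.0f - W0 - W2;

    const float Toe = M * std::pow(X / M, C) + B;
    const float Shoulder = P - (P - S1) * std::exp(CP * (X - S0));
    const float Linear = M + A * (X - M);
    return Toe * W0 + Linear * W1 + Shoulder * W2;
}
```

With the defaults, inputs between 0.22 and 0.532 pass through unchanged (the linear section), and large inputs approach the maximum brightness P = 1.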

# UE5 PostProcess: Code for Adding Passes

- Adding a custom ComputeShader in UE4: https://zhuanlan.zhihu.com/p/413884878
- UE Rendering Study (2): Custom PostProcess, Kuwahara Filter: https://zhuanlan.zhihu.com/p/25790491262

# How to Add a Custom Post-Process Pass in UE5

## In the `if (bPostProcessingEnabled)` block

1. In AddPostProcessingPasses() (PostProcessing.cpp), add the pass's enum value to **EPass** and its name string to the **PassNames** array.
2. Before **PassSequence.Finalize()**, add `PassSequence.SetEnabled(EPass::XXX, bEnabled)` for the EPass entry added in step 1, where `bEnabled` is your own condition. This controls whether the pass runs.
3. Following the position of the new pass in the order from step 1, add its code block at the matching place. Note that the code differs for passes before and after Tonemap.

### Pass Code

1. Before `MotionBlur` (before the BL_BeforeTonemapping post-process materials), pass in and draw with `FScreenPassTexture SceneColor`.
2. Between `MotionBlur` and `Tonemap`, pass in and draw with `FScreenPassTextureSlice SceneColorSlice`.
3. After `Tonemap`, pass in and draw with `FScreenPassTexture SceneColor`.

Between `MotionBlur` and `Tonemap`, the code looks roughly like this:

```c++
if (PassSequence.IsEnabled(EPass::XXX))
{
    FXXXInputs PassInputs;
    PassSequence.AcceptOverrideIfLastPass(EPass::XXX, PassInputs.OverrideOutput);
    PassInputs.SceneColorSlice = SceneColorSlice;

    SceneColorSlice = FScreenPassTextureSlice::CreateFromScreenPassTexture(GraphBuilder, AddXXXPass(GraphBuilder, View, PassInputs));
}
```

Elsewhere, it looks roughly like this:

```c++
if (PassSequence.IsEnabled(EPass::XXX))
{
    FXXXInputs PassInputs;
    PassSequence.AcceptOverrideIfLastPass(EPass::XXX, PassInputs.OverrideOutput);
    PassInputs.SceneColor = SceneColor;

    SceneColor = AddXXXPass(GraphBuilder, View, PassInputs);
}
```

### UV Conversion After Super-Sampling

For passes after `Tonemap`, super-sampling (upscaling) makes the viewport resolution differ from the buffer resolution, so you need `SHADER_PARAMETER(FScreenTransform, SvPositionToInputTextureUV)` together with `FScreenTransform::ChangeTextureBasisFromTo` to compute the transform. See FFXAAPS for a reference.

SvPosition => ViewportUV => TextureUV:

```c++
PassParameters->SvPositionToInputTextureUV = (
    FScreenTransform::SvPositionToViewportUV(Output.ViewRect) *
    FScreenTransform::ChangeTextureBasisFromTo(FScreenPassTextureViewport(Inputs.SceneColorSlice), FScreenTransform::ETextureBasis::ViewportUV, FScreenTransform::ETextureBasis::TextureUV));
```

Then, in the shader:

```c++
Texture2D SceneColorTexture;
SamplerState SceneColorSampler;
FScreenTransform SvPositionToInputTextureUV;

void MainPS(
    float4 SvPosition : SV_POSITION,
    out float4 OutColor : SV_Target0)
{
    // Map the output pixel position to a UV in the input scene color texture.
    float2 SceneColorUV = ApplyScreenTransform(SvPosition.xy, SvPositionToInputTextureUV);
    OutColor.rgba = SceneColorTexture.SampleLevel(SceneColorSampler, SceneColorUV, 0).rgba;
}
```
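
The `SvPosition => ViewportUV => TextureUV` chain is two per-axis scale-and-bias transforms composed together. Below is a self-contained C++ sketch of that math. It assumes `FScreenTransform` is a scale plus a bias, and that `A * B` in the pass setup means "apply A, then B". The `Float2`, `Rect` and `ScreenTransform` types and the function names are hypothetical stand-ins, not the engine API.

```cpp
#include <cassert>

struct Float2 { float X, Y; };
struct Rect { Float2 Min, Size; }; // pixel-space rectangle

// Per-axis affine transform: Out = In * Scale + Bias.
struct ScreenTransform
{
    Float2 Scale, Bias;
    Float2 Apply(Float2 P) const { return { P.X * Scale.X + Bias.X, P.Y * Scale.Y + Bias.Y }; }
};

// Composition: apply First, then Second.
ScreenTransform Then(const ScreenTransform& First, const ScreenTransform& Second)
{
    return { { First.Scale.X * Second.Scale.X, First.Scale.Y * Second.Scale.Y },
             { First.Bias.X * Second.Scale.X + Second.Bias.X, First.Bias.Y * Second.Scale.Y + Second.Bias.Y } };
}

// Pixel position inside the output view rect -> [0,1] viewport UV.
ScreenTransform SvPositionToViewportUV(const Rect& OutputViewRect)
{
    return { { 1.0f / OutputViewRect.Size.X, 1.0f / OutputViewRect.Size.Y },
             { -OutputViewRect.Min.X / OutputViewRect.Size.X, -OutputViewRect.Min.Y / OutputViewRect.Size.Y } };
}

// Viewport UV of the input's view rect -> UV over the whole input texture extent.
ScreenTransform ViewportUVToTextureUV(const Rect& InputViewRect, Float2 InputExtent)
{
    return { { InputViewRect.Size.X / InputExtent.X, InputViewRect.Size.Y / InputExtent.Y },
             { InputViewRect.Min.X / InputExtent.X, InputViewRect.Min.Y / InputExtent.Y } };
}
```

For example, if the output view is 1920x1080 but the input only fills a 1280x720 rect of a 1920x1080 texture, the center of the output maps to UV (1/3, 1/3) in the input texture rather than (0.5, 0.5). Skipping this conversion produces exactly that kind of error.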

## In the `else` block

In the `else` block, add code that disables the new pass. Without it, the editor crashes when switching to another view (for example, the Material Editor preview). For example:

```c++
if (bPostProcessingEnabled)
{
    ...
    ...
}
else
{
    PassSequence.SetEnabled(EPass::XXX, false);

    PassSequence.SetEnabled(EPass::MotionBlur, false);
    PassSequence.SetEnabled(EPass::Tonemap, true);
    PassSequence.SetEnabled(EPass::FXAA, false);
    PassSequence.SetEnabled(EPass::PostProcessMaterialAfterTonemapping, false);
    PassSequence.SetEnabled(EPass::VisualizeDepthOfField, false);
    PassSequence.SetEnabled(EPass::VisualizeLocalExposure, false);
    PassSequence.Finalize();
    ...
}
```

PS:

1. ToneMapPass takes an `FScreenPassTextureSlice` as input and returns an `FScreenPassTexture`. If Tonemap is disabled, simply run `SceneColor = FScreenPassTexture(SceneColorSlice);`.

To feed the slice into a post-process material pass, copy it into an `FScreenPassTexture`:

```c++
FPostProcessMaterialInputs PassInputs;
PassSequence.AcceptOverrideIfLastPass(EPass::Tonemap, PassInputs.OverrideOutput);
PassInputs.SetInput(EPostProcessMaterialInput::SceneColor, FScreenPassTexture::CopyFromSlice(GraphBuilder, SceneColorSlice));
```

Creating an `FScreenPassTextureSlice`:

```c++
// SceneColor is an FScreenPassTexture
FScreenPassTextureSlice SceneColorSlice = FScreenPassTextureSlice::CreateFromScreenPassTexture(GraphBuilder, SceneColor);
```

Converting a pass's render result into an `FScreenPassTextureSlice`:

```c++
SceneColorSlice = FScreenPassTextureSlice::CreateFromScreenPassTexture(GraphBuilder, AddToonPostProcessBeforeTonemappingPass(GraphBuilder, View, PassInputs));
```

```c++
// Allows for the scene color to be the slice of an array between temporal upscaler and tonemaper.
FScreenPassTextureSlice SceneColorSlice = FScreenPassTextureSlice::CreateFromScreenPassTexture(GraphBuilder, SceneColor);
```



---
title: Toon ToneMapping
date: 2025-01-19 21:02:19
excerpt:
tags:
rating: ⭐
---


03-UnrealEngine/卡通渲染相关资料/渲染功能/ShaderModel/BasePass C++.md


---
title: Untitled
date: 2024-09-26 18:41:24
excerpt:
tags:
rating: ⭐
---

# RenderBasePass()

The DepthStencil access mode passed into RenderBasePass() is chosen as follows:

```c++
const FExclusiveDepthStencil::Type BasePassDepthStencilAccess =
    bAllowReadOnlyDepthBasePass
    ? FExclusiveDepthStencil::DepthRead_StencilWrite
    : FExclusiveDepthStencil::DepthWrite_StencilWrite;
```

FDeferredShadingSceneRenderer::RenderBasePass() =>
FDeferredShadingSceneRenderer::RenderBasePassInternal() =>

FBasePassMeshProcessor::TryAddMeshBatch =>

## General Flow

1. Create and bind the MRTs, and get the depth buffer.

```c++
const FExclusiveDepthStencil ExclusiveDepthStencil(BasePassDepthStencilAccess);

TStaticArray<FTextureRenderTargetBinding, MaxSimultaneousRenderTargets> BasePassTextures;
uint32 BasePassTextureCount = SceneTextures.GetGBufferRenderTargets(BasePassTextures);
Strata::AppendStrataMRTs(*this, BasePassTextureCount, BasePassTextures);
TArrayView<FTextureRenderTargetBinding> BasePassTexturesView = MakeArrayView(BasePassTextures.GetData(), BasePassTextureCount);
FRDGTextureRef BasePassDepthTexture = SceneTextures.Depth.Target;
```

2. GBuffer Clear

```c++
GraphBuilder.AddPass(RDG_EVENT_NAME("GBufferClear"), PassParameters, ERDGPassFlags::Raster,
    [PassParameters, ColorLoadAction, SceneColorClearValue](FRHICommandList& RHICmdList)
{
    // If no fast-clear action was used, we need to do an MRT shader clear.
    if (ColorLoadAction == ERenderTargetLoadAction::ENoAction)
    {
        const FRenderTargetBindingSlots& RenderTargets = PassParameters->RenderTargets;
        FLinearColor ClearColors[MaxSimultaneousRenderTargets];
        FRHITexture* Textures[MaxSimultaneousRenderTargets];
        int32 TextureIndex = 0;

        RenderTargets.Enumerate([&](const FRenderTargetBinding& RenderTarget)
        {
            FRHITexture* TextureRHI = RenderTarget.GetTexture()->GetRHI();
            ClearColors[TextureIndex] = TextureIndex == 0 ? SceneColorClearValue : TextureRHI->GetClearColor();
            Textures[TextureIndex] = TextureRHI;
            ++TextureIndex;
        });

        // Clear color only; depth-stencil is fast cleared.
        DrawClearQuadMRT(RHICmdList, true, TextureIndex, ClearColors, false, 0, false, 0);
    }
});
```

3. RenderTargetBindingSlots

```c++
// Render targets bindings should remain constant at this point.
FRenderTargetBindingSlots BasePassRenderTargets = GetRenderTargetBindings(ERenderTargetLoadAction::ELoad, BasePassTexturesView);
BasePassRenderTargets.DepthStencil = FDepthStencilBinding(BasePassDepthTexture, ERenderTargetLoadAction::ELoad, ERenderTargetLoadAction::ELoad, ExclusiveDepthStencil);
```

4. RenderBasePassInternal()
5. RenderAnisotropyPass()

# MeshDraw

## RenderBasePassInternal()

RenderNaniteBasePass() is a lambda that ultimately calls **Nanite::DrawBasePass()** to render the BasePass of Nanite objects. The rest of the related rendering code:

```c++
SCOPE_CYCLE_COUNTER(STAT_BasePassDrawTime);
RDG_EVENT_SCOPE(GraphBuilder, "BasePass");
RDG_GPU_STAT_SCOPE(GraphBuilder, Basepass);

const bool bDrawSceneViewsInOneNanitePass = Views.Num() > 1 && Nanite::ShouldDrawSceneViewsInOneNanitePass(Views[0]);
if (bParallelBasePass) // Parallel rendering mode
{
    RDG_WAIT_FOR_TASKS_CONDITIONAL(GraphBuilder, IsBasePassWaitForTasksEnabled());

    for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ++ViewIndex)
    {
        FViewInfo& View = Views[ViewIndex];
        RDG_GPU_MASK_SCOPE(GraphBuilder, View.GPUMask);
        RDG_EVENT_SCOPE_CONDITIONAL(GraphBuilder, Views.Num() > 1, "View%d", ViewIndex);
        View.BeginRenderView();

        const bool bLumenGIEnabled = GetViewPipelineState(View).DiffuseIndirectMethod == EDiffuseIndirectMethod::Lumen;

        FMeshPassProcessorRenderState DrawRenderState;
        SetupBasePassState(BasePassDepthStencilAccess, ViewFamily.EngineShowFlags.ShaderComplexity, DrawRenderState);

        FOpaqueBasePassParameters* PassParameters = GraphBuilder.AllocParameters<FOpaqueBasePassParameters>();
        PassParameters->View = View.GetShaderParameters();
        PassParameters->ReflectionCapture = View.ReflectionCaptureUniformBuffer;
        PassParameters->BasePass = CreateOpaqueBasePassUniformBuffer(GraphBuilder, View, ViewIndex, ForwardBasePassTextures, DBufferTextures, bLumenGIEnabled);
        PassParameters->RenderTargets = BasePassRenderTargets;
        PassParameters->RenderTargets.ShadingRateTexture = GVRSImageManager.GetVariableRateShadingImage(GraphBuilder, View, FVariableRateShadingImageManager::EVRSPassType::BasePass);

        const bool bShouldRenderView = View.ShouldRenderView();
        if (bShouldRenderView)
        {
            View.ParallelMeshDrawCommandPasses[EMeshPass::BasePass].BuildRenderingCommands(GraphBuilder, Scene->GPUScene, PassParameters->InstanceCullingDrawParams);

            GraphBuilder.AddPass(
                RDG_EVENT_NAME("BasePassParallel"),
                PassParameters,
                ERDGPassFlags::Raster | ERDGPassFlags::SkipRenderPass,
                [this, &View, PassParameters](const FRDGPass* InPass, FRHICommandListImmediate& RHICmdList)
            {
                FRDGParallelCommandListSet ParallelCommandListSet(InPass, RHICmdList, GET_STATID(STAT_CLP_BasePass), View, FParallelCommandListBindings(PassParameters));
                View.ParallelMeshDrawCommandPasses[EMeshPass::BasePass].DispatchDraw(&ParallelCommandListSet, RHICmdList, &PassParameters->InstanceCullingDrawParams);
            });
        }

        const bool bShouldRenderViewForNanite = bNaniteEnabled && (!bDrawSceneViewsInOneNanitePass || ViewIndex == 0); // when bDrawSceneViewsInOneNanitePass, the first view should cover all the other atlased ones
        if (bShouldRenderViewForNanite)
        {
            // Should always have a full Z prepass with Nanite
            check(ShouldRenderPrePass());
            // Render the BasePass of Nanite objects
            RenderNaniteBasePass(View, ViewIndex);
        }

        // Render editor-related primitives
        RenderEditorPrimitives(GraphBuilder, PassParameters, View, DrawRenderState, InstanceCullingManager);

        // Render the atmosphere (sky pass)
        if (bShouldRenderView && View.Family->EngineShowFlags.Atmosphere)
        {
            FOpaqueBasePassParameters* SkyPassPassParameters = GraphBuilder.AllocParameters<FOpaqueBasePassParameters>();
            SkyPassPassParameters->BasePass = PassParameters->BasePass;
            SkyPassPassParameters->RenderTargets = BasePassRenderTargets;
            SkyPassPassParameters->View = View.GetShaderParameters();
            SkyPassPassParameters->ReflectionCapture = View.ReflectionCaptureUniformBuffer;

            View.ParallelMeshDrawCommandPasses[EMeshPass::SkyPass].BuildRenderingCommands(GraphBuilder, Scene->GPUScene, SkyPassPassParameters->InstanceCullingDrawParams);

            GraphBuilder.AddPass(
                RDG_EVENT_NAME("SkyPassParallel"),
                SkyPassPassParameters,
                ERDGPassFlags::Raster | ERDGPassFlags::SkipRenderPass,
                [this, &View, SkyPassPassParameters](const FRDGPass* InPass, FRHICommandListImmediate& RHICmdList)
            {
                FRDGParallelCommandListSet ParallelCommandListSet(InPass, RHICmdList, GET_STATID(STAT_CLP_BasePass), View, FParallelCommandListBindings(SkyPassPassParameters));
                View.ParallelMeshDrawCommandPasses[EMeshPass::SkyPass].DispatchDraw(&ParallelCommandListSet, RHICmdList, &SkyPassPassParameters->InstanceCullingDrawParams);
            });
        }
    }
}
else
{
    for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ++ViewIndex)
    {
        FViewInfo& View = Views[ViewIndex];
        RDG_GPU_MASK_SCOPE(GraphBuilder, View.GPUMask);
        RDG_EVENT_SCOPE_CONDITIONAL(GraphBuilder, Views.Num() > 1, "View%d", ViewIndex);
        View.BeginRenderView();

        const bool bLumenGIEnabled = GetViewPipelineState(View).DiffuseIndirectMethod == EDiffuseIndirectMethod::Lumen;

        FMeshPassProcessorRenderState DrawRenderState;
        SetupBasePassState(BasePassDepthStencilAccess, ViewFamily.EngineShowFlags.ShaderComplexity, DrawRenderState);

        FOpaqueBasePassParameters* PassParameters = GraphBuilder.AllocParameters<FOpaqueBasePassParameters>();
        PassParameters->View = View.GetShaderParameters();
        PassParameters->ReflectionCapture = View.ReflectionCaptureUniformBuffer;
        PassParameters->BasePass = CreateOpaqueBasePassUniformBuffer(GraphBuilder, View, ViewIndex, ForwardBasePassTextures, DBufferTextures, bLumenGIEnabled);
        PassParameters->RenderTargets = BasePassRenderTargets;
        PassParameters->RenderTargets.ShadingRateTexture = GVRSImageManager.GetVariableRateShadingImage(GraphBuilder, View, FVariableRateShadingImageManager::EVRSPassType::BasePass);

        const bool bShouldRenderView = View.ShouldRenderView();
        if (bShouldRenderView)
        {
            View.ParallelMeshDrawCommandPasses[EMeshPass::BasePass].BuildRenderingCommands(GraphBuilder, Scene->GPUScene, PassParameters->InstanceCullingDrawParams);

            GraphBuilder.AddPass(
                RDG_EVENT_NAME("BasePass"),
                PassParameters,
                ERDGPassFlags::Raster,
                [this, &View, PassParameters](FRHICommandList& RHICmdList)

|

||||

{

|

||||

SetStereoViewport(RHICmdList, View, 1.0f);

|

||||

View.ParallelMeshDrawCommandPasses[EMeshPass::BasePass].DispatchDraw(nullptr, RHICmdList, &PassParameters->InstanceCullingDrawParams);

|

||||

}

|

||||

);

|

||||

}

|

||||

|

||||

const bool bShouldRenderViewForNanite = bNaniteEnabled && (!bDrawSceneViewsInOneNanitePass || ViewIndex == 0); // when bDrawSceneViewsInOneNanitePass, the first view should cover all the other atlased ones

|

||||

if (bShouldRenderViewForNanite)

|

||||

{

|

||||

// Should always have a full Z prepass with Nanite

|

||||

check(ShouldRenderPrePass());

|

||||

|

||||

RenderNaniteBasePass(View, ViewIndex);

|

||||

}

|

||||

|

||||

RenderEditorPrimitives(GraphBuilder, PassParameters, View, DrawRenderState, InstanceCullingManager);

|

||||

|

||||

if (bShouldRenderView && View.Family->EngineShowFlags.Atmosphere)

|

||||

{

|

||||

FOpaqueBasePassParameters* SkyPassParameters = GraphBuilder.AllocParameters<FOpaqueBasePassParameters>();

|

||||

SkyPassParameters->BasePass = PassParameters->BasePass;

|

||||

SkyPassParameters->RenderTargets = BasePassRenderTargets;

|

||||

SkyPassParameters->View = View.GetShaderParameters();

|

||||

SkyPassParameters->ReflectionCapture = View.ReflectionCaptureUniformBuffer;

|

||||

|

||||

View.ParallelMeshDrawCommandPasses[EMeshPass::SkyPass].BuildRenderingCommands(GraphBuilder, Scene->GPUScene, SkyPassParameters->InstanceCullingDrawParams);

|

||||

|

||||

GraphBuilder.AddPass(

|

||||

RDG_EVENT_NAME("SkyPass"),

|

||||

SkyPassParameters,

|

||||

ERDGPassFlags::Raster,

|

||||

[this, &View, SkyPassParameters](FRHICommandList& RHICmdList)

|

||||

{

|

||||

SetStereoViewport(RHICmdList, View, 1.0f);

|

||||

View.ParallelMeshDrawCommandPasses[EMeshPass::SkyPass].DispatchDraw(nullptr, RHICmdList, &SkyPassParameters->InstanceCullingDrawParams);

|

||||

}

|

||||

);

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

### SetDepthStencilStateForBasePass()
```c++
void SetDepthStencilStateForBasePass(
    FMeshPassProcessorRenderState& DrawRenderState,
    ERHIFeatureLevel::Type FeatureLevel,
    bool bDitheredLODTransition,
    const FMaterial& MaterialResource,
    bool bEnableReceiveDecalOutput,
    bool bForceEnableStencilDitherState)
{
    const bool bMaskedInEarlyPass = (MaterialResource.IsMasked() || bDitheredLODTransition) && MaskedInEarlyPass(GShaderPlatformForFeatureLevel[FeatureLevel]);
    if (bEnableReceiveDecalOutput)
    {
        if (bMaskedInEarlyPass)
        {
            SetDepthStencilStateForBasePass_Internal<false, CF_Equal>(DrawRenderState, FeatureLevel);
        }
        else if (DrawRenderState.GetDepthStencilAccess() & FExclusiveDepthStencil::DepthWrite)
        {
            SetDepthStencilStateForBasePass_Internal<true, CF_GreaterEqual>(DrawRenderState, FeatureLevel);
        }
        else
        {
            SetDepthStencilStateForBasePass_Internal<false, CF_GreaterEqual>(DrawRenderState, FeatureLevel);
        }
    }
    else if (bMaskedInEarlyPass)
    {
        DrawRenderState.SetDepthStencilState(TStaticDepthStencilState<false, CF_Equal>::GetRHI());
    }

    if (bForceEnableStencilDitherState)
    {
        SetDepthStencilStateForBasePass_Internal<false, CF_Equal>(DrawRenderState, FeatureLevel);
    }
}
```

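The branch structure of `SetDepthStencilStateForBasePass()` can be condensed into a pure function. The sketch below is a hypothetical model, not engine code: `EDepthCompare` and `FDepthStateChoice` stand in for the template arguments of `TStaticDepthStencilState` / `SetDepthStencilStateForBasePass_Internal`, and all stencil (decal, dither) bits are ignored. It makes explicit that early-pass masked materials switch to an equal test without depth write, and that `bForceEnableStencilDitherState` overrides every earlier choice.

```c++
// Hypothetical model of SetDepthStencilStateForBasePass(): only the depth part
// of the chosen state is represented; stencil bits are ignored.
enum class EDepthCompare { Unchanged, Equal, GreaterEqual };

struct FDepthStateChoice
{
    bool bDepthWrite;       // first template argument (depth write on/off)
    EDepthCompare Compare;  // second template argument (CF_Equal / CF_GreaterEqual)
};

// bMaskedInEarlyPass stands for
//   (IsMasked() || bDitheredLODTransition) && MaskedInEarlyPass(ShaderPlatform).
// bHasDepthWriteAccess stands for
//   DrawRenderState.GetDepthStencilAccess() & FExclusiveDepthStencil::DepthWrite.
constexpr FDepthStateChoice ChooseBasePassDepthState(
    bool bMaskedInEarlyPass,
    bool bEnableReceiveDecalOutput,
    bool bHasDepthWriteAccess,
    bool bForceEnableStencilDitherState)
{
    // "Unchanged" means the function leaves whatever state was set before.
    FDepthStateChoice Choice{false, EDepthCompare::Unchanged};
    if (bEnableReceiveDecalOutput)
    {
        if (bMaskedInEarlyPass)
            Choice = {false, EDepthCompare::Equal};  // depth was already written by the prepass
        else if (bHasDepthWriteAccess)
            Choice = {true, EDepthCompare::GreaterEqual};
        else
            Choice = {false, EDepthCompare::GreaterEqual};
    }
    else if (bMaskedInEarlyPass)
    {
        Choice = {false, EDepthCompare::Equal};
    }

    // The forced stencil-dither state is applied last and overrides any earlier choice.
    if (bForceEnableStencilDitherState)
        Choice = {false, EDepthCompare::Equal};
    return Choice;
}
```

`GreaterEqual` rather than `LessEqual` is used because UE renders with reversed Z.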

## AnisotropyPass
Render target setup for Anisotropy:
- RenderTarget: SceneTextures.GBufferF.
- DepthStencil: SceneTextures.Depth.Target, with **ERenderTargetLoadAction::ELoad** and **FExclusiveDepthStencil::DepthRead_StencilNop**.

### Pipeline State
In FAnisotropyMeshProcessor::CollectPSOInitializers():
```c++
ETextureCreateFlags GBufferFCreateFlags;
EPixelFormat GBufferFPixelFormat = FSceneTextures::GetGBufferFFormatAndCreateFlags(GBufferFCreateFlags);
AddRenderTargetInfo(GBufferFPixelFormat, GBufferFCreateFlags, RenderTargetsInfo);
SetupDepthStencilInfo(PF_DepthStencil, SceneTexturesConfig.DepthCreateFlags, ERenderTargetLoadAction::ELoad,
    ERenderTargetLoadAction::ELoad, FExclusiveDepthStencil::DepthRead_StencilNop, RenderTargetsInfo);
```


```c++
void SetupDepthStencilInfo(
    EPixelFormat DepthStencilFormat,
    ETextureCreateFlags DepthStencilCreateFlags,
    ERenderTargetLoadAction DepthTargetLoadAction,
    ERenderTargetLoadAction StencilTargetLoadAction,
    FExclusiveDepthStencil DepthStencilAccess,
    FGraphicsPipelineRenderTargetsInfo& RenderTargetsInfo)
{
    // Setup depth stencil state
    RenderTargetsInfo.DepthStencilTargetFormat = DepthStencilFormat;
    RenderTargetsInfo.DepthStencilTargetFlag = DepthStencilCreateFlags;

    RenderTargetsInfo.DepthTargetLoadAction = DepthTargetLoadAction;
    RenderTargetsInfo.StencilTargetLoadAction = StencilTargetLoadAction;
    RenderTargetsInfo.DepthStencilAccess = DepthStencilAccess;

    const ERenderTargetStoreAction StoreAction = EnumHasAnyFlags(RenderTargetsInfo.DepthStencilTargetFlag, TexCreate_Memoryless) ? ERenderTargetStoreAction::ENoAction : ERenderTargetStoreAction::EStore;
    RenderTargetsInfo.DepthTargetStoreAction = RenderTargetsInfo.DepthStencilAccess.IsUsingDepth() ? StoreAction : ERenderTargetStoreAction::ENoAction;
    RenderTargetsInfo.StencilTargetStoreAction = RenderTargetsInfo.DepthStencilAccess.IsUsingStencil() ? StoreAction : ERenderTargetStoreAction::ENoAction;
}
```

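The store-action logic at the end of `SetupDepthStencilInfo()` reduces to two rules: a memoryless target never stores, and an aspect (depth or stencil) that the pass does not use never stores. A minimal sketch with hypothetical stand-in types; it assumes `IsUsingDepth()` returns true for `DepthRead` as well as `DepthWrite`, which would mean the AnisotropyPass (`DepthRead_StencilNop`) stores depth but not stencil.

```c++
// Hypothetical stand-in for ERenderTargetStoreAction.
enum class EStoreAction { NoAction, Store };

// Memoryless targets never store; otherwise only a used aspect is stored.
constexpr EStoreAction ResolveStoreAction(bool bMemoryless, bool bAspectUsed)
{
    const EStoreAction StoreAction = bMemoryless ? EStoreAction::NoAction : EStoreAction::Store;
    return bAspectUsed ? StoreAction : EStoreAction::NoAction;
}

struct FDepthStencilStore
{
    EStoreAction Depth;
    EStoreAction Stencil;
};

// bUsingDepth / bUsingStencil stand for DepthStencilAccess.IsUsingDepth() / IsUsingStencil().
constexpr FDepthStencilStore ResolveDepthStencilStore(bool bMemoryless, bool bUsingDepth, bool bUsingStencil)
{
    return {ResolveStoreAction(bMemoryless, bUsingDepth), ResolveStoreAction(bMemoryless, bUsingStencil)};
}
```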

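### ParallelRendering
AnisotropyPass supports parallel rendering. Whether the parallel path is taken is decided by:
```c++
const bool bEnableParallelBasePasses = GRHICommandList.UseParallelAlgorithms() && CVarParallelBasePass.GetValueOnRenderThread();
```
So the conditions are:
1. Whether the RHI/platform supports parallel command list generation (`GRHICommandList.UseParallelAlgorithms()`).
2. Whether the CVar r.ParallelBasePass enables parallel rendering.

Comparing the two paths in AnisotropyPass, parallel rendering differs from regular rendering in that:
1. The ERenderTargetLoadAction of the FRDGRenderTargetBinding differs: **ELoad for the parallel path**, **EClear for the regular path** (the parallel path clears GBufferF beforehand with a separate AddClearRenderTargetPass).
2. AddPass is called with the **ERDGPassFlags::SkipRenderPass** flag.
3. The parallel path constructs an **FRDGParallelCommandListSet ParallelCommandListSet** inside the AddPass lambda and passes it to **DispatchDraw**; the regular path passes nullptr.
4. The regular path additionally calls **SetStereoViewport(RHICmdList, View);**, which essentially calls RHICmdList.SetViewport to set the view's viewport.
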
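The differences listed above can be captured as a small configuration table. This is a hypothetical sketch (all names are illustrative stand-ins, not engine types); it also records the separate `AddClearRenderTargetPass()` that the parallel branch issues before its pass.

```c++
// Hypothetical stand-in for ERenderTargetLoadAction.
enum class ELoadAction { Load, Clear };

struct FAnisotropyPassSetup
{
    bool bClearPassFirst;          // AddClearRenderTargetPass(GBufferF) before the draw pass
    ELoadAction GBufferFLoad;      // load action of the GBufferF render target binding
    bool bSkipRenderPass;          // ERDGPassFlags::SkipRenderPass on AddPass
    bool bParallelCommandListSet;  // FRDGParallelCommandListSet passed to DispatchDraw (else nullptr)
    bool bSetViewportInLambda;     // SetStereoViewport() called inside the pass lambda
};

// Mirrors: GRHICommandList.UseParallelAlgorithms() && r.ParallelBasePass
constexpr bool ShouldUseParallelPass(bool bRHIUsesParallelAlgorithms, bool bCVarParallelBasePass)
{
    return bRHIUsesParallelAlgorithms && bCVarParallelBasePass;
}

constexpr FAnisotropyPassSetup DescribeAnisotropyPass(bool bParallel)
{
    return bParallel
        ? FAnisotropyPassSetup{true, ELoadAction::Load, true, true, false}
        : FAnisotropyPassSetup{false, ELoadAction::Clear, false, false, true};
}
```

A plausible reading of the split (not stated in the source): with `SkipRenderPass`, RDG does not begin a render pass for this pass itself; the parallel command lists open their own render passes with `ELoad`, so the clear presumably has to happen in a separate pass beforehand.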

### Code
```c++
RDG_CSV_STAT_EXCLUSIVE_SCOPE(GraphBuilder, RenderAnisotropyPass);
SCOPED_NAMED_EVENT(FDeferredShadingSceneRenderer_RenderAnisotropyPass, FColor::Emerald);
SCOPE_CYCLE_COUNTER(STAT_AnisotropyPassDrawTime);
RDG_GPU_STAT_SCOPE(GraphBuilder, RenderAnisotropyPass);

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)
{
    FViewInfo& View = Views[ViewIndex];

    if (View.ShouldRenderView())
    {
        FParallelMeshDrawCommandPass& ParallelMeshPass = View.ParallelMeshDrawCommandPasses[EMeshPass::AnisotropyPass];

        if (!ParallelMeshPass.HasAnyDraw())
        {
            continue;
        }

        View.BeginRenderView();

        auto* PassParameters = GraphBuilder.AllocParameters<FAnisotropyPassParameters>();
        PassParameters->View = View.GetShaderParameters();
        PassParameters->RenderTargets.DepthStencil = FDepthStencilBinding(SceneTextures.Depth.Target, ERenderTargetLoadAction::ELoad, FExclusiveDepthStencil::DepthRead_StencilNop);

        ParallelMeshPass.BuildRenderingCommands(GraphBuilder, Scene->GPUScene, PassParameters->InstanceCullingDrawParams);
        if (bDoParallelPass)
        {
            AddClearRenderTargetPass(GraphBuilder, SceneTextures.GBufferF);

            PassParameters->RenderTargets[0] = FRenderTargetBinding(SceneTextures.GBufferF, ERenderTargetLoadAction::ELoad);

            GraphBuilder.AddPass(
                RDG_EVENT_NAME("AnisotropyPassParallel"),
                PassParameters,
                ERDGPassFlags::Raster | ERDGPassFlags::SkipRenderPass,
                [this, &View, &ParallelMeshPass, PassParameters](const FRDGPass* InPass, FRHICommandListImmediate& RHICmdList)
            {
                FRDGParallelCommandListSet ParallelCommandListSet(InPass, RHICmdList, GET_STATID(STAT_CLP_AnisotropyPass), View, FParallelCommandListBindings(PassParameters));

                ParallelMeshPass.DispatchDraw(&ParallelCommandListSet, RHICmdList, &PassParameters->InstanceCullingDrawParams);
            });
        }
        else
        {
            PassParameters->RenderTargets[0] = FRenderTargetBinding(SceneTextures.GBufferF, ERenderTargetLoadAction::EClear);

            GraphBuilder.AddPass(
                RDG_EVENT_NAME("AnisotropyPass"),
                PassParameters,
                ERDGPassFlags::Raster,
                [this, &View, &ParallelMeshPass, PassParameters](FRHICommandList& RHICmdList)
            {
                SetStereoViewport(RHICmdList, View);

                ParallelMeshPass.DispatchDraw(nullptr, RHICmdList, &PassParameters->InstanceCullingDrawParams);
            });
        }
    }
}
```


@@ -0,0 +1,659 @@

---
title: GBuffer&Material&BasePass
date: 2023-12-08 17:34:58
excerpt:
tags:
rating: ⭐
---


# GBuffer
UE5.3 currently calls
- WriteGBufferInfoAutogen()
- **EncodeGBufferToMRT()**

to dynamically generate the code of **EncodeGBufferToMRT()** in BasePassPixelShader.usf, and also generates an AutogenShaderHeaders.ush file, located at:
`Engine\Intermediate\ShaderAutogen\PCD3D_SM5` or `Engine\Intermediate\ShaderAutogen\PCD3D_ES3_1`

1. ***When adding members to the FGBufferData struct, additional code logic must be added here.***
2. GBuffer precision is set in FetchLegacyGBufferInfo().
3. Whether Velocity is written to the GBuffer is mainly controlled by the macro **WRITES_VELOCITY_TO_GBUFFER**. The logic that determines its value is in **FShaderGlobalDefines FetchShaderGlobalDefines**; in practice it is enabled via **r.VelocityOutputPass**.
    1. PS: MSAA and VR never enable the velocity output option. There is also **r.Velocity.ForceOutput**, but in testing, velocity still could not be output without enabling r.VelocityOutputPass. FPrimitiveSceneProxy's bAlwaysHasVelocity and bHasWorldPositionOffsetVelocity are also relevant.
    2. Other related features: FSR, TSR?
4. How to add a GBuffer target:
    1. https://zhuanlan.zhihu.com/p/568775542
    2. https://zhuanlan.zhihu.com/p/677772284


## UE5 GBuffer Contents
[[UE GBuffer存储数据|UE GBuffer stored data]]
```c#
OutGBufferA(MRT1) = WorldNormal/PerObjectGBufferData (GBT_Float_16_16_16_16/GBT_Unorm_11_11_10/GBT_Unorm_8_8_8_8)
OutGBufferB(MRT2) = Metallic/Specular/Roughness/EncodeShadingModelIdAndSelectiveOutputMask (GBT_Float_16_16_16_16/GBT_Unorm_8_8_8_8)
OutGBufferC(MRT3) = BaseColor/GBufferAO (GBT_Unorm_8_8_8_8)
OutGBufferD = GBuffer.CustomData (GBT_Unorm_8_8_8_8)
OutGBufferE = GBuffer.PrecomputedShadowFactors (GBT_Unorm_8_8_8_8)
TargetVelocity / OutGBufferF = velocity / tangent (disabled by default; with depth <when Lumen and distance fields, or ray tracing, are enabled>: GBC_Raw_Float_16_16_16_16; without depth: GBC_Raw_Float_16_16)
TargetSeparatedMainDirLight = SingleLayerWater-related (only enabled when SingleLayerWater is used; GBC_Raw_Float_11_11_10)
OutGBufferF = Anisotropy

// 0..1, 2 bits, use CastContactShadow(GBuffer) or HasDynamicIndirectShadowCasterRepresentation(GBuffer) to extract
half PerObjectGBufferData;
```

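The comment above describes PerObjectGBufferData as a 0..1 value holding 2 bits. A hypothetical sketch of how two flags can be packed into such a value and recovered, written in C++ so it can be checked on the CPU. The bit assignment (bit 0 for contact shadows, bit 1 for the dynamic indirect shadow caster representation) and the `* 3.999` decode are assumptions, not confirmed engine code.

```c++
// Hypothetical sketch of the "0..1, 2 bits" PerObjectGBufferData packing.
// Assumption: bit 0 = CastContactShadow, bit 1 = HasDynamicIndirectShadowCasterRepresentation.
constexpr float EncodePerObjectFlags(bool bCastContactShadow, bool bHasDynamicIndirectShadowCaster)
{
    const unsigned Packed = (bCastContactShadow ? 1u : 0u) | (bHasDynamicIndirectShadowCaster ? 2u : 0u);
    return static_cast<float>(Packed) / 3.0f;  // 0, 1/3, 2/3 or 1
}

constexpr unsigned UnpackPerObjectBits(float Value)
{
    // Scale by slightly less than 4 and truncate: 1.0 maps to 3, and small
    // quantization errors above a bucket value stay in that bucket.
    return static_cast<unsigned>(Value * 3.999f);
}

constexpr bool DecodeCastContactShadow(float Value)
{
    return (UnpackPerObjectBits(Value) & 1u) != 0;
}

constexpr bool DecodeHasDynamicIndirectShadowCaster(float Value)
{
    return (UnpackPerObjectBits(Value) & 2u) != 0;
}
```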

For GBuffer details (precision, ordering), see FetchLegacyGBufferInfo().
- Without Velocity or Tangent:
    - OutGBufferD(MRT4)
    - OutGBufferE(MRT5)
    - TargetSeparatedMainDirLight(MRT6)
- With Velocity:
    - TargetVelocity(MRT4)
    - OutGBufferD(MRT5)
    - OutGBufferE(MRT6)
    - TargetSeparatedMainDirLight(MRT7)
- With Tangent:
    - OutGBufferF(MRT4)
    - OutGBufferD(MRT5)
    - OutGBufferE(MRT6)
    - TargetSeparatedMainDirLight(MRT7)

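The three layouts above follow one rule: MRT0 (scene color) and MRT1-MRT3 (GBufferA-C) are fixed, an optional velocity or tangent target takes MRT4, and CustomData, PrecomputedShadowFactors and the SingleLayerWater target are appended after it. A small sketch of that rule; `FMrtLayout` and `ComputeMrtLayout` are hypothetical, and it assumes the legacy deferred layout without Strata or forward shading (where `ModifyBasePassCSPSCompilationEnvironment()` puts velocity in slot 1). It reproduces the `TargetSeparatedMainDirLight` values 5/6/7 computed in that function.

```c++
// Hypothetical sketch of the MRT slot assignment listed above.
struct FMrtLayout
{
    int Velocity = -1;               // TargetVelocity, -1 if absent
    int Tangent = -1;                // OutGBufferF (tangent), -1 if absent
    int CustomData = -1;             // OutGBufferD
    int PrecShadow = -1;             // OutGBufferE, -1 if absent (r.AllowStaticLighting = 0)
    int SeparatedMainDirLight = -1;  // SingleLayerWater target
};

constexpr FMrtLayout ComputeMrtLayout(bool bVelocity, bool bTangent, bool bPrecShadowFactor)
{
    FMrtLayout Layout;
    int Next = 4;  // MRT0 = scene color, MRT1..MRT3 = GBufferA..GBufferC
    if (bVelocity)
        Layout.Velocity = Next++;    // velocity takes precedence, as in the engine's else-if chain
    else if (bTangent)
        Layout.Tangent = Next++;
    Layout.CustomData = Next++;
    if (bPrecShadowFactor)
        Layout.PrecShadow = Next++;
    Layout.SeparatedMainDirLight = Next;
    return Layout;
}
```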

Existence conditions and shader macros for the dynamic MRTs:
- OutGBufferE (PrecomputedShadowFactors): r.AllowStaticLighting = 1
    - GBUFFER_HAS_PRECSHADOWFACTOR
    - WRITES_PRECSHADOWFACTOR_ZERO
    - WRITES_PRECSHADOWFACTOR_TO_GBUFFER
- TargetVelocity: `(IsUsingBasePassVelocity(Platform) || Layout == GBL_ForceVelocity) ? 1 : 0;` (r.VelocityOutputPass = 1)
    - With r.VelocityOutputPass = 1, velocity is output for skeletal meshes and for objects whose materials use WPO. Because distance field shadows and VSM are likely to be in use, all channels of the GBuffer velocity target are occupied.
    - GBUFFER_HAS_VELOCITY
    - WRITES_VELOCITY_TO_GBUFFER
- SingleLayerWater
    - Not written to the GBuffer by default; it is only written when the following conditions hold: `const bool bNeedsSeparateMainDirLightTexture = IsWaterDistanceFieldShadowEnabled(Parameters.Platform) || IsWaterVirtualShadowMapFilteringEnabled(Parameters.Platform);`
        - r.Water.SingleLayer.ShadersSupportDistanceFieldShadow = 1
        - r.Water.SingleLayer.ShadersSupportVSMFiltering = 1
    - `const bool bIsSingleLayerWater = Parameters.MaterialParameters.ShadingModels.HasShadingModel(MSM_SingleLayerWater);`
- Tangent: false; it is currently stored in a separate set of MRTs.
    - ~~GBUFFER_HAS_TANGENT~~


### ToonGBuffer Modifications & Data Storage
```c#
OutGBufferA: PerObjectGBufferData => can store additional parameters for Toon rendering features.
OutGBufferB: Metallic/Specular/Roughness =>
    ? / SpecularPower (controls specular brightness and mask) / ? / ?
    //ToonHairMask OffsetShadowMask/SpecularMask/SpecularValue
OutGBufferC: GBufferAO =>
    ToonAO
OutGBufferD: CustomData.xyzw =>
    ShadowColor.rgb / NoLOffset //ShadowColor can be computed in the material from the main light vector, ShadowStep and shadow feathering to produce multi-band shadows.
OutGBufferE: GBuffer.PrecomputedShadowFactors.xyzw =>
    ToonDataID / ToonOutlineDataID / OutlineMask (controls outline drawing and outline strength) / ToonObjectID (tells whether pixels belong to the same object)
TargetVelocity / OutGBufferF = velocity / tangent //outputting Velocity is not considered for now
    ? / ? / ? / ?
```

In the material editor, ToonDataID is stored in SubsurfaceColor.a, and ToonOutlineDataID is stored in CustomData1 (the pin is named ToonBufferB; since Subsurface has a CurvatureMap that needs CustomData0, CustomData1 is used here).

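OutGBufferE is listed above as GBT_Unorm_8_8_8_8, so each of these IDs ends up in an 8-bit UNORM channel. A minimal sketch of how an integer ID in 0..255 survives that round trip, assuming the material writes `ID / 255` and the lighting side reads the channel back with round-to-nearest. The function names are hypothetical, and the code is C++ rather than HLSL so it can be checked on the CPU.

```c++
#include <cstdint>

// Hypothetical helper: store a small integer ID (0..255) in an 8-bit UNORM channel.
constexpr float EncodeIdToUnorm8(std::uint8_t Id)
{
    return static_cast<float>(Id) / 255.0f;
}

// Read the channel back: clamp, scale by 255 and round to nearest.
// Plain truncation could turn a value slightly below an integer into the wrong ID.
constexpr std::uint8_t DecodeIdFromUnorm8(float Value)
{
    const float Clamped = Value < 0.0f ? 0.0f : (Value > 1.0f ? 1.0f : Value);
    return static_cast<std::uint8_t>(Clamped * 255.0f + 0.5f);
}
```

With this encoding each channel can distinguish 256 IDs; if more are needed, two channels would have to be combined.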

Blue Protocol's approach
![[蓝色协议的方案#GBuffer]]

***Additional macros added (logic in ShaderCompiler.cpp)***
- **GBUFFER_HAS_TOONDATA**


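### Modifying the GBuffer Format
- [[#ShaderMaterialDerivedHelpers.cpp中的CalculateDerivedMaterialParameters()|CalculateDerivedMaterialParameters() in ShaderMaterialDerivedHelpers.cpp]] controls whether the MRT macros in BasePassPixelShader.usf are true.
- [[#BasePassRendering.cpp中ModifyBasePassCSPSCompilationEnvironment()|ModifyBasePassCSPSCompilationEnvironment() in BasePassRendering.cpp]] controls the RT precision for Velocity and SingleLayerWater.
- [[#GBufferInfo.cpp中的FetchLegacyGBufferInfo()|FetchLegacyGBufferInfo() in GBufferInfo.cpp]] controls GBuffer precision and data packing.
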

#### BasePassRendering.cpp中ModifyBasePassCSPSCompilationEnvironment()
```c++
void ModifyBasePassCSPSCompilationEnvironment()
{
    ...
    const bool bOutputVelocity = (GBufferLayout == GBL_ForceVelocity) ||
        FVelocityRendering::BasePassCanOutputVelocity(Parameters.Platform);
    if (bOutputVelocity)
    {
        // As defined in BasePassPixelShader.usf. Also account for Strata setting velocity in slot 1 as described in FetchLegacyGBufferInfo.
        const int32 VelocityIndex = Strata::IsStrataEnabled() ? 1 : (IsForwardShadingEnabled(Parameters.Platform) ? 1 : 4);
        OutEnvironment.SetRenderTargetOutputFormat(VelocityIndex, PF_G16R16);
    }
    ...
    const bool bNeedsSeparateMainDirLightTexture = IsWaterDistanceFieldShadowEnabled(Parameters.Platform) || IsWaterVirtualShadowMapFilteringEnabled(Parameters.Platform);
    if (bIsSingleLayerWater && bNeedsSeparateMainDirLightTexture)
    {
        // See FShaderCompileUtilities::FetchGBufferParamsRuntime for the details
        const bool bHasTangent = false;
        static const auto CVar = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("r.AllowStaticLighting"));
        bool bHasPrecShadowFactor = (CVar ? (CVar->GetValueOnAnyThread() != 0) : 1);

        uint32 TargetSeparatedMainDirLight = 5;
        if (bOutputVelocity == false && bHasTangent == false)
        {
            TargetSeparatedMainDirLight = 5;
            if (bHasPrecShadowFactor)
            {
                TargetSeparatedMainDirLight = 6;
            }
        }
        else if (bOutputVelocity)
        {
            TargetSeparatedMainDirLight = 6;
            if (bHasPrecShadowFactor)
            {
                TargetSeparatedMainDirLight = 7;
            }
        }
        else if (bHasTangent)
        {
            TargetSeparatedMainDirLight = 6;
            if (bHasPrecShadowFactor)
            {
                TargetSeparatedMainDirLight = 7;
            }
        }
        OutEnvironment.SetRenderTargetOutputFormat(TargetSeparatedMainDirLight, PF_FloatR11G11B10);
    }
    ...
}
```


#### GBufferInfo.cpp中的FetchLegacyGBufferInfo()
Controls GBuffer precision and data packing.


#### ShaderMaterialDerivedHelpers.cpp中的CalculateDerivedMaterialParameters()
```c++
    else if (Mat.IS_BASE_PASS)
    {
        Dst.PIXELSHADEROUTPUT_BASEPASS = 1;
        if (Dst.USES_GBUFFER)
        {
            Dst.PIXELSHADEROUTPUT_MRT0 = (!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || Dst.NEEDS_BASEPASS_VERTEX_FOGGING || Mat.USES_EMISSIVE_COLOR || SrcGlobal.ALLOW_STATIC_LIGHTING || Mat.MATERIAL_SHADINGMODEL_SINGLELAYERWATER);
            Dst.PIXELSHADEROUTPUT_MRT1 = ((!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || !Mat.MATERIAL_SHADINGMODEL_UNLIT));
            Dst.PIXELSHADEROUTPUT_MRT2 = ((!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || !Mat.MATERIAL_SHADINGMODEL_UNLIT));
            Dst.PIXELSHADEROUTPUT_MRT3 = ((!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || !Mat.MATERIAL_SHADINGMODEL_UNLIT));
            if (SrcGlobal.GBUFFER_HAS_VELOCITY || SrcGlobal.GBUFFER_HAS_TANGENT)
            {
                Dst.PIXELSHADEROUTPUT_MRT4 = Dst.WRITES_VELOCITY_TO_GBUFFER || SrcGlobal.GBUFFER_HAS_TANGENT;
                Dst.PIXELSHADEROUTPUT_MRT5 = (!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || Dst.WRITES_CUSTOMDATA_TO_GBUFFER);
                Dst.PIXELSHADEROUTPUT_MRT6 = (Dst.GBUFFER_HAS_PRECSHADOWFACTOR && (!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || (Dst.WRITES_PRECSHADOWFACTOR_TO_GBUFFER && !Mat.MATERIAL_SHADINGMODEL_UNLIT)));
            }
            else
            {
                Dst.PIXELSHADEROUTPUT_MRT4 = (!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || Dst.WRITES_CUSTOMDATA_TO_GBUFFER);
                Dst.PIXELSHADEROUTPUT_MRT5 = (Dst.GBUFFER_HAS_PRECSHADOWFACTOR && (!SrcGlobal.SELECTIVE_BASEPASS_OUTPUTS || (Dst.WRITES_PRECSHADOWFACTOR_TO_GBUFFER && !Mat.MATERIAL_SHADINGMODEL_UNLIT)));
            }
        }
        else
        {
            Dst.PIXELSHADEROUTPUT_MRT0 = true;
            // we also need MRT for thin translucency due to dual blending if we are not on the fallback path
            Dst.PIXELSHADEROUTPUT_MRT1 = (Dst.WRITES_VELOCITY_TO_GBUFFER || (Mat.DUAL_SOURCE_COLOR_BLENDING_ENABLED && Dst.MATERIAL_WORKS_WITH_DUAL_SOURCE_COLOR_BLENDING));
        }
    }
}
```

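The MRT4-MRT6 part of this function can be read as a pure mapping from shader defines to three booleans. A hypothetical standalone mirror for experimenting with combinations; the struct and field names are illustrative, and each field's comment names the define it stands for.

```c++
// Hypothetical mirror of the MRT4..MRT6 branch of CalculateDerivedMaterialParameters().
struct FUpperMrtInputs
{
    bool bSelectiveBasePassOutputs;  // SELECTIVE_BASEPASS_OUTPUTS
    bool bGBufferHasVelocity;        // GBUFFER_HAS_VELOCITY
    bool bGBufferHasTangent;         // GBUFFER_HAS_TANGENT
    bool bWritesVelocity;            // WRITES_VELOCITY_TO_GBUFFER
    bool bWritesCustomData;          // WRITES_CUSTOMDATA_TO_GBUFFER
    bool bHasPrecShadowFactor;       // GBUFFER_HAS_PRECSHADOWFACTOR
    bool bWritesPrecShadowFactor;    // WRITES_PRECSHADOWFACTOR_TO_GBUFFER
    bool bUnlit;                     // MATERIAL_SHADINGMODEL_UNLIT
};

struct FUpperMrtOutputs
{
    bool bMRT4;
    bool bMRT5;
    bool bMRT6;
};

constexpr FUpperMrtOutputs ComputeUpperMrts(const FUpperMrtInputs& In)
{
    // With selective outputs disabled, every existing target is written.
    const bool bCustomData = !In.bSelectiveBasePassOutputs || In.bWritesCustomData;
    const bool bPrecShadow = In.bHasPrecShadowFactor &&
        (!In.bSelectiveBasePassOutputs || (In.bWritesPrecShadowFactor && !In.bUnlit));

    if (In.bGBufferHasVelocity || In.bGBufferHasTangent)
    {
        // Velocity/tangent occupies MRT4; CustomData and PrecShadow shift up by one.
        return {In.bWritesVelocity || In.bGBufferHasTangent, bCustomData, bPrecShadow};
    }
    return {bCustomData, bPrecShadow, false};
}
```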

This lives in FShaderCompileUtilities::ApplyDerivedDefines(). In the new version, the data the loop iterates over comes from FetchLegacyGBufferInfo() in GBufferInfo.cpp.
```c++
#if 1
    static bool bTestNewVersion = true;
    if (bTestNewVersion)
    {
        //if (DerivedDefines.USES_GBUFFER)
        {
            for (int32 Iter = 0; Iter < FGBufferInfo::MaxTargets; Iter++)
            {
                if (bTargetUsage[Iter])
                {
                    FString TargetName = FString::Printf(TEXT("PIXELSHADEROUTPUT_MRT%d"), Iter);
                    OutEnvironment.SetDefine(TargetName.GetCharArray().GetData(), TEXT("1"));
                }
            }
        }
    }
    else
    {
        // This uses the legacy logic from CalculateDerivedMaterialParameters(); Just keeping it around momentarily for testing during the transition.
        SET_COMPILE_BOOL_IF_TRUE(PIXELSHADEROUTPUT_MRT0)
        SET_COMPILE_BOOL_IF_TRUE(PIXELSHADEROUTPUT_MRT1)
        SET_COMPILE_BOOL_IF_TRUE(PIXELSHADEROUTPUT_MRT2)
        SET_COMPILE_BOOL_IF_TRUE(PIXELSHADEROUTPUT_MRT3)
        SET_COMPILE_BOOL_IF_TRUE(PIXELSHADEROUTPUT_MRT4)
        SET_COMPILE_BOOL_IF_TRUE(PIXELSHADEROUTPUT_MRT5)
        SET_COMPILE_BOOL_IF_TRUE(PIXELSHADEROUTPUT_MRT6)
    }
#endif
```

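The new-version branch turns a per-target usage array into `PIXELSHADEROUTPUT_MRT<N>` defines. A minimal standalone sketch of that loop, with a `std::map` standing in for `OutEnvironment.SetDefine()` and a hypothetical `MaxTargets` of 8 (the real value is whatever `FGBufferInfo::MaxTargets` is; 8 here is an assumption):

```c++
#include <array>
#include <cassert>
#include <cstdio>
#include <map>
#include <string>

// Hypothetical stand-in for FGBufferInfo::MaxTargets (the real value may differ).
constexpr int MaxTargets = 8;

// Stand-in for the defines collected by OutEnvironment.SetDefine().
using FDefineMap = std::map<std::string, std::string>;

FDefineMap BuildMrtDefines(const std::array<bool, MaxTargets>& bTargetUsage)
{
    FDefineMap Defines;
    for (int Iter = 0; Iter < MaxTargets; ++Iter)
    {
        if (!bTargetUsage[Iter])
        {
            continue;  // unused targets get no define at all
        }
        char TargetName[32];
        const int Written = std::snprintf(TargetName, sizeof(TargetName), "PIXELSHADEROUTPUT_MRT%d", Iter);
        assert(Written > 0 && Written < static_cast<int>(sizeof(TargetName)));
        Defines[TargetName] = "1";
    }
    return Defines;
}
```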

### MaterialTemplate.ush
MaterialTemplate.ush defines many template functions; their actual bodies are filled in by **GetMaterialShaderCode()** in HLSLMaterialTranslator.cpp. These functions are ultimately called from BasePassPixelShader.usf.

`bool bEnableExecutionFlow` indicates whether the new material HLSL generator is used; it is off by default (the related CVar below defaults to 0).
```c++
static TAutoConsoleVariable<int32> CVarMaterialEnableNewHLSLGenerator(
    TEXT("r.MaterialEnableNewHLSLGenerator"),
    0,
    TEXT("Enables the new (WIP) material HLSL generator.\n")
    TEXT("0 - Don't allow\n")
    TEXT("1 - Allow if enabled by material\n")
    TEXT("2 - Force all materials to use new generator\n"),
    ECVF_RenderThreadSafe | ECVF_ReadOnly);
```
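The help text of this CVar describes three modes. A hypothetical reading of it as a function; `ShouldUseNewHLSLGenerator` and `bMaterialOptsIn` are illustrative names, how a material opts in is not shown here, and treating unknown values like 0 is an assumption.

```c++
// Hypothetical interpretation of the r.MaterialEnableNewHLSLGenerator help text:
// 0 = never, 1 = only for materials that opt in, 2 = force for all materials.
constexpr bool ShouldUseNewHLSLGenerator(int CVarValue, bool bMaterialOptsIn)
{
    switch (CVarValue)
    {
    case 1:
        return bMaterialOptsIn;
    case 2:
        return true;
    default:
        return false;  // 0, and (by assumption) any unknown value
    }
}
```

Because the CVar is registered with `ECVF_ReadOnly`, it cannot be changed from the console at runtime and is normally set in a config file or on the command line.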


The r.MaterialEnableNewHLSLGenerator CVar relates to the new material HLSL generator; the corresponding generation code is **MaterialEmitHLSL()** => which calls **GenerateMaterialTemplateHLSL()**.

`bCompileForComputeShader = Material->IsLightFunction();`
GetPerInstanceCustomDataX comes in Vertex and Pixel versions.


#### FMaterialAttributes
After the `/** Material declarations */` marker in MaterialTemplate.ush, the corresponding FMaterialAttributes struct is generated; the result can be inspected in the material editor's HLSL view. It is related to:
- MaterialAttributeDefinitionMap.cpp: FMaterialAttributeDefinitionMap::InitializeAttributeMap() defines the attributes.
- HLSLMaterialTranslator.cpp: the `for (const FGuid& AttributeID : OrderedVisibleAttributes)` loop in GetMaterialShaderCode() generates the attribute struct and the attribute getter functions.


#### DerivativeAutogen.GenerateUsedFunctions()
```c++
{
    FString DerivativeHelpers = DerivativeAutogen.GenerateUsedFunctions(*this);
    FString DerivativeHelpersAndResources = DerivativeHelpers + ResourcesString;
    //LazyPrintf.PushParam(*ResourcesString);
    LazyPrintf.PushParam(*DerivativeHelpersAndResources);
}
```


#### GetMaterialEmissiveForCS() and Other Functions
```c++
if (bCompileForComputeShader)
{
    LazyPrintf.PushParam(*GenerateFunctionCode(CompiledMP_EmissiveColorCS, BaseDerivativeVariation));
}
else
{
    LazyPrintf.PushParam(TEXT("return 0"));
}

{
    FLinearColor Extinction = Material->GetTranslucentMultipleScatteringExtinction();
    LazyPrintf.PushParam(*FString::Printf(TEXT("return MaterialFloat3(%.5f, %.5f, %.5f)"), Extinction.R, Extinction.G, Extinction.B));
}
LazyPrintf.PushParam(*FString::Printf(TEXT("return %.5f"), Material->GetOpacityMaskClipValue()));
{
    const FDisplacementScaling DisplacementScaling = Material->GetDisplacementScaling();
    LazyPrintf.PushParam(*FString::Printf(TEXT("return %.5f"), FMath::Max(0.0f, DisplacementScaling.Magnitude)));
    LazyPrintf.PushParam(*FString::Printf(TEXT("return %.5f"), FMath::Clamp(DisplacementScaling.Center, 0.0f, 1.0f)));
}

LazyPrintf.PushParam(!bEnableExecutionFlow ? *GenerateFunctionCode(MP_WorldPositionOffset, BaseDerivativeVariation) : TEXT("return Parameters.MaterialAttributes.WorldPositionOffset"));
LazyPrintf.PushParam(!bEnableExecutionFlow ? *GenerateFunctionCode(CompiledMP_PrevWorldPositionOffset, BaseDerivativeVariation) : TEXT("return 0.0f"));
LazyPrintf.PushParam(!bEnableExecutionFlow ? *GenerateFunctionCode(MP_CustomData0, BaseDerivativeVariation) : TEXT("return 0.0f"));
LazyPrintf.PushParam(!bEnableExecutionFlow ? *GenerateFunctionCode(MP_CustomData1, BaseDerivativeVariation) : TEXT("return 0.0f"));
```
`%.5f`: prints a floating-point value in fixed notation with exactly 5 digits after the decimal point; the value is rounded (not truncated) to 5 places. For example, 5.0/2 printed with "%.5f" gives 2.50000. (With the integer expression 5/2 the result would be the int 2, which must not be passed to `%f`.)

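These `FString::Printf(TEXT("return %.5f"), ...)` calls bake material constants (opacity mask clip value, displacement scaling, extinction) into the generated HLSL as literals. `FString::Printf` uses printf-style format semantics, so the behaviour of `%.5f` can be checked with the standard `std::snprintf`. A minimal sketch; the helper name `FormatFixed5` is hypothetical.

```c++
#include <cassert>
#include <cstdio>
#include <string>

// Formats a value the way "%.5f" does: fixed notation, five digits after the
// decimal point, rounded to nearest.
std::string FormatFixed5(double Value)
{
    char Buffer[64];
    const int Written = std::snprintf(Buffer, sizeof(Buffer), "%.5f", Value);
    assert(Written > 0 && Written < static_cast<int>(sizeof(Buffer)));
    return std::string(Buffer, static_cast<std::size_t>(Written));
}
```

For example, an opacity mask clip value of 0.3333 would be emitted as `return 0.33330`; any precision beyond five decimal places is lost in the generated shader.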

#### MaterialCustomizedUVs & CustomInterpolators
- `for (uint32 CustomUVIndex = 0; CustomUVIndex < NumUserTexCoords; CustomUVIndex++)`
- `for (UMaterialExpressionVertexInterpolator* Interpolator : CustomVertexInterpolators)`


### Notes on Adding ToonDataAssetID and ToonOutlineDataAssetID
1. FMaterialRenderProxy::UpdateDeferredCachedUniformExpressions()
2. FMaterialRenderProxy::EvaluateUniformExpressions()
3. FUniformExpressionSet::FillUniformBuffer()
4. EvaluatePreshader()
5. EvaluateParameter()
6. Context.MaterialRenderProxy->GetParameterValue()

As the call chain shows, the key data lives in the UniformExpressionSet; the ParameterIndex here is read from the preshader data via `EvaluateParameter(Stack, UniformExpressionSet, ReadPreshaderValue<uint16>(Data), Context);`.

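The call chain ends in `EvaluateParameter()`, whose excerpt below first asks the render proxy (for example a material instance) for an override and only otherwise uses what the material provides. A minimal constexpr sketch of that lookup order (C++17); `FScalarParam`, `FindScalar` and `EvaluateScalarParameter` are hypothetical, and falling back to 0 for a missing parameter is an assumption rather than engine behaviour.

```c++
#include <cstddef>
#include <optional>
#include <string_view>

// Hypothetical stand-in for a named scalar material parameter.
struct FScalarParam
{
    std::string_view Name;
    float Value;
};

constexpr std::optional<float> FindScalar(const FScalarParam* Params, std::size_t Count, std::string_view Name)
{
    for (std::size_t Index = 0; Index < Count; ++Index)
    {
        if (Params[Index].Name == Name)
        {
            return Params[Index].Value;
        }
    }
    return std::nullopt;
}

// Mirrors the order in the excerpt: the proxy may override first,
// otherwise the material's default value is used.
constexpr float EvaluateScalarParameter(const FScalarParam* ProxyOverrides, std::size_t NumOverrides,
                                        const FScalarParam* Defaults, std::size_t NumDefaults,
                                        std::string_view Name)
{
    if (ProxyOverrides != nullptr)
    {
        if (const std::optional<float> Override = FindScalar(ProxyOverrides, NumOverrides, Name))
        {
            return *Override;
        }
    }
    return FindScalar(Defaults, NumDefaults, Name).value_or(0.0f);
}
```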

```c++
const FMaterialNumericParameterInfo& Parameter = UniformExpressionSet->GetNumericParameter(ParameterIndex);
bool bFoundParameter = false;

// First allow proxy the chance to override parameter
if (Context.MaterialRenderProxy)
{
FMaterialParameterValue ParameterValue;
if (Context.MaterialRenderProxy->GetParameterValue(Parameter.ParameterType, Parameter.ParameterInfo, ParameterValue, Context))
{
Stack.PushValue(ParameterValue.AsShaderValue());
bFoundParameter = true;
}
}

bool FMaterialInstanceResource::GetParameterValue(EMaterialParameterType Type, const FHashedMaterialParameterInfo& ParameterInfo, FMaterialParameterValue& OutValue, const FMaterialRenderContext& Context) const
{
checkSlow(IsInParallelRenderingThread());

bool bResult = false;

// Check for hard-coded parameters
if (Type == EMaterialParameterType::Scalar && ParameterInfo.Name == GetSubsurfaceProfileParameterName())
{
check(ParameterInfo.Association == EMaterialParameterAssociation::GlobalParameter);
const USubsurfaceProfile* MySubsurfaceProfileRT = GetSubsurfaceProfileRT();