Init


@@ -0,0 +1,10 @@

---
title: Untitled
date: 2025-03-20 18:07:58
excerpt:
tags:
rating: ⭐
---

# Preface

- [UE5 MeshShader: A First Look](https://zhuanlan.zhihu.com/p/30320015352)
- [UE5 MeshShader: A Complete Hands-On Walkthrough](https://zhuanlan.zhihu.com/p/30893647975)

03-UnrealEngine/Rendering/RenderingPipeline/GPUScene.md

@@ -0,0 +1,77 @@

---
title: Untitled
date: 2024-08-15 12:02:42
excerpt:
tags:
rating: ⭐
---

# Preface

- [UE5 Rendering: GPUScene and Merged Drawing](https://zhuanlan.zhihu.com/p/614758211)

# Related Types

- Primitive:
	- C++ data type: _FPrimitiveUniformShaderParameters_ (PrimitiveUniformShaderParameters.h)
	- Shader data: FPrimitiveSceneData (SceneData.ush)
- Instance:
	- C++ data type: FInstanceSceneShaderData (InstanceUniformShaderParameters.h)
	- Shader data: FInstanceSceneData (SceneData.ush)
- Payload:
	- C++ data types: FPackedBatch, FPackedItem (InstanceCullingLoadBalancer.h)
	- Shader data types: FPackedInstanceBatch, FPackedInstanceBatchItem (InstanceCullingLoadBalancer.ush)


# DeferredShadingRenderer.cpp

```c++
Scene->GPUScene.Update(GraphBuilder, GetSceneUniforms(), *Scene, ExternalAccessQueue);

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)
{
	FViewInfo& View = Views[ViewIndex];
	RDG_GPU_MASK_SCOPE(GraphBuilder, View.GPUMask);

	Scene->GPUScene.UploadDynamicPrimitiveShaderDataForView(GraphBuilder, *Scene, View, ExternalAccessQueue);

	Scene->GPUScene.DebugRender(GraphBuilder, *Scene, GetSceneUniforms(), View);
}
```


## GPUScene Data Update

```c++
// Call hierarchy (nesting shows caller -> callee; not compilable code)
void FDeferredShadingSceneRenderer::Render(FRDGBuilder& GraphBuilder)
{
	void FGPUScene::Update(FRDGBuilder& GraphBuilder, FScene& Scene, FRDGExternalAccessQueue& ExternalAccessQueue)
	{
		void FGPUScene::UpdateInternal(FRDGBuilder& GraphBuilder, FScene& Scene, FRDGExternalAccessQueue& ExternalAccessQueue)
	}
}
```


### 2.2、更新PrimitiveData

|

||||

|

||||

|

||||

|

||||

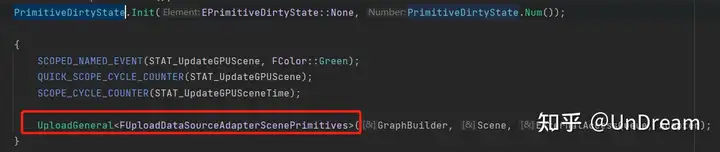

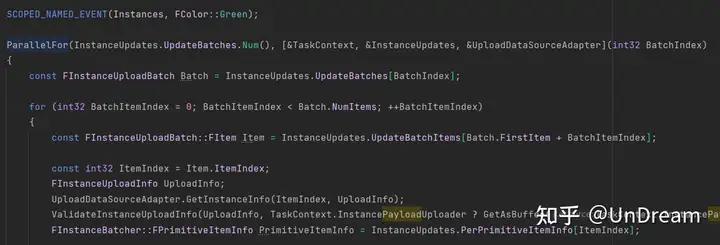

接下来,对标记为dirty的Primitive进行更新,更新逻辑为找到该Primitive在PrimitiveData Buffer里对应offset位置,对其进行更新。使用模板FUploadDataSourceAdapterScenePrimitives调用UploadGeneral()。
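A minimal CPU-side sketch of the "locate the offset, then overwrite in place" step, assuming every primitive occupies a fixed number of float4 slots in the PrimitiveData buffer. `kFloat4sPerPrimitive`, `PrimitiveDataOffset`, and `UpdateDirtyPrimitives` are hypothetical names chosen for illustration; the real stride and upload path live in UE's GPUScene code.

```c++
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical layout: every primitive occupies a fixed number of float4 slots.
using Float4 = std::array<float, 4>;
constexpr std::size_t kFloat4sPerPrimitive = 4; // assumed stride, not UE's real value

// Offset (in float4 units) of a primitive's data inside the PrimitiveData buffer.
std::size_t PrimitiveDataOffset(uint32_t PrimitiveId)
{
    return static_cast<std::size_t>(PrimitiveId) * kFloat4sPerPrimitive;
}

// Overwrite only the dirty primitives; entries of clean primitives are left untouched.
void UpdateDirtyPrimitives(std::vector<Float4>& PrimitiveData,
                           const std::vector<uint32_t>& DirtyPrimitiveIds,
                           const std::vector<std::array<Float4, kFloat4sPerPrimitive>>& NewData)
{
    assert(DirtyPrimitiveIds.size() == NewData.size());
    for (std::size_t i = 0; i < DirtyPrimitiveIds.size(); ++i)
    {
        const std::size_t Offset = PrimitiveDataOffset(DirtyPrimitiveIds[i]);
        assert(Offset + kFloat4sPerPrimitive <= PrimitiveData.size());
        for (std::size_t j = 0; j < kFloat4sPerPrimitive; ++j)
        {
            PrimitiveData[Offset + j] = NewData[i][j];
        }
    }
}
```

Because each primitive's slot is addressable from its ID alone, an update never has to touch or shift the data of other primitives.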

|

||||

|

||||

|

||||

|

||||

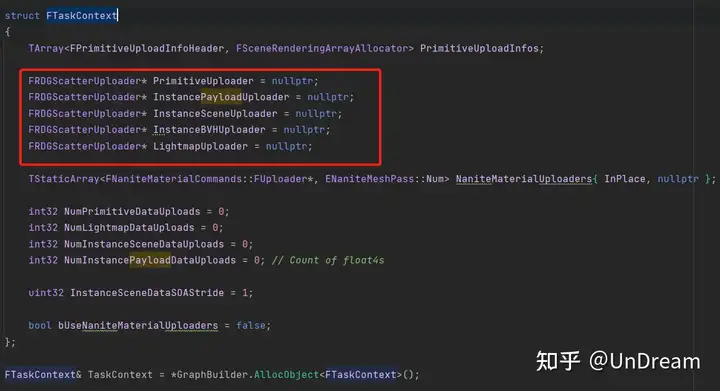

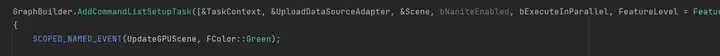

初始化5类Buffer的上传任务TaskContext

|

||||

|

||||

后面代码都是在对这个TaskContext进行初始化,然后启动任务,进行数据的上传。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

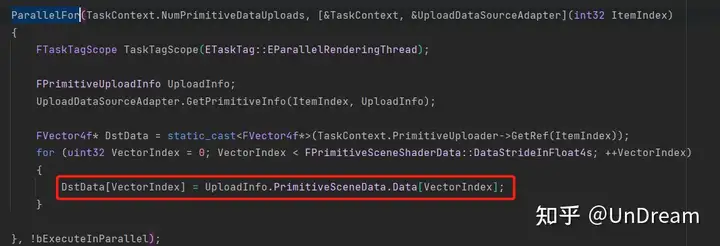

2.2.1、并行更新Primitive数据,将需要更新的PrimitiveCopy到Upload Buffer里,后续通过ComputeShader进行显存的上传然后更新到目标Buffer里;

|

||||

|

||||

|

||||

|

||||

2.2.2、同理InstanceSceneData以及InstancePayloadData数据的处理。

|

||||

|

||||

2.2.3、同理InstanceBVHUploader,LightmapUploader。

|

||||

|

||||

2.2.4、最后都会调用每个Uploader的***End()方法进行GPU显存的更新***。

|

||||

|

||||

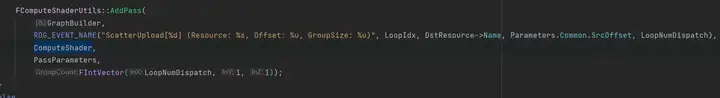

总结:数据的上传都一样,将需要更新的数据Copy到对应的UploadBuffer对应的位置里,然后通过对应的ComputeShader进行更新到目标Buffer,在函数FRDGAsyncScatterUploadBuffer::End()里实现,截图:
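To make the pattern concrete, here is a CPU-only simulation of a scatter upload: a staging ("upload") buffer carries only the changed elements plus a destination index for each, and a scatter step, standing in for the compute shader that `FRDGAsyncScatterUploadBuffer::End()` dispatches, writes every element to its slot in the destination buffer. `ScatterUploadBuffer` and its methods are hypothetical; they model the idea, not UE's class.

```c++
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical CPU model of a scatter upload (not UE's FRDGAsyncScatterUploadBuffer).
template <typename T>
class ScatterUploadBuffer
{
public:
    // CPU side: stage one element and remember where it must land.
    void Add(uint32_t DestIndex, const T& Value)
    {
        ScatterIndices.push_back(DestIndex);
        UploadData.push_back(Value);
    }

    // "GPU" side: one loop iteration per staged element, like one compute thread each.
    void End(std::vector<T>& Destination)
    {
        for (std::size_t ThreadId = 0; ThreadId < UploadData.size(); ++ThreadId)
        {
            const uint32_t DestIndex = ScatterIndices[ThreadId];
            assert(DestIndex < Destination.size());
            Destination[DestIndex] = UploadData[ThreadId];
        }
        ScatterIndices.clear();
        UploadData.clear();
    }

    std::size_t NumStaged() const { return UploadData.size(); }

private:
    std::vector<uint32_t> ScatterIndices; // destination slot of each staged element
    std::vector<T> UploadData;            // packed payload: only the changed elements
};
```

The point of the design is that the staged data scales with the number of changed elements rather than with the size of the destination buffer.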


@@ -0,0 +1,33 @@

---
title: Untitled
date: 2024-10-16 14:39:40
excerpt:
tags:
rating: ⭐
---

# Preface

# Stencil

BasePass: writes the stencil => CopyStencilToLightingChannels => ClearStencil (SceneDepthZ): clears the stencil stored in the depth buffer

## BasePass

The BasePass computes the mask bits for LIGHTING_CHANNELS, DISTANCE_FIELD_REPRESENTATION, and decals, and writes them into the stencil of the depth buffer.


```c++
template<bool bDepthTest, ECompareFunction CompareFunction>
void SetDepthStencilStateForBasePass_Internal(FMeshPassProcessorRenderState& InDrawRenderState, ERHIFeatureLevel::Type FeatureLevel)
{
	const static bool bStrataDufferPassEnabled = Strata::IsStrataEnabled() && Strata::IsDBufferPassEnabled(GShaderPlatformForFeatureLevel[FeatureLevel]);
	if (bStrataDufferPassEnabled)
	{
		SetDepthStencilStateForBasePass_Internal<bDepthTest, CompareFunction,
			GET_STENCIL_BIT_MASK(STRATA_RECEIVE_DBUFFER_NORMAL, 1) |
			GET_STENCIL_BIT_MASK(STRATA_RECEIVE_DBUFFER_DIFFUSE, 1) |
			GET_STENCIL_BIT_MASK(STRATA_RECEIVE_DBUFFER_ROUGHNESS, 1) |
			GET_STENCIL_BIT_MASK(DISTANCE_FIELD_REPRESENTATION, 1) |
			STENCIL_LIGHTING_CHANNELS_MASK(0x7)>(InDrawRenderState);
	}
	else
	{
		SetDepthStencilStateForBasePass_Internal<bDepthTest, CompareFunction,
			GET_STENCIL_BIT_MASK(RECEIVE_DECAL, 1) |
			GET_STENCIL_BIT_MASK(DISTANCE_FIELD_REPRESENTATION, 1) |
			STENCIL_LIGHTING_CHANNELS_MASK(0x7)>(InDrawRenderState);
	}
}
```
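The last template argument above is a bitmask built by shifting flag values into fixed bit positions of the 8-bit stencil. The sketch below shows that packing, plus the reverse extraction a pass like CopyStencilToLightingChannels needs in order to pull the 3-bit lighting-channel mask back out. The bit positions (`kDistanceFieldBit`, `kReceiveDecalBit`, `kLightingChannelsShift`) and helper names are assumptions chosen for illustration; check the engine's `STENCIL_*_BIT_ID` defines for the real layout.

```c++
#include <cassert>
#include <cstdint>

// Assumed bit layout for illustration only; the engine defines its own STENCIL_*_BIT_ID values.
constexpr uint8_t kDistanceFieldBit      = 2; // 1 bit
constexpr uint8_t kReceiveDecalBit       = 3; // 1 bit
constexpr uint8_t kLightingChannelsShift = 4; // 3 bits: channels 0..2

// Place a single-bit flag at its position (same idea as GET_STENCIL_BIT_MASK).
constexpr uint8_t StencilBit(uint8_t BitId, bool Value)
{
    return static_cast<uint8_t>((Value ? 1u : 0u) << BitId);
}

// Place the 3-bit lighting-channel mask (same idea as STENCIL_LIGHTING_CHANNELS_MASK).
constexpr uint8_t StencilLightingChannels(uint8_t Channels)
{
    return static_cast<uint8_t>((Channels & 0x7u) << kLightingChannelsShift);
}

// Mask covering every bit the non-Strata base pass variant above touches.
constexpr uint8_t kBasePassStencilMask = static_cast<uint8_t>(
    StencilBit(kReceiveDecalBit, true) | StencilBit(kDistanceFieldBit, true) | StencilLightingChannels(0x7));

// Reverse step: recover the lighting channels from a stencil value.
constexpr uint8_t LightingChannelsFromStencil(uint8_t Stencil)
{
    return static_cast<uint8_t>((Stencil >> kLightingChannelsShift) & 0x7u);
}
```

Packing several small flags into one stencil byte is what lets a single depth-stencil attachment carry decal, distance-field, and lighting-channel information at once.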


03-UnrealEngine/Rendering/RenderingPipeline/Lighting/Lighting.md

@@ -0,0 +1,816 @@

---
title: Untitled
date: 2025-02-11 11:30:34
excerpt:
tags:
rating: ⭐
---

# FSortedLightSetSceneInfo

Definitions related to the sorted light set:


```c++
/** Data for a simple dynamic light. */
class FSimpleLightEntry
{
public:
	FVector3f Color;
	float Radius;
	float Exponent;
	float InverseExposureBlend = 0.0f;
	float VolumetricScatteringIntensity;
	bool bAffectTranslucency;
};

struct FSortedLightSceneInfo
{
	union
	{
		struct
		{
			// Note: the order of these members controls the light sort order!
			// Currently bHandledByLumen is the MSB and LightType is LSB
			/** The type of light. */
			uint32 LightType : LightType_NumBits;
			/** Whether the light has a texture profile. */
			uint32 bTextureProfile : 1;
			/** Whether the light uses a light function. */
			uint32 bLightFunction : 1;
			/** Whether the light uses lighting channels. */
			uint32 bUsesLightingChannels : 1;
			/** Whether the light casts shadows. */
			uint32 bShadowed : 1;
			/** Whether the light is NOT a simple light - they always support tiled/clustered but may want to be selected separately. */
			uint32 bIsNotSimpleLight : 1;
			/* We want to sort the lights that write into the packed shadow mask (when enabled) to the front of the list so we don't waste slots in the packed shadow mask. */
			uint32 bDoesNotWriteIntoPackedShadowMask : 1;
			/**
			 * True if the light doesn't support clustered deferred, logic is inverted so that lights that DO support clustered deferred will sort first in list
			 * Super-set of lights supporting tiled, so the tiled lights will end up in the first part of this range.
			 */
			uint32 bClusteredDeferredNotSupported : 1;
			/** Whether the light should be handled by Lumen's Final Gather, these will be sorted to the end so they can be skipped */
			uint32 bHandledByLumen : 1;
		} Fields;
		/** Sort key bits packed into an integer. */
		int32 Packed;
	} SortKey;

	const FLightSceneInfo* LightSceneInfo;
	int32 SimpleLightIndex;

	/** Initialization constructor. */
	explicit FSortedLightSceneInfo(const FLightSceneInfo* InLightSceneInfo)
		: LightSceneInfo(InLightSceneInfo),
		SimpleLightIndex(-1)
	{
		SortKey.Packed = 0;
		SortKey.Fields.bIsNotSimpleLight = 1;
	}
	explicit FSortedLightSceneInfo(int32 InSimpleLightIndex)
		: LightSceneInfo(nullptr),
		SimpleLightIndex(InSimpleLightIndex)
	{
		SortKey.Packed = 0;
		SortKey.Fields.bIsNotSimpleLight = 0;
	}
};

/**
 * Stores info about sorted lights and ranges.
 * The sort-key in FSortedLightSceneInfo gives rise to the following order:
 *  [SimpleLights,Clustered,UnbatchedLights,LumenLights]
 * Note that some shadowed lights can be included in the clustered pass when virtual shadow maps and one pass projection are used.
 */
struct FSortedLightSetSceneInfo
{
	int32 SimpleLightsEnd;
	int32 ClusteredSupportedEnd;

	/** First light with shadow map or */
	int32 UnbatchedLightStart;

	int32 LumenLightStart;

	FSimpleLightArray SimpleLights;
	TArray<FSortedLightSceneInfo, SceneRenderingAllocator> SortedLights;
};
```
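The comment "the order of these members controls the light sort order" works because the fields are laid out from LSB (`LightType`) to MSB (`bHandledByLumen`): sorting by the packed integer therefore sorts by `bHandledByLumen` first, then `bClusteredDeferredNotSupported`, and so on down, which yields the `[SimpleLights, Clustered, UnbatchedLights, LumenLights]` order. The sketch below rebuilds such a key with explicit shifts (bit-field layout is implementation-defined, so it avoids relying on it) and derives range boundaries similar to `SimpleLightsEnd`, `ClusteredSupportedEnd`, and `LumenLightStart`. The 2-bit light-type width, the simplified struct, and all names are assumptions.

```c++
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <initializer_list>
#include <vector>

// Simplified stand-in for FSortedLightSceneInfo::SortKey.Fields (hypothetical; LSB first).
struct LightKeyFields
{
    uint32_t LightType = 0;                         // bits 0-1 (assumes LightType_NumBits == 2)
    bool bTextureProfile = false;                   // bit 2
    bool bLightFunction = false;                    // bit 3
    bool bUsesLightingChannels = false;             // bit 4
    bool bShadowed = false;                         // bit 5
    bool bIsNotSimpleLight = false;                 // bit 6
    bool bDoesNotWriteIntoPackedShadowMask = false; // bit 7
    bool bClusteredDeferredNotSupported = false;    // bit 8
    bool bHandledByLumen = false;                   // bit 9 (MSB)
};

// Pack with explicit shifts instead of relying on compiler bit-field layout.
uint32_t PackKey(const LightKeyFields& F)
{
    uint32_t Key = F.LightType & 0x3u;
    uint32_t Shift = 2;
    for (bool Bit : {F.bTextureProfile, F.bLightFunction, F.bUsesLightingChannels, F.bShadowed,
                     F.bIsNotSimpleLight, F.bDoesNotWriteIntoPackedShadowMask,
                     F.bClusteredDeferredNotSupported, F.bHandledByLumen})
    {
        Key |= (Bit ? 1u : 0u) << Shift;
        ++Shift;
    }
    return Key;
}

struct LightRanges
{
    std::size_t SimpleLightsEnd = 0;
    std::size_t ClusteredSupportedEnd = 0;
    std::size_t LumenLightStart = 0;
};

// Sort ascending by packed key; the high bits then partition the array into
// [simple | clustered-supported | unbatched | Lumen].
// Assumes simple lights only ever set bits below bit 6.
LightRanges SortAndFindRanges(std::vector<LightKeyFields>& Lights)
{
    std::sort(Lights.begin(), Lights.end(),
              [](const LightKeyFields& A, const LightKeyFields& B) { return PackKey(A) < PackKey(B); });

    auto FirstWhere = [&Lights](auto Pred) {
        return static_cast<std::size_t>(std::find_if(Lights.begin(), Lights.end(), Pred) - Lights.begin());
    };

    LightRanges R;
    R.SimpleLightsEnd = FirstWhere([](const LightKeyFields& L) { return L.bIsNotSimpleLight; });
    R.ClusteredSupportedEnd = FirstWhere([](const LightKeyFields& L) {
        return L.bClusteredDeferredNotSupported || L.bHandledByLumen;
    });
    R.LumenLightStart = FirstWhere([](const LightKeyFields& L) { return L.bHandledByLumen; });
    return R;
}
```

Because each category is a contiguous range after one sort, later passes can iterate a single index interval instead of re-filtering the whole light list.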


## Gathering the Sorted Light Set

Light assignment in UE is controlled by the `bComputeLightGrid` variable inside `FDeferredShadingSceneRenderer::Render`, which is set as follows:


```c++
void FDeferredShadingSceneRenderer::Render(FRHICommandListImmediate& RHICmdList) {
	...
	bool bComputeLightGrid = false;

	if (RendererOutput == ERendererOutput::FinalSceneColor)
	{
		if (bUseVirtualTexturing)
		{
			// Note, should happen after the GPU-Scene update to ensure rendering to runtime virtual textures is using the correctly updated scene
			FVirtualTextureSystem::Get().EndUpdate(GraphBuilder, MoveTemp(VirtualTextureUpdater), FeatureLevel);
		}

#if RHI_RAYTRACING
		GatherRayTracingWorldInstancesForView(GraphBuilder, ReferenceView, RayTracingScene, InitViewTaskDatas.RayTracingRelevantPrimitives);
#endif // RHI_RAYTRACING

		bool bAnyLumenEnabled = false;

		{
			if (bUseGBuffer)
			{
				bComputeLightGrid = bRenderDeferredLighting;
			}
			else
			{
				bComputeLightGrid = ViewFamily.EngineShowFlags.Lighting;
			}

			for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)
			{
				FViewInfo& View = Views[ViewIndex];
				bAnyLumenEnabled = bAnyLumenEnabled
					|| GetViewPipelineState(View).DiffuseIndirectMethod == EDiffuseIndirectMethod::Lumen
					|| GetViewPipelineState(View).ReflectionsMethod == EReflectionsMethod::Lumen;
			}

			bComputeLightGrid |= (
				ShouldRenderVolumetricFog() ||
				VolumetricCloudWantsToSampleLocalLights(Scene, ViewFamily.EngineShowFlags) ||
				ViewFamily.ViewMode != VMI_Lit ||
				bAnyLumenEnabled ||
				VirtualShadowMapArray.IsEnabled() ||
				ShouldVisualizeLightGrid());
		}
	}
	...
}
```


Getting the sorted light set:


```c++
void FDeferredShadingSceneRenderer::Render(FRHICommandListImmediate& RHICmdList) {
	...
	// Sorted light set.
	FSortedLightSetSceneInfo& SortedLightSet = *GraphBuilder.AllocObject<FSortedLightSetSceneInfo>();
	{
		RDG_CSV_STAT_EXCLUSIVE_SCOPE(GraphBuilder, SortLights);
		RDG_GPU_STAT_SCOPE(GraphBuilder, SortLights);
		ComputeLightGridOutput = GatherLightsAndComputeLightGrid(GraphBuilder, bComputeLightGrid, SortedLightSet);
	}
	...
}
```

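
PS. Simple lights can always be rendered with tiled or clustered shading, but a non-simple light qualifies only if it meets all of the following conditions:

- It uses none of the extra light features (TextureProfile, LightFunction, LightingChannel).
- It does not cast shadows.
- It is not a directional light or a rect light.

In addition, tiled rendering requires the light scene proxy's `IsTiledDeferredLightingSupported` to return true, which is the case only for point lights with a source length of 0.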
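Restated as code, the eligibility rules above look roughly like this. It is a simplified sketch: `LightDesc` and its fields are hypothetical stand-ins for what the engine reads from the light scene info and proxy, and it ignores the exception mentioned in the `FSortedLightSetSceneInfo` comment (shadowed lights entering the clustered pass with virtual shadow maps and one-pass projection).

```c++
#include <cassert>

enum class LightType { Directional, Point, Spot, Rect };

// Hypothetical summary of the properties the rules depend on.
struct LightDesc
{
    bool bSimpleLight = false;
    LightType Type = LightType::Point;
    bool bTextureProfile = false;
    bool bLightFunction = false;
    bool bUsesLightingChannels = false;
    bool bCastsShadows = false;
    float SourceLength = 0.0f;
};

// Clustered eligibility: no extra features, no shadows, not directional or rect.
bool SupportsClusteredDeferred(const LightDesc& L)
{
    if (L.bSimpleLight) return true;
    const bool bNoExtraFeatures = !L.bTextureProfile && !L.bLightFunction && !L.bUsesLightingChannels;
    const bool bSupportedType = L.Type != LightType::Directional && L.Type != LightType::Rect;
    return bNoExtraFeatures && !L.bCastsShadows && bSupportedType;
}

// Tiled additionally requires IsTiledDeferredLightingSupported(): a point light with zero source length.
bool SupportsTiledDeferred(const LightDesc& L)
{
    if (L.bSimpleLight) return true;
    return SupportsClusteredDeferred(L) && L.Type == LightType::Point && L.SourceLength == 0.0f;
}
```

Expressed this way, tiled support is a strict subset of clustered support, which matches the `bClusteredDeferredNotSupported` comment that calls the clustered range a super-set of the tiled lights.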


## GatherLightsAndComputeLightGrid

```c++
FComputeLightGridOutput FDeferredShadingSceneRenderer::GatherLightsAndComputeLightGrid(FRDGBuilder& GraphBuilder, bool bNeedLightGrid, FSortedLightSetSceneInfo& SortedLightSet)
{
	SCOPED_NAMED_EVENT(GatherLightsAndComputeLightGrid, FColor::Emerald);
	FComputeLightGridOutput Result = {};

	bool bShadowedLightsInClustered = ShouldUseClusteredDeferredShading()
		&& CVarVirtualShadowOnePassProjection.GetValueOnRenderThread()
		&& VirtualShadowMapArray.IsEnabled();

	const bool bUseLumenDirectLighting = ShouldRenderLumenDirectLighting(Scene, Views[0]);

	GatherAndSortLights(SortedLightSet, bShadowedLightsInClustered, bUseLumenDirectLighting);

	if (!bNeedLightGrid)
	{
		SetDummyForwardLightUniformBufferOnViews(GraphBuilder, ShaderPlatform, Views);
		return Result;
	}

	bool bAnyViewUsesForwardLighting = false;
	bool bAnyViewUsesLumen = false;
	for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)
	{
		const FViewInfo& View = Views[ViewIndex];
		bAnyViewUsesForwardLighting |= View.bTranslucentSurfaceLighting || ShouldRenderVolumetricFog() || View.bHasSingleLayerWaterMaterial || VolumetricCloudWantsToSampleLocalLights(Scene, ViewFamily.EngineShowFlags) || ShouldVisualizeLightGrid();
		bAnyViewUsesLumen |= GetViewPipelineState(View).DiffuseIndirectMethod == EDiffuseIndirectMethod::Lumen || GetViewPipelineState(View).ReflectionsMethod == EReflectionsMethod::Lumen;
	}

	const bool bCullLightsToGrid = GLightCullingQuality
		&& (IsForwardShadingEnabled(ShaderPlatform) || bAnyViewUsesForwardLighting || IsRayTracingEnabled() || ShouldUseClusteredDeferredShading() ||
			bAnyViewUsesLumen || ViewFamily.EngineShowFlags.VisualizeMeshDistanceFields || VirtualShadowMapArray.IsEnabled());

	// Store this flag if lights are injected in the grids, check with 'AreLightsInLightGrid()'
	bAreLightsInLightGrid = bCullLightsToGrid;

	Result = ComputeLightGrid(GraphBuilder, bCullLightsToGrid, SortedLightSet);

	return Result;
}
```


- GatherAndSortLights: gathers and sorts all lights in the scene that are visible to the current view.
- ComputeLightGrid: culls local lights and reflection captures into a 3D grid in frustum space, building each view's light lists and grid cells.


# RenderLights() -> RenderLight()

## InternalRenderLight()

## DeferredLightVertexShaders


```c++
// Input parameters.
struct FInputParams
{
	float2 PixelPos;
	float4 ScreenPosition;
	float2 ScreenUV;
	float3 ScreenVector;
};

// Derived parameters.
struct FDerivedParams
{
	float3 CameraVector;
	float3 WorldPosition;
};

// Compute the derived parameters.
FDerivedParams GetDerivedParams(in FInputParams Input, in float SceneDepth)
{
	FDerivedParams Out;
#if LIGHT_SOURCE_SHAPE > 0
	// With a perspective projection, the clip space position is NDC * Clip.w
	// With an orthographic projection, clip space is the same as NDC
	float2 ClipPosition = Input.ScreenPosition.xy / Input.ScreenPosition.w * (View.ViewToClip[3][3] < 1.0f ? SceneDepth : 1.0f);
	Out.WorldPosition = mul(float4(ClipPosition, SceneDepth, 1), View.ScreenToWorld).xyz;
	Out.CameraVector = normalize(Out.WorldPosition - View.WorldCameraOrigin);
#else
	Out.WorldPosition = Input.ScreenVector * SceneDepth + View.WorldCameraOrigin;
	Out.CameraVector = normalize(Input.ScreenVector);
#endif
	return Out;
}

Texture2D<uint> LightingChannelsTexture;

uint GetLightingChannelMask(float2 UV)
{
	uint2 IntegerUV = UV * View.BufferSizeAndInvSize.xy;
	return LightingChannelsTexture.Load(uint3(IntegerUV, 0)).x;
}

float GetExposure()
{
	return View.PreExposure;
}
```
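The `#else` branch of `GetDerivedParams` reconstructs the position as `ScreenVector * SceneDepth + WorldCameraOrigin`. Since `SceneDepth` is linear view-space depth, this only lands on the surface if `ScreenVector` is scaled so that its component along the camera's forward axis is 1; that scaling is an assumption stated here, not something the snippet shows. A plain C++ sketch with a hypothetical `Vec3` type:

```c++
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
float Length(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }
Vec3 Normalize(Vec3 v) { const float L = Length(v); return {v.x / L, v.y / L, v.z / L}; }

struct DerivedParams { Vec3 CameraVector; Vec3 WorldPosition; };

// Mirrors the non-shape branch of GetDerivedParams.
// Assumes ScreenVector's component along the camera forward axis is 1,
// so ScreenVector * SceneDepth (linear view-space depth) ends on the surface.
DerivedParams GetDerivedParams(Vec3 ScreenVector, float SceneDepth, Vec3 CameraOrigin)
{
    DerivedParams Out;
    Out.WorldPosition = ScreenVector * SceneDepth + CameraOrigin;
    Out.CameraVector = Normalize(ScreenVector); // unit view ray, used as -V in lighting
    return Out;
}
```

For a camera at (1, 2, 3) looking down +x, a ray `ScreenVector = (1, 0.5, 0)` at depth 10 reconstructs the point (11, 7, 3).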


The `SetupLightDataForStandardDeferred()` described in 向往's article has been replaced by `InitDeferredLightFromUniforms()`, located in LightDataUniform.ush.


```c++
FDeferredLightData InitDeferredLightFromUniforms(uint InLightType)
{
	const bool bIsRadial = InLightType != LIGHT_TYPE_DIRECTIONAL;

	FDeferredLightData Out;
	Out.TranslatedWorldPosition = GetDeferredLightTranslatedWorldPosition();
	Out.InvRadius = DeferredLightUniforms.InvRadius;
	Out.Color = DeferredLightUniforms.Color;
	Out.FalloffExponent = DeferredLightUniforms.FalloffExponent;
	Out.Direction = DeferredLightUniforms.Direction;
	Out.Tangent = DeferredLightUniforms.Tangent;
	Out.SpotAngles = DeferredLightUniforms.SpotAngles;
	Out.SourceRadius = DeferredLightUniforms.SourceRadius;
	Out.SourceLength = bIsRadial ? DeferredLightUniforms.SourceLength : 0;
	Out.SoftSourceRadius = DeferredLightUniforms.SoftSourceRadius;
	Out.SpecularScale = DeferredLightUniforms.SpecularScale;
	Out.ContactShadowLength = abs(DeferredLightUniforms.ContactShadowLength);
	Out.ContactShadowLengthInWS = DeferredLightUniforms.ContactShadowLength < 0.0f;
	Out.ContactShadowCastingIntensity = DeferredLightUniforms.ContactShadowCastingIntensity;
	Out.ContactShadowNonCastingIntensity = DeferredLightUniforms.ContactShadowNonCastingIntensity;
	Out.DistanceFadeMAD = DeferredLightUniforms.DistanceFadeMAD;
	Out.ShadowMapChannelMask = DeferredLightUniforms.ShadowMapChannelMask;
	Out.ShadowedBits = DeferredLightUniforms.ShadowedBits;
	Out.bInverseSquared = bIsRadial && DeferredLightUniforms.FalloffExponent == 0; // Directional lights don't use 'inverse squared attenuation'
	Out.bRadialLight = bIsRadial;
	Out.bSpotLight = InLightType == LIGHT_TYPE_SPOT;
	Out.bRectLight = InLightType == LIGHT_TYPE_RECT;

	Out.RectLightData.BarnCosAngle = DeferredLightUniforms.RectLightBarnCosAngle;
	Out.RectLightData.BarnLength = DeferredLightUniforms.RectLightBarnLength;
	Out.RectLightData.AtlasData.AtlasMaxLevel = DeferredLightUniforms.RectLightAtlasMaxLevel;
	Out.RectLightData.AtlasData.AtlasUVOffset = DeferredLightUniforms.RectLightAtlasUVOffset;
	Out.RectLightData.AtlasData.AtlasUVScale = DeferredLightUniforms.RectLightAtlasUVScale;

	Out.HairTransmittance = InitHairTransmittanceData();
	return Out;
}
```
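`bInverseSquared` is set only for radial lights whose `FalloffExponent` is 0, and it selects which distance mask `GetLocalLightAttenuation` applies. Below is a scalar C++ sketch of the two masks as I recall them from the engine's deferred lighting shaders: the inverse-squared path uses a smooth window `saturate(1 - (d²/r²)²)²` that reaches 0 at the attenuation radius, and the legacy path uses `pow(1 - saturate(d²/r²), FalloffExponent)`. Treat the exact formulas as an assumption to verify against your engine version; the spot and rect shape terms are omitted, and `Saturate`, `Square`, and `LocalLightMask` are local helper names.

```c++
#include <algorithm>
#include <cassert>
#include <cmath>

float Saturate(float x) { return std::clamp(x, 0.0f, 1.0f); }
float Square(float x) { return x * x; }

// Window used on the inverse-squared path: smoothly reaches 0 at the attenuation radius.
float InverseSquaredWindow(float DistanceSqr, float InvRadius)
{
    return Square(Saturate(1.0f - Square(DistanceSqr * Square(InvRadius))));
}

// Legacy radial falloff: (1 - saturate(|ToLight * InvRadius|^2)) ^ FalloffExponent.
float LegacyRadialAttenuation(float DistanceSqr, float InvRadius, float FalloffExponent)
{
    const float NormalizedDistanceSqr = DistanceSqr * Square(InvRadius);
    return std::pow(1.0f - Saturate(NormalizedDistanceSqr), FalloffExponent);
}

// Mirrors how bInverseSquared is derived in InitDeferredLightFromUniforms.
float LocalLightMask(bool bIsRadial, float FalloffExponent, float DistanceSqr, float InvRadius)
{
    if (!bIsRadial) return 1.0f; // directional lights: no distance falloff
    const bool bInverseSquared = FalloffExponent == 0.0f;
    return bInverseSquared ? InverseSquaredWindow(DistanceSqr, InvRadius)
                           : LegacyRadialAttenuation(DistanceSqr, InvRadius, FalloffExponent);
}
```

For a light with radius 10 (`InvRadius = 0.1`), a point 5 units away gets about 0.879 on the inverse-squared path and 0.5625 on the legacy path with exponent 2; both reach 0 at the radius.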


### DeferredLightPixelMain

```c++
void DeferredLightPixelMain(
#if LIGHT_SOURCE_SHAPE > 0
	float4 InScreenPosition : TEXCOORD0,
#else
	float2 ScreenUV : TEXCOORD0,
	float3 ScreenVector : TEXCOORD1,
#endif
	float4 SVPos : SV_POSITION,
	out float4 OutColor : SV_Target0
#if STRATA_OPAQUE_ROUGH_REFRACTION_ENABLED
	, out float3 OutOpaqueRoughRefractionSceneColor : SV_Target1
	, out float3 OutSubSurfaceSceneColor : SV_Target2
#endif
	)
{
	const float2 PixelPos = SVPos.xy;
	OutColor = 0;
#if STRATA_OPAQUE_ROUGH_REFRACTION_ENABLED
	OutOpaqueRoughRefractionSceneColor = 0;
	OutSubSurfaceSceneColor = 0;
#endif

	// Convert input data (directional/local light)
	// Compute the screen UV
	FInputParams InputParams = (FInputParams)0;
	InputParams.PixelPos = SVPos.xy;
#if LIGHT_SOURCE_SHAPE > 0
	InputParams.ScreenPosition = InScreenPosition;
	InputParams.ScreenUV = InScreenPosition.xy / InScreenPosition.w * View.ScreenPositionScaleBias.xy + View.ScreenPositionScaleBias.wz;
	InputParams.ScreenVector = 0;
#else
	InputParams.ScreenPosition = 0;
	InputParams.ScreenUV = ScreenUV;
	InputParams.ScreenVector = ScreenVector;
#endif

#if STRATA_ENABLED

	FStrataAddressing StrataAddressing = GetStrataPixelDataByteOffset(PixelPos, uint2(View.BufferSizeAndInvSize.xy), Strata.MaxBytesPerPixel);
	FStrataPixelHeader StrataPixelHeader = UnpackStrataHeaderIn(Strata.MaterialTextureArray, StrataAddressing, Strata.TopLayerTexture);

	BRANCH
	if (StrataPixelHeader.BSDFCount > 0 // This test is also enough to exclude sky pixels
#if USE_LIGHTING_CHANNELS
		// Lighting channel test
		&& (GetLightingChannelMask(InputParams.ScreenUV) & DeferredLightUniforms.LightingChannelMask)
#endif
		)
	{
		// Derive CameraVector and this pixel's world position from SceneDepth
		const float SceneDepth = CalcSceneDepth(InputParams.ScreenUV);
		const FDerivedParams DerivedParams = GetDerivedParams(InputParams, SceneDepth);

		// Fill in the light's parameters
		FDeferredLightData LightData = InitDeferredLightFromUniforms(CURRENT_LIGHT_TYPE);
		UpdateLightDataColor(LightData, InputParams, DerivedParams); // From the current world position: LightData.Color *= atmosphere & cloud & shadow attenuation * IES intensity (1 for non-IES lights)

		float3 V = -DerivedParams.CameraVector;
		float3 L = LightData.Direction; // Already normalized
		float3 ToLight = L;
		float LightMask = 1;
		if (LightData.bRadialLight)
		{
			LightMask = GetLocalLightAttenuation(DerivedParams.TranslatedWorldPosition, LightData, ToLight, L);
		}

		if (LightMask > 0)
		{
			FShadowTerms ShadowTerms = { StrataGetAO(StrataPixelHeader), 1.0, 1.0, InitHairTransmittanceData() };
			float4 LightAttenuation = GetLightAttenuationFromShadow(InputParams, SceneDepth);

			float Dither = InterleavedGradientNoise(InputParams.PixelPos, View.StateFrameIndexMod8);
			const uint FakeShadingModelID = 0;
			const float FakeContactShadowOpacity = 1.0f;
			float4 PrecomputedShadowFactors = StrataReadPrecomputedShadowFactors(StrataPixelHeader, PixelPos, SceneTexturesStruct.GBufferETexture);
			GetShadowTerms(SceneDepth, PrecomputedShadowFactors, FakeShadingModelID, FakeContactShadowOpacity,
				LightData, DerivedParams.TranslatedWorldPosition, L, LightAttenuation, Dither, ShadowTerms);

			FStrataDeferredLighting StrataLighting = StrataDeferredLighting(
				LightData,
				V,
				L,
				ToLight,
				LightMask,
				ShadowTerms,
				Strata.MaterialTextureArray,
				StrataAddressing,
				StrataPixelHeader);

			OutColor += StrataLighting.SceneColor;
#if STRATA_OPAQUE_ROUGH_REFRACTION_ENABLED
			OutOpaqueRoughRefractionSceneColor += StrataLighting.OpaqueRoughRefractionSceneColor;
			OutSubSurfaceSceneColor += StrataLighting.SubSurfaceSceneColor;
#endif
		}
	}

#else // STRATA_ENABLED
	// Fetch screen-space data (FGBufferData, AO)
	FScreenSpaceData ScreenSpaceData = GetScreenSpaceData(InputParams.ScreenUV);
	// Only light pixels marked as using deferred shading
	BRANCH if (ScreenSpaceData.GBuffer.ShadingModelID > 0
#if USE_LIGHTING_CHANNELS
		&& (GetLightingChannelMask(InputParams.ScreenUV) & DeferredLightUniforms.LightingChannelMask)
#endif
		)
	{
		// Derive CameraVector and this pixel's world position from SceneDepth
		const float SceneDepth = CalcSceneDepth(InputParams.ScreenUV);
		const FDerivedParams DerivedParams = GetDerivedParams(InputParams, SceneDepth);

		// Fill in the light's parameters
		FDeferredLightData LightData = InitDeferredLightFromUniforms(CURRENT_LIGHT_TYPE);
		UpdateLightDataColor(LightData, InputParams, DerivedParams); // From the current world position: LightData.Color *= atmosphere & cloud & shadow attenuation * IES intensity (1 for non-IES lights)

#if USE_HAIR_COMPLEX_TRANSMITTANCE
		// For the Hair shading model (which also requires CustomData.a > 0), evaluate hair scattering
		if (ScreenSpaceData.GBuffer.ShadingModelID == SHADINGMODELID_HAIR && ShouldUseHairComplexTransmittance(ScreenSpaceData.GBuffer))
		{
			LightData.HairTransmittance = EvaluateDualScattering(ScreenSpaceData.GBuffer, DerivedParams.CameraVector, -DeferredLightUniforms.Direction);
		}
#endif
		// Compute this pixel's dither value
		float Dither = InterleavedGradientNoise(InputParams.PixelPos, View.StateFrameIndexMod8);

		float SurfaceShadow = 1.0f;

		float4 LightAttenuation = GetLightAttenuationFromShadow(InputParams, SceneDepth); // Depending on whether VSM is enabled, reads the light attenuation from the VirtualShadowMap or from LightAttenuationTexture (the ShadowProjection rendered in the previous stage).
		float4 Radiance = GetDynamicLighting(DerivedParams.TranslatedWorldPosition, DerivedParams.CameraVector, ScreenSpaceData.GBuffer, ScreenSpaceData.AmbientOcclusion, ScreenSpaceData.GBuffer.ShadingModelID, LightData, LightAttenuation, Dither, uint2(InputParams.PixelPos), SurfaceShadow);

		OutColor += Radiance;
	}

#endif // STRATA_ENABLED

	// RGB:SceneColor Specular and Diffuse
	// A:Non Specular SceneColor Luminance
	// So we need PreExposure for both color and alpha
	OutColor.rgba *= GetExposure();
#if STRATA_OPAQUE_ROUGH_REFRACTION_ENABLED
	// Idem
	OutOpaqueRoughRefractionSceneColor *= GetExposure();
	OutSubSurfaceSceneColor *= GetExposure();
#endif
}
#endif
```
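Two per-pixel gates in `DeferredLightPixelMain` are easy to miss. In the non-Strata path a pixel is lit only if its shading model ID is non-zero (0 is the unlit shading model, which also covers sky pixels), and with `USE_LIGHTING_CHANNELS` only if the pixel's channel mask shares at least one bit with the light's. `GetLightingChannelMask` finds the texel by scaling the UV by the buffer size and truncating. A small CPU sketch of that logic; `LightingChannelImage` and `ShouldLightPixel` are hypothetical names.

```c++
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical CPU stand-in for LightingChannelsTexture (one 3-bit mask per pixel).
struct LightingChannelImage
{
    uint32_t Width, Height;
    std::vector<uint32_t> Masks; // row-major, Width * Height entries

    // Same addressing as GetLightingChannelMask: UV (in [0, 1)) * buffer size, truncated.
    uint32_t MaskAtUV(float U, float V) const
    {
        const uint32_t X = static_cast<uint32_t>(U * Width);
        const uint32_t Y = static_cast<uint32_t>(V * Height);
        assert(X < Width && Y < Height);
        return Masks[Y * Width + X];
    }
};

// A pixel receives this light only if it is not unlit and shares a lighting channel with it.
bool ShouldLightPixel(uint32_t ShadingModelID, uint32_t PixelChannelMask, uint32_t LightChannelMask)
{
    return ShadingModelID > 0 && (PixelChannelMask & LightChannelMask) != 0;
}
```

Rejecting pixels this early skips the depth read, shadow lookup, and BxDF evaluation entirely for pixels the light cannot affect.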


#### GetLightAttenuationFromShadow() => GetPerPixelLightAttenuation()

Original article: https://zhuanlan.zhihu.com/p/23216110797

The article discusses the shadow blurriness problem.

In `FDeferredLightPS::FParameters GetDeferredLightPSParameters()` you can see that this sampler uses Point filtering.


```c++
float4 GetPerPixelLightAttenuation(float2 UV)
{
	return DecodeLightAttenuation(Texture2DSampleLevel(LightAttenuationTexture, LightAttenuationTextureSampler, UV, 0));
}
```

Following the pattern of `GetPerPixelLightAttenuation`, you can then write a variant for toon shadows:


```c++
// Anti-alias toon shadows by filtering (downsampling) the light attenuation
float4 GetPerPixelLightAttenuationToonAA(float2 UV)
{
	// GetDimensions writes uint (or float) outputs, so use uint here
	uint texture_x, texture_y;
	LightAttenuationTexture.GetDimensions(texture_x, texture_y);

	float2 texelSize = float2(1.0 / texture_x, 1.0 / texture_y);

	float2 sampleOffsets[4] = {
		float2(-1.5, 0.5),
		float2( 0.5, 0.5),
		float2(-1.5, -1.5),
		float2( 0.5, -1.5)
	};

	float4 shadowSum = float4(0,0,0,0);
	for (int i = 0; i < 4; i++)
	{
		float2 sampleUV = UV + sampleOffsets[i] * texelSize;
		shadowSum += DecodeLightAttenuation(Texture2DSampleLevel(LightAttenuationTexture, LightAttenuationTextureSampler_Toon, sampleUV, 0));
	}
	return shadowSum * 0.25;
}

// Get the toon light attenuation
float4 GetLightAttenuationFromShadowToonAA(in FInputParams InputParams, float SceneDepth, float3 TranslatedWorldPosition)
{
	float4 LightAttenuation = float4(1, 1, 1, 1);

#if USE_VIRTUAL_SHADOW_MAP_MASK
	if (VirtualShadowMapId != INDEX_NONE)
	{
		float ShadowMask = GetVirtualShadowMapMaskForLight(ShadowMaskBits[InputParams.PixelPos], uint2(InputParams.PixelPos), SceneDepth, VirtualShadowMapId, TranslatedWorldPosition);
		return ShadowMask.xxxx;
	}
	else
#endif
	{
		return GetPerPixelLightAttenuationToonAA(InputParams.ScreenUV);
	}
}
```
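Why four taps are enough: assuming `LightAttenuationTextureSampler_Toon` is a bilinear sampler (unlike the Point sampler used by the stock path) and the attenuation texture matches the screen resolution, each `-1.5`/`+0.5` texel offset from the pixel center lands exactly on a texel corner, so one bilinear tap averages a 2×2 block and the four taps together form a 4×4 box filter. The box is centered half a texel toward -x/-y rather than on the pixel itself. The CPU sketch below checks that equivalence; `Image`, `SampleBilinear`, `ToonAA4Tap`, and `Box4x4` are hypothetical helpers.

```c++
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Image
{
    int Width, Height;
    std::vector<float> Texels; // row-major
    float At(int X, int Y) const
    {
        X = std::clamp(X, 0, Width - 1); // clamp addressing
        Y = std::clamp(Y, 0, Height - 1);
        return Texels[Y * Width + X];
    }
};

// Bilinear sample at texel-space coordinates where texel centers sit at i + 0.5.
float SampleBilinear(const Image& Img, float TexelX, float TexelY)
{
    const float X = TexelX - 0.5f, Y = TexelY - 0.5f;
    const int X0 = static_cast<int>(std::floor(X)), Y0 = static_cast<int>(std::floor(Y));
    const float Fx = X - X0, Fy = Y - Y0;
    const float Top = Img.At(X0, Y0) * (1 - Fx) + Img.At(X0 + 1, Y0) * Fx;
    const float Bottom = Img.At(X0, Y0 + 1) * (1 - Fx) + Img.At(X0 + 1, Y0 + 1) * Fx;
    return Top * (1 - Fy) + Bottom * Fy;
}

// Same offsets as GetPerPixelLightAttenuationToonAA, expressed in texels.
float ToonAA4Tap(const Image& Img, int PixelX, int PixelY)
{
    const float Offsets[4][2] = {{-1.5f, 0.5f}, {0.5f, 0.5f}, {-1.5f, -1.5f}, {0.5f, -1.5f}};
    const float CenterX = PixelX + 0.5f, CenterY = PixelY + 0.5f;
    float Sum = 0.0f;
    for (const auto& O : Offsets)
        Sum += SampleBilinear(Img, CenterX + O[0], CenterY + O[1]);
    return Sum * 0.25f;
}

// Reference: plain average of the 4x4 texel block starting at (X0, Y0).
float Box4x4(const Image& Img, int X0, int Y0)
{
    float Sum = 0.0f;
    for (int y = Y0; y < Y0 + 4; ++y)
        for (int x = X0; x < X0 + 4; ++x)
            Sum += Img.At(x, y);
    return Sum / 16.0f;
}
```

For pixel (4, 4) the four taps cover texels 2..5 in both axes, so `ToonAA4Tap(img, 4, 4)` equals `Box4x4(img, 2, 2)`. With a Point sampler each tap would read a single texel instead, which is why the Toon variant needs its own filtering sampler.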


### GetDynamicLighting() => GetDynamicLightingSplit()

```c++
FDeferredLightingSplit GetDynamicLightingSplit(
	float3 TranslatedWorldPosition, float3 CameraVector, FGBufferData GBuffer, float AmbientOcclusion, uint ShadingModelID,
	FDeferredLightData LightData, float4 LightAttenuation, float Dither, uint2 SVPos,
	inout float SurfaceShadow)
{
	FLightAccumulator LightAccumulator = AccumulateDynamicLighting(TranslatedWorldPosition, CameraVector, GBuffer, AmbientOcclusion, ShadingModelID, LightData, LightAttenuation, Dither, SVPos, SurfaceShadow);
	return LightAccumulator_GetResultSplit(LightAccumulator);
}
```


`LightAccumulator_GetResultSplit()`: for subsurface, it additionally sets `RetDiffuse.a = In.ScatterableLightLuma;` or `RetDiffuse.a = Luminance(In.ScatterableLight);`.

```c++
FDeferredLightingSplit LightAccumulator_GetResultSplit(FLightAccumulator In)
{
	float4 RetDiffuse;
	float4 RetSpecular;

	if (VISUALIZE_LIGHT_CULLING == 1)
	{
		// a soft gradient from dark red to bright white, can be changed to be different
		RetDiffuse = 0.1f * float4(1.0f, 0.25f, 0.075f, 0) * In.EstimatedCost;
		RetSpecular = 0.1f * float4(1.0f, 0.25f, 0.075f, 0) * In.EstimatedCost;
	}
	else
	{
		RetDiffuse = float4(In.TotalLightDiffuse, 0);
		RetSpecular = float4(In.TotalLightSpecular, 0);

		// For subsurface, RetDiffuse's alpha additionally stores the luminance of ScatterableLight
		if (SUBSURFACE_CHANNEL_MODE == 1 )
		{
			if (View.bCheckerboardSubsurfaceProfileRendering == 0)
			{
				// RGB accumulated RGB HDR color, A: specular luminance for screenspace subsurface scattering
				RetDiffuse.a = In.ScatterableLightLuma;
			}
		}
		else if (SUBSURFACE_CHANNEL_MODE == 2)
		{
			// RGB accumulated RGB HDR color, A: view independent (diffuse) luminance for screenspace subsurface scattering
			// 3 add, 1 mul, 2 mad, can be optimized to use 2 less temporary during accumulation and remove the 3 add
			RetDiffuse.a = Luminance(In.ScatterableLight);
			// todo, need second MRT for SUBSURFACE_CHANNEL_MODE==2
		}
	}

	FDeferredLightingSplit Ret;
	Ret.DiffuseLighting = RetDiffuse;
	Ret.SpecularLighting = RetSpecular;

	return Ret;
}
```
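In the non-visualization path, `LightAccumulator_GetResultSplit` packs RGB lighting plus an alpha channel that feeds screen-space subsurface scattering, and which value lands in that alpha depends on `SUBSURFACE_CHANNEL_MODE`. A plain C++ sketch of that packing, assuming UE's `Luminance()` weights of (0.3, 0.59, 0.11) (quoted from memory; verify in your engine's shader headers). `Accumulator`, `LightingSplit`, and `GetResultSplit` are hypothetical stand-ins for the HLSL types.

```c++
#include <cassert>
#include <cmath>

struct Float3 { float r, g, b; };
struct Float4 { float r, g, b, a; };

// Weights assumed to match UE's Luminance() helper.
float Luminance(Float3 C) { return C.r * 0.3f + C.g * 0.59f + C.b * 0.11f; }

// Hypothetical subset of FLightAccumulator.
struct Accumulator
{
    Float3 TotalLightDiffuse;
    Float3 TotalLightSpecular;
    Float3 ScatterableLight;
    float ScatterableLightLuma;
};

struct LightingSplit { Float4 Diffuse; Float4 Specular; };

// Mirrors the non-visualization branch of LightAccumulator_GetResultSplit.
LightingSplit GetResultSplit(const Accumulator& In, int SubsurfaceChannelMode, bool bCheckerboardSubsurface)
{
    LightingSplit Ret;
    Ret.Diffuse = {In.TotalLightDiffuse.r, In.TotalLightDiffuse.g, In.TotalLightDiffuse.b, 0.0f};
    Ret.Specular = {In.TotalLightSpecular.r, In.TotalLightSpecular.g, In.TotalLightSpecular.b, 0.0f};
    if (SubsurfaceChannelMode == 1)
    {
        if (!bCheckerboardSubsurface)
            Ret.Diffuse.a = In.ScatterableLightLuma; // luma accumulated earlier
    }
    else if (SubsurfaceChannelMode == 2)
    {
        Ret.Diffuse.a = Luminance(In.ScatterableLight); // view-independent (diffuse) luminance
    }
    return Ret;
}
```

Storing a single luminance value in alpha lets the screen-space SSS pass read the scatterable energy from the same render target instead of needing an extra MRT, which is what the `todo` comment about a second MRT alludes to.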

|

||||

#### AccumulateDynamicLighting
```c++
FLightAccumulator AccumulateDynamicLighting(
    float3 TranslatedWorldPosition, half3 CameraVector, FGBufferData GBuffer, half AmbientOcclusion, uint ShadingModelID,
    FDeferredLightData LightData, half4 LightAttenuation, float Dither, uint2 SVPos,
    inout float SurfaceShadow)
{
    FLightAccumulator LightAccumulator = (FLightAccumulator)0;

    half3 V = -CameraVector;
    half3 N = GBuffer.WorldNormal;
    // ClearCoat shading model with CLEAR_COAT_BOTTOM_NORMAL enabled: rebuild the bottom-layer normal
    // from the octahedral offset stored in CustomData.a / CustomData.z
    BRANCH if( GBuffer.ShadingModelID == SHADINGMODELID_CLEAR_COAT && CLEAR_COAT_BOTTOM_NORMAL)
    {
        const float2 oct1 = ((float2(GBuffer.CustomData.a, GBuffer.CustomData.z) * 4) - (512.0/255.0)) + UnitVectorToOctahedron(GBuffer.WorldNormal);
        N = OctahedronToUnitVector(oct1);
    }

    float3 L = LightData.Direction; // Already normalized
    float3 ToLight = L;
    float3 MaskedLightColor = LightData.Color; // light color
    float LightMask = 1;
    // Attenuation of radial (local) lights. Depending on LightData.bInverseSquared, either the newer
    // inverse-squared falloff or the legacy falloff is used. For SpotLights and RectLights the result
    // is additionally multiplied by the corresponding shape attenuation.
    if (LightData.bRadialLight)
    {
        LightMask = GetLocalLightAttenuation( TranslatedWorldPosition, LightData, ToLight, L );
        MaskedLightColor *= LightMask;
    }

    LightAccumulator.EstimatedCost += 0.3f; // running the PixelShader at all has a cost

    BRANCH
    if( LightMask > 0 ) // evaluate shadowing only if the light is not completely masked out
    {
        FShadowTerms Shadow;
        Shadow.SurfaceShadow = AmbientOcclusion; // AO from the GBuffer
        Shadow.TransmissionShadow = 1;
        Shadow.TransmissionThickness = 1;
        Shadow.HairTransmittance.OpaqueVisibility = 1;
        const float ContactShadowOpacity = GBuffer.CustomData.a; // TODO: fix the corresponding logic for ToonStandard
        GetShadowTerms(GBuffer.Depth, GBuffer.PrecomputedShadowFactors, GBuffer.ShadingModelID, ContactShadowOpacity,
            LightData, TranslatedWorldPosition, L, LightAttenuation, Dither, Shadow);
        SurfaceShadow = Shadow.SurfaceShadow;

        LightAccumulator.EstimatedCost += 0.3f; // add the cost of getting the shadow terms

    #if SHADING_PATH_MOBILE
        const bool bNeedsSeparateSubsurfaceLightAccumulation = UseSubsurfaceProfile(GBuffer.ShadingModelID);

        FDirectLighting Lighting = (FDirectLighting)0;

        half NoL = max(0, dot(GBuffer.WorldNormal, L));
    #if TRANSLUCENCY_NON_DIRECTIONAL
        NoL = 1.0f;
    #endif
        Lighting = EvaluateBxDF(GBuffer, N, V, L, NoL, Shadow);

        Lighting.Specular *= LightData.SpecularScale;

        LightAccumulator_AddSplit( LightAccumulator, Lighting.Diffuse, Lighting.Specular, Lighting.Diffuse, MaskedLightColor * Shadow.SurfaceShadow, bNeedsSeparateSubsurfaceLightAccumulation );
        LightAccumulator_AddSplit( LightAccumulator, Lighting.Transmission, 0.0f, Lighting.Transmission, MaskedLightColor * Shadow.TransmissionShadow, bNeedsSeparateSubsurfaceLightAccumulation );
    #else // SHADING_PATH_MOBILE
        BRANCH
        if( Shadow.SurfaceShadow + Shadow.TransmissionShadow > 0 )
        {
            const bool bNeedsSeparateSubsurfaceLightAccumulation = UseSubsurfaceProfile(GBuffer.ShadingModelID); // whether a separate Subsurface Profile accumulation is needed
        #if NON_DIRECTIONAL_DIRECT_LIGHTING // non-directional direct lighting: diffuse only
            float Lighting;
            if( LightData.bRectLight ) // rect (area) light
            {
                FRect Rect = GetRect( ToLight, LightData );
                Lighting = IntegrateLight( Rect );
            }
            else // point/spot lights, modeled as capsules
            {
                FCapsuleLight Capsule = GetCapsule( ToLight, LightData );
                Lighting = IntegrateLight( Capsule, LightData.bInverseSquared );
            }

            float3 LightingDiffuse = Diffuse_Lambert( GBuffer.DiffuseColor ) * Lighting; // Lambert diffuse * integrated lighting
            LightAccumulator_AddSplit(LightAccumulator, LightingDiffuse, 0.0f, 0, MaskedLightColor * Shadow.SurfaceShadow, bNeedsSeparateSubsurfaceLightAccumulation);
        #else
            FDirectLighting Lighting;
            if (LightData.bRectLight) // rect (area) light
            {
                FRect Rect = GetRect( ToLight, LightData );
                const FRectTexture SourceTexture = ConvertToRectTexture(LightData);

            #if REFERENCE_QUALITY
                Lighting = IntegrateBxDF( GBuffer, N, V, Rect, Shadow, SourceTexture, SVPos );
            #else
                Lighting = IntegrateBxDF( GBuffer, N, V, Rect, Shadow, SourceTexture);
            #endif
            }
            else // point/spot lights, modeled as capsules
            {
                FCapsuleLight Capsule = GetCapsule( ToLight, LightData );

            #if REFERENCE_QUALITY
                Lighting = IntegrateBxDF( GBuffer, N, V, Capsule, Shadow, SVPos );
            #else
                Lighting = IntegrateBxDF( GBuffer, N, V, Capsule, Shadow, LightData.bInverseSquared );
            #endif
            }

            Lighting.Specular *= LightData.SpecularScale;

            // Accumulate diffuse + specular. The diffuse term is also accumulated as scatterable light; depending on the
            // subsurface channel mode it ends up in FLightAccumulator.ScatterableLightLuma or FLightAccumulator.ScatterableLight.
            LightAccumulator_AddSplit( LightAccumulator, Lighting.Diffuse, Lighting.Specular, Lighting.Diffuse, MaskedLightColor * Shadow.SurfaceShadow, bNeedsSeparateSubsurfaceLightAccumulation );
            // Transmission term
            LightAccumulator_AddSplit( LightAccumulator, Lighting.Transmission, 0.0f, Lighting.Transmission, MaskedLightColor * Shadow.TransmissionShadow, bNeedsSeparateSubsurfaceLightAccumulation );

            LightAccumulator.EstimatedCost += 0.4f; // add the cost of the lighting computations (should sum up to 1 for one light)
        #endif
        }
    #endif // SHADING_PATH_MOBILE
    }
    return LightAccumulator;
}
```

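The clear-coat branch above rebuilds the bottom-layer normal by adding a stored offset to the octahedral coordinates of `GBuffer.WorldNormal`. The CPU-side C++ sketch below reproduces that round trip so the math can be checked. It assumes the common octahedral mapping that `UnitVectorToOctahedron`/`OctahedronToUnitVector` are usually written with. It also assumes the offset was written as `(oct2 - oct1) * 0.25 + 128/255`, which is the inverse of the `* 4 - 512/255` decode. `Float2`, `Float3`, `EncodeBottomNormalDelta` and `DecodeBottomNormal` are hypothetical helpers.

```cpp
#include <cassert>
#include <cmath>

struct Float2 { float x, y; };
struct Float3 { float x, y, z; };

static float SignNotZero(float v) { return v >= 0.0f ? 1.0f : -1.0f; }

// Unit vector -> octahedral coordinates in [-1, 1]^2.
Float2 UnitVectorToOctahedron(Float3 n)
{
    const float l1 = std::fabs(n.x) + std::fabs(n.y) + std::fabs(n.z);
    assert(l1 > 0.0f);
    Float2 o{n.x / l1, n.y / l1};
    if (n.z <= 0.0f)
    {
        // Fold the lower hemisphere over the diagonals.
        const float ox = (1.0f - std::fabs(o.y)) * SignNotZero(o.x);
        const float oy = (1.0f - std::fabs(o.x)) * SignNotZero(o.y);
        o = {ox, oy};
    }
    return o;
}

// Octahedral coordinates -> unit vector.
Float3 OctahedronToUnitVector(Float2 o)
{
    Float3 n{o.x, o.y, 1.0f - std::fabs(o.x) - std::fabs(o.y)};
    const float t = std::fmax(-n.z, 0.0f);
    n.x += n.x >= 0.0f ? -t : t;
    n.y += n.y >= 0.0f ? -t : t;
    const float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    return {n.x / len, n.y / len, n.z / len};
}

// Hypothetical encode side: octahedral delta remapped around 128/255.
Float2 EncodeBottomNormalDelta(Float3 top, Float3 bottom)
{
    const Float2 o1 = UnitVectorToOctahedron(top);
    const Float2 o2 = UnitVectorToOctahedron(bottom);
    return {(o2.x - o1.x) * 0.25f + 128.0f / 255.0f,
            (o2.y - o1.y) * 0.25f + 128.0f / 255.0f};
}

// Decode as in the shader: oct1 = (stored * 4 - 512/255) + Oct(top normal).
// stored.x plays the role of CustomData.a, stored.y of CustomData.z.
Float3 DecodeBottomNormal(Float3 top, Float2 stored)
{
    const Float2 o1 = UnitVectorToOctahedron(top);
    const Float2 oct{stored.x * 4.0f - 512.0f / 255.0f + o1.x,
                     stored.y * 4.0f - 512.0f / 255.0f + o1.y};
    return OctahedronToUnitVector(oct);
}
```

The sketch ignores the 8-bit quantization of the GBuffer channels, which adds error in the real pipeline.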

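New light falloff formula (inverse-squared), computed in `GetLocalLightAttenuation()`:

$$Falloff = \frac{\operatorname{saturate}\left(1-(distance/lightRadius)^4\right)^2}{distance^2 + 1}$$

Legacy light falloff formula, implemented by `RadialAttenuation()` in DynamicLightingCommon.ush, where $WorldLightVector = ToLight / lightRadius$:

$$Falloff = \left(1 - \operatorname{saturate}\left(\lVert WorldLightVector \rVert^2\right)\right)^{FalloffExponent}$$
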
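The two falloff formulas above can be written as plain C++ and compared numerically. This is a sketch that follows the formulas as written in this note rather than the exact shader code, which works with squared distances, for example. It assumes distance and radius use the same units. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// saturate() as in HLSL: clamp to [0, 1].
static float Saturate(float x) { return std::min(std::max(x, 0.0f), 1.0f); }

// Inverse-squared falloff with a smooth window that forces 0 at LightRadius.
// The +1 in the denominator avoids a division by zero at the light's position.
float InverseSquaredFalloff(float Distance, float LightRadius)
{
    assert(LightRadius > 0.0f);
    const float Ratio = Distance / LightRadius;
    const float Window = Saturate(1.0f - std::pow(Ratio, 4.0f));
    return Window * Window / (Distance * Distance + 1.0f);
}

// Legacy falloff: WorldLightVector = ToLight / LightRadius, so its squared length is (d / r)^2.
float LegacyRadialFalloff(float Distance, float LightRadius, float FalloffExponent)
{
    assert(LightRadius > 0.0f);
    const float Ratio = Distance / LightRadius;
    return std::pow(1.0f - Saturate(Ratio * Ratio), FalloffExponent);
}
```

Both curves reach exactly 0 at the light radius. The inverse-squared version follows the physical 1/d² falloff inside the window, while the legacy curve's shape is controlled only by `FalloffExponent`.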

##### GetShadowTerms()
```c++
void GetShadowTerms(float SceneDepth, half4 PrecomputedShadowFactors, uint ShadingModelID, float ContactShadowOpacity, FDeferredLightData LightData, float3 TranslatedWorldPosition, half3 L, half4 LightAttenuation, float Dither, inout FShadowTerms Shadow)
{
    float ContactShadowLength = 0.0f;
    const float ContactShadowLengthScreenScale = GetTanHalfFieldOfView().y * SceneDepth;

    BRANCH
    if (LightData.ShadowedBits)
    {
        // Remapping the light attenuation buffer (see ShadowRendering.cpp)

        // LightAttenuation: Light function + per-object shadows in z, per-object SSS shadowing in w,
        // Whole scene directional light shadows in x, whole scene directional light SSS shadows in y
        // Get static shadowing from the appropriate GBuffer channel
    #if ALLOW_STATIC_LIGHTING
        half UsesStaticShadowMap = dot(LightData.ShadowMapChannelMask, half4(1, 1, 1, 1));
        half StaticShadowing = lerp(1, dot(PrecomputedShadowFactors, LightData.ShadowMapChannelMask), UsesStaticShadowMap);
    #else
        half StaticShadowing = 1.0f;
    #endif

        // Radial lights and the mobile path use this branch. bRadialLight is typically set for PointLight/SpotLight.
        // Radial attenuation: light intensity falls off with distance from the light (usually following the inverse-square law).
        if (LightData.bRadialLight || SHADING_PATH_MOBILE)
        {
            // Remapping the light attenuation buffer (see ShadowRendering.cpp)

            // For radial lights AO does not affect the surface shadow: it is set to the light function + per-object ShadowProjection (z)
            Shadow.SurfaceShadow = LightAttenuation.z * StaticShadowing;
            // SSS uses a separate shadowing term that allows light to penetrate the surface
            //@todo - how to do static shadowing of SSS correctly?
            Shadow.TransmissionShadow = LightAttenuation.w * StaticShadowing; // per-object SSS shadowing

            Shadow.TransmissionThickness = LightAttenuation.w; // per-object SSS shadowing
        }
        else
        {
            // Remapping the light attenuation buffer (see ShadowRendering.cpp)
            // Also fix up the fade between dynamic and static shadows
            // to work with plane splits rather than spheres.

            float DynamicShadowFraction = DistanceFromCameraFade(SceneDepth, LightData);
            // For a directional light, fade between static shadowing and the whole scene dynamic shadowing based on distance + per object shadows
            // (lerp the ShadowProjection result and static shadowing by the dynamic shadow fade; x: whole-scene directional light shadow)
            Shadow.SurfaceShadow = lerp(LightAttenuation.x, StaticShadowing, DynamicShadowFraction);
            // Fade between SSS dynamic shadowing and static shadowing based on distance
            Shadow.TransmissionShadow = min(lerp(LightAttenuation.y, StaticShadowing, DynamicShadowFraction), LightAttenuation.w); // w: per-object SSS shadowing

            Shadow.SurfaceShadow *= LightAttenuation.z; // Light function + per-object shadows in z
            Shadow.TransmissionShadow *= LightAttenuation.z;

            // Need this min or backscattering will leak when in shadow which cast by non perobject shadow(Only for directional light)
            Shadow.TransmissionThickness = min(LightAttenuation.y, LightAttenuation.w);
        }

        FLATTEN
        if (LightData.ShadowedBits > 1 && LightData.ContactShadowLength > 0)
        {
            ContactShadowLength = LightData.ContactShadowLength * (LightData.ContactShadowLengthInWS ? 1.0f : ContactShadowLengthScreenScale);
        }
    }

#if SUPPORT_CONTACT_SHADOWS // contact shadow logic

    #if STRATA_ENABLED == 0
    if (LightData.ShadowedBits < 2 && (ShadingModelID == SHADINGMODELID_HAIR))
    {
        ContactShadowLength = 0.2 * ContactShadowLengthScreenScale;
    }
    // World space distance to cover eyelids and eyelashes but not beyond
    if (ShadingModelID == SHADINGMODELID_EYE)
    {
        ContactShadowLength = 0.5;
    }
    #endif

    #if MATERIAL_CONTACT_SHADOWS
    ContactShadowLength = 0.2 * ContactShadowLengthScreenScale;
    #endif

    BRANCH
    if (ContactShadowLength > 0.0)
    {
        float StepOffset = Dither - 0.5;
        bool bHitCastContactShadow = false;
        bool bHairNoShadowLight = ShadingModelID == SHADINGMODELID_HAIR && !LightData.ShadowedBits;
        // Ray-march toward the light to find whether a contact-shadow caster was hit, and the hit distance
        float HitDistance = ShadowRayCast( TranslatedWorldPosition, L, ContactShadowLength, 8, StepOffset, bHairNoShadowLight, bHitCastContactShadow );

        if ( HitDistance > 0.0 )
        {
            float ContactShadowOcclusion = bHitCastContactShadow ? LightData.ContactShadowCastingIntensity : LightData.ContactShadowNonCastingIntensity;

        #if STRATA_ENABLED == 0
            // Exponential attenuation is not applied on hair/eye/SSS-profile here, as the hit distance (shading-point to blocker) is different from the estimated
            // thickness (closest-point-from-light to shading-point), and this creates light leaks. Instead we consider first hit as a blocker (old behavior)
            BRANCH
            if (ContactShadowOcclusion > 0.0 &&
                IsSubsurfaceModel(ShadingModelID) &&
                ShadingModelID != SHADINGMODELID_HAIR &&
                ShadingModelID != SHADINGMODELID_EYE &&
                ShadingModelID != SHADINGMODELID_SUBSURFACE_PROFILE)
            {
                // Reduce the intensity of the shadow similar to the subsurface approximation used by the shadow maps path
                // Note that this is imperfect as we don't really have the "nearest occluder to the light", but this should at least
                // ensure that we don't darken-out the subsurface term with the contact shadows
                float Density = SubsurfaceDensityFromOpacity(ContactShadowOpacity);
                ContactShadowOcclusion *= 1.0 - saturate( exp( -Density * HitDistance ) );
            }
        #endif

            float ContactShadow = 1.0 - ContactShadowOcclusion;
            // The occlusion depends on whether the hit primitive casts contact shadows;
            // the resulting factor multiplies both SurfaceShadow and TransmissionShadow.
            Shadow.SurfaceShadow *= ContactShadow;
            Shadow.TransmissionShadow *= ContactShadow;
        }
    }
#endif

    Shadow.HairTransmittance = LightData.HairTransmittance;
    Shadow.HairTransmittance.OpaqueVisibility = Shadow.SurfaceShadow;
}
```

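The arithmetic in `GetShadowTerms()` can be mirrored on the CPU to see how the `LightAttenuation` channels combine. This sketch assumes the channel layout described in the shader comments: x/y are the whole-scene directional shadow and SSS shadow, z is the light function plus per-object shadows, and w is the per-object SSS shadow. `StaticShadowing`, `DynamicShadowFraction` and the subsurface `Density` are passed in rather than computed. All type and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Float4 { float x, y, z, w; };

struct ShadowTermsSketch
{
    float SurfaceShadow;
    float TransmissionShadow;
    float TransmissionThickness;
};

static float Lerp(float A, float B, float T) { return A + (B - A) * T; }

// Radial (local) light path: per-object shadows only, scaled by static shadowing.
ShadowTermsSketch RemapRadial(Float4 Atten, float StaticShadowing)
{
    return {Atten.z * StaticShadowing, Atten.w * StaticShadowing, Atten.w};
}

// Directional light path: fade from whole-scene dynamic shadows to static shadowing
// by DynamicShadowFraction, then apply light function + per-object shadows (z).
ShadowTermsSketch RemapDirectional(Float4 Atten, float StaticShadowing, float DynamicShadowFraction)
{
    assert(DynamicShadowFraction >= 0.0f && DynamicShadowFraction <= 1.0f);
    ShadowTermsSketch S;
    S.SurfaceShadow = Lerp(Atten.x, StaticShadowing, DynamicShadowFraction) * Atten.z;
    S.TransmissionShadow = std::min(Lerp(Atten.y, StaticShadowing, DynamicShadowFraction), Atten.w) * Atten.z;
    S.TransmissionThickness = std::min(Atten.y, Atten.w);
    return S;
}

// Contact shadow factor. bSoftenForSubsurface stands in for the shader's
// "IsSubsurfaceModel && not hair/eye/Subsurface Profile" test (Strata disabled).
float ContactShadowFactor(float Occlusion, bool bSoftenForSubsurface, float Density, float HitDistance)
{
    if (Occlusion > 0.0f && bSoftenForSubsurface)
    {
        const float Transmitted = std::min(std::max(std::exp(-Density * HitDistance), 0.0f), 1.0f);
        Occlusion *= 1.0f - Transmitted;
    }
    return 1.0f - Occlusion;
}
```

Near the camera (`DynamicShadowFraction = 0`) the directional light uses the dynamic shadow in x. Far away (`1`) it falls back to the static shadowing.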

@@ -0,0 +1,44 @@
---
title: RenderLights
date: 2023-04-09 10:23:21
excerpt:
tags:
rating: ⭐
---
# Key Functions
Get the projection info of all ShadowMaps to be projected for a light:
```c++
const FVisibleLightInfo& VisibleLightInfo = VisibleLightInfos[LightSceneInfo->Id];
const TArray<FProjectedShadowInfo*, SceneRenderingAllocator>& ShadowMaps = VisibleLightInfo.ShadowsToProject;
for (int32 ShadowIndex = 0; ShadowIndex < ShadowMaps.Num(); ShadowIndex++)
{
    const FProjectedShadowInfo* ProjectedShadowInfo = ShadowMaps[ShadowIndex];
}
```

# Translucency Volume Rendering
## InjectSimpleTranslucencyLightingVolumeArray
Injects simple lights into the translucency lighting volume. Presumably the volumetric effect is then rendered from this 3D texture. It does not run by default.
- InjectSimpleLightsTranslucentLighting
- InjectSimpleTranslucentLightArray

## InjectTranslucencyLightingVolume
Renders after the light proxy information used for the translucency volume has been collected; mainly used for cloud rendering.
- InjectTranslucencyLightingVolume

# Direct Lighting
## RenderVirtualShadowMapProjectionMaskBits
- VirtualShadowMapProjectionMaskBits
- VirtualShadowMapProjection(RayCount:%u(%s),SamplesPerRay:%u,Input:%s%s)

Outputs to UAVs named `Shadow.Virtual.MaskBits` and `Shadow.Virtual.MaskBits(HairStrands)`.

## AddClusteredDeferredShadingPass

## RenderSimpleLightsStandardDeferred

## RenderLight
For each light, renders the ShadowMask in ShadowProjectionOnOpaque:
- VirtualShadowMapProjection
- CompositeVirtualShadowMapMask

1334

03-UnrealEngine/Rendering/RenderingPipeline/Lighting/Shadow.md

Normal file

File diff suppressed because it is too large

@@ -0,0 +1,413 @@
---
title: Untitled
date: 2024-09-25 14:59:32
excerpt:
tags:
rating: ⭐
---
# Preface
DrawDynamicMeshPass() can be used to draw a pass with MeshDraw from inside a plugin.

References:
- ***UE5: Adding Custom Depth and Stencil to HairStrands***: https://zhuanlan.zhihu.com/p/689578355

# MeshDraw
Recommended for study:
- CustomDepth
- RenderBasePassInternal()
- RenderAnisotropyPass()

Recommended shaders:
- DepthOnlyVertexShader.usf
- DepthOnlyPixelShader.usf

## BasePass
### DrawBasePass()
Called from FDeferredShadingSceneRenderer::RenderBasePassInternal().

DrawNaniteMaterialPass() => SubmitNaniteIndirectMaterial()

## PSO
- RDG 04 Graphics Pipeline State Initializer: https://zhuanlan.zhihu.com/p/582020846

- FGraphicsPipelineStateInitializer
    - FRHIDepthStencilState* DepthStencilState
    - FRHIBlendState* BlendState
    - FRHIRasterizerState* RasterizerState
    - EPrimitiveType PrimitiveType
    - FBoundShaderStateInput BoundShaderState.VertexDeclarationRHI
    - FBoundShaderStateInput BoundShaderState.VertexShaderRHI
    - FBoundShaderStateInput BoundShaderState.PixelShaderRHI
    - …

Apply it with `SetGraphicsPipelineState(RHICmdList, GraphicsPSOInit, 0);`

## FMeshPassProcessorRenderState
- FMeshPassProcessorRenderState
    - FRHIBlendState* BlendState
    - FRHIDepthStencilState* DepthStencilState
    - FExclusiveDepthStencil::Type DepthStencilAccess
    - FRHIUniformBuffer* ViewUniformBuffer
    - FRHIUniformBuffer* InstancedViewUniformBuffer
    - FRHIUniformBuffer* PassUniformBuffer
    - FRHIUniformBuffer* NaniteUniformBuffer
    - uint32 StencilRef = 0;

### FRHIBlendState
Initialized with ***FBlendStateInitializerRHI()***.
It holds blend settings for 8 render targets. Usually only the first one is used, and its seven parameters are:
- Color
    - Color Write Mask
    - Color Blend: blend operation
    - Color Src: blend factor
    - Color Dest: blend factor
- Alpha
    - Alpha Blend: blend operation
    - Alpha Src: blend factor
    - Alpha Dest: blend factor

```c++
// RGB = Src * SrcAlpha + Dst * (1 - SrcAlpha); alpha = Src * 0 + Dst * 1, and alpha is not written anyway (CW_RGB)
FRHIBlendState* CopyBlendState = TStaticBlendState<CW_RGB, BO_Add, BF_SourceAlpha, BF_InverseSourceAlpha, BO_Add, BF_Zero, BF_One>::GetRHI();
```

Color write mask:
```c++
enum EColorWriteMask
{
    CW_RED   = 0x01,
    CW_GREEN = 0x02,
    CW_BLUE  = 0x04,
    CW_ALPHA = 0x08,

    CW_NONE  = 0,
    CW_RGB   = CW_RED | CW_GREEN | CW_BLUE,
    CW_RGBA  = CW_RED | CW_GREEN | CW_BLUE | CW_ALPHA,
    CW_RG    = CW_RED | CW_GREEN,
    CW_BA    = CW_BLUE | CW_ALPHA,

    EColorWriteMask_NumBits = 4,
};
```

#### Blend Operations
The blend operators work the same way in the color and alpha blend equations, so only the color equation is shown here. $\otimes$ denotes component-wise multiplication.

| BlendOperation | Color blend equation |
| --- | --- |
| BO_Add | $C = C_{src} \otimes F_{src} + C_{dst} \otimes F_{dst}$ |
| BO_Subtract | $C = C_{src} \otimes F_{src} - C_{dst} \otimes F_{dst}$ |
| BO_ReverseSubtract | $C = C_{dst} \otimes F_{dst} - C_{src} \otimes F_{src}$ |
| BO_Min | $C = \min(C_{src}, C_{dst})$ |
| BO_Max | $C = \max(C_{src}, C_{dst})$ |

BO_Min and BO_Max ignore the blend factors.

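The blend-operation table can be expressed per channel as below. This is a hypothetical CPU mirror: the `BlendOp` enum is illustrative and is not UE's own enum. It also ignores the clamping and format conversion that hardware applies for fixed-point render targets.

```cpp
#include <algorithm>
#include <cassert>

// Illustrative mirror of BO_Add, BO_Subtract, BO_ReverseSubtract, BO_Min, BO_Max.
enum class BlendOp { Add, Subtract, ReverseSubtract, Min, Max };

// One channel: Src/Dst are the colors, FSrc/FDst the already-evaluated blend factors.
float ApplyBlendOp(BlendOp Op, float Src, float FSrc, float Dst, float FDst)
{
    switch (Op)
    {
    case BlendOp::Add:             return Src * FSrc + Dst * FDst;
    case BlendOp::Subtract:        return Src * FSrc - Dst * FDst;
    case BlendOp::ReverseSubtract: return Dst * FDst - Src * FSrc;
    case BlendOp::Min:             return std::min(Src, Dst); // factors ignored
    case BlendOp::Max:             return std::max(Src, Dst); // factors ignored
    }
    assert(false && "unknown blend op");
    return 0.0f;
}
```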

#### Blend Factors

| BlendFactor | Color blend factor | Alpha blend factor |
| --- | --- | --- |
| BF_Zero | $F=(0,0,0)$ | $F=0$ |
| BF_One | $F=(1,1,1)$ | $F=1$ |
| BF_SourceColor | $F=(r_{src},g_{src},b_{src})$ | – |
| BF_InverseSourceColor | $F=(1-r_{src},1-g_{src},1-b_{src})$ | – |
| BF_SourceAlpha | $F=(a_{src},a_{src},a_{src})$ | $F=a_{src}$ |
| BF_InverseSourceAlpha | $F=(1-a_{src},1-a_{src},1-a_{src})$ | $F=1-a_{src}$ |
| BF_DestAlpha | $F=(a_{dst},a_{dst},a_{dst})$ | $F=a_{dst}$ |
| BF_InverseDestAlpha | $F=(1-a_{dst},1-a_{dst},1-a_{dst})$ | $F=1-a_{dst}$ |
| BF_DestColor | $F=(r_{dst},g_{dst},b_{dst})$ | – |
| BF_InverseDestColor | $F=(1-r_{dst},1-g_{dst},1-b_{dst})$ | – |
| BF_ConstantBlendFactor | $F=(r,g,b)$ | $F=a$ |
| BF_InverseConstantBlendFactor | $F=(1-r,1-g,1-b)$ | $F=1-a$ |
| BF_Source1Color | $F=(r_{src1},g_{src1},b_{src1})$ | – |
| BF_InverseSource1Color | $F=(1-r_{src1},1-g_{src1},1-b_{src1})$ | – |
| BF_Source1Alpha | $F=(a_{src1},a_{src1},a_{src1})$ | $F=a_{src1}$ |
| BF_InverseSource1Alpha | $F=(1-a_{src1},1-a_{src1},1-a_{src1})$ | $F=1-a_{src1}$ |

For the constant blend factors, $(r,g,b,a)$ is the constant blend color set on the pipeline. The last four options are for dual-source blending (the D3D11 equivalents are `D3D11_BLEND_SRC1_COLOR`, `D3D11_BLEND_INV_SRC1_COLOR`, `D3D11_BLEND_SRC1_ALPHA` and `D3D11_BLEND_INV_SRC1_ALPHA`), where $src1$ is the pixel shader's second color output. The other factors should be enough for general use.

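Combining factors, operation and write mask, this hypothetical sketch evaluates what `CopyBlendState` (`TStaticBlendState<CW_RGB, BO_Add, BF_SourceAlpha, BF_InverseSourceAlpha, BO_Add, BF_Zero, BF_One>`) does to one pixel. It only models `BO_Add` and the four source-based factors this state needs. `RGBA`, `Factor` and `BlendStateSketch` are illustrative types, not RHI types.

```cpp
#include <cassert>
#include <cstdint>

struct RGBA { float r, g, b, a; };

enum class Factor { Zero, One, SourceAlpha, InverseSourceAlpha };

// Same bit values as EColorWriteMask.
constexpr std::uint8_t CW_RED = 0x01, CW_GREEN = 0x02, CW_BLUE = 0x04, CW_ALPHA = 0x08;
constexpr std::uint8_t CW_RGB = CW_RED | CW_GREEN | CW_BLUE;

static float Eval(Factor F, const RGBA& Src)
{
    switch (F)
    {
    case Factor::Zero:               return 0.0f;
    case Factor::One:                return 1.0f;
    case Factor::SourceAlpha:        return Src.a;
    case Factor::InverseSourceAlpha: return 1.0f - Src.a;
    }
    return 0.0f;
}

struct BlendStateSketch
{
    std::uint8_t WriteMask;
    Factor ColorSrc, ColorDst; // color blend op fixed to Add in this sketch
    Factor AlphaSrc, AlphaDst; // alpha blend op fixed to Add in this sketch
};

RGBA Blend(const BlendStateSketch& S, const RGBA& Src, const RGBA& Dst)
{
    const float fs = Eval(S.ColorSrc, Src), fd = Eval(S.ColorDst, Src);
    const float as = Eval(S.AlphaSrc, Src), ad = Eval(S.AlphaDst, Src);
    const RGBA Blended{Src.r * fs + Dst.r * fd, Src.g * fs + Dst.g * fd,
                       Src.b * fs + Dst.b * fd, Src.a * as + Dst.a * ad};
    // Channels excluded by the write mask keep the destination value.
    return {(S.WriteMask & CW_RED) ? Blended.r : Dst.r,
            (S.WriteMask & CW_GREEN) ? Blended.g : Dst.g,
            (S.WriteMask & CW_BLUE) ? Blended.b : Dst.b,
            (S.WriteMask & CW_ALPHA) ? Blended.a : Dst.a};
}

// Equivalent of CopyBlendState above.
constexpr BlendStateSketch CopyBlendStateSketch{CW_RGB, Factor::SourceAlpha, Factor::InverseSourceAlpha, Factor::Zero, Factor::One};
```

Given a source alpha of 0.25, blending pure red over pure blue gives (0.25, 0, 0.75). The destination alpha is left untouched because `CW_RGB` excludes the alpha channel.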

### FRHIDepthStencilState
```c++
TStaticDepthStencilState<
    bEnableDepthWrite,             // enable depth writes
    DepthTest,                     // depth test comparison function
    bEnableFrontFaceStencil,       // (front face) enable stencil
    FrontFaceStencilTest,          // (front face) stencil test comparison function
    FrontFaceStencilFailStencilOp, // (front face) how to update the stencil buffer when the stencil test fails
    FrontFaceDepthFailStencilOp,   // (front face) how to update the stencil buffer when the depth test fails
    FrontFacePassStencilOp,        // (front face) how to update the stencil buffer when both stencil and depth tests pass
    bEnableBackFaceStencil,        // (back face) enable stencil
    BackFaceStencilTest,           // (back face) stencil test comparison function
    BackFaceStencilFailStencilOp,  // (back face) how to update the stencil buffer when the stencil test fails
    BackFaceDepthFailStencilOp,    // (back face) how to update the stencil buffer when the depth test fails
    BackFacePassStencilOp,         // (back face) how to update the stencil buffer when both stencil and depth tests pass
    StencilReadMask,               // stencil read mask
    StencilWriteMask               // stencil write mask
>
```

```c++
// Typical usage: depth writes on, depth test "nearer or equal"
TStaticDepthStencilState<true, CF_DepthNearOrEqual>::GetRHI();
// As used by CustomStencil: the front-face stencil test always passes, and StencilRef is written (SO_Replace) where the depth test passes too
TStaticDepthStencilState<true, CF_DepthNearOrEqual, true, CF_Always, SO_Keep, SO_Keep, SO_Replace, false, CF_Always, SO_Keep, SO_Keep, SO_Keep, 255, 255>::GetRHI();
```

#### DepthTest
Depth test comparison function.
```c++
enum ECompareFunction
{
    CF_Less,
    CF_LessEqual,
    CF_Greater,
    CF_GreaterEqual,
    CF_Equal,
    CF_NotEqual,
    CF_Never,  // always fails (returns false)
    CF_Always, // always passes (returns true)

    ECompareFunction_Num,
    ECompareFunction_NumBits = 3,

    // Utility enumerations
    // With an inverted (reversed-Z) depth buffer, "nearer" means a larger depth value
    CF_DepthNearOrEqual = (((int32)ERHIZBuffer::IsInverted != 0) ? CF_GreaterEqual : CF_LessEqual),
    CF_DepthNear = (((int32)ERHIZBuffer::IsInverted != 0) ? CF_Greater : CF_Less),
    CF_DepthFartherOrEqual = (((int32)ERHIZBuffer::IsInverted != 0) ? CF_LessEqual : CF_GreaterEqual),
    CF_DepthFarther = (((int32)ERHIZBuffer::IsInverted != 0) ? CF_Less : CF_Greater),
};
```

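The following hypothetical sketch shows how the `CF_Depth*` aliases resolve. With an inverted (reversed-Z) depth buffer the near plane maps to 1, so "near or equal" becomes `GreaterEqual`. The `CompareFn` enum mirrors `ECompareFunction` only for illustration. Following the D3D convention, the incoming value is compared against the stored one.

```cpp
#include <cassert>

// Illustrative mirror of ECompareFunction (not the UE enum itself).
enum class CompareFn { Less, LessEqual, Greater, GreaterEqual, Equal, NotEqual, Never, Always };

// Mirrors CF_DepthNearOrEqual / CF_DepthNear for a given ERHIZBuffer::IsInverted value.
constexpr CompareFn DepthNearOrEqual(bool bInverted) { return bInverted ? CompareFn::GreaterEqual : CompareFn::LessEqual; }
constexpr CompareFn DepthNear(bool bInverted) { return bInverted ? CompareFn::Greater : CompareFn::Less; }

// The test passes when "Incoming <op> Stored" holds.
bool PassesDepthTest(CompareFn Fn, float Incoming, float Stored)
{
    switch (Fn)
    {
    case CompareFn::Less:         return Incoming < Stored;
    case CompareFn::LessEqual:    return Incoming <= Stored;
    case CompareFn::Greater:      return Incoming > Stored;
    case CompareFn::GreaterEqual: return Incoming >= Stored;
    case CompareFn::Equal:        return Incoming == Stored;
    case CompareFn::NotEqual:     return Incoming != Stored;
    case CompareFn::Never:        return false;
    case CompareFn::Always:       return true;
    }
    assert(false && "unknown compare function");
    return false;
}
```

The closer fragment wins under either convention. This is why engine code uses the aliases instead of hard-coding `CF_LessEqual`.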

Stencil operations (`EStencilOp`), used by the `...StencilOp` template parameters above:
```c++
enum EStencilOp
{
    SO_Keep,
    SO_Zero,
    SO_Replace,
    SO_SaturatedIncrement,
    SO_SaturatedDecrement,
    SO_Invert,
    SO_Increment,
    SO_Decrement,

    EStencilOp_Num,
    EStencilOp_NumBits = 3,
};
```

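This hypothetical sketch shows the `EStencilOp` semantics on an 8-bit stencil value. It also covers the front-face path of the CustomStencil state shown earlier: `CF_Always`, `SO_Keep`, `SO_Keep`, `SO_Replace`, with masks of 255. The names are illustrative, and the read mask is omitted because `CF_Always` never reads the stored value.

```cpp
#include <cassert>
#include <cstdint>

enum class StencilOp { Keep, Zero, Replace, SaturatedIncrement, SaturatedDecrement, Invert, Increment, Decrement };

// Applies one stencil op to an 8-bit stencil value; Ref is the StencilRef.
std::uint8_t ApplyStencilOp(StencilOp Op, std::uint8_t Value, std::uint8_t Ref)
{
    switch (Op)
    {
    case StencilOp::Keep:               return Value;
    case StencilOp::Zero:               return 0;
    case StencilOp::Replace:            return Ref;
    case StencilOp::SaturatedIncrement: return static_cast<std::uint8_t>(Value == 255 ? 255 : Value + 1);
    case StencilOp::SaturatedDecrement: return static_cast<std::uint8_t>(Value == 0 ? 0 : Value - 1);
    case StencilOp::Invert:             return static_cast<std::uint8_t>(~Value);
    case StencilOp::Increment:          return static_cast<std::uint8_t>(Value + 1); // wraps 255 -> 0
    case StencilOp::Decrement:          return static_cast<std::uint8_t>(Value - 1); // wraps 0 -> 255
    }
    return Value;
}

// Front face of the CustomStencil state: the stencil test is CF_Always, so only the depth
// result selects between DepthFail (SO_Keep) and Pass (SO_Replace).
std::uint8_t CustomStencilFrontFace(bool bDepthPassed, std::uint8_t Stored, std::uint8_t StencilRef, std::uint8_t WriteMask = 255)
{
    const std::uint8_t Result = ApplyStencilOp(bDepthPassed ? StencilOp::Replace : StencilOp::Keep, Stored, StencilRef);
    // Only bits set in the write mask are updated.
    return static_cast<std::uint8_t>((Stored & ~WriteMask) | (Result & WriteMask));
}
```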

### CustomStencil
#### InitCustomDepthStencilContext()
Creates different resources depending on whether the current platform can output results directly from a compute shader (bComputeExport) and whether the stencil buffer is written. The result is an FCustomDepthContext.
```c++
struct FCustomDepthContext
{
    FRDGTextureRef InputDepth = nullptr;
    FRDGTextureSRVRef InputStencilSRV = nullptr;
    FRDGTextureRef DepthTarget = nullptr;
    FRDGTextureRef StencilTarget = nullptr;
    bool bComputeExport = true;
};
```

#### EmitCustomDepthStencilTargets()
Depending on bComputeExport, outputs DepthStencil with either an RDG compute shader or an RDG pixel shader:
- CS: FComputeShaderUtils::AddPass()
- PS: **EmitCustomDepthStencilPS()** in NaniteExportGBuffer.usf, via FPixelShaderUtils::AddFullscreenPass()

Taking **FEmitCustomDepthStencilPS** (NaniteExportGBuffer.usf) as an example, the additional Nanite-related inputs are:
- `FSceneUniformParameters Scene`
- `StructuredBuffer<FPackedView> InViews`
- `ByteAddressBuffer VisibleClustersSWHW`?
- `FIntVector4 PageConstants`
- `Texture2D<UlongType> VisBuffer64`
- `ByteAddressBuffer MaterialSlotTable`

#### FinalizeCustomDepthStencil()
Replaces the output Depth & Stencil.

# FViewInfo
FViewInfo& ViewInfo
- `WriteView.bSceneHasSkyMaterial |= bSceneHasSkyMaterial;`
- `WriteView.bHasSingleLayerWaterMaterial |= bHasSingleLayerWaterMaterial;`
- `WriteView.bHasCustomDepthPrimitives |= bHasCustomDepthPrimitives;`
- `WriteView.bHasDistortionPrimitives |= bHasDistortionPrimitives;`
- `WriteView.bUsesCustomDepth |= bUsesCustomDepth;`
- `WriteView.bUsesCustomStencil |= bUsesCustomStencil;`

- FRelevancePacket::Finalize()

Relevance:
- Relevance definitions
    - FStaticMeshBatchRelevance
    - FMaterialRelevance
- View relevance computation
    - FViewInfo::Init()
    - FRelevancePacket
    - FRelevancePacket::Finalize()

# Related Macros
- `SCOPE_CYCLE_COUNTER(STAT_BasePassDrawTime);`
    - `DECLARE_CYCLE_STAT_EXTERN(TEXT("Base pass drawing"),STAT_BasePassDrawTime,STATGROUP_SceneRendering, RENDERCORE_API);`
    - `DEFINE_STAT(STAT_BasePassDrawTime);`
- `DEFINE_GPU_STAT(NaniteBasePass);`
- `DECLARE_GPU_STAT_NAMED_EXTERN(NaniteBasePass, TEXT("Nanite BasePass"));`
- `GET_STATID(STAT_CLP_BasePass)`
- `FRDGParallelCommandListSet ParallelCommandListSet(InPass, RHICmdList, GET_STATID(STAT_CLP_BasePass), View, FParallelCommandListBindings(PassParameters));`
- `DECLARE_CYCLE_STAT(TEXT("BasePass"), STAT_CLP_BasePass, STATGROUP_ParallelCommandListMarkers);`

# NaniteMeshDraw
`Engine\Source\Runtime\Renderer\Private\Nanite\NaniteMaterials.h` & `NaniteMaterials.cpp`

P.S. The shader used must be a subclass of `FNaniteGlobalShader`.

Below is how Nanite objects are drawn into CustomDepth:
```c++
bool FSceneRenderer::RenderCustomDepthPass(
    FRDGBuilder& GraphBuilder,
    FCustomDepthTextures& CustomDepthTextures,
    const FSceneTextureShaderParameters& SceneTextures,
    TConstArrayView<Nanite::FRasterResults> PrimaryNaniteRasterResults,
    TConstArrayView<Nanite::FPackedView> PrimaryNaniteViews)
{
    if (!CustomDepthTextures.IsValid())
    {
        return false;
    }

    // Determine if any of the views have custom depth and if any of them have Nanite that is rendering custom depth
    // Build the NaniteDrawLists used by the drawing below
    bool bAnyCustomDepth = false;
    TArray<FNaniteCustomDepthDrawList, SceneRenderingAllocator> NaniteDrawLists;
    NaniteDrawLists.AddDefaulted(Views.Num());
    uint32 TotalNaniteInstances = 0;
    for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ++ViewIndex)
    {
        FViewInfo& View = Views[ViewIndex];
        if (View.ShouldRenderView() && View.bHasCustomDepthPrimitives)
        {
            if (PrimaryNaniteRasterResults.IsValidIndex(ViewIndex))
            {
                const FNaniteVisibilityResults& VisibilityResults = PrimaryNaniteRasterResults[ViewIndex].VisibilityResults;

                // Get the Nanite instance draw list for this view. (NOTE: Always use view index 0 for now because we're not doing
                // multi-view yet).
                NaniteDrawLists[ViewIndex] = BuildNaniteCustomDepthDrawList(View, 0u, VisibilityResults);

                TotalNaniteInstances += NaniteDrawLists[ViewIndex].Num();
            }
            bAnyCustomDepth = true;
        }
    }

    SET_DWORD_STAT(STAT_NaniteCustomDepthInstances, TotalNaniteInstances);
    // ..
    if (TotalNaniteInstances > 0)
    {
        RDG_EVENT_SCOPE(GraphBuilder, "Nanite CustomDepth");

        const FIntPoint RasterTextureSize = CustomDepthTextures.Depth->Desc.Extent;
        FIntRect RasterTextureRect(0, 0, RasterTextureSize.X, RasterTextureSize.Y);
        if (Views.Num() == 1)
        {
            const FViewInfo& View = Views[0];
            if (View.ViewRect.Min.X == 0 && View.ViewRect.Min.Y == 0)
            {
                RasterTextureRect = View.ViewRect;
            }
        }

        const bool bWriteCustomStencil = IsCustomDepthPassWritingStencil();

        Nanite::FSharedContext SharedContext{};
        SharedContext.FeatureLevel = Scene->GetFeatureLevel();
        SharedContext.ShaderMap = GetGlobalShaderMap(SharedContext.FeatureLevel);
        SharedContext.Pipeline = Nanite::EPipeline::Primary;
