

| title | date | excerpt | tags | rating |
|---|---|---|---|---|
| Dissecting the Unreal Rendering System (04): Deferred Rendering Pipeline | 2024-02-07 22:29:32 | | | ⭐ |

Preface

https://www.cnblogs.com/timlly/p/14732412.html

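Deferred Rendering Pipeline

Because the most expensive work, the lighting computation, is deferred to a post-processing stage, its cost is decoupled from the number of objects in the scene and depends only on the Render Target size: the complexity is $O(N_{light} \times W_{RT} \times H_{RT})$. Deferred rendering therefore copes well with complex scenes that contain many lights, and often yields a substantial performance gain.

It also has drawbacks: it needs an extra pass to draw the geometry information, requires multiple render target (MRT) support, uses more CPU memory and GPU video memory, demands more bandwidth, supports a limited range of material types, makes hardware anti-aliasing such as MSAA hard to use, and can produce a blurrier image. For simple scenes it may bring no performance gain at all. Deferred rendering can be combined with different optimizations for different platforms and APIs, which has produced many variants. Some of them are described below: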

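Deferred Lighting (Light Pre-Pass)

Also known as Light Pre-Pass. It differs from Deferred Shading in that it needs three passes:

- The first pass is the Geometry Pass: it outputs only the geometric attributes each pixel needs for lighting (normal, depth) to the GBuffer.

- The second pass is the Lighting Pass: it stores light attributes (such as Normal * LightDir, LightColor, Specular) in the LBuffer (Light Buffer).

- The third pass is the Secondary Geometry Pass: it reads the GBuffer and LBuffer, reconstructs the data each pixel needs for lighting, and performs the lighting computation.

Compared with Deferred Shading, Deferred Lighting sharply reduces the required G-Buffer size, allows more material types, and supports MSAA better. Its drawback is that the scene has to be drawn twice, which increases the number of Draw Calls. Deferred Lighting also has an optimized version whose approach differs slightly from the one above; see the Light Pre-Pass literature for details.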

Tiled-Based Deferred Rendering (TBDR)

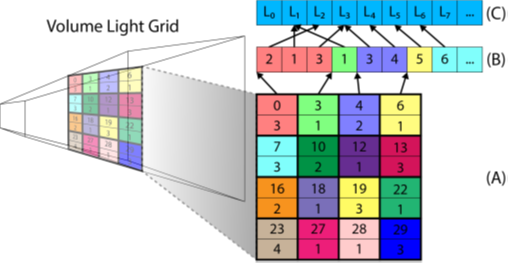

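Tiled-Based Deferred Rendering (TBDR) divides the render target into a regular grid of rectangles called Tiles, then uses each Tile's bounding box to cull the lights that cannot affect that Tile, keeping only the list of lights that do. This removes the cost of evaluating irrelevant lights during the actual lighting computation.

- Divide the render target into small blocks (Tiles) of equal area. See figure (b) above.

Tiles have no universal fixed size; it varies across platform architectures and renderers. The size is usually a power of two, and width and height need not be equal: 16x16, 32x32, 64x64 and so on. Tiles should be neither too small nor too large, otherwise the optimization loses most of its effect. PowerVR GPUs typically use 32x32, while ARM Mali GPUs use 16x16.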

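- Compute each Tile's Bounding Box from the depth range inside it.

The depth range inside each TBDR Tile may differ, which yields Bounding Boxes of different sizes.

- Intersect the Tile's Bounding Box with each Light's Bounding Box.

Except for directional lights, which have no attenuation, every light type can derive its Bounding Box from its position and attenuation.

- Discard the Lights that do not intersect, leaving the list of Lights that affect the Tile. See figure (c) above.

- Iterate over all Tiles, fetch each Tile's list of effective light indices, and compute the lighting for every pixel in that Tile.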
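The culling steps above can be sketched on the CPU for a single Tile. This is only a minimal illustration under stated assumptions: the `Aabb` and `PointLight` types and both function names are hypothetical, the Tile box is assumed to be already expressed in view space, and every light is treated as a sphere defined by its position and attenuation radius.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical types for illustration; they do not come from any real engine.
struct Aabb { float min[3]; float max[3]; };        // view-space box of one Tile
struct PointLight { float pos[3]; float radius; };  // view-space position and attenuation radius

// Sphere-vs-AABB test: clamp the sphere centre to the box, then compare the
// squared distance to the clamped point with the squared radius.
inline bool Intersects(const Aabb& box, const PointLight& light)
{
    float distSq = 0.0f;
    for (int axis = 0; axis < 3; ++axis)
    {
        const float clamped = std::max(box.min[axis], std::min(light.pos[axis], box.max[axis]));
        const float d = light.pos[axis] - clamped;
        distSq += d * d;
    }
    return distSq <= light.radius * light.radius;
}

// Returns the indices of the lights whose bounds touch the Tile's bounding box.
inline std::vector<uint32_t> CullLightsForTile(const Aabb& tileBox, const std::vector<PointLight>& lights)
{
    assert(tileBox.min[2] <= tileBox.max[2]); // the Tile's depth range must be ordered
    std::vector<uint32_t> visible;
    for (uint32_t i = 0; i < lights.size(); ++i)
    {
        if (Intersects(tileBox, lights[i]))
        {
            visible.push_back(i);
        }
    }
    return visible;
}
```

A GPU implementation would typically run one thread group per Tile and derive the box from the Tile's min/max depth, but the intersection logic has the same shape.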

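Because TBDR discards many ineffective lights and so avoids a great deal of wasted lighting work, it is now widely used in mobile GPU architectures, giving rise to hardware-accelerated TBDR:

Compared with an immediate-mode architecture, PowerVR's TBDR architecture adds a Tiling stage after clipping and before rasterization, plus an On-Chip Depth Buffer and Color Buffer for faster access to depth and color.

The figure below shows the hardware architecture of the Series7XT GPUs in the PowerVR Rogue family: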

Clustered Deferred Rendering

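Clustered Deferred Rendering differs from TBDR in that it subdivides depth at a finer granularity. This avoids the drop in light-culling efficiency that TBDR suffers when the depth range jumps sharply (with no valid pixels in between).

The core idea of Clustered Deferred Rendering is to split depth into several slices in some manner, so that each cluster's bounding box is computed more precisely and lights are culled more accurately, avoiding the loss of culling efficiency where depth is discontinuous.

The clustering in the figure above is called implicit clustering. There is also explicit clustering, which refines the depth subdivision further by computing each cluster's bounding box from the actual depth range:

Explicit depth clustering locates each cluster's bounding box more precisely.

The figure below compares the Tiled, Implicit, and Explicit depth subdivision methods: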
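As a rough sketch of implicit clustering, the function below maps a view-space depth to a slice index. The text only says depth is split "in some manner"; the exponential spacing used here is an assumption (a common choice, since it keeps slices thin near the camera), and `DepthToSlice` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Maps a view-space depth to a slice index in [0, numSlices), assuming
// exponentially spaced slices between zNear and zFar.
inline int DepthToSlice(float viewZ, float zNear, float zFar, int numSlices)
{
    assert(zNear > 0.0f && zFar > zNear && numSlices > 0);
    const float z = std::max(zNear, std::min(viewZ, zFar));        // keep depth inside the range
    const float t = std::log(z / zNear) / std::log(zFar / zNear);  // 0 at near plane, 1 at far plane
    return std::min(static_cast<int>(t * static_cast<float>(numSlices)), numSlices - 1);
}
```

Combining the slice index with the Tile's screen coordinates gives a 3D cluster coordinate; explicit clustering would additionally tighten each cluster's bounds to the depths actually present.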

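Visibility Buffer

Visibility Buffer is very similar to Deferred Texturing and is a bolder refinement of Deferred Lighting. The core idea: to reduce the GBuffer footprint (a large GBuffer means high bandwidth and high power consumption), render a Visibility Buffer instead of a GBuffer. The Visibility Buffer stores only the triangle id and instance id. With these, the shading stage reads the vertex attributes and texture data it actually needs from a UAV and bindless textures, and computes the mip level itself from the uv derivatives (figure below).

Comparison of the GBuffer and Visibility Buffer rendering pipelines. The latter replaces the former's Geometry Pass with a Visibility stage that records only the triangle id and instance id, which can be packed into a 4-byte buffer, greatly reducing video memory usage.

Although this method reduces buffer usage, it requires bindless texture support and is not friendly to the GPU cache: triangle and instance ids jump widely between adjacent pixels, which reduces the cache's spatial locality.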
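To make the 4-byte packing concrete, here is a minimal sketch. The bit split (12 bits of instance id, 20 bits of triangle id) is purely an assumption for illustration; real implementations choose their own layout, and all names here are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical layout: high 12 bits hold the instance id, low 20 bits the triangle id.
constexpr uint32_t kTriangleBits = 20;
constexpr uint32_t kTriangleMask = (1u << kTriangleBits) - 1u;
constexpr uint32_t kMaxInstanceId = (1u << (32u - kTriangleBits)) - 1u;

// Packs both ids into one 32-bit visibility value.
inline uint32_t PackVisibility(uint32_t instanceId, uint32_t triangleId)
{
    assert(instanceId <= kMaxInstanceId && triangleId <= kTriangleMask);
    return (instanceId << kTriangleBits) | triangleId;
}

inline uint32_t UnpackInstanceId(uint32_t packed) { return packed >> kTriangleBits; }
inline uint32_t UnpackTriangleId(uint32_t packed) { return packed & kTriangleMask; }
```

The shading stage would unpack these two ids per pixel and use them to fetch vertex attributes, which is exactly where the cache-locality concern mentioned above comes from.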

Deferred Adaptive Compute Shading

The core idea of Deferred Adaptive Compute Shading (DACS) is to partition screen pixels into blocks at 5 levels of granularity, so that it can decide whether a pixel is interpolated directly from the neighboring level or shaded anew.

When rendering different UE4 scenes, this method achieves good scores in root-mean-square error (RMSE), peak signal-to-noise ratio (PSNR), and mean structural similarity (MSSIM) (figure below).

Rendering the same scene and frame, and compared with Checkerboard shading, DACS has an RMSE of only 21.5% of Checkerboard's for the same time budget, and is 4.22 times faster at the same image quality (MSSIM).

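Forward Rendering

Forward+ Rendering

Forward+, also known as Tiled Forward Rendering, adds a light-culling stage to raise the number of lights that forward rendering can handle. It has 3 passes: depth prepass, light culling pass, and shading pass.

The light culling pass resembles tiled deferred rendering: it divides the screen into Tiles, intersects each Tile with the lights, and writes the effective lights into the Tile's light list to reduce the number of lights in the shading stage.

Forward+ can produce false positives at geometry boundaries because the Tile frustums are elongated; this can be improved with the separating axis theorem (SAT).

Cluster Forward Rendering

Cluster Forward Rendering is similar to Cluster Deferred Rendering: it divides screen space into equal Tiles and subdivides depth into clusters, so lights are culled at a finer granularity. The algorithm is similar, so it is not repeated here.

Volume Tiled Forward Rendering

Volume Tiled Forward Rendering extends Tiled and Clustered forward rendering and aims to raise the number of lights a scene can support. The paper's authors claim that a scene with 4 million lights can be rendered in real time at 30 FPS. It consists of the following steps: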

1. Initialization phase:

1.1 Compute the size of the Grid (Volume Tile). Given the Tile size $(t_x, t_y)$ and the screen resolution $(w, h)$, the number of screen subdivisions $(S_x, S_y)$ is:

$$(S_x, S_y) = \left( \left| \frac{w}{t_x} \right|,\ \left| \frac{h}{t_y} \right| \right)$$

The number of subdivisions along depth is:

$$S_Z = \left| \frac{\log\left(Z_{far} / Z_{near}\right)}{\log\left(1 + \frac{2\tan\theta}{S_y}\right)} \right|$$

1.2 Compute the AABB of each Volume Tile. With reference to the figure below, the AABB bounds of each Tile are computed as follows:

$$\begin{aligned}
k_{near} &= Z_{near}\left(1 + \frac{2\tan\theta}{S_y}\right)^{k} \\
k_{far} &= Z_{near}\left(1 + \frac{2\tan\theta}{S_y}\right)^{k+1} \\
p_{min} &= (S_x \cdot i,\ S_y \cdot j) \\
p_{max} &= (S_x \cdot (i+1),\ S_y \cdot (j+1))
\end{aligned}$$
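The grid-size and depth-bound formulas can be tried out with a short sketch. Several things here are assumptions rather than statements from the text: the screen subdivisions are rounded up and the depth subdivisions rounded down to whole numbers, $\theta$ is taken to be half the vertical field of view in radians, and the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct GridDims { int sx; int sy; int sz; };

// Computes the number of subdivisions along x, y and depth.
// Assumption: x/y counts are rounded up, the depth count is rounded down.
inline GridDims ComputeGridDims(int w, int h, int tx, int ty, float zNear, float zFar, float theta)
{
    assert(w > 0 && h > 0 && tx > 0 && ty > 0 && zNear > 0.0f && zFar > zNear);
    GridDims dims;
    dims.sx = (w + tx - 1) / tx; // integer ceil(w / tx)
    dims.sy = (h + ty - 1) / ty; // integer ceil(h / ty)
    const float ratio = 1.0f + 2.0f * std::tan(theta) / static_cast<float>(dims.sy);
    dims.sz = static_cast<int>(std::floor(std::log(zFar / zNear) / std::log(ratio)));
    return dims;
}

// Near depth bound of slice k: Znear * (1 + 2*tan(theta)/Sy)^k.
inline float SliceNear(float zNear, float theta, int sy, int k)
{
    const float ratio = 1.0f + 2.0f * std::tan(theta) / static_cast<float>(sy);
    return zNear * std::pow(ratio, static_cast<float>(k));
}

// Far depth bound of slice k, which is the near bound of slice k + 1.
inline float SliceFar(float zNear, float theta, int sy, int k)
{
    return SliceNear(zNear, theta, sy, k + 1);
}
```

Because each slice is a constant factor deeper than the previous one, slices are thin near the camera and thick far away, which is the self-similarity the method is named for.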

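2. Update phase:

2.1 Depth pre-pass. Records the depth of non-translucent objects only.

2.2 Mark the active Tiles.

2.3 Build and compact the Tile list.

2.4 Assign lights to Tiles. Each thread group processes one active Volume Tile, intersects the Tile's AABB with every light in the scene (a BVH can reduce the number of tests), and records the indices of the intersecting lights in that Tile's light list. Each Tile's light data consists of a start offset into the light list and a light count.

2.5 Shading. This stage does not differ from the methods described earlier.

Voxel-tiled rendering can handle huge numbers of lights, but it suffers from a rising Draw Call count and from self-similar volume tiles (voxels near the camera are tiny while distant ones are very large).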
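The "start offset plus count" layout from step 2.4 can be sketched as follows. This is a CPU-side illustration only; the input is assumed to be the per-Tile intersection results, and `TileLightRange`, `LightGrid` and `BuildLightGrid` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Per-Tile entry: where the Tile's lights start in the shared list, and how many there are.
struct TileLightRange { uint32_t offset; uint32_t count; };

struct LightGrid
{
    std::vector<TileLightRange> ranges;  // one entry per active Tile
    std::vector<uint32_t> lightIndices;  // shared, tightly packed light index list
};

// perTileLights[t] holds the indices of the lights that intersect Tile t.
inline LightGrid BuildLightGrid(const std::vector<std::vector<uint32_t>>& perTileLights)
{
    LightGrid grid;
    grid.ranges.reserve(perTileLights.size());
    for (const std::vector<uint32_t>& lights : perTileLights)
    {
        const uint32_t offset = static_cast<uint32_t>(grid.lightIndices.size());
        grid.lightIndices.insert(grid.lightIndices.end(), lights.begin(), lights.end());
        grid.ranges.push_back(TileLightRange{offset, static_cast<uint32_t>(lights.size())});
    }
    assert(grid.ranges.size() == perTileLights.size());
    return grid;
}
```

During shading, a pixel looks up its Tile's range and loops over `lightIndices[offset .. offset + count)`, so Tiles with no lights cost only one range read.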

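UE Rendering

FSceneRenderer

FSceneRenderer is the base class of UE's scene renderers. It is the brain and engine of the UE rendering system and holds a pivotal position in it: it processes and renders the scene and generates the rendering commands for the RHI layer.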

// Engine\Source\Runtime\Renderer\Private\SceneRendering.h

// Scene renderer

class FSceneRenderer

{

public:

FScene* Scene; // The scene being rendered

FSceneViewFamily ViewFamily; // The view family being rendered (holds every view that needs rendering).

TArray<FViewInfo> Views; // The view instances to render.

FMeshElementCollector MeshCollector; // Mesh collector

FMeshElementCollector RayTracingCollector; // Ray tracing mesh collector

// Visible light information

TArray<FVisibleLightInfo,SceneRenderingAllocator> VisibleLightInfos;

// Shadow-related data

TArray<FParallelMeshDrawCommandPass*, SceneRenderingAllocator> DispatchedShadowDepthPasses;

FSortedShadowMaps SortedShadowsForShadowDepthPass;

// Special flags

bool bHasRequestedToggleFreeze;

bool bUsedPrecomputedVisibility;

// Names of the point lights that use whole-scene shadows (toggled via r.SupportPointLightWholeSceneShadows)

TArray<FName, SceneRenderingAllocator> UsedWholeScenePointLightNames;

// Platform feature level information

ERHIFeatureLevel::Type FeatureLevel;

EShaderPlatform ShaderPlatform;

(......)

public:

FSceneRenderer(const FSceneViewFamily* InViewFamily,FHitProxyConsumer* HitProxyConsumer);

virtual ~FSceneRenderer();

// FSceneRenderer interface (note that some members have empty bodies and some are pure virtual)

// Rendering entry point

virtual void Render(FRHICommandListImmediate& RHICmdList) = 0;

virtual void RenderHitProxies(FRHICommandListImmediate& RHICmdList) {}

// Creates a scene renderer instance

static FSceneRenderer* CreateSceneRenderer(const FSceneViewFamily* InViewFamily, FHitProxyConsumer* HitProxyConsumer);

void PrepareViewRectsForRendering();

#if WITH_MGPU // Multi-GPU support

void ComputeViewGPUMasks(FRHIGPUMask RenderTargetGPUMask);

#endif

// Updates the contents of the render target that each view renders into

void DoCrossGPUTransfers(FRHICommandListImmediate& RHICmdList, FRHIGPUMask RenderTargetGPUMask);

// Occlusion query interface and data

bool DoOcclusionQueries(ERHIFeatureLevel::Type InFeatureLevel) const;

void BeginOcclusionTests(FRHICommandListImmediate& RHICmdList, bool bRenderQueries);

static FGraphEventRef OcclusionSubmittedFence[FOcclusionQueryHelpers::MaxBufferedOcclusionFrames];

void FenceOcclusionTests(FRHICommandListImmediate& RHICmdList);

void WaitOcclusionTests(FRHICommandListImmediate& RHICmdList);

bool ShouldDumpMeshDrawCommandInstancingStats() const { return bDumpMeshDrawCommandInstancingStats; }

static FGlobalBoundShaderState OcclusionTestBoundShaderState;

static bool ShouldCompositeEditorPrimitives(const FViewInfo& View);

// Waits for the scene renderer to finish, cleans up, and finally deletes it

static void WaitForTasksClearSnapshotsAndDeleteSceneRenderer(FRHICommandListImmediate& RHICmdList, FSceneRenderer* SceneRenderer, bool bWaitForTasks = true);

static void DelayWaitForTasksClearSnapshotsAndDeleteSceneRenderer(FRHICommandListImmediate& RHICmdList, FSceneRenderer* SceneRenderer);

// Other interfaces

static FIntPoint ApplyResolutionFraction(...);

static FIntPoint QuantizeViewRectMin(const FIntPoint& ViewRectMin);

static FIntPoint GetDesiredInternalBufferSize(const FSceneViewFamily& ViewFamily);

static ISceneViewFamilyScreenPercentage* ForkScreenPercentageInterface(...);

static int32 GetRefractionQuality(const FSceneViewFamily& ViewFamily);

protected:

(......)

#if WITH_MGPU // Multi-GPU support

FRHIGPUMask AllViewsGPUMask;

FRHIGPUMask GetGPUMaskForShadow(FProjectedShadowInfo* ProjectedShadowInfo) const;

#endif

// ----Interfaces shared by all renderers----

// --Rendering flow and MeshPass interfaces--

void OnStartRender(FRHICommandListImmediate& RHICmdList);

void RenderFinish(FRHICommandListImmediate& RHICmdList);

void SetupMeshPass(FViewInfo& View, FExclusiveDepthStencil::Type BasePassDepthStencilAccess, FViewCommands& ViewCommands);

void GatherDynamicMeshElements(...);

void RenderDistortion(FRHICommandListImmediate& RHICmdList);

void InitFogConstants();

bool ShouldRenderTranslucency(ETranslucencyPass::Type TranslucencyPass) const;

void RenderCustomDepthPassAtLocation(FRHICommandListImmediate& RHICmdList, int32 Location);

void RenderCustomDepthPass(FRHICommandListImmediate& RHICmdList);

void RenderPlanarReflection(class FPlanarReflectionSceneProxy* ReflectionSceneProxy);

void InitSkyAtmosphereForViews(FRHICommandListImmediate& RHICmdList);

void RenderSkyAtmosphereLookUpTables(FRHICommandListImmediate& RHICmdList);

void RenderSkyAtmosphere(FRHICommandListImmediate& RHICmdList);

void RenderSkyAtmosphereEditorNotifications(FRHICommandListImmediate& RHICmdList);

// ---Shadow interfaces---

void InitDynamicShadows(FRHICommandListImmediate& RHICmdList, FGlobalDynamicIndexBuffer& DynamicIndexBuffer, FGlobalDynamicVertexBuffer& DynamicVertexBuffer, FGlobalDynamicReadBuffer& DynamicReadBuffer);

bool RenderShadowProjections(FRHICommandListImmediate& RHICmdList, const FLightSceneInfo* LightSceneInfo, IPooledRenderTarget* ScreenShadowMaskTexture, IPooledRenderTarget* ScreenShadowMaskSubPixelTexture, bool bProjectingForForwardShading, bool bMobileModulatedProjections, const struct FHairStrandsVisibilityViews* InHairVisibilityViews);

TRefCountPtr<FProjectedShadowInfo> GetCachedPreshadow(...);

void CreatePerObjectProjectedShadow(...);

void SetupInteractionShadows(...);

void AddViewDependentWholeSceneShadowsForView(...);

void AllocateShadowDepthTargets(FRHICommandListImmediate& RHICmdList);

void AllocatePerObjectShadowDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateCachedSpotlightShadowDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateCSMDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateRSMDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateOnePassPointLightDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateTranslucentShadowDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

bool CheckForProjectedShadows(const FLightSceneInfo* LightSceneInfo) const;

void GatherShadowPrimitives(...);

void RenderShadowDepthMaps(FRHICommandListImmediate& RHICmdList);

void RenderShadowDepthMapAtlases(FRHICommandListImmediate& RHICmdList);

void CreateWholeSceneProjectedShadow(FLightSceneInfo* LightSceneInfo, ...);

void UpdatePreshadowCache(FSceneRenderTargets& SceneContext);

void InitProjectedShadowVisibility(FRHICommandListImmediate& RHICmdList);

void GatherShadowDynamicMeshElements(FGlobalDynamicIndexBuffer& DynamicIndexBuffer, FGlobalDynamicVertexBuffer& DynamicVertexBuffer, FGlobalDynamicReadBuffer& DynamicReadBuffer);

// --Light interfaces--

static void GetLightNameForDrawEvent(const FLightSceneProxy* LightProxy, FString& LightNameWithLevel);

static void GatherSimpleLights(const FSceneViewFamily& ViewFamily, ...);

static void SplitSimpleLightsByView(const FSceneViewFamily& ViewFamily, ...);

// --Visibility interfaces--

void PreVisibilityFrameSetup(FRHICommandListImmediate& RHICmdList);

void ComputeViewVisibility(FRHICommandListImmediate& RHICmdList, ...);

void PostVisibilityFrameSetup(FILCUpdatePrimTaskData& OutILCTaskData);

// --Other interfaces--

void GammaCorrectToViewportRenderTarget(FRHICommandList& RHICmdList, const FViewInfo* View, float OverrideGamma);

FRHITexture* GetMultiViewSceneColor(const FSceneRenderTargets& SceneContext) const;

void UpdatePrimitiveIndirectLightingCacheBuffers();

bool ShouldRenderSkyAtmosphereEditorNotifications();

void ResolveSceneColor(FRHICommandList& RHICmdList);

(......)

};

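FSceneRenderer is created and initialized by FRendererModule::BeginRenderingViewFamily on the game thread and then handed to the render thread. The render thread calls FSceneRenderer::Render(), and once rendering returns, the FSceneRenderer instance is deleted. In other words, an FSceneRenderer is created and destroyed every frame.

FSceneRenderer has two subclasses: FMobileSceneRenderer and FDeferredShadingSceneRenderer.

FMobileSceneRenderer is the scene renderer for mobile platforms and uses a forward rendering flow by default.

Despite its name, FDeferredShadingSceneRenderer integrates both the forward and the deferred rendering paths, and it is the default scene renderer on PC and console platforms (the author was also misled by this confusing name at first).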
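The per-frame lifecycle described above (create a renderer, call Render(), delete it) can be mimicked with a tiny stand-alone sketch. None of these classes are UE types: they are hypothetical stand-ins, and the real constructors take a view family, a hit proxy consumer and an RHI command list rather than the simple counter used here.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for the renderer base class; counts live instances.
class SceneRendererSketch
{
public:
    virtual ~SceneRendererSketch() { --liveCount; }
    virtual void Render(int& framesRendered) = 0; // abstract render entry point
    static int liveCount;
protected:
    SceneRendererSketch() { ++liveCount; }
};
int SceneRendererSketch::liveCount = 0;

class MobileRendererSketch final : public SceneRendererSketch
{
public:
    void Render(int& framesRendered) override { ++framesRendered; }
};

class DeferredRendererSketch final : public SceneRendererSketch
{
public:
    void Render(int& framesRendered) override { ++framesRendered; }
};

// Factory that picks the subclass, in the spirit of CreateSceneRenderer.
inline std::unique_ptr<SceneRendererSketch> CreateRendererSketch(bool isMobile)
{
    if (isMobile)
    {
        return std::unique_ptr<SceneRendererSketch>(new MobileRendererSketch());
    }
    return std::unique_ptr<SceneRendererSketch>(new DeferredRendererSketch());
}

// Each frame creates a fresh renderer, renders, and destroys it again.
inline int RenderFrames(int frameCount, bool isMobile)
{
    assert(frameCount >= 0);
    int framesRendered = 0;
    for (int frame = 0; frame < frameCount; ++frame)
    {
        std::unique_ptr<SceneRendererSketch> renderer = CreateRendererSketch(isMobile);
        renderer->Render(framesRendered);
    } // the renderer is destroyed at the end of every iteration
    return framesRendered;
}
```

The point of the sketch is only the ownership pattern: no renderer instance outlives the frame that created it.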

FDeferredShadingSceneRenderer

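FDeferredShadingSceneRenderer mainly contains several groups of interfaces: MeshPass, lights, shadows, ray tracing, reflections, visibility, and so on. The most important one is FDeferredShadingSceneRenderer::Render(), the renderer's main entry point; the main flow and the calls to the important interfaces all happen inside it, directly or indirectly.

Its flow can be divided into the following main stages: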

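- FScene::UpdateAllPrimitiveSceneInfos: uploads the data of all primitives to the GPU. If GPUScene is enabled, a 2D texture or StructuredBuffer stores the primitive data.

- FSceneRenderTargets::Allocate: reallocates the scene render targets when needed (resolution change, API trigger) so that they are large enough to render the corresponding view.

- InitViews: initializes primitive visibility using several culling methods and sets up the visible dynamic shadows; when necessary it also intersects shadow frustums with the world (whole-scene shadows and per-object shadows).

- PrePass / Depth only pass: the early depth pass, which renders the depth of opaque objects. It writes depth only, not color, and has three depth-writing modes (disabled, occlusion only, complete depths), chosen by platform and Feature Level. It is usually used to build Hierarchical-Z so that the hardware's Early-Z can be used, improving Base Pass efficiency.

- Base pass: the geometry pass described in earlier chapters. It renders the geometry information of opaque objects (Opaque and Masked materials), including normal, depth, color, AO, roughness, metallic, and so on, into several GBuffers. This stage does not compute the contribution of dynamic lights, but it does compute the contributions of Lightmaps and the sky light.

- Issue Occlusion Queries / BeginOcclusionTests: starts occlusion rendering. The occlusion data rendered in this frame is used for occlusion culling in the next frame's InitViews stage. This stage mainly renders objects' bounding boxes, and may batch the bounding boxes of nearby objects to reduce Draw Calls.

- Lighting: the lighting pass described in earlier chapters, a hybrid of standard deferred shading and tiled deferred shading. It computes the shadow maps of shadow-casting lights, computes each light's contribution to screen-space pixels, and accumulates it into Scene Color. It also computes the lights' contribution to the translucency lighting volumes.

- Fog: computes the effect of fog and atmosphere on opaque surface pixels in screen space.

- Translucency: the translucent rendering stage. All translucent objects are drawn one by one from far to near (in view space) into an offscreen render target (called separate translucent render target in the code), and a separate pass then computes and blends the lighting correctly.

- Post Processing: the post-processing stage. It includes effects that do not need the GBuffer (Bloom, tone mapping, Gamma correction, and so on) and effects that do (SSR, SSAO, SSGI, and so on). This stage blends the translucent render target into the final scene color.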

FScene::UpdateAllPrimitiveSceneInfos

FScene::UpdateAllPrimitiveSceneInfos is called on the first line of FDeferredShadingSceneRenderer::Render:

// Engine\Source\Runtime\Renderer\Private\DeferredShadingRenderer.cpp

void FDeferredShadingSceneRenderer::Render(FRHICommandListImmediate& RHICmdList)

{

Scene->UpdateAllPrimitiveSceneInfos(RHICmdList, true);

(......)

}

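The main job of FScene::UpdateAllPrimitiveSceneInfos is to remove, add, and update primitive data on the CPU side and synchronize it to the GPU. The GPU-side primitive data can be stored in two ways:

- Each primitive has its own Uniform Buffer. A shader that needs the primitive's data reads it from that Uniform Buffer. This structure is simple, easy to understand, and compatible with devices at every FeatureLevel, but it increases CPU-GPU IO and lowers the GPU cache hit rate.

- A GPU Scene backed by a Texture2D or StructuredBuffer, where the data of all primitives is laid out in a regular pattern. A shader that needs a primitive's data reads it from the corresponding location in the GPU Scene. This requires SM5 and is harder to implement and understand, but it reduces CPU-GPU IO, raises the GPU cache hit rate, and better supports ray tracing and a GPU Driven Pipeline.

The two access paths differ, but the shader code wraps them, so users do not need to know which kind of Buffer is in use and only need to use the following: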

// Engine\Shaders\Private\SceneData.ush

struct FPrimitiveSceneData

{

float4x4 LocalToWorld;

float4 InvNonUniformScaleAndDeterminantSign;

float4 ObjectWorldPositionAndRadius;

float4x4 WorldToLocal;

float4x4 PreviousLocalToWorld;

float4x4 PreviousWorldToLocal;

float3 ActorWorldPosition;

float UseSingleSampleShadowFromStationaryLights;

float3 ObjectBounds;

float LpvBiasMultiplier;

float DecalReceiverMask;

float PerObjectGBufferData;

float UseVolumetricLightmapShadowFromStationaryLights;

float DrawsVelocity;

float4 ObjectOrientation;

float4 NonUniformScale;

float3 LocalObjectBoundsMin;

uint LightingChannelMask;

float3 LocalObjectBoundsMax;

uint LightmapDataIndex;

float3 PreSkinnedLocalBoundsMin;

int SingleCaptureIndex;

float3 PreSkinnedLocalBoundsMax;

uint OutputVelocity;

float4 CustomPrimitiveData[NUM_CUSTOM_PRIMITIVE_DATA];

};

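As this shows, each primitive exposes a lot of data and uses a considerable amount of video memory: about 576 bytes per primitive. With 10000 primitives in a scene (common in games), and ignoring padding, the primitive data totals about 5.5 MB.

Back on the C++ side, here is the definition of GPU Scene: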
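The memory estimate is simple arithmetic and can be checked with a few lines. The 576-byte figure is taken from the text as given (it is stated as approximate), and the helper name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Approximate per-primitive size quoted in the text, in bytes.
const std::size_t kBytesPerPrimitive = 576;

// Total primitive data size in MiB for a given primitive count, ignoring padding.
inline double PrimitiveDataMiB(std::size_t primitiveCount)
{
    assert(primitiveCount > 0);
    return static_cast<double>(primitiveCount * kBytesPerPrimitive) / (1024.0 * 1024.0);
}
```

For 10000 primitives this gives 5,760,000 bytes, which is roughly 5.49 MiB and matches the "about 5.5 MB" figure.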

// Engine\Source\Runtime\Renderer\Private\ScenePrivate.h

class FGPUScene

{

public:

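// Whether to update the data of all primitives. Usually used for debugging; it hurts performance at runtime.

bool bUpdateAllPrimitives;

// Indices of the primitives whose data needs updating.

TArray<int32> PrimitivesToUpdate;

// One bit per primitive. A bit set to 1 means the primitive at that index needs updating (and is also in PrimitivesToUpdate).

TBitArray<> PrimitivesMarkedToUpdate;

// GPU data structure holding the primitives. It can be a TextureBuffer or a Texture2D, but only one of them is created and used. On mobile this is controlled by the Mobile.UseGPUSceneTexture console variable.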

FRWBufferStructured PrimitiveBuffer;

FTextureRWBuffer2D PrimitiveTexture;

// Upload buffers

FScatterUploadBuffer PrimitiveUploadBuffer;

FScatterUploadBuffer PrimitiveUploadViewBuffer;

// Lightmap data

FGrowOnlySpanAllocator LightmapDataAllocator;

FRWBufferStructured LightmapDataBuffer;

FScatterUploadBuffer LightmapUploadBuffer;

};

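Code omitted.

To summarize, FScene::UpdateAllPrimitiveSceneInfos removes and adds primitives and updates all primitive data, including transform matrices, custom data, distance field data, and so on.

Once GPUScene's PrimitivesToUpdate and PrimitivesMarkedToUpdate have collected the indices of all primitives that need updating, the data is synchronized to the GPU after InitViews in FDeferredShadingSceneRenderer::Render: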

void FDeferredShadingSceneRenderer::Render(FRHICommandListImmediate& RHICmdList)

{

// Update the GPUScene data

Scene->UpdateAllPrimitiveSceneInfos(RHICmdList, true);

(......)

// Initialize the View data

bDoInitViewAftersPrepass = InitViews(RHICmdList, BasePassDepthStencilAccess, ILCTaskData, UpdateViewCustomDataEvents);

(......)

// Synchronize the CPU-side GPUScene to the GPU.

UpdateGPUScene(RHICmdList, *Scene); // UE 5.3 => Scene->GPUScene.Update(GraphBuilder, GetSceneUniforms(), *Scene, ExternalAccessQueue);

(......)

}

UpdateGPUScene() code omitted.

InitViews (split into BeginInitViews() & EndInitViews() in UE 5.3)
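The pairing of PrimitivesToUpdate (an index list) with PrimitivesMarkedToUpdate (one bit per primitive) is a common dirty-tracking pattern: the bit array prevents the same index from being queued twice. The class below is a minimal stand-alone sketch of that pattern using standard containers; it is not the engine's code, and `DirtyPrimitiveTracker` with its methods is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

class DirtyPrimitiveTracker
{
public:
    explicit DirtyPrimitiveTracker(std::size_t primitiveCount) : marked_(primitiveCount, false) {}

    // Queues a primitive for upload; repeated marks of the same index are ignored.
    void MarkDirty(int32_t index)
    {
        assert(index >= 0 && static_cast<std::size_t>(index) < marked_.size());
        if (!marked_[index])
        {
            marked_[index] = true;
            toUpdate_.push_back(index);
        }
    }

    // Hands the pending indices to the caller (for example an uploader) and resets the tracker.
    std::vector<int32_t> Consume()
    {
        for (int32_t index : toUpdate_)
        {
            marked_[index] = false;
        }
        std::vector<int32_t> pending;
        pending.swap(toUpdate_);
        return pending;
    }

private:
    std::vector<bool> marked_;       // one flag per primitive
    std::vector<int32_t> toUpdate_;  // indices in the order they were first marked
};
```

The list keeps the upload proportional to the number of changed primitives, while the bit array makes the duplicate check constant time.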

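- InitViews() (BeginInitViews())

  - PreVisibilityFrameSetup(): performs a large amount of initialization, for example static meshes, Groom, SkinCache, effects, TAA, ViewState, and so on.

  - Initializes the effects system (FXSystem).

  - ComputeViewVisibility(): its most important job is primitive visibility, including view frustum culling and occlusion culling, plus collecting dynamic mesh data and creating light information.

    - FPrimitiveSceneInfo::UpdateStaticMeshes: updates static mesh data.

    - ViewState::GetPrecomputedVisibilityData: fetches the precomputed visibility data.

    - FrustumCull: view frustum culling.

    - ComputeAndMarkRelevanceForViewParallel: computes and marks view relevance data in parallel.

    - GatherDynamicMeshElements: collects the view's visible dynamic elements; covered in the previous article.

    - SetupMeshPass: sets up the mesh pass data, converting FMeshBatch into FMeshDrawCommand; covered in the previous article.

  - CreateIndirectCapsuleShadows: creates capsule shadows.

  - UpdateSkyIrradianceGpuBuffer: updates the GPU data for sky environment lighting.

  - InitSkyAtmosphereForViews: initializes the atmosphere effect.

  - PostVisibilityFrameSetup(): culls lights against the view frustum so that lights outside the view, with a very small screen footprint, or with no intensity do not enter shader computation. It also handles decal sorting and adjusts the previous frame's RTs, fog, light shafts, and so on.

  - FViewInfo::InitRHIResources(): initializes each View's UniformBuffer.

  - SetupVolumetricFog: initializes and sets up volumetric fog.

  - FSceneRenderer::OnStartRender(): notifies the RHI that rendering has started, in order to initialize view-related data and resources.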
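Part of the TAA setup in PreVisibilityFrameSetup is choosing a sub-pixel jitter per frame, and the temporal-upscale path does this with `Halton(TemporalSampleIndex + 1, 2) - 0.5f` and base 3 for the other axis. The sketch below shows what such a Halton (radical inverse) function usually looks like. It is an assumption about the technique, not the engine's implementation, and the `Sketch` names are hypothetical.

```cpp
#include <cassert>

// Radical-inverse Halton value in [0, 1): mirrors the digits of `index`
// written in `base` around the decimal point.
inline float HaltonSketch(int index, int base)
{
    assert(index >= 0 && base >= 2);
    float result = 0.0f;
    const float invBase = 1.0f / static_cast<float>(base);
    float fraction = invBase;
    while (index > 0)
    {
        result += static_cast<float>(index % base) * fraction;
        index /= base;
        fraction *= invBase;
    }
    return result;
}

struct JitterSketch { float x; float y; };

// Jitter in [-0.5, 0.5) pixels, using bases 2 and 3 for the two axes.
inline JitterSketch TemporalJitterSketch(int temporalSampleIndex)
{
    JitterSketch jitter;
    jitter.x = HaltonSketch(temporalSampleIndex + 1, 2) - 0.5f;
    jitter.y = HaltonSketch(temporalSampleIndex + 1, 3) - 0.5f;
    return jitter;
}
```

Using coprime bases for the two axes keeps successive samples well spread over the pixel instead of falling on a diagonal.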

The following is the code from the older version:

// Engine\Source\Runtime\Renderer\Private\SceneVisibility.cpp

bool FDeferredShadingSceneRenderer::InitViews(FRHICommandListImmediate& RHICmdList, FExclusiveDepthStencil::Type BasePassDepthStencilAccess, struct FILCUpdatePrimTaskData& ILCTaskData, FGraphEventArray& UpdateViewCustomDataEvents)

{

// Pre-visibility frame setup.

PreVisibilityFrameSetup(RHICmdList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Effects system (FXSystem) initialization

{

if (Scene->FXSystem && Scene->FXSystem->RequiresEarlyViewUniformBuffer() && Views.IsValidIndex(0))

{

// Make sure the RHI resources of the first view are initialized.

Views[0].InitRHIResources();

Scene->FXSystem->PostInitViews(RHICmdList, Views[0].ViewUniformBuffer, Views[0].AllowGPUParticleUpdate() && !ViewFamily.EngineShowFlags.HitProxies);

}

}

// Create the visible mesh commands.

FViewVisibleCommandsPerView ViewCommandsPerView;

ViewCommandsPerView.SetNum(Views.Num());

// Compute visibility.

ComputeViewVisibility(RHICmdList, BasePassDepthStencilAccess, ViewCommandsPerView, DynamicIndexBufferForInitViews, DynamicVertexBufferForInitViews, DynamicReadBufferForInitViews);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Capsule shadows

CreateIndirectCapsuleShadows();

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Initialize the atmosphere effect.

if (ShouldRenderSkyAtmosphere(Scene, ViewFamily.EngineShowFlags))

{

InitSkyAtmosphereForViews(RHICmdList);

}

// Post-visibility frame setup.

PostVisibilityFrameSetup(ILCTaskData);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

(......)

// Whether the views may be initialized after the Prepass. Decided by GDoInitViewsLightingAfterPrepass, which can be set with the console command r.DoInitViewsLightingAfterPrepass.

bool bDoInitViewAftersPrepass = !!GDoInitViewsLightingAfterPrepass;

if (!bDoInitViewAftersPrepass)

{

InitViewsPossiblyAfterPrepass(RHICmdList, ILCTaskData, UpdateViewCustomDataEvents);

}

{

// Initialize the uniform buffers and RHI resources of all views.

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

if (View.ViewState)

{

if (!View.ViewState->ForwardLightingResources)

{

View.ViewState->ForwardLightingResources.Reset(new FForwardLightingViewResources());

}

View.ForwardLightingResources = View.ViewState->ForwardLightingResources.Get();

}

else

{

View.ForwardLightingResourcesStorage.Reset(new FForwardLightingViewResources());

View.ForwardLightingResources = View.ForwardLightingResourcesStorage.Get();

}

#if RHI_RAYTRACING

View.IESLightProfileResource = View.ViewState ? &View.ViewState->IESLightProfileResources : nullptr;

#endif

// Set the pre-exposure before initializing the constant buffers.

if (View.ViewState)

{

View.ViewState->UpdatePreExposure(View);

}

// Initialize the view's RHI resources.

View.InitRHIResources();

}

}

// Volumetric fog

SetupVolumetricFog();

// Send the start-of-rendering event.

OnStartRender(RHICmdList);

return bDoInitViewAftersPrepass;

}

The code above does not seem to contain much logic, but in fact much of it is spread across the important interfaces it calls. First, PreVisibilityFrameSetup:

// Engine\Source\Runtime\Renderer\Private\SceneVisibility.cpp

void FDeferredShadingSceneRenderer::PreVisibilityFrameSetup(FRHICommandListImmediate& RHICmdList)

{

// Possible stencil dither optimization approach

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

View.bAllowStencilDither = bDitheredLODTransitionsUseStencil;

}

FSceneRenderer::PreVisibilityFrameSetup(RHICmdList);

}

void FSceneRenderer::PreVisibilityFrameSetup(FRHICommandListImmediate& RHICmdList)

{

// Notify the RHI that scene rendering has started.

RHICmdList.BeginScene();

{

static auto CVar = IConsoleManager::Get().FindConsoleVariable(TEXT("r.DoLazyStaticMeshUpdate"));

const bool DoLazyStaticMeshUpdate = (CVar->GetInt() && !GIsEditor);

// Lazy static mesh update.

if (DoLazyStaticMeshUpdate)

{

QUICK_SCOPE_CYCLE_COUNTER(STAT_PreVisibilityFrameSetup_EvictionForLazyStaticMeshUpdate);

static int32 RollingRemoveIndex = 0;

static int32 RollingPassShrinkIndex = 0;

if (RollingRemoveIndex >= Scene->Primitives.Num())

{

RollingRemoveIndex = 0;

RollingPassShrinkIndex++;

if (RollingPassShrinkIndex >= UE_ARRAY_COUNT(Scene->CachedDrawLists))

{

RollingPassShrinkIndex = 0;

}

// Periodically shrink the SparseArray containing cached mesh draw commands which we are causing to be regenerated with UpdateStaticMeshes

Scene->CachedDrawLists[RollingPassShrinkIndex].MeshDrawCommands.Shrink();

}

const int32 NumRemovedPerFrame = 10;

TArray<FPrimitiveSceneInfo*, TInlineAllocator<10>> SceneInfos;

for (int32 NumRemoved = 0; NumRemoved < NumRemovedPerFrame && RollingRemoveIndex < Scene->Primitives.Num(); NumRemoved++, RollingRemoveIndex++)

{

SceneInfos.Add(Scene->Primitives[RollingRemoveIndex]);

}

FPrimitiveSceneInfo::UpdateStaticMeshes(RHICmdList, Scene, SceneInfos, false);

}

}

// Skin Cache transition

RunGPUSkinCacheTransition(RHICmdList, Scene, EGPUSkinCacheTransition::FrameSetup);

// Initialize Groom hair

if (IsHairStrandsEnable(Scene->GetShaderPlatform()) && Views.Num() > 0)

{

const EWorldType::Type WorldType = Views[0].Family->Scene->GetWorld()->WorldType;

const FShaderDrawDebugData* ShaderDrawData = &Views[0].ShaderDrawData;

auto ShaderMap = GetGlobalShaderMap(FeatureLevel);

RunHairStrandsInterpolation(RHICmdList, WorldType, Scene->GetGPUSkinCache(), ShaderDrawData, ShaderMap, EHairStrandsInterpolationType::SimulationStrands, nullptr);

}

// Effects system (FXSystem)

if (Scene->FXSystem && Views.IsValidIndex(0))

{

Scene->FXSystem->PreInitViews(RHICmdList, Views[0].AllowGPUParticleUpdate() && !ViewFamily.EngineShowFlags.HitProxies);

}

(......)

// Set up motion blur parameters (including TAA handling)

for(int32 ViewIndex = 0;ViewIndex < Views.Num();ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

FSceneViewState* ViewState = View.ViewState;

check(View.VerifyMembersChecks());

// Once per render increment the occlusion frame counter.

if (ViewState)

{

ViewState->OcclusionFrameCounter++;

}

// HighResScreenshot should get best results so we don't do the occlusion optimization based on the former frame

extern bool GIsHighResScreenshot;

const bool bIsHitTesting = ViewFamily.EngineShowFlags.HitProxies;

// Don't test occlusion queries in collision viewmode as they can be bigger then the rendering bounds.

const bool bCollisionView = ViewFamily.EngineShowFlags.CollisionVisibility || ViewFamily.EngineShowFlags.CollisionPawn;

if (GIsHighResScreenshot || !DoOcclusionQueries(FeatureLevel) || bIsHitTesting || bCollisionView)

{

View.bDisableQuerySubmissions = true;

View.bIgnoreExistingQueries = true;

}

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

// set up the screen area for occlusion

float NumPossiblePixels = SceneContext.UseDownsizedOcclusionQueries() && IsValidRef(SceneContext.GetSmallDepthSurface()) ?

(float)View.ViewRect.Width() / SceneContext.GetSmallColorDepthDownsampleFactor() * (float)View.ViewRect.Height() / SceneContext.GetSmallColorDepthDownsampleFactor() :

View.ViewRect.Width() * View.ViewRect.Height();

View.OneOverNumPossiblePixels = NumPossiblePixels > 0.0 ? 1.0f / NumPossiblePixels : 0.0f;

// Still need no jitter to be set for temporal feedback on SSR (it is enabled even when temporal AA is off).

check(View.TemporalJitterPixels.X == 0.0f);

check(View.TemporalJitterPixels.Y == 0.0f);


// Cache the projection matrix before AA is applied

View.ViewMatrices.SaveProjectionNoAAMatrix();

if (ViewState)

{

check(View.bStatePrevViewInfoIsReadOnly);

View.bStatePrevViewInfoIsReadOnly = ViewFamily.bWorldIsPaused || ViewFamily.EngineShowFlags.HitProxies || bFreezeTemporalHistories;

ViewState->SetupDistanceFieldTemporalOffset(ViewFamily);

if (!View.bStatePrevViewInfoIsReadOnly && !bFreezeTemporalSequences)

{

ViewState->FrameIndex++;

}

if (View.OverrideFrameIndexValue.IsSet())

{

ViewState->FrameIndex = View.OverrideFrameIndexValue.GetValue();

}

}

// Subpixel jitter for temporal AA

int32 CVarTemporalAASamplesValue = CVarTemporalAASamples.GetValueOnRenderThread();

bool bTemporalUpsampling = View.PrimaryScreenPercentageMethod == EPrimaryScreenPercentageMethod::TemporalUpscale;

// Apply a sub pixel offset to the view.

if (View.AntiAliasingMethod == AAM_TemporalAA && ViewState && (CVarTemporalAASamplesValue > 0 || bTemporalUpsampling) && View.bAllowTemporalJitter)

{

float EffectivePrimaryResolutionFraction = float(View.ViewRect.Width()) / float(View.GetSecondaryViewRectSize().X);

// Compute number of TAA samples.

int32 TemporalAASamples = CVarTemporalAASamplesValue;

{

if (Scene->GetFeatureLevel() < ERHIFeatureLevel::SM5)

{

// Only support 2 samples for mobile temporal AA.

TemporalAASamples = 2;

}

else if (bTemporalUpsampling)

{

// When doing TAA upsample with screen percentage < 100%, we need extra temporal samples to have a

// constant temporal sample density for final output pixels to avoid output pixel aligned converging issues.

TemporalAASamples = float(TemporalAASamples) * FMath::Max(1.f, 1.f / (EffectivePrimaryResolutionFraction * EffectivePrimaryResolutionFraction));

}

else if (CVarTemporalAASamplesValue == 5)

{

TemporalAASamples = 4;

}

TemporalAASamples = FMath::Clamp(TemporalAASamples, 1, 255);

}

// Compute the new sample index in the temporal sequence.

int32 TemporalSampleIndex = ViewState->TemporalAASampleIndex + 1;

if(TemporalSampleIndex >= TemporalAASamples || View.bCameraCut)

{

TemporalSampleIndex = 0;

}

// Updates view state.

if (!View.bStatePrevViewInfoIsReadOnly && !bFreezeTemporalSequences)

{

ViewState->TemporalAASampleIndex = TemporalSampleIndex;

ViewState->TemporalAASampleIndexUnclamped = ViewState->TemporalAASampleIndexUnclamped+1;

}

// Select and compute the sample offsets according to the TAA sampling strategy and parameters.

float SampleX, SampleY;

if (Scene->GetFeatureLevel() < ERHIFeatureLevel::SM5)

{

float SamplesX[] = { -8.0f/16.0f, 0.0/16.0f };

float SamplesY[] = { /* - */ 0.0f/16.0f, 8.0/16.0f };

check(TemporalAASamples == UE_ARRAY_COUNT(SamplesX));

SampleX = SamplesX[ TemporalSampleIndex ];

SampleY = SamplesY[ TemporalSampleIndex ];

}

else if (View.PrimaryScreenPercentageMethod == EPrimaryScreenPercentageMethod::TemporalUpscale)

{

// Uniformly distribute temporal jittering in [-.5; .5], because there is no longer any alignement of input and output pixels.

SampleX = Halton(TemporalSampleIndex + 1, 2) - 0.5f;

SampleY = Halton(TemporalSampleIndex + 1, 3) - 0.5f;

View.MaterialTextureMipBias = -(FMath::Max(-FMath::Log2(EffectivePrimaryResolutionFraction), 0.0f) ) + CVarMinAutomaticViewMipBiasOffset.GetValueOnRenderThread();

View.MaterialTextureMipBias = FMath::Max(View.MaterialTextureMipBias, CVarMinAutomaticViewMipBias.GetValueOnRenderThread());

}

else if( CVarTemporalAASamplesValue == 2 )

{

// 2xMSAA

// Pattern docs: http://msdn.microsoft.com/en-us/library/windows/desktop/ff476218(v=vs.85).aspx

// N.

// .S

float SamplesX[] = { -4.0f/16.0f, 4.0/16.0f };

float SamplesY[] = { -4.0f/16.0f, 4.0/16.0f };

check(TemporalAASamples == UE_ARRAY_COUNT(SamplesX));

SampleX = SamplesX[ TemporalSampleIndex ];

SampleY = SamplesY[ TemporalSampleIndex ];

}

else if( CVarTemporalAASamplesValue == 3 )

{

// 3xMSAA

// A..

// ..B

// .C.

// Rolling circle pattern (A,B,C).

float SamplesX[] = { -2.0f/3.0f, 2.0/3.0f, 0.0/3.0f };

float SamplesY[] = { -2.0f/3.0f, 0.0/3.0f, 2.0/3.0f };

check(TemporalAASamples == UE_ARRAY_COUNT(SamplesX));

SampleX = SamplesX[ TemporalSampleIndex ];

SampleY = SamplesY[ TemporalSampleIndex ];

}

else if( CVarTemporalAASamplesValue == 4 )

{

// 4xMSAA

// Pattern docs: http://msdn.microsoft.com/en-us/library/windows/desktop/ff476218(v=vs.85).aspx

// .N..

// ...E

// W...

// ..S.

// Rolling circle pattern (N,E,S,W).

float SamplesX[] = { -2.0f/16.0f, 6.0/16.0f, 2.0/16.0f, -6.0/16.0f };

float SamplesY[] = { -6.0f/16.0f, -2.0/16.0f, 6.0/16.0f, 2.0/16.0f };

check(TemporalAASamples == UE_ARRAY_COUNT(SamplesX));

SampleX = SamplesX[ TemporalSampleIndex ];

SampleY = SamplesY[ TemporalSampleIndex ];

}

else if( CVarTemporalAASamplesValue == 5 )

{

// Compressed 4 sample pattern on same vertical and horizontal line (less temporal flicker).

// Compressed 1/2 works better than correct 2/3 (reduced temporal flicker).

// . N .

// W . E

// . S .

// Rolling circle pattern (N,E,S,W).

float SamplesX[] = { 0.0f/2.0f, 1.0/2.0f, 0.0/2.0f, -1.0/2.0f };

float SamplesY[] = { -1.0f/2.0f, 0.0/2.0f, 1.0/2.0f, 0.0/2.0f };

check(TemporalAASamples == UE_ARRAY_COUNT(SamplesX));

SampleX = SamplesX[ TemporalSampleIndex ];

SampleY = SamplesY[ TemporalSampleIndex ];

}

else

{

float u1 = Halton( TemporalSampleIndex + 1, 2 );

float u2 = Halton( TemporalSampleIndex + 1, 3 );

// Generates samples in normal distribution

// exp( x^2 / Sigma^2 )

static auto CVar = IConsoleManager::Get().FindConsoleVariable(TEXT("r.TemporalAAFilterSize"));

float FilterSize = CVar->GetFloat();

// Scale distribution to set non-unit variance

// Variance = Sigma^2

float Sigma = 0.47f * FilterSize;

// Window to [-0.5, 0.5] output

// Without windowing we could generate samples far away on the infinite tails.

float OutWindow = 0.5f;

float InWindow = FMath::Exp( -0.5 * FMath::Square( OutWindow / Sigma ) );

// Box-Muller transform

float Theta = 2.0f * PI * u2;

float r = Sigma * FMath::Sqrt( -2.0f * FMath::Loge( (1.0f - u1) * InWindow + u1 ) );

SampleX = r * FMath::Cos( Theta );

SampleY = r * FMath::Sin( Theta );

}

View.TemporalJitterSequenceLength = TemporalAASamples;

View.TemporalJitterIndex = TemporalSampleIndex;

View.TemporalJitterPixels.X = SampleX;

View.TemporalJitterPixels.Y = SampleY;

View.ViewMatrices.HackAddTemporalAAProjectionJitter(FVector2D(SampleX * 2.0f / View.ViewRect.Width(), SampleY * -2.0f / View.ViewRect.Height()));

}

// Setup a new FPreviousViewInfo from current frame infos.

FPreviousViewInfo NewPrevViewInfo;

{

NewPrevViewInfo.ViewMatrices = View.ViewMatrices;

}

// Initialize the view state.

if ( ViewState )

{

// update previous frame matrices in case world origin was rebased on this frame

if (!View.OriginOffsetThisFrame.IsZero())

{

ViewState->PrevFrameViewInfo.ViewMatrices.ApplyWorldOffset(View.OriginOffsetThisFrame);

}

// determine if we are initializing or we should reset the persistent state

const float DeltaTime = View.Family->CurrentRealTime - ViewState->LastRenderTime;

const bool bFirstFrameOrTimeWasReset = DeltaTime < -0.0001f || ViewState->LastRenderTime < 0.0001f;

const bool bIsLargeCameraMovement = IsLargeCameraMovement(

View,

ViewState->PrevFrameViewInfo.ViewMatrices.GetViewMatrix(),

ViewState->PrevFrameViewInfo.ViewMatrices.GetViewOrigin(),

45.0f, 10000.0f);

const bool bResetCamera = (bFirstFrameOrTimeWasReset || View.bCameraCut || bIsLargeCameraMovement || View.bForceCameraVisibilityReset);

(......)

if (bResetCamera)

{

View.PrevViewInfo = NewPrevViewInfo;

// PT: If the motion blur shader is the last shader in the post-processing chain then it is the one that is

// adjusting for the viewport offset. So it is always required and we can't just disable the work the

// shader does. The correct fix would be to disable the effect when we don't need it and to properly mark

// the uber-postprocessing effect as the last effect in the chain.

View.bPrevTransformsReset = true;

}

else

{

View.PrevViewInfo = ViewState->PrevFrameViewInfo;

}

// Replace previous view info of the view state with this frame, clearing out references over render target.

if (!View.bStatePrevViewInfoIsReadOnly)

{

ViewState->PrevFrameViewInfo = NewPrevViewInfo;

}

// If the view has a previous view transform, then overwrite the previous view info for the _current_ frame.

if (View.PreviousViewTransform.IsSet())

{

// Note that we must ensure this transform ends up in ViewState->PrevFrameViewInfo else it will be used to calculate the next frame's motion vectors as well

View.PrevViewInfo.ViewMatrices.UpdateViewMatrix(View.PreviousViewTransform->GetTranslation(), View.PreviousViewTransform->GetRotation().Rotator());

}

// detect conditions where we should reset occlusion queries

if (bFirstFrameOrTimeWasReset ||

ViewState->LastRenderTime + GEngine->PrimitiveProbablyVisibleTime < View.Family->CurrentRealTime ||

View.bCameraCut ||

View.bForceCameraVisibilityReset ||

IsLargeCameraMovement(

View,

ViewState->PrevViewMatrixForOcclusionQuery,

ViewState->PrevViewOriginForOcclusionQuery,

GEngine->CameraRotationThreshold, GEngine->CameraTranslationThreshold))

{

View.bIgnoreExistingQueries = true;

View.bDisableDistanceBasedFadeTransitions = true;

}

// Turn on/off round-robin occlusion querying in the ViewState

static const auto CVarRROCC = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("vr.RoundRobinOcclusion"));

const bool bEnableRoundRobin = CVarRROCC ? (CVarRROCC->GetValueOnAnyThread() != false) : false;

if (bEnableRoundRobin != ViewState->IsRoundRobinEnabled())

{

ViewState->UpdateRoundRobin(bEnableRoundRobin);

View.bIgnoreExistingQueries = true;

}

ViewState->PrevViewMatrixForOcclusionQuery = View.ViewMatrices.GetViewMatrix();

ViewState->PrevViewOriginForOcclusionQuery = View.ViewMatrices.GetViewOrigin();

(......)

// we don't use DeltaTime as it can be 0 (in editor) and is computed by subtracting floats (loses precision over time)

// Clamp DeltaWorldTime to reasonable values for the purposes of motion blur, things like TimeDilation can make it very small

// Offline renders always control the timestep for the view and always need the timescales calculated.

if (!ViewFamily.bWorldIsPaused || View.bIsOfflineRender)

{

ViewState->UpdateMotionBlurTimeScale(View);

}

ViewState->PrevFrameNumber = ViewState->PendingPrevFrameNumber;

ViewState->PendingPrevFrameNumber = View.Family->FrameNumber;

// This finishes the update of view state

ViewState->UpdateLastRenderTime(*View.Family);

ViewState->UpdateTemporalLODTransition(View);

}

else

{

// Without a viewstate, we just assume that camera has not moved.

View.PrevViewInfo = NewPrevViewInfo;

}

}

// Set up the global dither parameters and the fade-transition uniform buffers.

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

FDitherUniformShaderParameters DitherUniformShaderParameters;

DitherUniformShaderParameters.LODFactor = View.GetTemporalLODTransition();

View.DitherFadeOutUniformBuffer = FDitherUniformBufferRef::CreateUniformBufferImmediate(DitherUniformShaderParameters, UniformBuffer_SingleFrame);

DitherUniformShaderParameters.LODFactor = View.GetTemporalLODTransition() - 1.0f;

View.DitherFadeInUniformBuffer = FDitherUniformBufferRef::CreateUniformBufferImmediate(DitherUniformShaderParameters, UniformBuffer_SingleFrame);

}

}
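The final `else` branch of the jitter setup in the listing above can be tried out in isolation. The following is a minimal standalone sketch, not engine code: `HaltonSketch`, `JitterSketch`, `TemporalJitterSketch`, and `JitterToClipSpace` are hypothetical names, `std::` math stands in for `FMath`, and the Halton routine is a common radical-inverse formulation that is only assumed to behave like the engine's `Halton`.

```cpp
#include <cmath>

// Radical-inverse (Halton) value for a 1-based index in the given base.
// Assumption: written for illustration; not copied from the engine.
float HaltonSketch(int index, int base)
{
    float result = 0.0f;
    const float invBase = 1.0f / static_cast<float>(base);
    float fraction = invBase;
    while (index > 0)
    {
        result += static_cast<float>(index % base) * fraction;
        index /= base;
        fraction *= invBase;
    }
    return result;
}

struct JitterSketch { float x; float y; };

// Windowed Box-Muller: maps two low-discrepancy numbers to a Gaussian-like
// sub-pixel offset whose radius never exceeds outWindow (0.5 pixel).
JitterSketch TemporalJitterSketch(int sampleIndex, float filterSize)
{
    const float kPi = 3.14159265358979f;
    const float u1 = HaltonSketch(sampleIndex + 1, 2);
    const float u2 = HaltonSketch(sampleIndex + 1, 3);

    const float sigma = 0.47f * filterSize;
    const float outWindow = 0.5f;
    const float ratio = outWindow / sigma;
    const float inWindow = std::exp(-0.5f * ratio * ratio);

    const float theta = 2.0f * kPi * u2;
    const float r = sigma * std::sqrt(-2.0f * std::log((1.0f - u1) * inWindow + u1));
    return { r * std::cos(theta), r * std::sin(theta) };
}

// Converts a pixel-space jitter into the clip-space offset applied to the projection:
// one pixel spans 2/width in NDC, and the Y axis is flipped.
JitterSketch JitterToClipSpace(JitterSketch pixels, float viewWidth, float viewHeight)
{
    return { pixels.x * 2.0f / viewWidth, pixels.y * -2.0f / viewHeight };
}
```

Because the logarithm's argument is remapped into `[inWindow, 1]`, the radius `r` stays within half a pixel, which is the purpose of the windowing step mentioned in the listing's comments.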

As shown above, PreVisibilityFrameSetup performs a large amount of initialization work, covering static meshes, Groom, SkinCache, effects, TAA, ViewState, and more. Next, let's analyze ComputeViewVisibility:

// Engine\Source\Runtime\Renderer\Private\SceneVisibility.cpp

void FSceneRenderer::ComputeViewVisibility(FRHICommandListImmediate& RHICmdList, FExclusiveDepthStencil::Type BasePassDepthStencilAccess, FViewVisibleCommandsPerView& ViewCommandsPerView, FGlobalDynamicIndexBuffer& DynamicIndexBuffer, FGlobalDynamicVertexBuffer& DynamicVertexBuffer, FGlobalDynamicReadBuffer& DynamicReadBuffer)

{

// Allocate the list of visible light infos.

if (Scene->Lights.GetMaxIndex() > 0)

{

VisibleLightInfos.AddZeroed(Scene->Lights.GetMaxIndex());

}

int32 NumPrimitives = Scene->Primitives.Num();

float CurrentRealTime = ViewFamily.CurrentRealTime;

FPrimitiveViewMasks HasDynamicMeshElementsMasks;

HasDynamicMeshElementsMasks.AddZeroed(NumPrimitives);

FPrimitiveViewMasks HasViewCustomDataMasks;

HasViewCustomDataMasks.AddZeroed(NumPrimitives);

FPrimitiveViewMasks HasDynamicEditorMeshElementsMasks;

if (GIsEditor)

{

HasDynamicEditorMeshElementsMasks.AddZeroed(NumPrimitives);

}

const bool bIsInstancedStereo = (Views.Num() > 0) ? (Views[0].IsInstancedStereoPass() || Views[0].bIsMobileMultiViewEnabled) : false;

UpdateReflectionSceneData(Scene);

// Update static meshes that skip the visibility check.

{

Scene->ConditionalMarkStaticMeshElementsForUpdate();

TArray<FPrimitiveSceneInfo*> UpdatedSceneInfos;

for (TSet<FPrimitiveSceneInfo*>::TIterator It(Scene->PrimitivesNeedingStaticMeshUpdateWithoutVisibilityCheck); It; ++It)

{

FPrimitiveSceneInfo* Primitive = *It;

if (Primitive->NeedsUpdateStaticMeshes())

{

UpdatedSceneInfos.Add(Primitive);

}

}

if (UpdatedSceneInfos.Num() > 0)

{

FPrimitiveSceneInfo::UpdateStaticMeshes(RHICmdList, Scene, UpdatedSceneInfos);

}

Scene->PrimitivesNeedingStaticMeshUpdateWithoutVisibilityCheck.Reset();

}

// Initialize the per-view data for all views.

uint8 ViewBit = 0x1;

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ++ViewIndex, ViewBit <<= 1)

{

STAT(NumProcessedPrimitives += NumPrimitives);

FViewInfo& View = Views[ViewIndex];

FViewCommands& ViewCommands = ViewCommandsPerView[ViewIndex];

FSceneViewState* ViewState = (FSceneViewState*)View.State;

// Allocate the view's visibility maps.

View.PrimitiveVisibilityMap.Init(false,Scene->Primitives.Num());

// we don't initialized as we overwrite the whole array (in GatherDynamicMeshElements)

View.DynamicMeshEndIndices.SetNumUninitialized(Scene->Primitives.Num());

View.PrimitiveDefinitelyUnoccludedMap.Init(false,Scene->Primitives.Num());

View.PotentiallyFadingPrimitiveMap.Init(false,Scene->Primitives.Num());

View.PrimitiveFadeUniformBuffers.AddZeroed(Scene->Primitives.Num());

View.PrimitiveFadeUniformBufferMap.Init(false, Scene->Primitives.Num());

View.StaticMeshVisibilityMap.Init(false,Scene->StaticMeshes.GetMaxIndex());

View.StaticMeshFadeOutDitheredLODMap.Init(false,Scene->StaticMeshes.GetMaxIndex());

View.StaticMeshFadeInDitheredLODMap.Init(false,Scene->StaticMeshes.GetMaxIndex());

View.StaticMeshBatchVisibility.AddZeroed(Scene->StaticMeshBatchVisibility.GetMaxIndex());

View.PrimitivesLODMask.Init(FLODMask(), Scene->Primitives.Num());

View.PrimitivesCustomData.Init(nullptr, Scene->Primitives.Num());

// We must reserve to prevent realloc otherwise it will cause memory leak if we Execute In Parallel

const bool WillExecuteInParallel = FApp::ShouldUseThreadingForPerformance() && CVarParallelInitViews.GetValueOnRenderThread() > 0;

View.PrimitiveCustomDataMemStack.Reserve(WillExecuteInParallel ? FMath::CeilToInt(((float)View.PrimitiveVisibilityMap.Num() / (float)FRelevancePrimSet<int32>::MaxInputPrims)) + 1 : 1);

View.AllocateCustomDataMemStack();

View.VisibleLightInfos.Empty(Scene->Lights.GetMaxIndex());

View.DirtyIndirectLightingCacheBufferPrimitives.Reserve(Scene->Primitives.Num());

// Create the per-view light infos.

for(int32 LightIndex = 0;LightIndex < Scene->Lights.GetMaxIndex();LightIndex++)

{

if( LightIndex+2 < Scene->Lights.GetMaxIndex() )

{

if (LightIndex > 2)

{

FLUSH_CACHE_LINE(&View.VisibleLightInfos(LightIndex-2));

}

}

new(View.VisibleLightInfos) FVisibleLightViewInfo();

}

View.PrimitiveViewRelevanceMap.Empty(Scene->Primitives.Num());

View.PrimitiveViewRelevanceMap.AddZeroed(Scene->Primitives.Num());

const bool bIsParent = ViewState && ViewState->IsViewParent();

if ( bIsParent )

{

ViewState->ParentPrimitives.Empty();

}

if (ViewState)

{

// Fetch the precomputed visibility data for this view (decompressed on demand).

View.PrecomputedVisibilityData = ViewState->GetPrecomputedVisibilityData(View, Scene);

}

else

{

View.PrecomputedVisibilityData = NULL;

}

if (View.PrecomputedVisibilityData)

{

bUsedPrecomputedVisibility = true;

}

bool bNeedsFrustumCulling = true;

#if !(UE_BUILD_SHIPPING || UE_BUILD_TEST)

if( ViewState )

{

// Visibility is frozen.

if(ViewState->bIsFrozen)

{

bNeedsFrustumCulling = false;

for (FSceneBitArray::FIterator BitIt(View.PrimitiveVisibilityMap); BitIt; ++BitIt)

{

if (ViewState->FrozenPrimitives.Contains(Scene->PrimitiveComponentIds[BitIt.GetIndex()]))

{

BitIt.GetValue() = true;

}

}

}

}

#endif

// Frustum culling.

if (bNeedsFrustumCulling)

{

// Update HLOD transition/visibility states to allow use during distance culling

FLODSceneTree& HLODTree = Scene->SceneLODHierarchy;

if (HLODTree.IsActive())

{

QUICK_SCOPE_CYCLE_COUNTER(STAT_ViewVisibilityTime_HLODUpdate);

HLODTree.UpdateVisibilityStates(View);

}

else

{

HLODTree.ClearVisibilityState(View);

}

int32 NumCulledPrimitivesForView;

const bool bUseFastIntersect = (View.ViewFrustum.PermutedPlanes.Num() == 8) && CVarUseFastIntersect.GetValueOnRenderThread();

if (View.CustomVisibilityQuery && View.CustomVisibilityQuery->Prepare())

{

if (CVarAlsoUseSphereForFrustumCull.GetValueOnRenderThread())

{

NumCulledPrimitivesForView = bUseFastIntersect ? FrustumCull<true, true, true>(Scene, View) : FrustumCull<true, true, false>(Scene, View);

}

else

{

NumCulledPrimitivesForView = bUseFastIntersect ? FrustumCull<true, false, true>(Scene, View) : FrustumCull<true, false, false>(Scene, View);

}

}

else

{

if (CVarAlsoUseSphereForFrustumCull.GetValueOnRenderThread())

{

NumCulledPrimitivesForView = bUseFastIntersect ? FrustumCull<false, true, true>(Scene, View) : FrustumCull<false, true, false>(Scene, View);

}

else

{

NumCulledPrimitivesForView = bUseFastIntersect ? FrustumCull<false, false, true>(Scene, View) : FrustumCull<false, false, false>(Scene, View);

}

}

STAT(NumCulledPrimitives += NumCulledPrimitivesForView);

UpdatePrimitiveFading(Scene, View);

}

// Handle hidden primitives.

if (View.HiddenPrimitives.Num())

{

for (FSceneSetBitIterator BitIt(View.PrimitiveVisibilityMap); BitIt; ++BitIt)

{

if (View.HiddenPrimitives.Contains(Scene->PrimitiveComponentIds[BitIt.GetIndex()]))

{

View.PrimitiveVisibilityMap.AccessCorrespondingBit(BitIt) = false;

}

}

}

(......)

// Handle static-scene-only views.

if (View.bStaticSceneOnly)

{

for (FSceneSetBitIterator BitIt(View.PrimitiveVisibilityMap); BitIt; ++BitIt)

{

// Reflection captures should only capture objects that won't move, since reflection captures won't update at runtime

if (!Scene->Primitives[BitIt.GetIndex()]->Proxy->HasStaticLighting())

{

View.PrimitiveVisibilityMap.AccessCorrespondingBit(BitIt) = false;

}

}

}

(......)

// Outside wireframe mode, run occlusion culling.

if (!View.Family->EngineShowFlags.Wireframe)

{

int32 NumOccludedPrimitivesInView = OcclusionCull(RHICmdList, Scene, View, DynamicVertexBuffer);

STAT(NumOccludedPrimitives += NumOccludedPrimitivesInView);

}

// Update static meshes of primitives that passed the visibility check.

{

TArray<FPrimitiveSceneInfo*> AddedSceneInfos;

for (TConstDualSetBitIterator<SceneRenderingBitArrayAllocator, FDefaultBitArrayAllocator> BitIt(View.PrimitiveVisibilityMap, Scene->PrimitivesNeedingStaticMeshUpdate); BitIt; ++BitIt)

{

int32 PrimitiveIndex = BitIt.GetIndex();

AddedSceneInfos.Add(Scene->Primitives[PrimitiveIndex]);

}

if (AddedSceneInfos.Num() > 0)

{

FPrimitiveSceneInfo::UpdateStaticMeshes(RHICmdList, Scene, AddedSceneInfos);

}

}

(......)

}

(......)

// Gather the dynamic mesh elements of all views (covered in detail in the previous article).

{

GatherDynamicMeshElements(Views, Scene, ViewFamily, DynamicIndexBuffer, DynamicVertexBuffer, DynamicReadBuffer,

HasDynamicMeshElementsMasks, HasDynamicEditorMeshElementsMasks, HasViewCustomDataMasks, MeshCollector);

}

// Set up the mesh pass data for each view.

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

if (!View.ShouldRenderView())

{

continue;

}

FViewCommands& ViewCommands = ViewCommandsPerView[ViewIndex];

SetupMeshPass(View, BasePassDepthStencilAccess, ViewCommands);

}

}
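The frustum culling step that fills `PrimitiveVisibilityMap` can be illustrated with a small standalone sketch. This is not the engine's `FrustumCull` template: the types `PlaneSketch` and `SphereSketch`, the function names, and the outward-facing plane convention are all assumptions made for this example, and only bounding spheres are tested (the engine also tests boxes).

```cpp
#include <cstddef>
#include <vector>

// Outward-facing plane: a point p lies outside when n.p - d > 0 (assumed convention).
struct PlaneSketch { float nx, ny, nz, d; };
struct SphereSketch { float x, y, z, radius; };

// A sphere is rejected as soon as it lies entirely outside any single plane.
bool SphereTouchesFrustum(const std::vector<PlaneSketch>& planes, const SphereSketch& s)
{
    for (const PlaneSketch& p : planes)
    {
        const float signedDistance = p.nx * s.x + p.ny * s.y + p.nz * s.z - p.d;
        if (signedDistance > s.radius)
        {
            return false;
        }
    }
    return true;
}

// Builds a per-primitive visibility bitmap and counts the culled primitives,
// analogous in spirit to the visibility map and the culled-primitive counter in the listing.
std::vector<bool> FrustumCullSketch(const std::vector<PlaneSketch>& planes,
                                    const std::vector<SphereSketch>& bounds,
                                    int& outNumCulled)
{
    std::vector<bool> visibilityMap(bounds.size(), false);
    outNumCulled = 0;
    for (std::size_t i = 0; i < bounds.size(); ++i)
    {
        const bool visible = SphereTouchesFrustum(planes, bounds[i]);
        visibilityMap[i] = visible;
        if (!visible)
        {
            ++outNumCulled;
        }
    }
    return visibilityMap;
}
```

Later stages (hidden primitives, static-scene-only filtering, occlusion culling) then only ever clear bits in this map, which is why the listing iterates over set bits when applying them.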

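The most important job of ComputeViewVisibility is determining primitive visibility, which includes frustum culling and occlusion culling, along with gathering dynamic mesh elements and creating light infos. Next, a brief look at how precomputed visibility data is retrieved: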

// Engine\Source\Runtime\Renderer\Private\SceneOcclusion.cpp

const uint8* FSceneViewState::GetPrecomputedVisibilityData(FViewInfo& View, const FScene* Scene)

{

const uint8* PrecomputedVisibilityData = NULL;

if (Scene->PrecomputedVisibilityHandler && GAllowPrecomputedVisibility && View.Family->EngineShowFlags.PrecomputedVisibility)

{

const FPrecomputedVisibilityHandler& Handler = *Scene->PrecomputedVisibilityHandler;

FViewElementPDI VisibilityCellsPDI(&View, nullptr, nullptr);

// Draw the precomputed visibility cells for debugging.

if ((GShowPrecomputedVisibilityCells || View.Family->EngineShowFlags.PrecomputedVisibilityCells) && !GShowRelevantPrecomputedVisibilityCells)

{

for (int32 BucketIndex = 0; BucketIndex < Handler.PrecomputedVisibilityCellBuckets.Num(); BucketIndex++)

{

for (int32 CellIndex = 0; CellIndex < Handler.PrecomputedVisibilityCellBuckets[BucketIndex].Cells.Num(); CellIndex++)

{

const FPrecomputedVisibilityCell& CurrentCell = Handler.PrecomputedVisibilityCellBuckets[BucketIndex].Cells[CellIndex];

// Construct the cell's bounds

const FBox CellBounds(CurrentCell.Min, CurrentCell.Min + FVector(Handler.PrecomputedVisibilityCellSizeXY, Handler.PrecomputedVisibilityCellSizeXY, Handler.PrecomputedVisibilityCellSizeZ));

if (View.ViewFrustum.IntersectBox(CellBounds.GetCenter(), CellBounds.GetExtent()))

{

DrawWireBox(&VisibilityCellsPDI, CellBounds, FColor(50, 50, 255), SDPG_World);

}

}

}

}

// Compute the hash and bucket index to reduce search time.

const float FloatOffsetX = (View.ViewMatrices.GetViewOrigin().X - Handler.PrecomputedVisibilityCellBucketOriginXY.X) / Handler.PrecomputedVisibilityCellSizeXY;

// FMath::TruncToInt rounds toward 0, we want to always round down

const int32 BucketIndexX = FMath::Abs((FMath::TruncToInt(FloatOffsetX) - (FloatOffsetX < 0.0f ? 1 : 0)) / Handler.PrecomputedVisibilityCellBucketSizeXY % Handler.PrecomputedVisibilityNumCellBuckets);

const float FloatOffsetY = (View.ViewMatrices.GetViewOrigin().Y -Handler.PrecomputedVisibilityCellBucketOriginXY.Y) / Handler.PrecomputedVisibilityCellSizeXY;

const int32 BucketIndexY = FMath::Abs((FMath::TruncToInt(FloatOffsetY) - (FloatOffsetY < 0.0f ? 1 : 0)) / Handler.PrecomputedVisibilityCellBucketSizeXY % Handler.PrecomputedVisibilityNumCellBuckets);

const int32 PrecomputedVisibilityBucketIndex = BucketIndexY * Handler.PrecomputedVisibilityCellBucketSizeXY + BucketIndexX;

// Draw the bounding boxes associated with the visible objects.

const FPrecomputedVisibilityBucket& CurrentBucket = Handler.PrecomputedVisibilityCellBuckets[PrecomputedVisibilityBucketIndex];

for (int32 CellIndex = 0; CellIndex < CurrentBucket.Cells.Num(); CellIndex++)

{

const FPrecomputedVisibilityCell& CurrentCell = CurrentBucket.Cells[CellIndex];

// Build the cell's bounding box.

const FBox CellBounds(CurrentCell.Min, CurrentCell.Min + FVector(Handler.PrecomputedVisibilityCellSizeXY, Handler.PrecomputedVisibilityCellSizeXY, Handler.PrecomputedVisibilityCellSizeZ));

// Check if ViewOrigin is inside the current cell

if (CellBounds.IsInside(View.ViewMatrices.GetViewOrigin()))

{

// Check whether the cached data can be reused.

if (CachedVisibilityChunk

&& CachedVisibilityHandlerId == Scene->PrecomputedVisibilityHandler->GetId()

&& CachedVisibilityBucketIndex == PrecomputedVisibilityBucketIndex

&& CachedVisibilityChunkIndex == CurrentCell.ChunkIndex)

{

PrecomputedVisibilityData = &(*CachedVisibilityChunk)[CurrentCell.DataOffset];

}

else

{

const FCompressedVisibilityChunk& CompressedChunk = Handler.PrecomputedVisibilityCellBuckets[PrecomputedVisibilityBucketIndex].CellDataChunks[CurrentCell.ChunkIndex];

CachedVisibilityBucketIndex = PrecomputedVisibilityBucketIndex;

CachedVisibilityChunkIndex = CurrentCell.ChunkIndex;

CachedVisibilityHandlerId = Scene->PrecomputedVisibilityHandler->GetId();

// Decompress the visibility data.

if (CompressedChunk.bCompressed)

{

// Decompress the needed visibility data chunk

DecompressedVisibilityChunk.Reset();

DecompressedVisibilityChunk.AddUninitialized(CompressedChunk.UncompressedSize);

verify(FCompression::UncompressMemory(

NAME_Zlib,

DecompressedVisibilityChunk.GetData(),

CompressedChunk.UncompressedSize,

CompressedChunk.Data.GetData(),

CompressedChunk.Data.Num()));

CachedVisibilityChunk = &DecompressedVisibilityChunk;

}

else

{

CachedVisibilityChunk = &CompressedChunk.Data;

}

// Return a pointer to the cell containing ViewOrigin's decompressed visibility data

PrecomputedVisibilityData = &(*CachedVisibilityChunk)[CurrentCell.DataOffset];

}

(......)

}

(......)

}

return PrecomputedVisibilityData;

}

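As the code shows, visibility determination can draw debug information, such as the size of the bounding box each object actually uses for culling. The culling result can also be frozen, which makes it easier to inspect how effective the culling is.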

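Visibility determination includes precomputed visibility, frustum culling, and occlusion culling, and occlusion culling alone touches many topics (drawing, readback, data compression and decompression, storage layout, cross-thread hand-off, inter-frame interaction, visibility determination, HLOD, and so on). This article therefore gives only a brief overview; the detailed mechanisms and processes will be analyzed in depth in a later series on rendering optimization.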
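The bucket lookup in GetPrecomputedVisibilityData boils down to a rounded-down division followed by a wrap into the bucket grid. Below is a minimal standalone sketch of that arithmetic; `BucketGridSketch` and the function names are hypothetical stand-ins for the handler fields, and the final index formula simply mirrors the listing.

```cpp
#include <cstdlib>

// Truncate toward zero, then subtract one for negative inputs, as the listing does.
// Note: for negative whole numbers (e.g. -2.0f) this yields one less than floor (-3).
int RoundDownSketch(float value)
{
    return static_cast<int>(value) - (value < 0.0f ? 1 : 0);
}

struct BucketGridSketch
{
    float originX;
    float originY;
    float cellSizeXY;   // world-space size of one visibility cell
    int bucketSizeXY;   // number of cells per bucket along one axis
    int numBuckets;     // modulus used to wrap the bucket coordinate
};

// One axis of the bucket coordinate: cell offset -> bucket -> wrapped, non-negative.
int BucketCoordSketch(float viewPos, float origin, const BucketGridSketch& grid)
{
    const float offsetInCells = (viewPos - origin) / grid.cellSizeXY;
    return std::abs(RoundDownSketch(offsetInCells) / grid.bucketSizeXY % grid.numBuckets);
}

// Flattened bucket index, combining both axes the same way as the listing.
int BucketIndexSketch(float viewX, float viewY, const BucketGridSketch& grid)
{
    const int bucketX = BucketCoordSketch(viewX, grid.originX, grid);
    const int bucketY = BucketCoordSketch(viewY, grid.originY, grid);
    return bucketY * grid.bucketSizeXY + bucketX;
}
```

Only the cells of the selected bucket then need to be scanned for the one that contains the view origin, which is what keeps the per-frame lookup cheap.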

Next, let's look at PostVisibilityFrameSetup inside InitViews:

// Engine\Source\Runtime\Renderer\Private\SceneVisibility.cpp

void FSceneRenderer::PostVisibilityFrameSetup(FILCUpdatePrimTaskData& OutILCTaskData)

{

// Sort decals and trim the history render targets.

{

QUICK_SCOPE_CYCLE_COUNTER(STAT_PostVisibilityFrameSetup_Sort);

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

View.MeshDecalBatches.Sort();

if (View.State)

{

((FSceneViewState*)View.State)->TrimHistoryRenderTargets(Scene);

}

}

}

(......)

// Handle light visibility.

{

QUICK_SCOPE_CYCLE_COUNTER(STAT_PostVisibilityFrameSetup_Light_Visibility);

// Iterate over all lights and test visibility against each view's frustum (a light may be visible in some views but not in others).

for(TSparseArray<FLightSceneInfoCompact>::TConstIterator LightIt(Scene->Lights);LightIt;++LightIt)

{

const FLightSceneInfoCompact& LightSceneInfoCompact = *LightIt;

const FLightSceneInfo* LightSceneInfo = LightSceneInfoCompact.LightSceneInfo;

// Cull the light against the camera frustum of each view.

for(int32 ViewIndex = 0;ViewIndex < Views.Num();ViewIndex++)

{

const FLightSceneProxy* Proxy = LightSceneInfo->Proxy;

FViewInfo& View = Views[ViewIndex];

FVisibleLightViewInfo& VisibleLightViewInfo = View.VisibleLightInfos[LightIt.GetIndex()];

// Directional lights need no culling; only local lights are culled.

if( Proxy->GetLightType() == LightType_Point ||

Proxy->GetLightType() == LightType_Spot ||

Proxy->GetLightType() == LightType_Rect )

{

FSphere const& BoundingSphere = Proxy->GetBoundingSphere();

// Test whether the view frustum intersects the light's bounding sphere.

if (View.ViewFrustum.IntersectSphere(BoundingSphere.Center, BoundingSphere.W))

{

// Perspective views also account for the maximum distance, culling lights that are too far from the view origin.

if (View.IsPerspectiveProjection())

{

FSphere Bounds = Proxy->GetBoundingSphere();

float DistanceSquared = (Bounds.Center - View.ViewMatrices.GetViewOrigin()).SizeSquared();

float MaxDistSquared = Proxy->GetMaxDrawDistance() * Proxy->GetMaxDrawDistance() * GLightMaxDrawDistanceScale * GLightMaxDrawDistanceScale;

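// Takes the light radius, the view's LOD factor, and the minimum light screen radius into account to decide whether the light should be drawn, culling distant lights that cover very little of the screen.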

const bool bDrawLight = (FMath::Square(FMath::Min(0.0002f, GMinScreenRadiusForLights / Bounds.W) * View.LODDistanceFactor) * DistanceSquared < 1.0f)

&& (MaxDistSquared == 0 || DistanceSquared < MaxDistSquared);

VisibleLightViewInfo.bInViewFrustum = bDrawLight;

}

else

{

VisibleLightViewInfo.bInViewFrustum = true;

}

}

}

else

{

// Directional lights are always visible.

VisibleLightViewInfo.bInViewFrustum = true;

(......)

}

(......)

}
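The distance-based part of the light culling above can be isolated into a small predicate. The sketch below is a standalone illustration under stated assumptions: `LocalLightSketch` and `ShouldDrawLightSketch` are hypothetical names, the sphere-versus-frustum test is assumed to have already passed, and the global tuning values are passed in as plain parameters.

```cpp
#include <algorithm>

struct LocalLightSketch
{
    float centerX, centerY, centerZ;
    float radius;           // bounding sphere radius (Bounds.W in the listing)
    float maxDrawDistance;  // 0 means "no limit"
};

// Returns true when a local light that already intersects the frustum should be drawn
// in a perspective view, mirroring the bDrawLight expression in the listing.
bool ShouldDrawLightSketch(const LocalLightSketch& light,
                           float viewX, float viewY, float viewZ,
                           float lodDistanceFactor,
                           float minScreenRadiusForLights,
                           float maxDrawDistanceScale)
{
    const float dx = light.centerX - viewX;
    const float dy = light.centerY - viewY;
    const float dz = light.centerZ - viewZ;
    const float distanceSquared = dx * dx + dy * dy + dz * dz;

    const float scaledMaxDistance = light.maxDrawDistance * maxDrawDistanceScale;
    const float maxDistSquared = scaledMaxDistance * scaledMaxDistance;

    // Screen-size criterion: small, distant lights fail this test.
    const float k = std::min(0.0002f, minScreenRadiusForLights / light.radius) * lodDistanceFactor;
    const bool largeEnoughOnScreen = k * k * distanceSquared < 1.0f;

    // Max draw distance criterion: skipped when no limit is set.
    const bool withinMaxDistance = (maxDistSquared == 0.0f) || (distanceSquared < maxDistSquared);

    return largeEnoughOnScreen && withinMaxDistance;
}
```

Comparing squared distances avoids a square root per light per view, which matters because this test runs for every local light in every view.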

At the end of InitViews, each view's RHI resources are initialized and the RHICommandList is notified that rendering has started. First, FViewInfo::InitRHIResources:

// Engine\Source\Runtime\Renderer\Private\SceneRendering.cpp

void FViewInfo::InitRHIResources()

{

FBox VolumeBounds[TVC_MAX];

// Create and fill the cached view uniform shader parameters.

CachedViewUniformShaderParameters = MakeUnique<FViewUniformShaderParameters>();

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(FRHICommandListExecutor::GetImmediateCommandList());

SetupUniformBufferParameters(

SceneContext,

VolumeBounds,

TVC_MAX,

*CachedViewUniformShaderParameters);

// Create and set the view's uniform buffer.

ViewUniformBuffer = TUniformBufferRef<FViewUniformShaderParameters>::CreateUniformBufferImmediate(*CachedViewUniformShaderParameters, UniformBuffer_SingleFrame);

const int32 TranslucencyLightingVolumeDim = GetTranslucencyLightingVolumeDim();

// Invalidate the scene's cached view uniform buffer.

FScene* Scene = Family->Scene ? Family->Scene->GetRenderScene() : nullptr;

if (Scene)

{

Scene->UniformBuffers.InvalidateCachedView();

}

// Initialize the translucency lighting volume parameters.

for (int32 CascadeIndex = 0; CascadeIndex < TVC_MAX; CascadeIndex++)

{

TranslucencyLightingVolumeMin[CascadeIndex] = VolumeBounds[CascadeIndex].Min;

TranslucencyVolumeVoxelSize[CascadeIndex] = (VolumeBounds[CascadeIndex].Max.X - VolumeBounds[CascadeIndex].Min.X) / TranslucencyLightingVolumeDim;

TranslucencyLightingVolumeSize[CascadeIndex] = VolumeBounds[CascadeIndex].Max - VolumeBounds[CascadeIndex].Min;

}

}

Next, OnStartRender, which also runs at the end of InitViews:

// Engine\Source\Runtime\Renderer\Private\SceneRendering.cpp

void FSceneRenderer::OnStartRender(FRHICommandListImmediate& RHICmdList)

{

// Initialize the scene render targets (MRT).

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

FVisualizeTexturePresent::OnStartRender(Views[0]);

CompositionGraph_OnStartFrame();

SceneContext.bScreenSpaceAOIsValid = false;

SceneContext.bCustomDepthIsValid = false;

// Notify each ViewState that rendering is starting.

for (FViewInfo& View : Views)

{

if (View.ViewState)

{

View.ViewState->OnStartRender(View, ViewFamily);

}

}

}

// Engine\Source\Runtime\Renderer\Private\ScenePrivate.h

void FSceneViewState::OnStartRender(FViewInfo& View, FSceneViewFamily& ViewFamily)

{

// Initialize and set up the Light Propagation Volume.

if(!(View.FinalPostProcessSettings.IndirectLightingColor * View.FinalPostProcessSettings.IndirectLightingIntensity).IsAlmostBlack())

{

SetupLightPropagationVolume(View, ViewFamily);

}

// Allocate software scene occlusion culling (for low-end devices without hardware occlusion culling).

ConditionallyAllocateSceneSoftwareOcclusion(View.GetFeatureLevel());

}

PrePass

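PrePass, also known as the early depth pass, Depth Only Pass, or Early-Z Pass, renders the depth of opaque objects. It writes depth only and never color. Depth writing has three modes (disabled, occlusion only, and complete depths), chosen according to the platform and Feature Level.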

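PrePass can be initiated by DBuffer or triggered by Forward Shading. It is typically used to build Hierarchical-Z so that hardware Early-Z can take effect; it can also be used for occlusion culling and to improve the rendering efficiency of the Base Pass. PrePass is invoked from FDeferredShadingSceneRenderer::Render: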

void FDeferredShadingSceneRenderer::Render(FRHICommandListImmediate& RHICmdList)

{

Scene->UpdateAllPrimitiveSceneInfos(RHICmdList, true);

(......)

InitViews(...);

(......)

UpdateGPUScene(RHICmdList, *Scene);

(......)

// Determine whether a PrePass is needed.

const bool bNeedsPrePass = NeedsPrePass(this);

// The Z-prepass

(......)

if (bNeedsPrePass)

{

// Draw scene depth, building the depth buffer and the hierarchical Z buffer (HiZ).

bDepthWasCleared = RenderPrePass(RHICmdList, AfterTasksAreStarted);

}

(......)

// Z-Prepass End

}

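Two conditions must both hold for PrePass to run:

- The GPU is not a hardware tiled GPU. Modern mobile GPUs are usually tile-based with a TBDR architecture that already performs Early-Z at the GPU level, so no explicit depth pass is needed.

- A valid EarlyZPassMode is specified, or the renderer's bEarlyZPassMovable is non-zero.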
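The two conditions above can be written as a single predicate. The following is a minimal standalone sketch rather than the engine's `NeedsPrePass`: `DepthModeSketch`, `PrePassInputsSketch`, and `NeedsPrePassSketch` are hypothetical names, and the tiled-GPU flag is assumed to be supplied by the caller instead of being queried from the RHI.

```cpp
// Mirrors the numeric values of the depth drawing modes used by the renderer.
enum class DepthModeSketch
{
    None = 0,
    NonMaskedOnly = 1,
    AllOccluders = 2,
    AllOpaque = 3,
    MaskedOnly = 4
};

struct PrePassInputsSketch
{
    bool hasTiledGPU;              // true for hardware tile-based GPUs (typical on mobile)
    DepthModeSketch earlyZPassMode;
    bool earlyZPassMovable;
};

// PrePass runs only on non-tiled GPUs, and only when some early-Z work is requested.
bool NeedsPrePassSketch(const PrePassInputsSketch& in)
{
    const bool earlyZRequested =
        in.earlyZPassMode != DepthModeSketch::None || in.earlyZPassMovable;
    return !in.hasTiledGPU && earlyZRequested;
}
```

On a desktop GPU with the default NonMaskedOnly mode the predicate is true, while any tiled GPU short-circuits it to false regardless of the early-Z settings.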

// Engine\Source\Runtime\Renderer\Private\DepthRendering.cpp

bool FDeferredShadingSceneRenderer::RenderPrePass(FRHICommandListImmediate& RHICmdList, TFunctionRef<void()> AfterTasksAreStarted)

{

bool bDepthWasCleared = false;

(......)

bool bDidPrePre = false;

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

bool bParallel = GRHICommandList.UseParallelAlgorithms() && CVarParallelPrePass.GetValueOnRenderThread();

// Non-parallel mode.

if (!bParallel)

{

AfterTasksAreStarted();

bDepthWasCleared = PreRenderPrePass(RHICmdList);

bDidPrePre = true;

SceneContext.BeginRenderingPrePass(RHICmdList, false);

}

else // Parallel mode.

{

// Allocate the depth buffer.

SceneContext.GetSceneDepthSurface();

}

// Draw a depth pass to avoid overdraw in the other passes.

if(EarlyZPassMode != DDM_None)

{

const bool bWaitForTasks = bParallel && (CVarRHICmdFlushRenderThreadTasksPrePass.GetValueOnRenderThread() > 0 || CVarRHICmdFlushRenderThreadTasks.GetValueOnRenderThread() > 0);

FScopedCommandListWaitForTasks Flusher(bWaitForTasks, RHICmdList);

// Draw the depth buffer once for each view.

for(int32 ViewIndex = 0;ViewIndex < Views.Num();ViewIndex++)

{

const FViewInfo& View = Views[ViewIndex];

// Create and set up the uniform buffer.

TUniformBufferRef<FSceneTexturesUniformParameters> PassUniformBuffer;

CreateDepthPassUniformBuffer(RHICmdList, View, PassUniformBuffer);

// Set up the render state.

FMeshPassProcessorRenderState DrawRenderState(View, PassUniformBuffer);

SetupDepthPassState(DrawRenderState);

if (View.ShouldRenderView())

{

Scene->UniformBuffers.UpdateViewUniformBuffer(View);

// Parallel mode.

if (bParallel)

{

check(RHICmdList.IsOutsideRenderPass());

bDepthWasCleared = RenderPrePassViewParallel(View, RHICmdList, DrawRenderState, AfterTasksAreStarted, !bDidPrePre) || bDepthWasCleared;

bDidPrePre = true;

}

else

{

RenderPrePassView(RHICmdList, View, DrawRenderState);

}

}

// Parallel rendering has self contained renderpasses so we need a new one for editor primitives.

if (bParallel)

{

SceneContext.BeginRenderingPrePass(RHICmdList, false);

}

RenderPrePassEditorPrimitives(RHICmdList, View, DrawRenderState, EarlyZPassMode, true);

if (bParallel)

{

RHICmdList.EndRenderPass();

}

}

}

(......)

if (bParallel)

{

// In parallel mode there will be no renderpass here. Need to restart.

SceneContext.BeginRenderingPrePass(RHICmdList, false);

}

(......)

SceneContext.FinishRenderingPrePass(RHICmdList);

return bDepthWasCleared;

}

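The PrePass draw flow is similar to the FMeshPassProcessor and pass drawing analyzed in the previous article, so it is not repeated here. The PrePass render state, however, deserves a closer look: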

void SetupDepthPassState(FMeshPassProcessorRenderState& DrawRenderState)

{

// Disable color writes, enable depth test and depth write; the depth compare function is nearer-or-equal.

DrawRenderState.SetBlendState(TStaticBlendState<CW_NONE>::GetRHI());

DrawRenderState.SetDepthStencilState(TStaticDepthStencilState<true, CF_DepthNearOrEqual>::GetRHI());

}
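What this render state means for a single pixel can be shown with a tiny software model. The sketch below is purely illustrative: `DepthNearOrEqualSketch`, `DepthOnlyPixelSketch`, and `DrawDepthOnlySketch` are hypothetical names, and whether "nearer" means a larger or a smaller depth value depends on whether the depth buffer is reversed, so that choice is left as a parameter instead of being asserted about the engine.

```cpp
// Returns true when the incoming fragment passes a "near or equal" depth test.
// Assumption: with a reversed-Z buffer nearer surfaces have larger depth values,
// with a conventional buffer they have smaller ones.
bool DepthNearOrEqualSketch(float incoming, float stored, bool reversedZ)
{
    return reversedZ ? (incoming >= stored) : (incoming <= stored);
}

struct DepthOnlyPixelSketch
{
    float depth;
    unsigned color;
};

// Depth-only draw: the color channel is never touched (color write mask is "none"),
// and depth is updated only when the test passes.
bool DrawDepthOnlySketch(DepthOnlyPixelSketch& pixel, float incomingDepth, bool reversedZ)
{
    if (!DepthNearOrEqualSketch(incomingDepth, pixel.depth, reversedZ))
    {
        return false;
    }
    pixel.depth = incomingDepth;
    return true;
}
```

The "or equal" part matters for a later pass that redraws the same geometry: fragments at exactly the depth laid down by the PrePass still pass the test, while everything behind them is rejected early.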

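In addition, since no color is written when drawing depth, the material used to render an object should not need to be the object's own material but rather some simple one. To verify this guess, step into FDepthPassMeshProcessor and check which material the depth pass uses: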

// Engine\Source\Runtime\Renderer\Private\DepthRendering.cpp

void FDepthPassMeshProcessor::AddMeshBatch(const FMeshBatch& RESTRICT MeshBatch, uint64 BatchElementMask, const FPrimitiveSceneProxy* RESTRICT PrimitiveSceneProxy, int32 StaticMeshId)

{

(......)

if (bDraw)

{

(......)

// Use the default material of the Surface material domain as the depth pass material.

const FMaterialRenderProxy& DefaultProxy = *UMaterial::GetDefaultMaterial(MD_Surface)->GetRenderProxy();

const FMaterial& DefaultMaterial = *DefaultProxy.GetMaterial(FeatureLevel);

Process<true>(MeshBatch, BatchElementMask, StaticMeshId, BlendMode, PrimitiveSceneProxy, DefaultProxy, DefaultMaterial, MeshFillMode, MeshCullMode);

(......)

}

}

// Engine\Source\Runtime\Engine\Private\Materials\Material.cpp

UMaterial* UMaterial::GetDefaultMaterial(EMaterialDomain Domain)

{

InitDefaultMaterials();

UMaterial* Default = GDefaultMaterials[Domain];

return Default;

}

void UMaterialInterface::InitDefaultMaterials()

{

static bool bInitialized = false;

if (!bInitialized)

{

(......)

for (int32 Domain = 0; Domain < MD_MAX; ++Domain)

{

if (GDefaultMaterials[Domain] == nullptr)

{

FString ResolvedPath = ResolveIniObjectsReference(GDefaultMaterialNames[Domain]);

GDefaultMaterials[Domain] = FindObject<UMaterial>(nullptr, *ResolvedPath);

if (GDefaultMaterials[Domain] == nullptr)

{

GDefaultMaterials[Domain] = LoadObject<UMaterial>(nullptr, *ResolvedPath, nullptr, LOAD_DisableDependencyPreloading, nullptr);

}

if (GDefaultMaterials[Domain])

{

GDefaultMaterials[Domain]->AddToRoot();

}

}

}

(......)

}

}

As shown above, the material system's default materials are specified by GDefaultMaterialNames. Here is its declaration:

static const TCHAR* GDefaultMaterialNames[MD_MAX] =

{

// Surface

TEXT("engine-ini:/Script/Engine.Engine.DefaultMaterialName"),

// Deferred Decal

TEXT("engine-ini:/Script/Engine.Engine.DefaultDeferredDecalMaterialName"),

// Light Function

TEXT("engine-ini:/Script/Engine.Engine.DefaultLightFunctionMaterialName"),

// Volume

//@todo - get a real MD_Volume default material

TEXT("engine-ini:/Script/Engine.Engine.DefaultMaterialName"),

// Post Process

TEXT("engine-ini:/Script/Engine.Engine.DefaultPostProcessMaterialName"),

// User Interface

TEXT("engine-ini:/Script/Engine.Engine.DefaultMaterialName"),

// Virtual Texture

TEXT("engine-ini:/Script/Engine.Engine.DefaultMaterialName"),

};

The default material ultimately resolves to ResolvedPath = L"/Engine/EngineMaterials/WorldGridMaterial.WorldGridMaterial".

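It is well worth noting that WorldGridMaterial uses the Default Lit shading model and also contains redundant nodes. For maximum optimization, consider changing this material in the configuration file: remove the redundant material nodes and preferably switch to Unlit, to minimize shader instructions and improve rendering efficiency.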

Also worth noting: a depth drawing mode can be specified when drawing the depth pass:

// Engine\Source\Runtime\Renderer\Private\DepthRendering.h

enum EDepthDrawingMode

{

// Do not draw depth.

DDM_None = 0,

// Draw only Opaque materials (Masked materials excluded).

DDM_NonMaskedOnly = 1,

// Opaque and Masked materials, excluding objects with bUseAsOccluder disabled.

DDM_AllOccluders = 2,

// All opaque objects: every object must be drawn, and every pixel must match the Base Pass depth.

DDM_AllOpaque = 3,

// Masked materials only.

DDM_MaskedOnly = 4,

};

How the depth drawing mode is actually chosen is determined by the following function:

// Engine\Source\Runtime\Renderer\Private\RendererScene.cpp

void FScene::UpdateEarlyZPassMode()

{

DefaultBasePassDepthStencilAccess = FExclusiveDepthStencil::DepthWrite_StencilWrite;

EarlyZPassMode = DDM_NonMaskedOnly; // By default, draw only Opaque materials.

bEarlyZPassMovable = false;

// Depth strategy under the deferred rendering pipeline.

if (GetShadingPath(GetFeatureLevel()) == EShadingPath::Deferred)

{

// Can be overridden via the console variable or specified in the project settings.

{

const int32 CVarValue = CVarEarlyZPass.GetValueOnAnyThread();

switch (CVarValue)

{

case 0: EarlyZPassMode = DDM_None; break;

case 1: EarlyZPassMode = DDM_NonMaskedOnly; break;

case 2: EarlyZPassMode = DDM_AllOccluders; break;

case 3: break; // Note: 3 indicates "default behavior" and does not specify an override

}

}

const EShaderPlatform ShaderPlatform = GetFeatureLevelShaderPlatform(FeatureLevel);

if (ShouldForceFullDepthPass(ShaderPlatform))

{

// DBuffer decals and stencil LOD dithering force the full depth pass.

EarlyZPassMode = DDM_AllOpaque;

bEarlyZPassMovable = true;

}

if (EarlyZPassMode == DDM_AllOpaque

&& CVarBasePassWriteDepthEvenWithFullPrepass.GetValueOnAnyThread() == 0)

{

DefaultBasePassDepthStencilAccess = FExclusiveDepthStencil::DepthRead_StencilWrite;

}

}

(......)

}
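The decision logic of UpdateEarlyZPassMode for the deferred path can be condensed into a pure function, which makes the precedence of the inputs easier to see. This is a standalone sketch under stated assumptions: `EarlyZModeSketch`, `EarlyZDecisionSketch`, and `SelectEarlyZSketch` are hypothetical names, the console variable values and the force-full-depth-pass flag are passed in as plain parameters, and the elided parts of the original function are not modeled.

```cpp
enum class EarlyZModeSketch
{
    None = 0,
    NonMaskedOnly = 1,
    AllOccluders = 2,
    AllOpaque = 3,
    MaskedOnly = 4
};

struct EarlyZDecisionSketch
{
    EarlyZModeSketch mode;
    bool earlyZPassMovable;
    bool basePassWritesDepth;  // false corresponds to DepthRead_StencilWrite
};

EarlyZDecisionSketch SelectEarlyZSketch(int earlyZPassCVar,
                                        bool forceFullDepthPass,
                                        bool basePassWritesDepthEvenWithFullPrepass)
{
    // Defaults: opaque-only prepass, Base Pass still writes depth.
    EarlyZDecisionSketch decision{ EarlyZModeSketch::NonMaskedOnly, false, true };

    // Step 1: the console variable may override the default mode.
    switch (earlyZPassCVar)
    {
    case 0: decision.mode = EarlyZModeSketch::None; break;
    case 1: decision.mode = EarlyZModeSketch::NonMaskedOnly; break;
    case 2: decision.mode = EarlyZModeSketch::AllOccluders; break;
    default: break;  // 3 means "default behavior": no override
    }

    // Step 2: features that need complete depth take precedence over the override.
    if (forceFullDepthPass)
    {
        decision.mode = EarlyZModeSketch::AllOpaque;
        decision.earlyZPassMovable = true;
    }

    // Step 3: with a full prepass the Base Pass can treat depth as read-only.
    if (decision.mode == EarlyZModeSketch::AllOpaque && !basePassWritesDepthEvenWithFullPrepass)
    {
        decision.basePassWritesDepth = false;
    }
    return decision;
}
```

Note the ordering: a console override of 0 (no prepass) is still replaced by AllOpaque when a full depth pass is forced, and only the AllOpaque outcome lets the Base Pass skip depth writes.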

BasePass

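UE's BasePass is the geometry pass of deferred rendering. It renders the geometric information of opaque objects, including normals, depth, color, AO, roughness, metallic, and so on, and writes this information into several GBuffer render targets.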

bool FDeferredShadingSceneRenderer::RenderBasePass(FRHICommandListImmediate& RHICmdList, FExclusiveDepthStencil::Type BasePassDepthStencilAccess, IPooledRenderTarget* ForwardScreenSpaceShadowMask, bool bParallelBasePass, bool bRenderLightmapDensity)

{

(......)

{

FExclusiveDepthStencil::Type BasePassDepthStencilAccess_NoDepthWrite = FExclusiveDepthStencil::Type(BasePassDepthStencilAccess & ~FExclusiveDepthStencil::DepthWrite);

// Parallel mode.

if (bParallelBasePass)

{

FScopedCommandListWaitForTasks Flusher(CVarRHICmdFlushRenderThreadTasksBasePass.GetValueOnRenderThread() > 0 || CVarRHICmdFlushRenderThreadTasks.GetValueOnRenderThread() > 0, RHICmdList);

// Render the Base Pass for every view.

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

// Uniform Buffer

TUniformBufferRef<FOpaqueBasePassUniformParameters> BasePassUniformBuffer;

CreateOpaqueBasePassUniformBuffer(RHICmdList, View, ForwardScreenSpaceShadowMask, nullptr, nullptr, nullptr, BasePassUniformBuffer);

// Render State

FMeshPassProcessorRenderState DrawRenderState(View, BasePassUniformBuffer);

SetupBasePassState(BasePassDepthStencilAccess, ViewFamily.EngineShowFlags.ShaderComplexity, DrawRenderState);

const bool bShouldRenderView = View.ShouldRenderView();

if (bShouldRenderView)

{

Scene->UniformBuffers.UpdateViewUniformBuffer(View);

// Execute the rendering.

RenderBasePassViewParallel(View, RHICmdList, BasePassDepthStencilAccess, DrawRenderState);

}

FSceneRenderTargets::Get(RHICmdList).BeginRenderingGBuffer(RHICmdList, ERenderTargetLoadAction::ELoad, ERenderTargetLoadAction::ELoad, BasePassDepthStencilAccess, this->ViewFamily.EngineShowFlags.ShaderComplexity);

RenderEditorPrimitives(RHICmdList, View, BasePassDepthStencilAccess, DrawRenderState, bDirty);

RHICmdList.EndRenderPass();

(......)

}

bDirty = true; // assume dirty since we are not going to wait

}

else // Non-parallel mode

{

(......)

}

}

(......)

}

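The Base Pass rendering logic closely resembles that of the Pre Pass, so it is not examined in detail here. The focus is instead on the render state and materials used when rendering the Base Pass. The render state is set up as follows: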

void SetupBasePassState(FExclusiveDepthStencil::Type BasePassDepthStencilAccess, const bool bShaderComplexity, FMeshPassProcessorRenderState& DrawRenderState)

{

DrawRenderState.SetDepthStencilAccess(BasePassDepthStencilAccess);

(......)

{

// Set the color write mask for the GBuffer render targets (CW_RGBA writes all channels).

static const auto CVar = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("r.BasePassOutputsVelocityDebug"));

if (CVar && CVar->GetValueOnRenderThread() == 2)

{

DrawRenderState.SetBlendState(TStaticBlendStateWriteMask<CW_RGBA, CW_RGBA, CW_RGBA, CW_RGBA, CW_RGBA, CW_RGBA, CW_NONE>::GetRHI());

}

else

{

DrawRenderState.SetBlendState(TStaticBlendStateWriteMask<CW_RGBA, CW_RGBA, CW_RGBA, CW_RGBA>::GetRHI());

}

// The depth test uses NearOrEqual; depth write is enabled only when the access flags include DepthWrite.

if (DrawRenderState.GetDepthStencilAccess() & FExclusiveDepthStencil::DepthWrite)

{

DrawRenderState.SetDepthStencilState(TStaticDepthStencilState<true, CF_DepthNearOrEqual>::GetRHI());

}

else

{

DrawRenderState.SetDepthStencilState(TStaticDepthStencilState<false, CF_DepthNearOrEqual>::GetRHI());

}

}

}
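The two bit operations used above, stripping the depth-write bit (`BasePassDepthStencilAccess & ~FExclusiveDepthStencil::DepthWrite`) and testing it to choose the depth-stencil state, can be illustrated with a small standalone sketch. The flag values, `DepthState`, `StripDepthWrite` and `SelectDepthState` below are assumptions made for illustration only; they are not taken from the engine headers.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative flag layout: depth bits in the low nibble, stencil bits in the
// high nibble. The numeric values are assumptions, not engine values.
enum DepthStencilAccess : std::uint8_t {
    DepthNop     = 0x00,
    DepthRead    = 0x01,
    DepthWrite   = 0x02,
    StencilRead  = 0x10,
    StencilWrite = 0x20,
    DepthRead_StencilWrite  = DepthRead  | StencilWrite,
    DepthWrite_StencilWrite = DepthWrite | StencilWrite,
};

// Hypothetical reduced depth state: the compare function is NearOrEqual in
// both branches of the excerpt, so only the write flag differs.
struct DepthState {
    bool bDepthWriteEnabled;
};

// Clears the depth-write bit and keeps every other bit unchanged.
inline DepthStencilAccess StripDepthWrite(DepthStencilAccess Access) {
    return static_cast<DepthStencilAccess>(Access & ~DepthWrite);
}

// Enables depth write only when the access flags request it.
inline DepthState SelectDepthState(DepthStencilAccess Access) {
    return DepthState{ (Access & DepthWrite) != 0 };
}
```

With this layout, `SelectDepthState(DepthRead_StencilWrite)` yields a state with depth write disabled, which matches the case where a full depth prepass has already filled the depth buffer.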

LightingPass

Translucency

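The translucency stage renders translucent color, distortion textures (used for refraction and similar effects), and the velocity buffer (used for TAA anti-aliasing and post-processing effects). The main translucency rendering logic lives in RenderTranslucency: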

// Source\Runtime\Renderer\Private\TranslucentRendering.cpp

void FDeferredShadingSceneRenderer::RenderTranslucency(FRHICommandListImmediate& RHICmdList, bool bDrawUnderwaterViews)

{

TRefCountPtr<IPooledRenderTarget> SceneColorCopy;

if (!bDrawUnderwaterViews)

{

ConditionalResolveSceneColorForTranslucentMaterials(RHICmdList, SceneColorCopy);

}

// Disable UAV cache flushing so we have optimal VT feedback performance.

RHICmdList.BeginUAVOverlap();

// Translucency after depth of field (DOF) is allowed: render in separate passes.

if (ViewFamily.AllowTranslucencyAfterDOF())

{

// The first pass renders standard translucent objects.

RenderTranslucencyInner(RHICmdList, ETranslucencyPass::TPT_StandardTranslucency, SceneColorCopy, bDrawUnderwaterViews);

// The second pass renders after-DOF translucent objects into a separate translucency RT for later use.

RenderTranslucencyInner(RHICmdList, ETranslucencyPass::TPT_TranslucencyAfterDOF, SceneColorCopy, bDrawUnderwaterViews);

// The third pass blends the translucency RT with the scene color buffer after the DOF pass.

RenderTranslucencyInner(RHICmdList, ETranslucencyPass::TPT_TranslucencyAfterDOFModulate, SceneColorCopy, bDrawUnderwaterViews);

}

else // Normal mode: a single pass renders all translucent objects.

{

RenderTranslucencyInner(RHICmdList, ETranslucencyPass::TPT_AllTranslucency, SceneColorCopy, bDrawUnderwaterViews);

}

RHICmdList.EndUAVOverlap();

}
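The branching in RenderTranslucency reduces to choosing a list of passes. A minimal sketch of that selection follows; `TranslucencyPass` and `SelectTranslucencyPasses` are hypothetical names introduced for illustration, with enumerators named after the `ETranslucencyPass` values used above.

```cpp
#include <cassert>
#include <vector>

// Hypothetical mirror of the pass identifiers that appear in the excerpt.
enum class TranslucencyPass {
    StandardTranslucency,
    TranslucencyAfterDOF,
    TranslucencyAfterDOFModulate,
    AllTranslucency
};

// Returns the passes in the order the excerpt issues them.
inline std::vector<TranslucencyPass> SelectTranslucencyPasses(bool bAllowTranslucencyAfterDOF) {
    if (bAllowTranslucencyAfterDOF) {
        // Three passes: standard, after-DOF, and after-DOF modulate.
        return { TranslucencyPass::StandardTranslucency,
                 TranslucencyPass::TranslucencyAfterDOF,
                 TranslucencyPass::TranslucencyAfterDOFModulate };
    }
    // Otherwise a single pass covers every translucent object.
    return { TranslucencyPass::AllTranslucency };
}
```

Each returned entry corresponds to one call to RenderTranslucencyInner, which may still exit early when a given pass has nothing to draw.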

RenderTranslucencyInner is where the translucent objects are actually rendered. Its code is as follows:

// Source\Runtime\Renderer\Private\TranslucentRendering.cpp

void FDeferredShadingSceneRenderer::RenderTranslucencyInner(FRHICommandListImmediate& RHICmdList, ETranslucencyPass::Type TranslucencyPass, IPooledRenderTarget* SceneColorCopy, bool bDrawUnderwaterViews)

{

if (!ShouldRenderTranslucency(TranslucencyPass))

{

return; // Early exit if nothing needs to be done.

}

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

// Parallel rendering support.

const bool bUseParallel = GRHICommandList.UseParallelAlgorithms() && CVarParallelTranslucency.GetValueOnRenderThread();

if (bUseParallel)

{

SceneContext.AllocLightAttenuation(RHICmdList); // materials will attempt to get this texture before the deferred command to set it up executes

}

FScopedCommandListWaitForTasks Flusher(bUseParallel && (CVarRHICmdFlushRenderThreadTasksTranslucentPass.GetValueOnRenderThread() > 0 || CVarRHICmdFlushRenderThreadTasks.GetValueOnRenderThread() > 0), RHICmdList);

// Iterate over all views.

for (int32 ViewIndex = 0, NumProcessedViews = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

if (!View.ShouldRenderView() || (Views[ViewIndex].IsUnderwater() != bDrawUnderwaterViews))

{

continue;

}

// Update the scene's view uniform buffer.

Scene->UniformBuffers.UpdateViewUniformBuffer(View);

TUniformBufferRef<FTranslucentBasePassUniformParameters> BasePassUniformBuffer;

// Create the uniform buffer for the translucency pass.

CreateTranslucentBasePassUniformBuffer(RHICmdList, View, SceneColorCopy, ESceneTextureSetupMode::All, BasePassUniformBuffer, ViewIndex);

// Render state.

FMeshPassProcessorRenderState DrawRenderState(View, BasePassUniformBuffer);

// Render the separate translucency queue.

if (!bDrawUnderwaterViews && RenderInSeparateTranslucency(SceneContext, TranslucencyPass, View.TranslucentPrimCount.DisableOffscreenRendering(TranslucencyPass)))

{

FIntPoint ScaledSize;

float DownsamplingScale = 1.f;

SceneContext.GetSeparateTranslucencyDimensions(ScaledSize, DownsamplingScale);

if (DownsamplingScale < 1.f)

{

FViewUniformShaderParameters DownsampledTranslucencyViewParameters;

SetupDownsampledTranslucencyViewParameters(RHICmdList, View, DownsampledTranslucencyViewParameters);

Scene->UniformBuffers.UpdateViewUniformBufferImmediate(DownsampledTranslucencyViewParameters);

DrawRenderState.SetViewUniformBuffer(Scene->UniformBuffers.ViewUniformBuffer);

(......)

}

// Preparation stage before rendering.

if (TranslucencyPass == ETranslucencyPass::TPT_TranslucencyAfterDOF)

{

BeginTimingSeparateTranslucencyPass(RHICmdList, View);

SceneContext.BeginRenderingSeparateTranslucency(RHICmdList, View, *this, NumProcessedViews == 0 || View.Family->bMultiGPUForkAndJoin);

}

// Modulate (blend) queue.

else if (TranslucencyPass == ETranslucencyPass::TPT_TranslucencyAfterDOFModulate)

{

BeginTimingSeparateTranslucencyModulatePass(RHICmdList, View);

SceneContext.BeginRenderingSeparateTranslucencyModulate(RHICmdList, View, *this, NumProcessedViews == 0 || View.Family->bMultiGPUForkAndJoin);

}

// Standard queue.

else

{

SceneContext.BeginRenderingSeparateTranslucency(RHICmdList, View, *this, NumProcessedViews == 0 || View.Family->bMultiGPUForkAndJoin);

}

// Draw only translucent prims that are in the SeparateTranslucency pass

DrawRenderState.SetDepthStencilState(TStaticDepthStencilState<false, CF_DepthNearOrEqual>::GetRHI());

// Actually draw the translucent objects.

if (bUseParallel)

{

RHICmdList.EndRenderPass();

RenderViewTranslucencyParallel(RHICmdList, View, DrawRenderState, TranslucencyPass);

}

else

{

RenderViewTranslucency(RHICmdList, View, DrawRenderState, TranslucencyPass);

RHICmdList.EndRenderPass();

}

// Finishing stage after rendering.

if (TranslucencyPass == ETranslucencyPass::TPT_TranslucencyAfterDOF)

{

SceneContext.ResolveSeparateTranslucency(RHICmdList, View);

EndTimingSeparateTranslucencyPass(RHICmdList, View);

}

else if (TranslucencyPass == ETranslucencyPass::TPT_TranslucencyAfterDOFModulate)

{

SceneContext.ResolveSeparateTranslucencyModulate(RHICmdList, View);

EndTimingSeparateTranslucencyModulatePass(RHICmdList, View);

}

else

{

SceneContext.ResolveSeparateTranslucency(RHICmdList, View);

}

// Upsample (enlarge) the translucency RT.