
| title | date | excerpt | tags | rating |
|---|---|---|---|---|
| ToonPostProcess | 2024-05-15 16:50:13 | | | ⭐ |

FFT

Bloom

Bloom is mainly divided into:

- Bloom

- FFTBloom

- LensFlares

BloomThreshold is declared with the metadata ClampMin = "-1.0" and UIMax = "8.0". The related setup logic is:

if (bBloomSetupRequiredEnabled)

{

const float BloomThreshold = View.FinalPostProcessSettings.BloomThreshold;

FBloomSetupInputs SetupPassInputs;

SetupPassInputs.SceneColor = DownsampleInput;

SetupPassInputs.EyeAdaptationBuffer = EyeAdaptationBuffer;

SetupPassInputs.EyeAdaptationParameters = &EyeAdaptationParameters;

SetupPassInputs.LocalExposureParameters = &LocalExposureParameters;

SetupPassInputs.LocalExposureTexture = CVarBloomApplyLocalExposure.GetValueOnRenderThread() ? LocalExposureTexture : nullptr;

SetupPassInputs.BlurredLogLuminanceTexture = LocalExposureBlurredLogLumTexture;

SetupPassInputs.Threshold = BloomThreshold;

SetupPassInputs.ToonThreshold = View.FinalPostProcessSettings.ToonBloomThreshold;

DownsampleInput = AddBloomSetupPass(GraphBuilder, View, SetupPassInputs);

}
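To illustrate what a bloom threshold does to a single pixel, here is a minimal CPU sketch. It assumes a linear ramp of the form bloomAmount = saturate((luminance * exposure - threshold) * 0.5), which is my understanding of UE's bloom setup shader, and Rec. 709 luminance weights; both are assumptions to verify against the engine shader for your version, and the custom ToonThreshold input is not modeled.

```cpp
#include <algorithm>
#include <array>

using Color3 = std::array<float, 3>;

// Assumption: Rec. 709 luminance weights (UE's own Luminance() helper may differ).
float Luminance(const Color3& c) {
    return 0.2126f * c[0] + 0.7152f * c[1] + 0.0722f * c[2];
}

// Sketch of a bloom "setup" threshold: pixels at or below the threshold contribute
// nothing; brighter pixels fade in linearly and reach full strength 2 units above it.
Color3 ApplyBloomThreshold(const Color3& linearColor, float threshold, float exposureScale) {
    const float totalLuminance = Luminance(linearColor) * exposureScale;
    const float bloomAmount = std::clamp((totalLuminance - threshold) * 0.5f, 0.0f, 1.0f);
    return {linearColor[0] * bloomAmount, linearColor[1] * bloomAmount, linearColor[2] * bloomAmount};
}
```

With threshold 1.0, a pixel of luminance 2.0 keeps half its color, while anything at luminance 3.0 or above passes through unchanged.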

FFTBloom

Ordinary Bloom can only produce circular glow spots; custom-shaped bloom requires FFT Bloom.

- FFT Bloom:https://zhuanlan.zhihu.com/p/611582936

- Unity FFT Bloom:https://github.com/AKGWSB/FFTConvolutionBloom

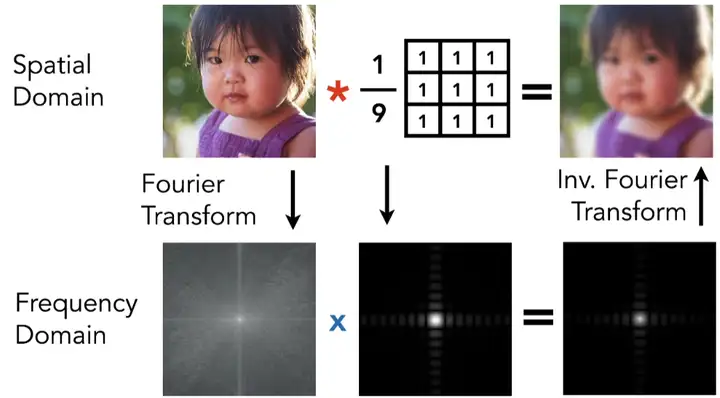

Frequency domain and the convolution theorem

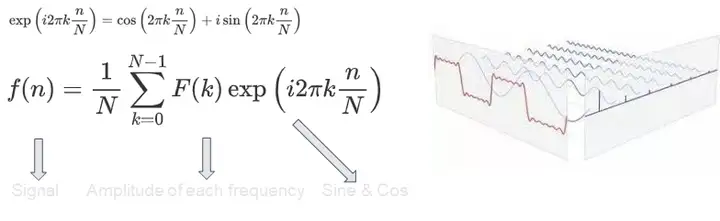

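An image can be regarded as a 2D signal, and a signal can be approximated by a linear combination of sine and cosine functions of different frequencies. Each frequency's function is multiplied by a constant amplitude and added to the result; these amplitudes form the spectrum. Note that every $F_k$ is a complex number (shown here in 1D for $N$ samples):

$$f_n = \frac{1}{N}\sum_{k=0}^{N-1} F_k\, e^{i 2\pi k n / N}, \qquad e^{i\theta} = \cos\theta + i\sin\theta$$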

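Each amplitude in the frequency domain no longer represents a single time-domain sample; instead it represents the overall contribution of that frequency's sine and cosine functions to the whole signal. Because the frequency-domain signal contains all the information of the input image, the convolution theorem tells us that convolving signals in the time (spatial) domain is equivalent to a simple element-wise complex multiplication of the spectra of the source image and the filter kernel image (the coefficients $V_k$ in the figure above). For the discrete Fourier transform this holds for circular convolution:

$$f * g = \mathcal{F}^{-1}\big\{\mathcal{F}\{f\} \cdot \mathcal{F}\{g\}\big\}$$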


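Element-wise multiplication is far faster than naive convolution, which needs a nested loop of accumulations: for an $N \times N$ image and a $K \times K$ kernel, direct convolution costs $O(N^2K^2)$, while an FFT-based approach costs $O(N^2 \log N)$ for the transforms plus $O(N^2)$ for the multiplication. The next goal is therefore to relate the image signal to its frequency domain. In signal processing (for example in communications), the Fourier transform is the standard tool for converting signals between the time and frequency domains.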

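To make the convolution theorem concrete, here is a small 1D sketch in C++. For brevity it uses a naive $O(N^2)$ DFT rather than a real FFT, so it demonstrates correctness, not speed: a circular convolution computed directly matches the inverse transform of the element-wise product of the two spectra. A 2D image convolution applies the same idea along each axis. All function names are illustrative.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

using cd = std::complex<double>;

// Naive O(N^2) discrete Fourier transform; inverse=true computes the inverse DFT (with 1/N).
std::vector<cd> Dft(const std::vector<cd>& in, bool inverse) {
    const std::size_t n = in.size();
    const double pi = std::acos(-1.0);
    const double sign = inverse ? 1.0 : -1.0;
    std::vector<cd> out(n);
    for (std::size_t k = 0; k < n; ++k) {
        cd sum = 0.0;
        for (std::size_t t = 0; t < n; ++t) {
            const double angle = sign * 2.0 * pi * double(k * t) / double(n);
            sum += in[t] * std::polar(1.0, angle);
        }
        out[k] = inverse ? sum / double(n) : sum;
    }
    return out;
}

// Direct circular convolution: out[i] = sum_j a[j] * b[(i - j) mod n].
std::vector<double> CircularConvolve(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size());
    const std::size_t n = a.size();
    std::vector<double> out(n, 0.0);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            out[i] += a[j] * b[(i + n - j) % n];
    return out;
}

// Convolution theorem: conv(a, b) = IDFT(DFT(a) * DFT(b)) with an element-wise product.
std::vector<double> ConvolveViaSpectrum(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size());
    const std::size_t n = a.size();
    const std::vector<cd> fa = Dft(std::vector<cd>(a.begin(), a.end()), false);
    const std::vector<cd> fb = Dft(std::vector<cd>(b.begin(), b.end()), false);
    std::vector<cd> product(n);
    for (std::size_t k = 0; k < n; ++k) product[k] = fa[k] * fb[k];
    const std::vector<cd> back = Dft(product, true);
    std::vector<double> out(n);
    for (std::size_t i = 0; i < n; ++i) out[i] = back[i].real();
    return out;
}
```

Because the DFT treats the signal as periodic, an FFT bloom implementation typically pads the image so that the wrap-around of circular convolution does not bleed light across the borders.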

Related code

- C++

- AddFFTBloomPass()

- FBloomFinalizeApplyConstantsCS (runs once the Bloom computation is complete)

- AddTonemapPass(): PassInputs.Bloom = Bloom and PassInputs.SceneColorApplyParamaters

Shader

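FBloomFindKernelCenterCS: finds the center of the Bloom kernel (the brightest pixel in the kernel texture) and records its position. It locates the center region mainly by computing luminance. There can be more than one center region, which means the final SceneColor can contain multiple bright-spot halos (bloom).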
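As an illustration only, here is a CPU analogue of a "find the brightest pixel" search. The real FBloomFindKernelCenterCS runs on the GPU and may handle several center regions; this single-maximum scan over a row-major RGB buffer, with Rec. 709 luminance weights, is a simplified sketch under those assumptions, and the names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct PixelPos { std::size_t x; std::size_t y; };

// Scan a row-major RGB kernel image and return the position of the pixel with the
// highest luminance (the first one wins on ties).
PixelPos FindKernelCenter(const std::vector<float>& rgb, std::size_t width, std::size_t height) {
    assert(width > 0 && height > 0 && rgb.size() == width * height * 3);
    std::size_t bestIndex = 0;
    float bestLuminance = -1.0f;
    for (std::size_t i = 0; i < width * height; ++i) {
        // Assumption: Rec. 709 luminance weights.
        const float lum = 0.2126f * rgb[3 * i] + 0.7152f * rgb[3 * i + 1] + 0.0722f * rgb[3 * i + 2];
        if (lum > bestLuminance) {
            bestLuminance = lum;
            bestIndex = i;
        }
    }
    return {bestIndex % width, bestIndex / width};
}
```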

Useful code

The code is in DeferredShadingCommon.ush:

// @param UV - UV space in the GBuffer textures (BufferSize resolution)

FGBufferData GetGBufferData(float2 UV, bool bGetNormalizedNormal = true)

{

#if GBUFFER_REFACTOR

return DecodeGBufferDataUV(UV,bGetNormalizedNormal);

#else

float4 GBufferA = Texture2DSampleLevel(SceneTexturesStruct.GBufferATexture, SceneTexturesStruct_GBufferATextureSampler, UV, 0);

float4 GBufferB = Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0);

float4 GBufferC = Texture2DSampleLevel(SceneTexturesStruct.GBufferCTexture, SceneTexturesStruct_GBufferCTextureSampler, UV, 0);

float4 GBufferD = Texture2DSampleLevel(SceneTexturesStruct.GBufferDTexture, SceneTexturesStruct_GBufferDTextureSampler, UV, 0);

float CustomNativeDepth = Texture2DSampleLevel(SceneTexturesStruct.CustomDepthTexture, SceneTexturesStruct_CustomDepthTextureSampler, UV, 0).r;

// BufferToSceneTextureScale is necessary when translucent materials are rendered in a render target

// that has a different resolution than the scene color textures, e.g. r.SeparateTranslucencyScreenPercentage < 100.

int2 IntUV = (int2)trunc(UV * View.BufferSizeAndInvSize.xy * View.BufferToSceneTextureScale.xy);

uint CustomStencil = SceneTexturesStruct.CustomStencilTexture.Load(int3(IntUV, 0)) STENCIL_COMPONENT_SWIZZLE;

#if ALLOW_STATIC_LIGHTING

float4 GBufferE = Texture2DSampleLevel(SceneTexturesStruct.GBufferETexture, SceneTexturesStruct_GBufferETextureSampler, UV, 0);

#else

float4 GBufferE = 1;

#endif

float4 GBufferF = Texture2DSampleLevel(SceneTexturesStruct.GBufferFTexture, SceneTexturesStruct_GBufferFTextureSampler, UV, 0);

#if WRITES_VELOCITY_TO_GBUFFER

float4 GBufferVelocity = Texture2DSampleLevel(SceneTexturesStruct.GBufferVelocityTexture, SceneTexturesStruct_GBufferVelocityTextureSampler, UV, 0);

#else

float4 GBufferVelocity = 0;

#endif

float SceneDepth = CalcSceneDepth(UV);

return DecodeGBufferData(GBufferA, GBufferB, GBufferC, GBufferD, GBufferE, GBufferF, GBufferVelocity, CustomNativeDepth, CustomStencil, SceneDepth, bGetNormalizedNormal, CheckerFromSceneColorUV(UV));

#endif

}

// Minimal path for just the lighting model, used to branch around unlit pixels (skybox)

uint GetShadingModelId(float2 UV)

{

return DecodeShadingModelId(Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0).a);

}

ShadingModel check

bool IsToonShadingModel(float2 UV)

{

uint ShadingModel = DecodeShadingModelId(Texture2DSampleLevel(SceneTexturesStruct.GBufferBTexture, SceneTexturesStruct_GBufferBTextureSampler, UV, 0).a);

return ShadingModel == SHADINGMODELID_TOONSTANDARD

|| ShadingModel == SHADINGMODELID_PREINTEGRATED_SKIN;

}
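The comparison works on a decoded ID rather than on the raw GBufferB.a value. The CPU sketch below shows the packing idea. It assumes, based on my reading of UE's shader code (worth verifying in the engine headers for your version), that the shading model ID sits in the low 4 bits of an 8-bit UNORM channel with other flags in the upper 4 bits; the function names mirror the shader helpers but are illustrative.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Assumption: low 4 bits = shading model ID, high 4 bits = other flags, stored as value / 255.
constexpr std::uint32_t kShadingModelIdMask = 0xF;

float EncodeShadingModelId(std::uint32_t shadingModelId, std::uint32_t upperFlags) {
    assert(shadingModelId <= kShadingModelIdMask && (upperFlags & ~0xF0u) == 0);
    const std::uint32_t packed = (shadingModelId & kShadingModelIdMask) | upperFlags;
    return static_cast<float>(packed) / 255.0f;
}

// Round back to the 8-bit integer, then mask off the upper flag bits.
std::uint32_t DecodeShadingModelId(float packedChannel) {
    return static_cast<std::uint32_t>(std::lround(packedChannel * 255.0f)) & kShadingModelIdMask;
}
```

Comparing the raw channel against an ID would fail whenever any upper flag bit is set, which is why the masking step matters.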

PS: the shader's parameters must include FSceneTextureShaderParameters / FSceneTextureUniformParameters.

IMPLEMENT_STATIC_UNIFORM_BUFFER_STRUCT(FSceneTextureUniformParameters, "SceneTexturesStruct", SceneTextures);

BEGIN_SHADER_PARAMETER_STRUCT(FSceneTextureShaderParameters, ENGINE_API)

SHADER_PARAMETER_RDG_UNIFORM_BUFFER(FSceneTextureUniformParameters, SceneTextures)

SHADER_PARAMETER_RDG_UNIFORM_BUFFER(FMobileSceneTextureUniformParameters, MobileSceneTextures)

END_SHADER_PARAMETER_STRUCT()

ToneMapping

- UE5 official documentation, High Dynamic Range Display Output: https://dev.epicgames.com/documentation/en-us/unreal-engine/high-dynamic-range-display-output-in-unreal-engine?application_version=5.3

- sRGB 18% gray in modern game graphics: the definition of neutral gray

- Post-processing in games (3): rendering pipeline, ACES, Tonemapping and HDR: https://zhuanlan.zhihu.com/p/118272193

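ACES color spaces

The ACES standard defines the following gamuts and color spaces.

Gamuts:

- AP0: a gamut that encloses all visible colors

- AP1: the working gamut

Color spaces:

- ACES2065-1 (the ACES color space): uses the AP0 gamut; used for storing colors and for color conversion

- ACEScg: uses the AP1 gamut; a linear working space for rendering computations

- ACEScc: uses the AP1 gamut with a logarithmic encoding; used for color grading

- ACEScct: uses the AP1 gamut; similar to ACEScc, but its curve adds a linear "toe" near black, which suits different grading workflows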
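To make the difference between the ACEScc and ACEScct curves concrete, here are their linear-to-log encodings as I recall them from the ACES specifications; double-check the constants against the official documents before relying on them. Above the break point both share the same log curve; ACEScc handles tiny and negative values with a special log branch, while ACEScct switches to a straight-line toe.

```cpp
#include <cmath>

// ACEScc: logarithmic encoding of linear AP1 values, with special handling near zero.
float LinToACEScc(float lin) {
    if (lin <= 0.0f) {
        return (-16.0f + 9.72f) / 17.52f;  // log2(2^-16) = -16
    }
    if (lin < std::exp2(-15.0f)) {
        return (std::log2(std::exp2(-16.0f) + lin * 0.5f) + 9.72f) / 17.52f;
    }
    return (std::log2(lin) + 9.72f) / 17.52f;
}

// ACEScct: the same log curve above the break point, but a linear "toe" below it.
float LinToACEScct(float lin) {
    constexpr float kBreak = 0.0078125f;  // 2^-7
    if (lin <= kBreak) {
        return 10.5402377416545f * lin + 0.0729055341958355f;
    }
    return (std::log2(lin) + 9.72f) / 17.52f;
}
```

The toe constants are chosen so the two ACEScct segments meet at the break point, which keeps the curve continuous for grading near black.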

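The ACES pipeline in UE5

In the viewing pipeline, the ACES Viewing Transform is applied in the following order:

- Look Modification Transform (LMT): takes the ACES-encoded image that has had a creative "look" (color grading and correction) applied, and outputs an image to be rendered through ACES with the Reference Rendering Transform (RRT) and the Output Device Transform (ODT).

- Reference Rendering Transform (RRT): next, converts the scene-referred color values to display-referred values. This makes the rendered image independent of any specific display, while ensuring that when it is output to a particular display (including displays that do not exist yet) it gets a correct, wide gamut and dynamic range.

- Output Device Transform (ODT): finally, takes the HDR output of the RRT and maps it to the devices and color spaces they can display. Each target therefore needs its own ODT (Rec709, Rec2020, DCI-P3, etc.).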

Tone mapping operators

- ShaderToy demos:

- https://www.shadertoy.com/view/McG3WW

- ACES

- Narkowicz 2015, "ACES Filmic Tone Mapping Curve"

- https://knarkowicz.wordpress.com/2016/01/06/aces-filmic-tone-mapping-curve/

- PBR Neutral https://modelviewer.dev/examples/tone-mapping

- Uncharted tonemapping

- AgX

- ACES

- https://www.shadertoy.com/view/lslGzl

- https://www.shadertoy.com/view/Xstyzn

- https://www.shadertoy.com/view/McG3WW

- GT-ToneMapping:https://github.com/yaoling1997/GT-ToneMapping

- CCA-ToneMapping:?
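For comparison, here are two of the listed curves written as scalar per-channel C++ functions: the Narkowicz ACES fit from the linked post, and John Hable's Uncharted 2 filmic curve with its commonly quoted constants and white point W = 11.2. These are sketches for experimenting with curve shapes, not UE's FilmToneMap(); exposure handling is left to the caller.

```cpp
#include <algorithm>

// Narkowicz 2015 fit of the ACES filmic curve (input assumed already exposed).
float NarkowiczACES(float x) {
    constexpr float a = 2.51f, b = 0.03f, c = 2.43f, d = 0.59f, e = 0.14f;
    return std::clamp((x * (a * x + b)) / (x * (c * x + d) + e), 0.0f, 1.0f);
}

// Hable's "Uncharted 2" filmic curve (unnormalized).
float HableCurve(float x) {
    constexpr float A = 0.15f, B = 0.50f, C = 0.10f, D = 0.20f, E = 0.02f, F = 0.30f;
    return ((x * (A * x + C * B) + D * E) / (x * (A * x + B) + D * F)) - E / F;
}

// Normalize so that the white point maps to 1.
float Uncharted2ToneMap(float x, float whitePoint = 11.2f) {
    return HableCurve(x) / HableCurve(whitePoint);
}
```

The Narkowicz fit saturates to 1 on its own (its rational function exceeds 1 for large inputs, hence the clamp), whereas the Hable curve needs the explicit white-point division.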

Implementation in UE

Notes for the UE4 version: UE4 ToneMapping

FilmToneMap() in TonemapCommon.ush is called from CombineLUTsCommon(). The pass order is:

- AddCombineLUTPass() => PostProcessCombineLUTs.usf

- AddTonemapPass() => PostProcessTonemap.usf

void AddPostProcessingPasses()

{

...

{

FRDGTextureRef ColorGradingTexture = nullptr;

if (bPrimaryView)

{

ColorGradingTexture = AddCombineLUTPass(GraphBuilder, View);

}

// We can re-use the color grading texture from the primary view.

else if (View.GetTonemappingLUT())

{

ColorGradingTexture = TryRegisterExternalTexture(GraphBuilder, View.GetTonemappingLUT());

}

else

{

const FViewInfo* PrimaryView = static_cast<const FViewInfo*>(View.Family->Views[0]);

ColorGradingTexture = TryRegisterExternalTexture(GraphBuilder, PrimaryView->GetTonemappingLUT());

}

FTonemapInputs PassInputs;

PassSequence.AcceptOverrideIfLastPass(EPass::Tonemap, PassInputs.OverrideOutput);

PassInputs.SceneColor = SceneColorSlice;

PassInputs.Bloom = Bloom;

PassInputs.SceneColorApplyParamaters = SceneColorApplyParameters;

PassInputs.LocalExposureTexture = LocalExposureTexture;

PassInputs.BlurredLogLuminanceTexture = LocalExposureBlurredLogLumTexture;

PassInputs.LocalExposureParameters = &LocalExposureParameters;

PassInputs.EyeAdaptationParameters = &EyeAdaptationParameters;

PassInputs.EyeAdaptationBuffer = EyeAdaptationBuffer;

PassInputs.ColorGradingTexture = ColorGradingTexture;

PassInputs.bWriteAlphaChannel = AntiAliasingMethod == AAM_FXAA || bProcessSceneColorAlpha;

PassInputs.bOutputInHDR = bTonemapOutputInHDR;

SceneColor = AddTonemapPass(GraphBuilder, View, PassInputs);

}

...

}

// In the pass's shader parameter struct:

SHADER_PARAMETER_STRUCT_INCLUDE(FSceneTextureShaderParameters, SceneTextures)

// When filling in the pass parameters:

CommonPassParameters.SceneTextures = SceneTextures.GetSceneTextureShaderParameters(View.FeatureLevel);

PostProcessCombineLUTs.usf

The related parameters are updated in FCachedLUTSettings::GetCombineLUTParameters().

PostProcessTonemap.usf

Implementation

//BlueRose Modify

FGBufferData SamplerBuffer = GetGBufferData(UV * View.ResolutionFractionAndInv.x, false);

if (SamplerBuffer.CustomStencil > 1.0f && abs(SamplerBuffer.CustomDepth - SamplerBuffer.Depth) < 1)

{

// OutColor = SampleSceneColor(UV);

OutColor = TonemapCommonPS(UV, InVignette, GrainUV, ScreenPos, FullViewUV, SvPosition, Luminance);

}else

{

OutColor = TonemapCommonPS(UV, InVignette, GrainUV, ScreenPos, FullViewUV, SvPosition, Luminance);

}

//BlueRose Modify End

TextureArray reference

FIESAtlasAddTextureCS::FParameters:

- SHADER_PARAMETER_TEXTURE_ARRAY

- /Engine/Private/IESAtlas.usf

static void AddSlotsPassCS(

FRDGBuilder& GraphBuilder,

FGlobalShaderMap* ShaderMap,

const TArray<FAtlasSlot>& Slots,

FRDGTextureRef& OutAtlas)

{

FRDGTextureUAVRef AtlasTextureUAV = GraphBuilder.CreateUAV(OutAtlas);

TShaderMapRef<FIESAtlasAddTextureCS> ComputeShader(ShaderMap);

// Batch new slots into several passes

const uint32 SlotCountPerPass = 8u;

const uint32 PassCount = FMath::DivideAndRoundUp(uint32(Slots.Num()), SlotCountPerPass);

for (uint32 PassIt = 0; PassIt < PassCount; ++PassIt)

{

const uint32 SlotOffset = PassIt * SlotCountPerPass;

const uint32 SlotCount = SlotCountPerPass * (PassIt+1) <= uint32(Slots.Num()) ? SlotCountPerPass : uint32(Slots.Num()) - (SlotCountPerPass * PassIt);

FIESAtlasAddTextureCS::FParameters* Parameters = GraphBuilder.AllocParameters<FIESAtlasAddTextureCS::FParameters>();

Parameters->OutAtlasTexture = AtlasTextureUAV;

Parameters->AtlasResolution = OutAtlas->Desc.Extent;

Parameters->AtlasSliceCount = OutAtlas->Desc.ArraySize;

Parameters->ValidCount = SlotCount;

for (uint32 SlotIt = 0; SlotIt < SlotCountPerPass; ++SlotIt)

{

Parameters->InTexture[SlotIt] = GSystemTextures.BlackDummy->GetRHI();

Parameters->InSliceIndex[SlotIt].X = InvalidSlotIndex;

Parameters->InSampler[SlotIt] = TStaticSamplerState<SF_Bilinear, AM_Clamp, AM_Clamp, AM_Clamp>::GetRHI();

}

for (uint32 SlotIt = 0; SlotIt<SlotCount;++SlotIt)

{

const FAtlasSlot& Slot = Slots[SlotOffset + SlotIt];

check(Slot.SourceTexture);

Parameters->InTexture[SlotIt] = Slot.GetTextureRHI();

Parameters->InSliceIndex[SlotIt].X = Slot.SliceIndex;

}

const FIntVector DispatchCount = FComputeShaderUtils::GetGroupCount(FIntVector(Parameters->AtlasResolution.X, Parameters->AtlasResolution.Y, SlotCount), FIntVector(8, 8, 1));

FComputeShaderUtils::AddPass(GraphBuilder, RDG_EVENT_NAME("IESAtlas::AddTexture"), ComputeShader, Parameters, DispatchCount);

}

GraphBuilder.UseExternalAccessMode(OutAtlas, ERHIAccess::SRVMask);

}
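The per-pass slot count computed on the SlotCount line is easy to misread. This standalone C++ sketch reproduces the same arithmetic (divide the slots into passes of at most 8, rounding up, with the last pass taking the remainder) so it can be checked in isolation; the function name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Returns how many slots each pass processes, mirroring the DivideAndRoundUp +
// "last pass gets the remainder" logic of the batching loop above.
std::vector<std::uint32_t> SlotCountsPerPass(std::uint32_t slotCount, std::uint32_t slotsPerPass) {
    assert(slotsPerPass > 0);
    std::vector<std::uint32_t> counts;
    const std::uint32_t passCount = (slotCount + slotsPerPass - 1) / slotsPerPass;  // DivideAndRoundUp
    for (std::uint32_t pass = 0; pass < passCount; ++pass) {
        const std::uint32_t offset = pass * slotsPerPass;
        // Full batch unless fewer than slotsPerPass slots remain.
        counts.push_back(offset + slotsPerPass <= slotCount ? slotsPerPass : slotCount - offset);
    }
    return counts;
}
```

For 19 slots this yields passes of 8, 8 and 3; unused entries in the last pass are the ones the loop pre-fills with the black dummy texture and an invalid slice index.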

Texture2D<float4> InTexture_0;

Texture2D<float4> InTexture_1;

Texture2D<float4> InTexture_2;

Texture2D<float4> InTexture_3;

Texture2D<float4> InTexture_4;

Texture2D<float4> InTexture_5;

Texture2D<float4> InTexture_6;

Texture2D<float4> InTexture_7;

uint4 InSliceIndex[8];

SamplerState InSampler_0;

SamplerState InSampler_1;

SamplerState InSampler_2;

SamplerState InSampler_3;

SamplerState InSampler_4;

SamplerState InSampler_5;

SamplerState InSampler_6;

SamplerState InSampler_7;

int2 AtlasResolution;

uint AtlasSliceCount;

uint ValidCount;

RWTexture2DArray<float> OutAtlasTexture;

[numthreads(8, 8, 1)]

void MainCS(uint3 DispatchThreadId : SV_DispatchThreadID)

{

if (all(DispatchThreadId.xy < uint2(AtlasResolution)))

{

const uint2 DstPixelPos = DispatchThreadId.xy;

uint DstSlice = 0;

const float2 SrcUV = (DstPixelPos + 0.5) / float2(AtlasResolution);

const uint SrcSlice = DispatchThreadId.z;

if (SrcSlice < ValidCount)

{

float Color = 0;

switch (SrcSlice)

{

case 0: Color = InTexture_0.SampleLevel(InSampler_0, SrcUV, 0).x; DstSlice = InSliceIndex[0].x; break;

case 1: Color = InTexture_1.SampleLevel(InSampler_1, SrcUV, 0).x; DstSlice = InSliceIndex[1].x; break;

case 2: Color = InTexture_2.SampleLevel(InSampler_2, SrcUV, 0).x; DstSlice = InSliceIndex[2].x; break;

case 3: Color = InTexture_3.SampleLevel(InSampler_3, SrcUV, 0).x; DstSlice = InSliceIndex[3].x; break;

case 4: Color = InTexture_4.SampleLevel(InSampler_4, SrcUV, 0).x; DstSlice = InSliceIndex[4].x; break;

case 5: Color = InTexture_5.SampleLevel(InSampler_5, SrcUV, 0).x; DstSlice = InSliceIndex[5].x; break;

case 6: Color = InTexture_6.SampleLevel(InSampler_6, SrcUV, 0).x; DstSlice = InSliceIndex[6].x; break;

case 7: Color = InTexture_7.SampleLevel(InSampler_7, SrcUV, 0).x; DstSlice = InSliceIndex[7].x; break;

}

// Ensure there is no NaN value

Color = -min(-Color, 0);

DstSlice = min(DstSlice, AtlasSliceCount-1);

OutAtlasTexture[uint3(DstPixelPos, DstSlice)] = Color;

}

}

}
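The line Color = -min(-Color, 0) clamps negatives to zero and also removes NaN. That relies on min returning the non-NaN operand when one input is NaN, which is what IEEE-754 minNum (and, as I understand it, GPU min under D3D rules) does. The CPU analogue below uses std::fmin, which has that behavior; the function name is hypothetical.

```cpp
#include <cmath>

// CPU analogue of the shader's `Color = -min(-Color, 0)`.
// std::fmin returns the other argument when one is NaN, so NaN maps to 0;
// negative inputs are clamped to 0 and positive inputs pass through unchanged.
float SanitizeNonNegative(float color) {
    return -std::fmin(-color, 0.0f);
}
```

Note that compiler flags such as fast-math may assume NaN never occurs and break this trick.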

Switching the ToneMapping method

// Tonemapped color in the AP1 gamut

float3 ToneMappedColorAP1 = FilmToneMap( ColorAP1 );

// float3 ToneMappedColorAP1 = ColorAP1;

// float3 ToneMappedColorAP1 = AGXToneMap(ColorAP1);

// float3 ToneMappedColorAP1 = GTToneMap(ColorAP1);

// float3 ToneMappedColorAP1 = PBRNeutralToneMap(ColorAP1);

Adding a PostProcess pass in UE5

- Adding a custom ComputeShader in UE4: https://zhuanlan.zhihu.com/p/413884878

- UE rendering study (2): custom PostProcess, Kuwahara filter: https://zhuanlan.zhihu.com/p/25790491262

Adding a pass

- In AddPostProcessingPasses() (PostProcessing.cpp), add the pass's enum value to EPass and its name string to the PassNames array.

- Add the pass setup before PassSequence.Finalize() is called.
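The first step relies on EPass and PassNames staying in sync by index. The standalone C++17 sketch below shows that pattern with a hypothetical, trimmed-down pass list (including a hypothetical custom pass) and a static_assert that fails to compile if only one of the two is edited. UE's real lists are much longer and use engine types, so treat this as an illustration of the invariant only.

```cpp
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <string_view>

// Hypothetical, trimmed-down pass list; the real EPass has many more entries.
enum class EPass : std::uint32_t {
    MotionBlur,
    ToonPostProcessBeforeTonemapping,  // hypothetical custom pass
    Tonemap,
    FXAA,
    MAX
};

// Must stay in the same order as EPass.
constexpr std::string_view PassNames[] = {
    "MotionBlur",
    "ToonPostProcessBeforeTonemapping",
    "Tonemap",
    "FXAA",
};

// Compile-time guard: one name per EPass value.
static_assert(std::size(PassNames) == static_cast<std::size_t>(EPass::MAX),
              "PassNames must have one entry per EPass value");

constexpr std::string_view GetPassName(EPass pass) {
    return PassNames[static_cast<std::size_t>(pass)];
}
```

Inserting the new enum value at the position where the pass actually runs keeps the enum order consistent with the execution order.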

PS:

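For passes after Tonemap, super-sampling/upscaling makes the viewport resolution differ from the buffer resolution, so use SHADER_PARAMETER(FScreenTransform, SvPositionToInputTextureUV) together with FScreenTransform::ChangeTextureBasisFromTo to compute the transform. FFXAAPS is a good reference.

1. The Tonemap pass takes an FScreenPassTextureSlice as input and outputs an FScreenPassTexture. If Tonemap is disabled, it simply runs SceneColor = FScreenPassTexture(SceneColorSlice);. Passes from MotionBlur up to Tonemap (before the BL_BeforeTonemapping post-process materials) take an FScreenPassTexture SceneColor as input for drawing.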

FPostProcessMaterialInputs PassInputs;

PassSequence.AcceptOverrideIfLastPass(EPass::Tonemap, PassInputs.OverrideOutput);

PassInputs.SetInput(EPostProcessMaterialInput::SceneColor, FScreenPassTexture::CopyFromSlice(GraphBuilder, SceneColorSlice));

Creating an FScreenPassTextureSlice:

FScreenPassTextureSlice SceneColorSlice = FScreenPassTextureSlice::CreateFromScreenPassTexture(GraphBuilder, SceneColor);//FScreenPassTexture SceneColor

Converting a pass's render result into an FScreenPassTextureSlice:

SceneColorSlice = FScreenPassTextureSlice::CreateFromScreenPassTexture(GraphBuilder, AddToonPostProcessBeforeTonemappingPass(GraphBuilder, View, PassInputs));

// Allows for the scene color to be the slice of an array between temporal upscaler and tonemaper.

FScreenPassTextureSlice SceneColorSlice = FScreenPassTextureSlice::CreateFromScreenPassTexture(GraphBuilder, SceneColor);

Viewport => TextureUV

PassParameters->SvPositionToInputTextureUV = (

FScreenTransform::SvPositionToViewportUV(Output.ViewRect) *

FScreenTransform::ChangeTextureBasisFromTo(FScreenPassTextureViewport(Inputs.SceneColorSlice), FScreenTransform::ETextureBasis::ViewportUV, FScreenTransform::ETextureBasis::TextureUV));

In the shader:

Texture2D SceneColorTexture;

SamplerState SceneColorSampler;

FScreenTransform SvPositionToInputTextureUV;

void MainPS(

float4 SvPosition : SV_POSITION,

out float4 OutColor : SV_Target0)

{

float2 SceneColorUV = ApplyScreenTransform(SvPosition.xy, SvPositionToInputTextureUV);

OutColor.rgba = SceneColorTexture.SampleLevel(SceneColorSampler, SceneColorUV, 0).rgba;

}
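As far as I can tell from the shader helper ApplyScreenTransform, an FScreenTransform is a per-axis scale and bias, so the SvPositionToInputTextureUV chain above is just two composed affine maps. This hypothetical C++ sketch reproduces the idea with made-up names (ScreenTransform, Rect, Apply) for an output view rect that is larger than the input viewport, as happens after upscaling.

```cpp
#include <cassert>

struct Float2 { float x; float y; };

// Hypothetical stand-in for FScreenTransform: p' = p * scale + bias (per component).
struct ScreenTransform { Float2 scale; Float2 bias; };

struct Rect { Float2 min; Float2 size; };

Float2 Apply(Float2 p, const ScreenTransform& t) {
    return {p.x * t.scale.x + t.bias.x, p.y * t.scale.y + t.bias.y};
}

// Composition "apply a, then b", analogous to chaining transforms with operator*.
ScreenTransform operator*(const ScreenTransform& a, const ScreenTransform& b) {
    return {{a.scale.x * b.scale.x, a.scale.y * b.scale.y},
            {a.bias.x * b.scale.x + b.bias.x, a.bias.y * b.scale.y + b.bias.y}};
}

// SvPosition (pixels) -> [0,1] UV within the output view rect.
ScreenTransform SvPositionToViewportUV(const Rect& viewRect) {
    assert(viewRect.size.x > 0 && viewRect.size.y > 0);
    return {{1.0f / viewRect.size.x, 1.0f / viewRect.size.y},
            {-viewRect.min.x / viewRect.size.x, -viewRect.min.y / viewRect.size.y}};
}

// UV within the input viewport -> UV of the whole input texture (its extent may be larger).
ScreenTransform ViewportUVToTextureUV(const Rect& inputViewport, Float2 textureExtent) {
    assert(textureExtent.x > 0 && textureExtent.y > 0);
    return {{inputViewport.size.x / textureExtent.x, inputViewport.size.y / textureExtent.y},
            {inputViewport.min.x / textureExtent.x, inputViewport.min.y / textureExtent.y}};
}
```

For example, with a 1000x500 output rect, an 800x400 input viewport starting at (100, 100) in a 1000x500 texture, the pixel at SvPosition (500, 250) samples texture UV (0.5, 0.6). Sampling with the raw SvPosition-derived viewport UV would read the wrong region whenever the two resolutions differ.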